This week we prepared for our interim demo. I worked mainly on isolating cars from the road for our crash detection system. The data we fed to the system last week was very noisy, so I further calibrated and post-processed the car masks so that we could pass cleaner data to the system.

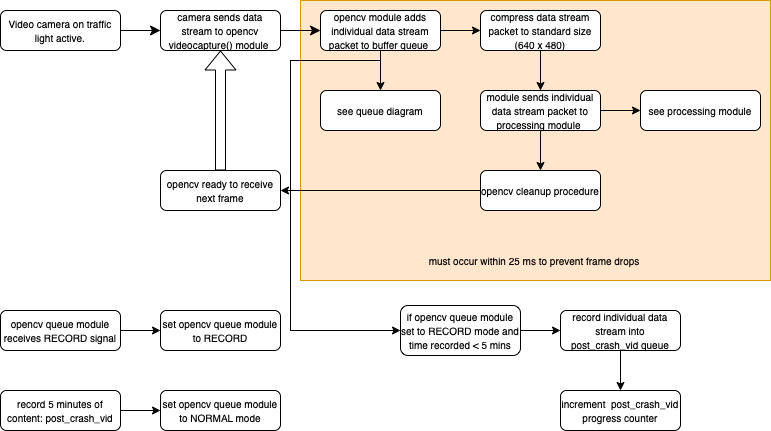

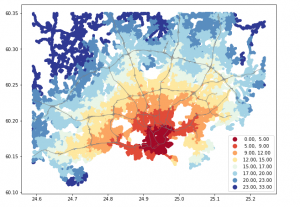

I used a combination of Gaussian blurs with custom kernels and further tuned the KNN background-removal algorithm. Last week I tried a MOG2 background-removal algorithm, which worked fairly well but still wasn't good enough, so this week we switched to a KNN algorithm, which produced much better results. In the end, much of the noise we saw previously has been reduced.

As we can see in the image above, most of the noise left over from background removal is gone. We now use these white “blobs” to create contours, and then send those contours to our object tracking algorithm to track the cars. The tracker is a separate component from the background removal and works on the raw RGB camera data.

This progress helped make our crash detection more accurate.