Smart Traffic Light Project Proposal

Hello, blog. For our capstone project, we (Jonathan, Goran, and Arvind) are implementing a smart traffic light system using computer vision. The problem we set out to solve concerns modern traffic intersections. Smart traffic cameras are increasingly used to monitor intersections and traffic, yet in our research we have not seen broad adoption of advanced technologies in these systems. For example, most intersections don’t record video because there is no widely adopted “system” to detect car accidents. We would like to implement a smart traffic camera system that can detect car accidents and act on this information.

Use Case:

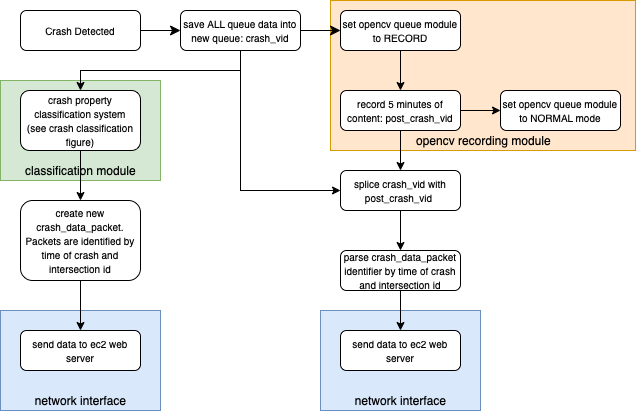

- Improve safety and traffic efficiency in traffic light intersections after a crash is detected

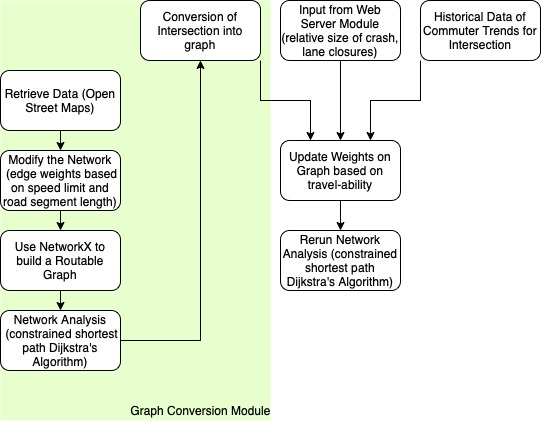

- Once a crash is detected: communicate with nearby traffic lights / road signs (depending on the road type and the severity of the crash) to reroute traffic

- Transmit a message to 911 / other governmental services through a web server

- Store video from buffer to a database
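The last item, saving buffered video once a crash is detected, could be sketched as a rolling buffer that always holds the most recent frames and, on a trigger, keeps collecting for a short time afterwards. This is only a minimal sketch: the class name `CrashRecorder`, the `pre_frames` / `post_frames` parameters, and the default of 150 frames are assumptions made for illustration, not part of our actual design.

```python
from collections import deque


class CrashRecorder:
    """Keeps the most recent frames and assembles a clip around a crash.

    Hypothetical helper for illustration; names and defaults are assumptions.
    """

    def __init__(self, pre_frames=150, post_frames=150):
        # Rolling window of the newest frames; older frames fall off the left.
        self.buffer = deque(maxlen=pre_frames)
        self.post_frames = post_frames
        self.remaining = 0   # post-crash frames still to collect
        self.clip = None     # clip currently being assembled, if any

    def push(self, frame, crash_detected=False):
        """Feed one frame; return a finished clip (list of frames) or None."""
        if self.clip is None:
            self.buffer.append(frame)
            if crash_detected:
                # Freeze the pre-crash context, including the trigger frame.
                self.clip = list(self.buffer)
                self.remaining = self.post_frames
        else:
            self.clip.append(frame)
            self.remaining -= 1
        if self.clip is not None and self.remaining == 0:
            finished, self.clip = self.clip, None
            self.buffer.clear()
            return finished
        return None
```

A finished clip could then be written to a video file (for example with OpenCV's `cv2.VideoWriter`) and the file's location stored in the database, though the storage step is left open here.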

Technical Challenges:

To implement this project, we need a lot of intersection training data. We have found quite a bit of readily available footage online, but there are some caveats. Our main feature, crash detection, is an “edge case”: most footage doesn’t include a crash. This means that we will have to implement much of the training ourselves, as there isn’t enough data to let the network train for crashes. Additionally, we cannot deploy this “in real life,” so we will have to augment some data and feed it into the system as if it were real-time camera data. Our system should also work in many natural conditions, such as rain, snow, sunset, sun glare, and night time; we won’t always have “sunny day” conditions. Finally, we will need a lot of compute power, which we can obtain through either AWS or Google Colab.
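As one small example of the kind of augmentation mentioned above, a "sunny day" frame can be darkened or brightened with gamma correction to roughly imitate dusk or glare. This is a minimal sketch under simplifying assumptions: frames are plain grayscale lists of rows, and the function names and gamma values are made up for illustration. Conditions such as rain or snow would need more than a brightness change.

```python
def adjust_gamma(frame, gamma):
    """Return a copy of a grayscale frame with gamma correction applied.

    frame: list of rows, each a list of ints in 0..255 (assumed format).
    gamma > 1 darkens the frame; gamma < 1 brightens it.
    """
    # Precompute the mapping for all 256 intensities once per call.
    table = [round(255 * (value / 255) ** gamma) for value in range(256)]
    return [[table[pixel] for pixel in row] for row in frame]


def augment(frame, gammas=(0.5, 1.0, 2.0)):
    """Produce one variant of the frame per gamma value (illustrative values)."""
    return [adjust_gamma(frame, g) for g in gammas]
```

Each original frame then yields several lighting variants, which stretches a small pool of crash footage a little further without collecting new video.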

Solution Approach:

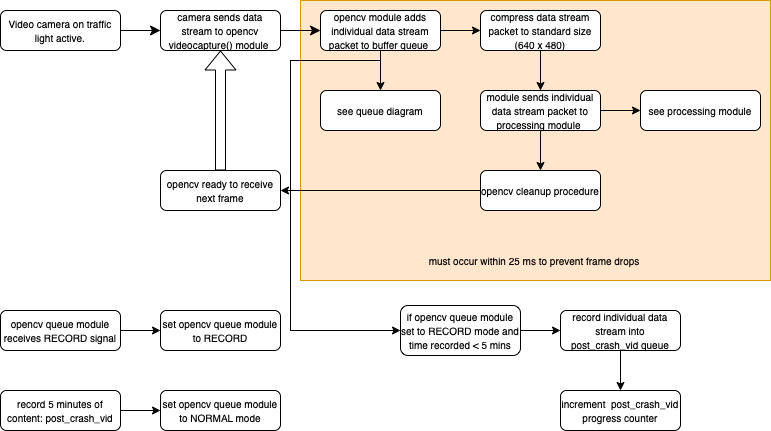

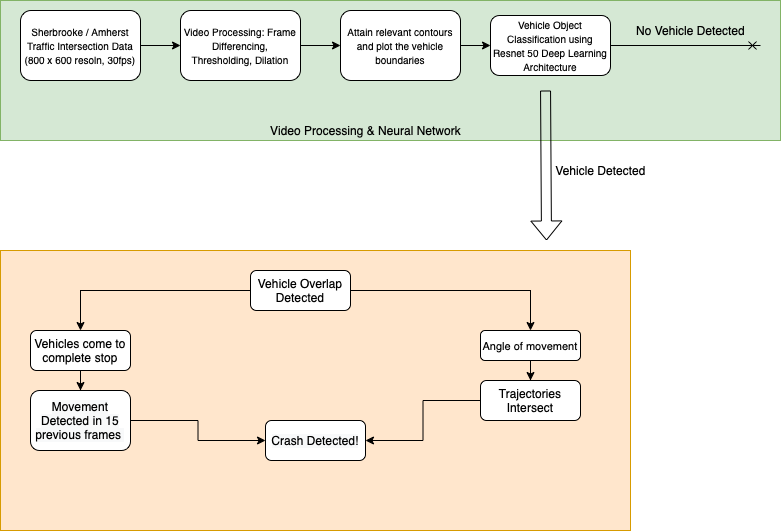

There are two major parts of the implementation: object detection (cars, pedestrians, cyclists, etc.) and collision detection. Object detection will be achieved through computer-vision-based deep learning. Collision detection can be achieved by mapping the objects detected by our object detection system and coding for cases that are indicative of a collision. Additionally, even though we are using pre-recorded data, we treat it as “live footage” through OpenCV. Thus we can implement a “buffer” feature where a detected collision triggers a video recording.
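One simple case that could be "indicative of a collision" is two detected objects whose bounding boxes overlap heavily in the same frame. The sketch below measures that overlap with intersection over union (IoU). It is only an illustration: the `(x1, y1, x2, y2)` box format, the function names, and the `0.3` threshold are assumptions, not values we have tuned.

```python
from itertools import combinations


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def collision_candidates(detections, threshold=0.3):
    """Return ID pairs whose boxes overlap by at least `threshold` IoU.

    detections: dict mapping an object ID to its box in the current frame
    (hypothetical output format of the object detection stage).
    """
    return [
        (id_a, id_b)
        for (id_a, box_a), (id_b, box_b) in combinations(detections.items(), 2)
        if iou(box_a, box_b) >= threshold
    ]
```

Overlap alone will also fire when one car merely passes in front of another from the camera's point of view, so a check like this would likely need to be combined with other cues, such as a sudden change in an object's speed or direction.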

Testing, Verification and Metrics:

Testing can be done by splitting up the data. We can use the majority of the data for training and hold out a small segment for testing. For object detection, we can use the many forms of computer vision video found online. For collision detection, our “pool of data” is smaller, but there still exist many intersection accident videos and even simulation videos designed for training. To recap, we are treating the footage as “live” camera information: the system won’t have access to “future” data.
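The split and the "live" constraint described above could look roughly like the following. This is a sketch under assumptions: splitting is done per clip rather than per frame (so frames from one video do not land in both sets), and `split_clips`, `run_live`, the 20% test fraction, and the `detect` callback are hypothetical names and values chosen for illustration.

```python
import random


def split_clips(clip_ids, test_fraction=0.2, seed=0):
    """Shuffle clip IDs reproducibly and split them into (train, test)."""
    ids = list(clip_ids)
    random.Random(seed).shuffle(ids)
    n_test = max(1, round(len(ids) * test_fraction))
    return ids[n_test:], ids[:n_test]


def run_live(frames, detect):
    """Feed frames to `detect` in order and return the first crash index.

    `detect` only ever receives the current frame, never later ones,
    which mimics a live camera feed. Returns None if nothing is flagged.
    """
    for index, frame in enumerate(frames):
        if detect(frame):
            return index
    return None
```

Comparing the index returned by `run_live` against the frame where the crash actually happens in a test clip would give one possible measure of how quickly the system reacts.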

References:

https://arxiv.org/pdf/1911.10037.pdf