Jonathan’s Status Post 04/30/2022

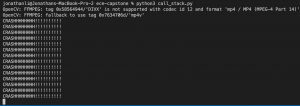

This week our group finished the last bits of testing on the whole system, and I spent time getting the web server to work. We also started working on the final presentation poster. We expanded our testing by increasing our footage count from 20 total clips to 36 total clips. While we would have liked to test on more data, it was surprisingly difficult to find. Traffic camera footage is easy to come by, but traffic camera footage containing car accidents is much rarer because those events are less common. Additionally, as we mentioned at the beginning of the project, many traffic cameras do not record video because they are not “smart” enough to detect crashes, which is one reason for this project to exist in the first place!

Additionally, I’ve worked on adding more “presentable” graphics for the final presentation and the TechSpark showcase. This included adding metrics to the bounding boxes so viewers can see what is happening, enlarging existing overlays such as the directional arrows, and adding other metrics in text form.

We are all very excited to showcase everything that we’ve done this past semester, and we are very grateful for Professor Kim’s guidance and our entrance into the TechSpark showcase!

Jonathan’s Status Report 04/23

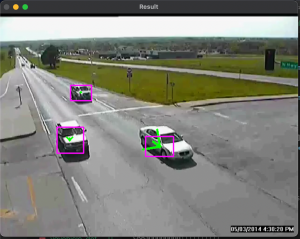

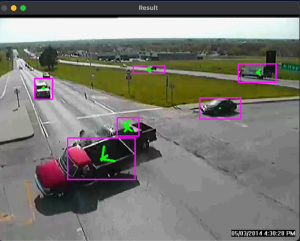

This week I worked with Arvind on further optimizing our crash detection and bounding box algorithms. We followed Professor Kim’s advice to focus on the crash detection part of the project. The first thing we did was add live drawings overlaid on the vehicles to better understand how our system was interpreting the environment. We added bounding boxes (not shown in the image), tracking objects (the purple/pink boxes shown below) and vector / center points (the green arrows shown below) to all of the cars we picked up.

After doing so, we were able to fix some minor bugs in how we track objects. At one point we realized we had a small bug in which we swapped the x and y values in a tracking step of our algorithm. The overlays also helped us notice that certain non-car objects were being tracked, which led to false positives in our crash detection.

I remedied this by recalibrating how we implement background detection. Essentially, we train a simple KNN algorithm that reads in frames to build a background mask. In some videos, cars would be stopped for a very long time and the algorithm would end up treating the car as part of the background. When the car finally moved, the road it had been covering would be tracked instead. To remedy this, we used more frames for the background mask and tried to use frames with no moving objects.
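The failure mode described above can be reproduced on toy data. The sketch below is not our KNN-based code: it swaps in a much simpler per-pixel median background model (an assumption made purely for illustration), and the function names and the `threshold` value are hypothetical. It shows how a short frame history dominated by a stopped car absorbs the car into the background, and how a longer history with more empty-road frames avoids that.

```python
import numpy as np

def median_background(frames):
    """Per-pixel median over a stack of grayscale frames (stand-in model)."""
    return np.median(np.stack(frames), axis=0)

def foreground_mask(frame, background, threshold=30):
    """Mark pixels that differ from the background by more than `threshold`."""
    diff = np.abs(frame.astype(np.int16) - background.astype(np.int16))
    return diff > threshold

# Synthetic 1x6 "road": intensity 50 everywhere, a car has intensity 200.
road = np.full((1, 6), 50, dtype=np.uint8)
stopped = road.copy()
stopped[0, 2] = 200  # car parked at column 2

# Short history dominated by the stopped car: the car becomes "background".
short_history = [stopped] * 4 + [road]
bg_short = median_background(short_history)

# Longer history that includes many empty-road frames.
long_history = [stopped] * 4 + [road] * 8
bg_long = median_background(long_history)

# Once the car drives away, the current frame is just the empty road.
car_gone = road
ghost_short = foreground_mask(car_gone, bg_short)  # road flagged where the car sat
ghost_long = foreground_mask(car_gone, bg_long)    # nothing flagged
```

With the short history, the empty road at column 2 is flagged as a moving object, which is the "road itself would be tracked" symptom. With the longer history, no pixel is flagged.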

Finally, we worked on further refining the crash detection modules. Here is a set of screenshots from our example video that we have been using in our status posts.

Jonathan’s Status Report 04/16

This week I worked with Arvind on acting on the input given to us during our interim demo and our professor meeting the week after. Arvind and I expanded our pool of crash videos and ended up with ~20 video clips of unique crash situations on which we tested our crash detection algorithm. We were able to test all of these video clips with the algorithm and the results were mostly accurate. Some of the issues we noticed (as noted in previous weeks) were A) large bounding boxes due to shadows, B) multiple vehicles being bounded by one box, and C) poor / irregular bounding due to contour detection.

Professor Kim gave us the idea to use more “non detailed” methods and to focus on the crash detection part of the project rather than the vehicle detection part. We are almost at a point where we can put these ideas into practice. This week we spent a lot of time removing “erratic” behavior from the vehicle bounding boxes to support a larger focus on crash detection. We achieved this by using a center point to track vehicles.

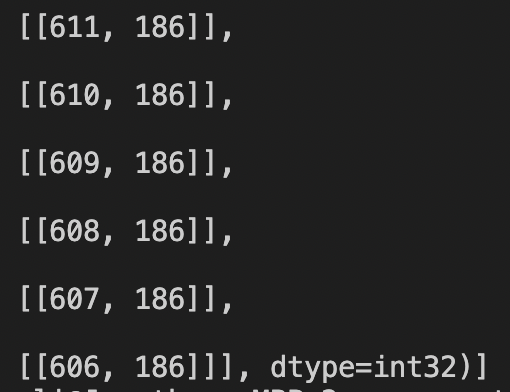

We used the numpy library, which can take an image mask (the vehicle contours create an image mask of the vehicle) and calculate the average midpoint of the mask. With this image mask, we narrowed our focus to reducing noise / erratic behavior in the center points of vehicles. Primarily, we further tested and fully implemented last week’s shadow detection algorithm on other vehicles. With better center tracking, the crash detection suite can focus more on location tracking and similar tasks, because the underlying data is more dependable.
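A minimal sketch of computing a center point from a vehicle mask with numpy follows. The function name `mask_centroid` and the toy mask are assumptions for illustration, not our project code. Note that `np.nonzero` returns row (y) indices before column (x) indices, which is an easy place to swap coordinates.

```python
import numpy as np

def mask_centroid(mask):
    """Return the (x, y) mean position of all nonzero mask pixels, or None."""
    ys, xs = np.nonzero(mask)  # rows (y) come first, then columns (x)
    if xs.size == 0:
        return None            # empty mask: no vehicle pixels to average
    return float(xs.mean()), float(ys.mean())

# Toy mask: a 2-row by 3-column "vehicle" blob on a 6x8 frame.
mask = np.zeros((6, 8), dtype=np.uint8)
mask[2:4, 3:6] = 255           # rows 2-3, columns 3-5

center = mask_centroid(mask)   # (4.0, 2.5)
```

Because every mask pixel contributes to the average, a few stray pixels on the blob's edge move the center far less than they would move a bounding box corner.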

As of now, the current algorithm is good enough for our MVP. We are always looking to improve on the current algorithms / design, which is why we are exploring these newer techniques.

Jonathan’s Status Report – Apr 09 2022

This week I worked on further editing the precision of vehicle detection. Our tracking system works pretty well but precision of vehicle detection is still a bit of an issue.

Here are some of the edge cases I worked on:

1) Stacked Vehicles

The issue in this case is that stacked vehicles prevent the vehicle in the rear from being picked up until much later, when the cars separate.

This is the mask after background subtraction. Clearly, our system cannot discern that these are two separate vehicles, as it mainly uses background subtraction to detect objects on the road.

Solutions I explored for this include using the different colors of the vehicles to temporarily discern that two separate objects exist.

2) Shadows

Harsh shadows create artificially large bounding boxes because the shadow covers part of the “background,” as seen in this image of the truck. The problem is worse in videos captured during hours when shadows are especially harsh.

Solutions to harsh shadows include utilizing a “darkened” background subtraction on top of the current background subtraction. This is still a work in progress but essentially we can darken the background and further mask shadows out using the darkened background. Additionally, in this image it is shown that the shadow can be seen “outside of the image of the road.” We are currently working on being able to “mask out” non road pixels when determining contours.
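The two ideas above (a “darkened” background comparison and masking out non-road pixels) can be sketched with numpy on toy data. This is an illustration under stated assumptions, not our work-in-progress implementation: the function name and the `diff_thresh`, `shadow_gain`, and `shadow_tol` parameters are hypothetical, and treating a shadow as "the background scaled darker by a fixed factor" is a simplification.

```python
import numpy as np

def vehicle_mask(frame, background, road_mask,
                 diff_thresh=30, shadow_gain=0.6, shadow_tol=15):
    """Foreground pixels that are on the road and do not look like shadow."""
    frame = frame.astype(np.float32)
    background = background.astype(np.float32)
    # Ordinary background subtraction: anything that changed noticeably.
    changed = np.abs(frame - background) > diff_thresh
    # Assumption: a shadowed pixel is close to a darkened copy of the background.
    shadow = np.abs(frame - shadow_gain * background) <= shadow_tol
    return changed & ~shadow & road_mask

# Toy 1x5 scene with a uniform background of intensity 100.
background = np.full((1, 5), 100, dtype=np.uint8)
frame = np.array([[100, 60, 220, 100, 220]], dtype=np.uint8)
#                  road shadow car  road  off-road object
road_mask = np.array([[True, True, True, True, False]])

result = vehicle_mask(frame, background, road_mask)
```

Only the car pixel survives: the shadow pixel matches the darkened background and is dropped, and the bright object outside the road is removed by `road_mask`. One known limitation of this kind of rule is that a genuinely dark vehicle can resemble a shadow.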

Team Status Update 4/02/2022

This week we focused on refining our interim demo based on the feedback we got last week. Since Professor Kim was not present in person last week, we will be presenting to him virtually.

Jon and Arvind have been working on improving the bounding box algorithms that track the moving objects in the video frames for crash detection. This algorithm works a lot better than the contour-based one we showed at the previous meeting. It works by removing the background, choosing as the background frame one from the video feed with no (or few) objects other than those always present in the background. After removing this background, we get outlines of all objects that were not present in it, and we can track them using optical flow packages.

Goran has been working on the rerouting aspects of the project. The rerouting can now work interactively with the crash detection. Whenever the rerouting module reads a 1 in a text file, it begins to reroute traffic based on the site and severity of the crash. We are currently using the traffic lights and traffic data on 5th Avenue in Pittsburgh as our model.
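The text-file handoff between crash detection and rerouting can be sketched as follows. The file name `crash_flag.txt` and the function name `crash_flag_set` are hypothetical, and the sketch only covers reading the flag, not how the crash site or severity is passed along.

```python
from pathlib import Path
import tempfile

def crash_flag_set(flag_path):
    """Return True when the flag file exists and contains a 1."""
    path = Path(flag_path)
    if not path.exists():
        return False
    return path.read_text().strip() == "1"

# Demo in a temporary directory so no real project files are touched.
with tempfile.TemporaryDirectory() as tmp:
    flag = Path(tmp) / "crash_flag.txt"  # hypothetical file name
    before = crash_flag_set(flag)        # no file yet, so no crash reported
    flag.write_text("1\n")               # crash detection writes the flag
    after = crash_flag_set(flag)         # rerouting would now start
```

A rerouting loop could call a check like this periodically and start rerouting the first time it returns True.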

Overall, the project is on schedule and we don’t expect any major changes to our Gantt chart.

Jonathan’s Status Report – 4/02/22

This week we prepared for our interim demo. I worked mainly on isolating cars from the road for our crash detection system. Essentially the data we were feeding to our system last week had lots of noise present. I worked on further calibrating / post processing the car masks so that we could pass better data to the system.

I used a combination of Gaussian blurs with custom kernels and further tuned the KNN background removal algorithm. Last week I tried a MOG2 background removal algorithm, which worked pretty well but still wasn’t good enough, so this week we switched to a KNN algorithm, which produced much better results. In the end, much of the noise seen previously has been reduced:

As we can see in the image above, much of the noise from background removal has been removed. We now use these white “blobs” to create contours and then send them to our object tracking algorithm, which is separate from this step and uses the raw RGB camera data, to track the cars.

This progress was helpful in gaining more accurate crash detection.

Jonathan’s Status Report – 3/26/2022

This week I worked on further honing the object detection and tracking schema.

I utilized the MOG2 background subtractor algorithm, which dynamically subtracts background layers to mask out moving vehicles. Shown below is a screenshot of this in practice. MOG2 works very well for our raw footage because the traffic light camera footage is from a fixed POV and the background is generally not changing. Additionally, we plan on using the masked image below to detect motion and then using the colored image (as it contains more data) to create the bounding boxes.

I have also made changes to how we detect / track our bounding boxes. This algorithm shown above will detect the vehicles but I am currently working on implementing a CSRT algorithm for tracking vehicles and using the colored image.

Jonathan’s Status Report – 3/19/2022

This week I worked more on using the contour map Arvind created to detect crashes. Currently I am using the contour map to draw rectangles around vehicles and map them on a plane to identify relative velocity / relative direction.
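One way to get relative velocity and direction from per-frame rectangles is sketched below. It assumes boxes are `(x, y, w, h)` tuples in pixel coordinates and that velocity is measured in pixels per frame. All function names here are hypothetical and this is not our detection code.

```python
import math

def box_center(box):
    """Center of an (x, y, w, h) rectangle."""
    x, y, w, h = box
    return (x + w / 2.0, y + h / 2.0)

def velocity(prev_box, curr_box, dt=1.0):
    """Center displacement per unit time between two frames."""
    (px, py), (cx, cy) = box_center(prev_box), box_center(curr_box)
    return ((cx - px) / dt, (cy - py) / dt)

def relative_motion(v_a, v_b):
    """Speed and heading (degrees) of vehicle A as seen from vehicle B."""
    rvx, rvy = v_a[0] - v_b[0], v_a[1] - v_b[1]
    return math.hypot(rvx, rvy), math.degrees(math.atan2(rvy, rvx))

# Car A moves 3 px right per frame; car B moves 4 px down per frame.
v_a = velocity((0, 0, 10, 10), (3, 0, 10, 10))
v_b = velocity((50, 0, 10, 10), (50, 4, 10, 10))
speed, heading = relative_motion(v_a, v_b)
```

In image coordinates y grows downward, so a negative heading here means A is moving up the frame relative to B.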

The current contour map we are using is essentially a pickle file where each index represents one frame. Within each index, there are multiple arrays. Each array holds contour edges which, combined, create the object contour as shown below:

Notably, during this integration step we have been running into issues with our current algorithm for detecting moving vehicles, which has stalled progress on my side. For example, when multiple vehicles are in close proximity, their contours overlap and become one. This became an issue when we tried testing our collision algorithm.

We plan to address this by exploring other algorithms for object tracking. We will still utilize the motion detection algorithm that we have established.
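The per-frame contour layout described in this report, and the step of drawing a rectangle around each contour, can be sketched as follows. This is a simplified stand-in: points are stored as plain `(x, y)` tuples rather than the arrays in our actual pickle file, the sample coordinates are made up, and `bounding_rect` is a hypothetical helper that returns `(x_min, y_min, x_max, y_max)`.

```python
import pickle

def bounding_rect(contour):
    """Axis-aligned rectangle (x_min, y_min, x_max, y_max) around a contour."""
    xs = [x for x, _ in contour]
    ys = [y for _, y in contour]
    return (min(xs), min(ys), max(xs), max(ys))

# contour_map[frame_index] is a list of contours; each contour is a list of
# edge points. Frame 0 has one vehicle, frame 1 has two.
contour_map = [
    [[(10, 10), (20, 10), (20, 18), (10, 18)]],
    [[(12, 10), (22, 10), (22, 18), (12, 18)],
     [(40, 5), (48, 5), (48, 9), (40, 9)]],
]

# Round-trip through pickle in memory, standing in for the file on disk.
loaded = pickle.loads(pickle.dumps(contour_map))

# One rectangle per contour, grouped by frame.
rects_per_frame = [[bounding_rect(c) for c in frame] for frame in loaded]
```

When two vehicles' contours merge into one, a structure like this yields a single rectangle covering both, which is the close-proximity issue described above.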

Jonathan’s Status Report – 2/27/2022

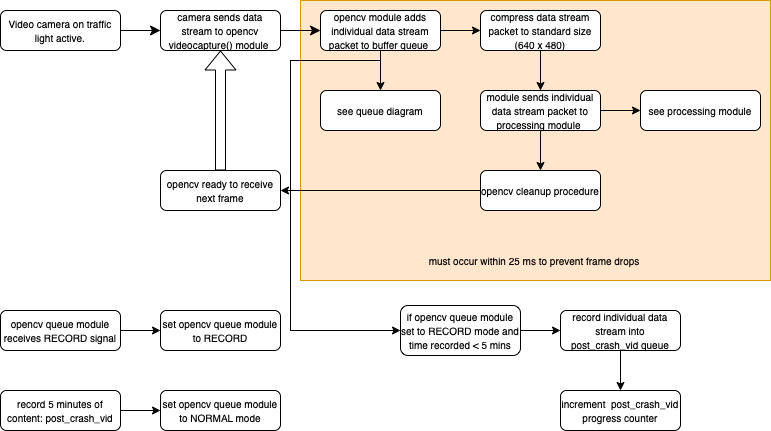

Finished Recording Stream.

We are now using Python’s “threads” abstraction to run all of our modules concurrently.

I have finished implementing the signal-related actions, such as setting the OpenCV queue module to RECORD when the signal is received. We copy our queue and then create a new video file in a separate thread, which runs concurrently with our recording module. Then, whenever we process a normal frame, we add it to the child thread that records the video.
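The recording flow above can be sketched with the standard library alone. This is an illustration under assumptions, not our module: the `Recorder` class and its method names are hypothetical, frames are plain integers, and the worker appends to a list where the real code would write to a video file.

```python
import queue
import threading
from collections import deque

class Recorder:
    def __init__(self, buffer_size=5):
        self.buffer = deque(maxlen=buffer_size)  # rolling queue of recent frames
        self.pending = None                      # frames handed to the worker
        self.worker = None
        self.saved = []                          # stand-in for the video file

    def _write(self, backlog, pending):
        """Worker thread: write the copied queue, then frames as they arrive."""
        for frame in backlog:
            self.saved.append(frame)
        while True:
            frame = pending.get()
            if frame is None:                    # sentinel: stop recording
                break
            self.saved.append(frame)

    def on_frame(self, frame):
        """Called for every normal frame by the main recording loop."""
        self.buffer.append(frame)
        if self.pending is not None:
            self.pending.put(frame)              # forward to the child thread

    def start_recording(self):
        """Signal handler: copy the queue and start the writer thread."""
        backlog = list(self.buffer)
        self.pending = queue.Queue()
        self.worker = threading.Thread(target=self._write,
                                       args=(backlog, self.pending))
        self.worker.start()

    def stop_recording(self):
        self.pending.put(None)
        self.worker.join()
        self.pending = None

recorder = Recorder(buffer_size=3)
for frame in range(5):        # frames 0-4; the buffer keeps only 2, 3, 4
    recorder.on_frame(frame)
recorder.start_recording()    # the RECORD signal arrives
for frame in range(5, 7):     # frames 5 and 6 go to the child thread
    recorder.on_frame(frame)
recorder.stop_recording()
```

Copying the queue before starting the thread means the saved clip includes the frames leading up to the signal, while the main loop keeps processing new frames without waiting on the writer.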