This week I worked on finalizing and fine-tuning the crash detection algorithm. The crash detection module works by deciding how similar the angles of two incoming cars are, as well as how quickly their speed is changing (acceleration). Once the bounding boxes of two cars intersect, we say a crash is likely if the cars are travelling in perpendicular directions (so converging on each other) and if there is a rapid change in speed. Different parameters lead to different success rates in different situations. For example, if we prioritize the angle too much, we will not detect crashes well when the cars are travelling in parallel, such as fender benders.
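As a rough illustration of the check described above, here is a minimal sketch. It is not our actual module: the `Track` class, the function names, and every threshold value are assumptions made up for this example, and velocities are assumed to be in pixels per frame.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical per-car state; not the structure used in our module."""
    box: tuple[float, float, float, float]  # (x1, y1, x2, y2)
    velocity: tuple[float, float]           # current (vx, vy), pixels/frame
    prev_velocity: tuple[float, float]      # velocity one frame earlier


def boxes_intersect(a, b):
    """True if two axis-aligned boxes overlap."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    return ax1 < bx2 and bx1 < ax2 and ay1 < by2 and by1 < ay2


def heading_angle_deg(v1, v2):
    """Angle between two velocity vectors in degrees, in [0, 180]."""
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return 0.0  # a stopped car has no heading; treat as parallel
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def speed_change(track):
    """Absolute change in speed between the last two frames."""
    return abs(math.hypot(*track.velocity) - math.hypot(*track.prev_velocity))


def crash_likely(a, b, min_angle=60.0, max_angle=120.0, accel_threshold=2.0):
    """Flag a likely crash: boxes overlap, headings are roughly
    perpendicular, and at least one car's speed changed rapidly.
    All three thresholds are placeholder values, not tuned ones."""
    if not boxes_intersect(a.box, b.box):
        return False
    angle = heading_angle_deg(a.velocity, b.velocity)
    roughly_perpendicular = min_angle <= angle <= max_angle
    rapid_change = max(speed_change(a), speed_change(b)) >= accel_threshold
    return roughly_perpendicular and rapid_change
```

Widening the `min_angle`/`max_angle` window or lowering `accel_threshold` is the kind of parameter change that trades success on one crash type for another: a strict angle window rejects parallel cases like fender benders.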

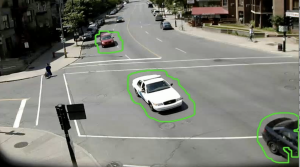

First, in our testing with 20 videos of crashes, we always detect the basic head-on intersection crash (100% success rate). This is a crash such as the one shown in the image above.

However, we are having variable success rates with other types of crashes. For example, one of the videos shows a rear-end crash where the cars are not rapidly decelerating: they are slowly coming to a stop at a red light, but then one of the cars goes too far and hits the car in front of it. Both cars are travelling in parallel directions, and there is no abnormal change in direction or speed. One potential fix is to add a test for abnormal movement (or lack thereof). After a crash, cars don't move, so if all the other cars we are tracking are moving while these two cars stay still for an abnormally long period of time, that might be a way to detect it. Overall, however, we are very happy with the 100% success rate on the typical case, and we can continue to refine parameters and develop other strategies for the edge cases.
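The stationary-car idea above could be sketched roughly as follows. This is only an assumption about how such a test might look, not something we have implemented: the function names, the per-frame speed lists, and the default thresholds (`still_threshold`, `min_still_frames`) are all hypothetical.

```python
def is_stationary(speeds, still_threshold, min_still_frames):
    """True if the most recent `min_still_frames` speeds are all
    below `still_threshold`."""
    if len(speeds) < min_still_frames:
        return False  # not enough history to call the car stopped
    return all(s < still_threshold for s in speeds[-min_still_frames:])


def is_moving(speeds, still_threshold, min_still_frames):
    """True if the car exceeded the threshold at least once in the
    recent window. An empty history counts as not moving."""
    return any(s >= still_threshold for s in speeds[-min_still_frames:])


def abnormal_stop(pair_speeds, other_speeds,
                  still_threshold=0.5, min_still_frames=90):
    """Flag two overlapping cars that stay still while every other
    tracked car keeps moving.

    pair_speeds:  two lists of per-frame speeds, one per car in the pair
    other_speeds: one list of per-frame speeds per other tracked car
    """
    pair_still = all(
        is_stationary(s, still_threshold, min_still_frames)
        for s in pair_speeds
    )
    if not pair_still or not other_speeds:
        return False  # nothing to compare against, so do not flag
    return all(
        is_moving(s, still_threshold, min_still_frames)
        for s in other_speeds
    )
```

Requiring the other cars to be moving is what would keep this from firing on ordinary traffic stopped at a red light, where everyone is still. The cost is that it cannot flag anything when the pair are the only cars in view.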

Finally, I am working on the slides and making sure we are good to go for our presentation next week. (I am not presenting, so I am just working on the slides and with the group member who is presenting to make sure he is knowledgeable about the work I've done.)

I think we are on track in terms of schedule. By next week I hope to have a completely finalized crash detection algorithm, and to report how successful it is at detecting different types of crashes with more video data (we are currently testing on 20 clips and want to test on more). We would also like to finalize what we are showing for the demo.