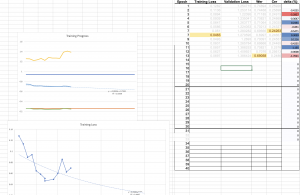

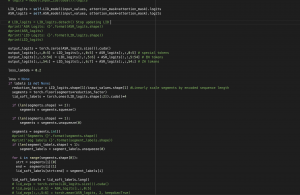

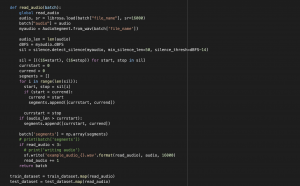

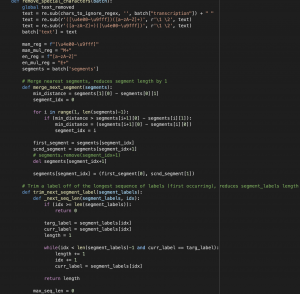

This week I pushed model accuracy as far as I could by adopting new training techniques and by combining the LID and ASR modules to replicate, almost exactly, the approach in the primary paper we’ve been using for guidance. The model completed over 40 epochs of training this week across two or three different configurations. The biggest and most successful change was a segmentation process I devised to create soft labels for the current language. The audio is first segmented by volume to produce candidate segments corresponding to words or groups of words. I then perform a two-way reduction between each list of segments and the language labels computed from the label string. This yields a one-to-one match between language labels and segments, which makes it possible to apply a cross-entropy loss to the LID model. Using this technique, I achieved a 20% improvement in WER on our hardest evaluation set, bringing our best WER on that set down to 69%. Training will continue until the final demo, since we can easily swap in a new version of the model from the cloud each time a new best is achieved. We will also focus heavily on finishing our final presentation for Monday.
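To make the segmentation and matching idea concrete, here is a minimal sketch in plain Python. It is an illustration under several assumptions, not our actual training code: the function names, the fixed energy threshold, the rule of merging the two segments with the smallest gap, and the rule of merging neighbouring labels that share a language are all hypothetical choices. Merging labels by summing their counts is one possible way to arrive at soft labels.

```python
import math
from typing import List, Tuple

Segment = Tuple[int, int]  # [start, end) frame indices


def segment_by_volume(energy: List[float], threshold: float) -> List[Segment]:
    """Return runs of consecutive frames whose energy is >= threshold."""
    segments, start = [], None
    for i, e in enumerate(energy):
        if e >= threshold and start is None:
            start = i
        elif e < threshold and start is not None:
            segments.append((start, i))
            start = None
    if start is not None:
        segments.append((start, len(energy)))
    return segments


def reduce_segments(segments: List[Segment], target: int) -> List[Segment]:
    """Merge the two neighbours with the smallest gap until `target` remain (assumed rule)."""
    segs = list(segments)
    while len(segs) > target:
        gaps = [segs[i + 1][0] - segs[i][1] for i in range(len(segs) - 1)]
        i = gaps.index(min(gaps))
        segs[i:i + 2] = [(segs[i][0], segs[i + 1][1])]
    return segs


def reduce_labels(counts: List[List[float]], target: int) -> List[List[float]]:
    """Merge neighbouring label vectors by summing counts until `target` remain.

    Neighbours that agree on the dominant language are merged first (assumed rule);
    if none agree, the first pair is merged, which produces a mixed (soft) label.
    """
    labs = [list(c) for c in counts]
    while len(labs) > target:
        dominant = [v.index(max(v)) for v in labs]
        i = next((k for k in range(len(labs) - 1) if dominant[k] == dominant[k + 1]), 0)
        labs[i:i + 2] = [[x + y for x, y in zip(labs[i], labs[i + 1])]]
    return labs


def align(energy: List[float], word_langs: List[int], num_langs: int,
          threshold: float) -> List[Tuple[Segment, List[float]]]:
    """Reduce both lists to a common length and pair them one-to-one."""
    segments = segment_by_volume(energy, threshold)
    one_hot = [[1.0 if k == lang else 0.0 for k in range(num_langs)]
               for lang in word_langs]
    n = min(len(segments), len(one_hot))
    if n == 0:
        return []
    segments = reduce_segments(segments, n)
    labels = reduce_labels(one_hot, n)
    soft = [[c / sum(v) for c in v] for v in labels]  # normalise counts to probabilities
    return list(zip(segments, soft))


def cross_entropy(target: List[float], predicted: List[float], eps: float = 1e-12) -> float:
    """Cross-entropy between a soft target and a predicted distribution."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, predicted))
```

For example, calling `align(energy, word_langs=[0, 1], num_langs=2, threshold=0.5)` on an utterance with three loud regions would merge the two closest regions and pair each of the two remaining segments with one language label. The per-segment soft label can then be compared against the LID model's output for the frames in that segment with `cross_entropy`.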

Carnegie Mellon ECE Capstone, Spring 2022; Nick Toldalagi, Tom Chen, Marco Yu