Very little was done this week, since it was finals week and we had already completed most of the work needed for the live demo. We mostly worked on the presentation poster and the video. We expect next week to be much the same, apart from some very minor HTML tweaks and possible CV optimization.

Team Status Report for Apr 23

This week has mostly been spent preparing for our presentation and finalizing our integration and deployment. Highlights include:

- Launching the website (see Harry’s report)

- Some issues with instance size due to RAM limits and modules setup

- Ajax is working (see Jay’s report)

- Still some hard-to-isolate bugs

- Testing is almost done (see Keaton’s report)

- Initial results look pretty good, but our misidentification rate is FAR higher than what we need for our use case requirements

- All other requirements seem like they’ll be fine (right now)

- Various CSS/HTML fixes/improvements

Overall, we’re mostly happy with where we are for the presentation. We’re fully integrated and deployed, minus a few minor bugs that crop up here and there. Honestly, I would say that we’re pretty much ready to perform the live demo, if it weren’t for the issue of getting our results up to scratch to meet our use case requirements. While this is obviously an issue, I don’t think it will be insurmountable. We can probably implement a few additional item-specific secondary checks, and hopefully just barely squeak by.

Team Status Report 4/16

This week has mostly been integration week, which has been overall successful. Highlights include:

- Full integration of web app with hardware device

- Fully working communication between RPI and web app

- Support for users identifying images and adding new iconic classes, based on cropped images sent from the RPI, plus support for arbitrary categories

- Slight improvements on the CV side

- Can now take a list of expected items from the web app and restrict matching to only those items to improve accuracy (used when an item is removed)

- Now accepts arbitrary additional iconic images to be compatible with user registration on Web app side

- Web app/CV now supports custom registered items (fully integrated with previous debugging-stage custom items): users can now identify their custom items using the viewfinder on the webapp.

- Several UI/CSS enhancements
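The expected-items filter mentioned above can be sketched roughly as follows. This is a minimal illustration rather than our actual code: the function name, the `min_matches` threshold, and the sample match counts are all hypothetical, and it assumes the CV side already produces a count of feature matches per iconic class.

```python
def best_expected_match(match_counts, expected_items, min_matches=10):
    """Return the expected item with the most feature matches, or None.

    match_counts: dict mapping item label -> number of feature matches
    expected_items: labels the web app says are currently in the cabinet
    min_matches: hypothetical cutoff below which a match is ignored
    """
    # Keep only labels the web app expects, and only if they match well enough.
    candidates = {
        label: count
        for label, count in match_counts.items()
        if label in expected_items and count >= min_matches
    }
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


# Made-up counts: without the filter, "canned tomatoes" would win.
match_counts = {"canned beans": 42, "canned tomatoes": 45, "milk": 3}
expected = {"canned beans", "milk"}
print(best_expected_match(match_counts, expected))
```

Restricting the candidates this way only helps when the web app's inventory is accurate, so it is best suited to item removal, where the removed item must be one already registered.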

Overall, I think we’re in a pretty good position. We have almost everything essential for the project finished, and we have about a week left to perform the needed testing and create the slideshow. The main concern is finalizing the AJAX for the current recipe listings, and possibly needing to eke out some extra performance on the CV side to meet our criteria. To that end, we’ve decided to shuffle around some responsibilities so that Jay can focus on AJAX (see Jay’s report). There are also some remaining less essential issues, including RPI online/offline indicators, CSS, and website UI enhancements.

Team Status Report 4/10

Summary: last Tuesday was the demo, and the past few days were Carnival.

Demo summary

- Good: working CV, functional webapp, Keaton’s CSS contributions

- Bad: mis-ID’ed a can of tomatoes as beans (or vice-versa), webapp needs lots of fine-tuning

Harry has begun implementing the API from the RPi to the webapp. Jay continues to make progress with the webapp. Keaton continues to beautify the website, and is investigating the cause of the mis-ID.

Team Status Report 4/2

This week has mostly been cleaning up stuff and adding some polish in preparation for the demo. Some highlights include:

- Adding recipes/grocery list functionality

- Finalizing backend user model registration (see Jay’s post)

- CSS additions/improvements

- Dealing with hardware crisis (see Keaton’s post)

- Updated Gantt chart

Overall, we have almost everything we need for the demo, but we’ll probably need to put in some work on Sunday/Monday to ensure everything is polished and in a presentable state. On the updated Gantt chart, we’ve fairly heavily eaten into our flex time, but we may be able to catch up during Carnival weekend. We’re about where we should be in terms of progress, but the lack of flex time going into the last two integration weeks is definitely concerning.

Team Status Report 3/26

While we’ve all been swamped with midterms and academic fatigue, work on the group project continues apace. Harry created a working version of OAuth2 (more on his blog). Jay is continuing to work on the wireframing, and will incorporate CSS shortly. Keaton is taking a much-needed respite from CV, and is looking into hardware design considerations.

We hope to see some progress on the database component of the website’s backend this week.

Team Status Report for 3/19

Made some progress with CV component:

- Pixel diff is working sufficiently well (we may still need a fixed light source if we operate under varying lighting conditions)

- Tested directly under the camera, and off to the side with anti-distortion of the image

- Overall fine: a few standout items were confused with each other, but nothing that can’t be handled on a case-by-case basis (applesauce in particular was a bother)

- Have a confusion matrix based on the number of matches we saw for each situation (See Keaton’s report)
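As a rough illustration of how a match-count confusion matrix like this can be built and inspected, here is a small sketch. The function names, the item labels, and every number in it are placeholders, not our measured results (those are in Keaton’s report).

```python
from collections import defaultdict


def build_match_matrix(observations):
    """Build matrix[true_item][iconic_label] = total feature matches.

    observations: iterable of (true_item, iconic_label, matches) tuples.
    """
    matrix = defaultdict(dict)
    for true_item, iconic_label, matches in observations:
        row = matrix[true_item]
        row[iconic_label] = row.get(iconic_label, 0) + matches
    return {item: dict(row) for item, row in matrix.items()}


def most_confused(matrix):
    """Return (true_item, other_label, matches) for the largest off-diagonal entry."""
    worst = None
    for true_item, row in matrix.items():
        for label, matches in row.items():
            if label != true_item and (worst is None or matches > worst[2]):
                worst = (true_item, label, matches)
    return worst


# Placeholder data only: item_a photographed, matched against both iconic images, etc.
observations = [
    ("item_a", "item_a", 40),
    ("item_a", "item_b", 35),
    ("item_b", "item_a", 8),
    ("item_b", "item_b", 52),
]
matrix = build_match_matrix(observations)
print(most_confused(matrix))
```

Large off-diagonal entries point at the item pairs that would need case-by-case handling.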

Harry got OAuth working for various services. Jay continued bringing the wireframes to life, though he acknowledges progress is slower than he’d like.

Team Status Report March 12

This week was fairly uneventful, as it was the week of Spring break, and the week prior we were primarily focused on finishing the design document. During the current week, some very minor prototyping and testing was done with the fisheye lens camera in bird’s-eye position. The localization/pixel diffing worked pretty well (with one caveat), but the results when running SIFT on the images were pretty bad, so we will likely need to iterate as a group (see Keaton’s status update).

Team Status Report Feb 26

This week we have been working on the design report and unfortunately we weren’t able to make much progress on the project itself. We will continue working on the design report and try to build the web-app prototype. We are still waiting for our parts to come in and hopefully we can get more done during the spring break when most of our midterms are over.

Team Status Report for Feb 19

This week has mostly been finalizing the details of our project in preparation for the design presentation/documentation. At this point, we feel fairly confident in the status of our project, and our ability to execute it.

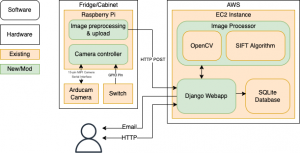

Major design decisions:

- Cut back on the scope of the project/increased the burden placed on the user.

- Scaled back the scope of the website

- Committed to SQLite

- Reorganized Gantt chart

- Finalized overall design

1) On the advice of Prof. Savvides and Funmbi, we decided to decrease the scope of this project by imposing the following requirements on the user:

- The objects must be stored such that the label is visible to the camera

Originally, it was nearly impossible to identify an item using ORB/SIFT/BRIEF unless the label was facing the camera. We initially tried to resolve this by switching to some form of R-CNN/YOLO with a dataset which we would manually annotate. However, generating enough data to make it viable was too much work, and there was no guarantee it would resolve the issue. As such, we are adding this requirement to the project, to make it viable in the given time frame.

- The user can only remove/add one item at a time

This is a requirement which we impose in order to handle unsupported items/allow registration of new items. When the user adds a new item which we do not support, we can perform a pixel diff between the new image of the cabinet and the previous image before the user added the unknown item. We are not currently working on this, but it will become important in the future.

- Finalized the algorithm (SIFT) and finalized the supported grocery list

Baseline SIFT performed the best in our previous experiments, and continued to perform better this week when we were adjusting the grocery list. We may experiment with manually changing some of the default parameters while optimizing, but we expect to use SIFT moving forward. In basic eye tests with various common items, we found the following items to perform well under the label visibility restriction: Milk, Eggs, Yogurt, Cheese, Cereal, Canned Beans, Pasta, and Ritz crackers.
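The one-item-at-a-time requirement above relies on a pixel diff between the previous and the new image of the cabinet. A minimal sketch of that idea is below, written in plain Python on grayscale images stored as nested lists so that it runs without dependencies; a real implementation would more likely use OpenCV or NumPy arrays. The function name and the threshold of 30 are hypothetical.

```python
def changed_region(before, after, threshold=30):
    """Return the (top, left, bottom, right) bounding box of changed pixels.

    before, after: equally sized grayscale images as lists of rows (0-255).
    threshold: hypothetical per-pixel difference needed to count as a change.
    Returns None if no pixel differs by more than the threshold.
    """
    rows, cols = [], []
    for y, (row_before, row_after) in enumerate(zip(before, after)):
        for x, (p, q) in enumerate(zip(row_before, row_after)):
            if abs(p - q) > threshold:
                rows.append(y)
                cols.append(x)
    if not rows:
        return None
    return min(rows), min(cols), max(rows), max(cols)


# Toy 4x4 example: an "item" appears in the middle of an empty shelf.
before = [[0] * 4 for _ in range(4)]
after = [row[:] for row in before]
for y in (1, 2):
    for x in (1, 2):
        after[y][x] = 200

print(changed_region(before, after))  # (1, 1, 2, 2)
```

If only one item changes at a time, the bounding box isolates that item, and the cropped region can then be matched against the iconic images or sent to the user for registration.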

2) Additionally, with input from both Prof. Savvides and Funmbi, we’ve significantly cut back on the stretch goals we had for the website. Given that this project is meant more as a proof of concept of the computer vision component than as an attempt to showcase a final product, we’ve used that guideline to scale back the recipe suggestions and modal views of pantry lists. The wireframing for the website isn’t as rigorous anymore, though I (Jay) still want to explore using Figma (more in my personal blog post).

Account details won’t be as rigorous of a component as we had initially envisioned, and, given that performance isn’t as much of a concern for a few dozen entries per list, we’ve decided to stick with SQLite for the database.
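To give a sense of how small the data model is at this scale, here is a hedged sketch using Python’s built-in `sqlite3` module. The table and column names are made up for illustration and are not our actual schema; in practice the web framework’s own database layer would likely sit in front of SQLite.

```python
import sqlite3


def create_schema(conn):
    """Create hypothetical users and pantry_items tables."""
    conn.executescript(
        """
        CREATE TABLE IF NOT EXISTS users (
            id INTEGER PRIMARY KEY,
            email TEXT NOT NULL UNIQUE
        );
        CREATE TABLE IF NOT EXISTS pantry_items (
            id INTEGER PRIMARY KEY,
            user_id INTEGER NOT NULL REFERENCES users(id),
            name TEXT NOT NULL,
            quantity INTEGER NOT NULL DEFAULT 1
        );
        """
    )


def add_item(conn, user_id, name, quantity=1):
    """Insert one pantry entry for a user."""
    conn.execute(
        "INSERT INTO pantry_items (user_id, name, quantity) VALUES (?, ?, ?)",
        (user_id, name, quantity),
    )
    conn.commit()


def list_items(conn, user_id):
    """Return (name, quantity) rows for a user, sorted by name."""
    cursor = conn.execute(
        "SELECT name, quantity FROM pantry_items WHERE user_id = ? ORDER BY name",
        (user_id,),
    )
    return cursor.fetchall()


# In-memory database for demonstration; a deployment would use a file path.
conn = sqlite3.connect(":memory:")
create_schema(conn)
conn.execute("INSERT INTO users (email) VALUES (?)", ("demo@example.com",))
add_item(conn, 1, "Milk")
add_item(conn, 1, "Eggs", 12)
print(list_items(conn, 1))  # [('Eggs', 12), ('Milk', 1)]
```

With only a few dozen rows per list, queries like this are effectively instantaneous, which is why a heavier database server is not needed here.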

3) Minor housekeeping: after seeing what other groups did for their Gantt charts, we decided that converting our existing Gantt chart into a format requiring less proprietary software would be beneficial for everyone and make updating it less time consuming. Updates to the categories (for legibility) and dates (for feasibility) have been made. New Gantt chart can be found here.

We also moved to a shared GitHub group; the code can be found at: https://github.com/18500-s22-b6.

4) Finalized overall design. After some discussions, we finalized the design of the different components of our project. For the physical front end, we found appropriate hardware and placed the order. For the backend, we decided to use a single EC2 instance to run the webapp and the CV component. Although scalability is important for smart appliances, it is not the priority for this project. Instead, we will put our focus on the CV component and make sure it works properly. We do have a plan to scale up our project, but we will set that as a stretch goal.