Unfortunately, our team did not sort out all the details of our project before the proposal presentation, so this week we have been playing catch-up. We discussed and decided on implementation details and benchmarked different object detection algorithms. While we made progress, it was not enough to catch up to where we feel we ought to be.

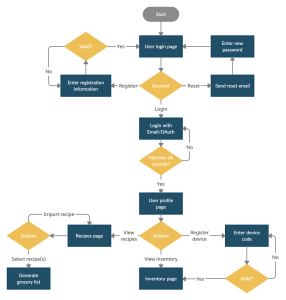

Flow diagram for the website

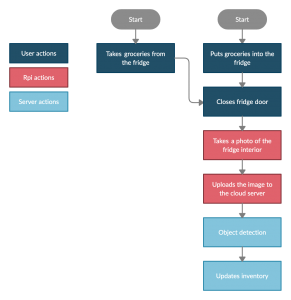

Flow diagram for a user of the system

As shown above, we’ve decided against using a Jetson Nano for hardware. Instead, we will use a cheaper Raspberry Pi (RPi), which will snap photos and send them to the cloud for processing. Our reasoning is that our use case is highly tolerant of delay and sees very low usage (a few times a day at most). As such, there’s no reason to bundle high-power, expensive hardware with the application itself when the processing could just as easily be done remotely. We also don’t expect this to have a significant downside, as the CV component already required internet access to interact with the web app component. Remote processing will become an issue, however, if the project needs to scale up and support thousands of users, at which point the computing power of the cloud server will become a bottleneck. In that case, we will turn to distributed computing.
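As a rough illustration of the RPi-to-cloud hand-off described above, here is a minimal sketch using only the Python standard library. The endpoint URL, the function names, and the choice of a raw JPEG POST body are all assumptions made for illustration; we have not yet fixed the actual API, and capturing the photo from the camera is left out entirely.

```python
import urllib.request

# Hypothetical endpoint: the real URL depends on how the cloud service is deployed.
UPLOAD_URL = "https://example.com/api/photos"


def build_upload_request(jpeg_bytes: bytes, url: str = UPLOAD_URL) -> urllib.request.Request:
    """Wrap raw JPEG bytes in an HTTP POST request for the cloud service."""
    return urllib.request.Request(
        url,
        data=jpeg_bytes,
        headers={"Content-Type": "image/jpeg"},
        method="POST",
    )


def upload_photo(jpeg_bytes: bytes, url: str = UPLOAD_URL, timeout: float = 30.0) -> int:
    """Send one photo and return the HTTP status code.

    A generous timeout is acceptable here because the use case tolerates delay.
    """
    request = build_upload_request(jpeg_bytes, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Since photos are taken only a few times a day, a plain blocking upload like this is likely enough; retries or queuing could be added later if the connection proves unreliable.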

We also decided on the camera hardware: an Arducam OV5647 with a low-distortion wide-angle lens, to be mounted on the ceiling of the storage area. The lens can be removed or changed if it becomes an issue during testing.

The work on benchmarking different object detection algorithms was largely a failure. SIFT currently seems to be the favored algorithm, but there were several issues with the benchmarking. A fuller description of the issues can be found in Keaton’s personal post. Essentially, all the algorithms performed exceedingly poorly on geometrically simple items with no labels or markings (oranges, apples, and bananas). We suspect this was exacerbated by background noise that we thought was insignificant at the time. Because of this, we didn’t feel confident making a firm decision on the algorithm. Since this is the most important component and we are already behind, we will likely devote two people next week to work primarily on this, to ensure we get it up to speed. We will continue the benchmarks over the next week to decide which hardware components and algorithms to use. We will also talk with the faculty members to iterate on our design.
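To make the next round of benchmarks easier to compare, one option is to score every algorithm per item class, so that weak spots such as unlabeled fruit show up directly. The sketch below is an assumption about how such a harness could look, not the code we ran this week: `per_class_accuracy` and the `classify` callable are hypothetical names, and any detector (SIFT-based or otherwise) would need to be wrapped to fit that interface.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, Iterable, Tuple


def per_class_accuracy(
    classify: Callable[[Any], str],
    samples: Iterable[Tuple[Any, str]],
) -> Dict[str, float]:
    """Return the fraction of correctly classified samples for each true label.

    `classify` is a hypothetical wrapper around one detection algorithm that
    maps an image to a predicted label; `samples` yields (image, true_label).
    """
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for image, label in samples:
        total[label] += 1
        if classify(image) == label:
            correct[label] += 1
    return {label: correct[label] / total[label] for label in total}
```

Running the same labeled photo set through each candidate, once with a cluttered background and once with a clean one, would also let us check how much the background noise actually contributed.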