Capstone Final Video

Kelton’s Status Report 04/30/2022

This week I finalized the depth imaging model with Ning, as detailed in the team status report. I then tested the integrated sensor feedback model with Ning and Xiaoran. Through this testing, we made three changes. First, we discovered and fixed latency issues between ultrasonic sensing and vibrational feedback. Second, we reduced the number of threat levels from three to two to make the vibration difference more distinguishable. Third, we made communication between the Raspberry Pi and the Arduino more robust by explicitly checking for milestones like “sensors are activated” in our messaging interface instead of relying on rough estimates of execution times.
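The milestone check described above can be sketched roughly as follows. This is a minimal illustration, not our actual messaging code: the function name `wait_for_milestone`, the `SENSORS_ACTIVATED` message string, and the timeout value are all assumptions made for the example.

```python
import time
from typing import Callable


def wait_for_milestone(read_line: Callable[[], bytes], milestone: str,
                       timeout_s: float = 5.0) -> bool:
    """Poll read_line() until `milestone` arrives or the timeout expires.

    `read_line` is any callable returning one raw line as bytes
    (an empty bytes object means nothing was received).
    The milestone string is hypothetical.
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        line = read_line().decode("ascii", errors="ignore").strip()
        if line == milestone:
            return True  # the other side reported the milestone
    return False  # timed out without seeing the milestone
```

With pySerial, the `readline` method of an open `serial.Serial` port (opened with a read timeout) could be passed in as `read_line`, so the caller proceeds only after the Arduino has actually reported the milestone rather than after a fixed sleep.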

I also tried two ways of launching the Raspberry Pi main script at boot, namely an @reboot cron job and the system startup script rc.local, without success. After boot, the script's process is visible, but the physical system is not activated. For now the system can be demoed by running the script remotely over SSH, and I will troubleshoot this further during the week. Between now and demo day, I will also further test, optimize, and present the system with the team.

Team Status Report 04/30/2022

This week, we mostly tested the system and optimized it based on the test results.

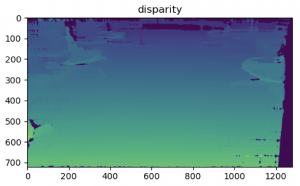

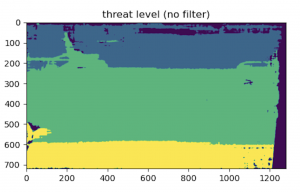

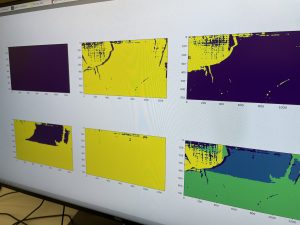

For depth image modeling, we visually examined the threat levels generated from the stereo frame of the depth camera. The disparity frame below was computed in room HH1307.

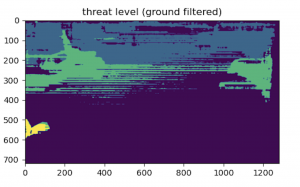

However, the threat levels from our model show that the ground is not recognized as a single region, because its perceived depth depends on the depth camera’s downward orientation.

With the addition of a filter based on a height threshold, we achieve ground segmentation and thus a more realistic threat level, as shown below (the deeper the color, the smaller the threat).
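A height-threshold ground filter of this kind might look like the sketch below. It assumes a per-pixel height estimate (in meters above the floor) is already available alongside the threat map; how that height is derived from the depth frame is not shown, and the 0.10 m threshold and the function name `filter_ground` are hypothetical. Plain nested lists are used to keep the example dependency-free, whereas a real pipeline would more likely operate on NumPy arrays.

```python
GROUND_HEIGHT_M = 0.10  # hypothetical threshold, not our tuned value


def filter_ground(threat, height_m, ground_height_m=GROUND_HEIGHT_M):
    """Return a copy of `threat` with ground pixels forced to level 0.

    `threat` and `height_m` are same-shaped 2D lists: the threat level
    and the estimated height above the floor for each pixel.
    """
    return [
        [0 if h < ground_height_m else t for t, h in zip(t_row, h_row)]
        for t_row, h_row in zip(threat, height_m)
    ]


# Pixels lower than the threshold are treated as ground and cleared.
threat_map = [[1, 1], [2, 1]]
height_map = [[0.50, 0.02], [0.80, 0.05]]
filtered = filter_ground(threat_map, height_map)
```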

Besides accuracy tests such as the one above, we also looked into latency at every stage of the sensing and feedback loop. To make sure that the data sensed by the depth camera and the ultrasonic sensors represent the same time instant, we tuned down the data rate from the Arduino to the Raspberry Pi to match the depth camera.

During user testing, we found it hard to tell the three vibration levels apart, so we reduced the number of threat levels from three to two to make the vibration difference more distinguishable.

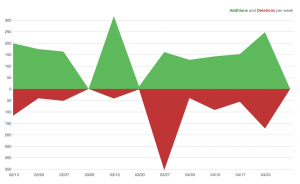

We also made communication between the Raspberry Pi and the Arduino more robust by explicitly checking for milestones like “sensors are activated” in our messaging interface instead of relying on rough estimates of execution times. This marked the 49th commit in our GitHub repository. The code frequency analysis of our repo also shows that 100+ additions and deletions were made every week throughout the semester as we iterated on data communication, depth modeling, etc.

Meanwhile, the physical assembly of electronic components onto the belt is complete: two batteries, the Raspberry Pi, and the Arduino board have been attached in addition to the ultrasonic sensors and vibrators. We also placed our last order, for a USB to barrel jack power cable, so that the depth camera can be powered by its own battery rather than through the Raspberry Pi.

From now until demo day, we will do more testing (latency, accuracy, user experience), design (packaging, demo with real-time visualization of the sensor-to-feedback loop), and presentation work (final poster, video, presentation).

Ning Cao’s status report 04/30/2022

This week my work primarily focused on testing. During testing, we found our system to be less robust than expected, so I worked with Kelton to increase the robustness. I also helped in developing the testing scenarios. Planned testing scenarios include:

- Stationary obstacles: We place multiple objects of varying heights (chairs, boxes, etc.) and lead the blindfolded user wearing the belt (“the user” from here on) to the site. We will ask the user to tell us the direction of the obstacles.

- Moving obstacles: We ask the user to stay still while our team members move in front of the user, effectively acting as moving obstacles. We then ask the user to tell us the movement directions of the moving obstacles.

- Mixed scenarios: We will ask the user to walk down a hallway with obstacles we place in advance. We will time the movements and observe the occurrence of any collisions.

Xiaoran’s report 4/30

This week, I have primarily focused on fixing physical connections and making sure that everything works. We now have a second battery that we plan to attach to the depth camera, so we have more battery life to support both the camera and the Raspberry Pi. I have also planned out some of the exterior design: we will cut a piece of black tablecloth to cover large electronic parts such as the Raspberry Pi, the Arduino, and the breadboard. Since our project is supposed to be wearable, we will also spend some time next week making it visually attractive.

I am also working on Python visualization code for the belt. The Python code will communicate with the Raspberry Pi over a USB cord using serial communication, and will simply receive inputs and visualize the information. I have yet to decide what additional features to add to help us demo the belt, but as a starting point I am planning to display the readings of the six sensors and the vibration levels of the six vibration motors. Passing in depth camera information might be difficult, since frames would take much longer to process than numbers and might slow down the Raspberry Pi, which we want to avoid.
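As a rough sketch of the receiving side, the snippet below parses one serial line into six sensor readings and six vibration levels. The wire format (twelve comma-separated integers per line, distances first), the units, and the names `BeltState` and `parse_belt_line` are assumptions for illustration only, since the actual message format has not been decided.

```python
from typing import NamedTuple, Tuple

NUM_SENSORS = 6  # six ultrasonic sensors and six vibration motors


class BeltState(NamedTuple):
    distances_cm: Tuple[int, ...]      # one reading per sensor (assumed cm)
    vibration_levels: Tuple[int, ...]  # one level per motor


def parse_belt_line(line: bytes) -> BeltState:
    """Parse one line of the hypothetical format 'd1,...,d6,v1,...,v6'."""
    fields = line.decode("ascii").strip().split(",")
    if len(fields) != 2 * NUM_SENSORS:
        raise ValueError(f"expected {2 * NUM_SENSORS} fields, got {len(fields)}")
    values = tuple(int(f) for f in fields)
    return BeltState(values[:NUM_SENSORS], values[NUM_SENSORS:])


state = parse_belt_line(b"120,80,45,200,150,30,0,1,2,0,0,2\n")
```

Keeping the parser separate from the plotting code would let the visualization be tested on recorded lines without the belt attached.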

Next week, I will be finishing up the Python visualization, finishing up our external decorations, and conducting more testing to make sure that our project performs well.

Kelton’s Status Report 04/23/2022

This week I tried using the error between feature pixels and their baseline counterparts to classify the alert levels.

However, the model failed to output different levels regardless of what the depth camera was pointed at. I then worked with Ning and updated the model to classify alert levels based on hard depth thresholds.

Besides depth image modeling, I finalized the design of the final presentation and worked on two more features: Bluetooth audio and running the Raspberry Pi main script at boot.

Xiaoran Lin’s Report 4/23

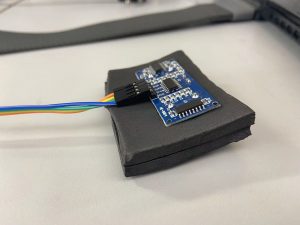

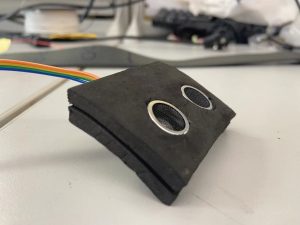

This week, I have focused mainly on restructuring and reassembling the belt. This includes making a new protective cover for the ultrasonic sensors, reconstructing all of the circuits, and checking all of the components again to make sure nothing is wrong. Examples of assembly can be seen below:

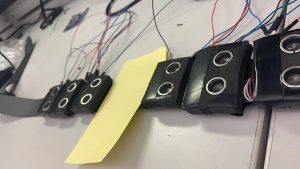

Front of the belt and back of belt (Yellow space is reserved for inserting depth camera)

Sample of circuit assembly

We were able to run some tests, and all of the sensors are working correctly. Tomorrow we will conduct tests with all modules installed.

As for next week, I plan to finish up the visualization parts and add decoration to the belt, covering up more of the underlying structures and adding design elements to fit the bat theme.

Ning Cao’s status report 04/23/2022

This week I mainly focused on working with Kelton on condensing the 720P depth map into 2 threat level numbers. We eventually settled on using hard-coded thresholds to classify threats.

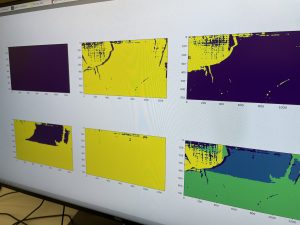

Below is a showcase of our work. From a single frame, we use hard thresholds to generate masks that signal a threat of at least level 1 (bottom center), 2 (bottom left), or 3 (top right). In these masks, the yellow part is where the threat lies. When we add all three masks together and pass the sum through a validity mask (top center; yellow parts are valid), we get the frame simplified to 4 threat levels (bottom right; the brighter the color, the higher the threat level).
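The mask-and-sum idea can be sketched as follows. The threshold distances, the millimeter unit, and the convention that a depth of 0 marks an invalid pixel are assumptions for this example rather than our tuned values, and plain lists stand in for the real depth frame.

```python
# Hypothetical distances for "at least level 1", "at least level 2",
# and "at least level 3"; closer objects trip more thresholds.
THRESHOLDS_MM = (3000, 2000, 1000)


def classify_threat(depth_mm):
    """Map a 2D list of depths (mm) to threat levels 0 to 3.

    Each threshold acts as one "at least level k" mask; summing the
    masks gives the level, and invalid pixels (assumed to be reported
    as depth 0) are forced to level 0 by the validity check.
    """
    result = []
    for row in depth_mm:
        out_row = []
        for d in row:
            valid = d > 0
            level = sum(d < t for t in THRESHOLDS_MM)
            out_row.append(level if valid else 0)
        result.append(out_row)
    return result


levels = classify_threat([[0, 500, 1500], [2500, 3500, 1000]])
```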

We do notice that the ground is assigned a threat level of 1 or 2. We have devised an approach that uses the baseline matrix we generated in previous weeks to filter it out. Hopefully, we can show our result in our presentation next Monday.

Team status report 04/23/2022

This week, our work focused on the depth camera algorithm and the assembly of the belt. We will attach the depth camera to the belt and test the whole system on Sunday.

Below is a showcase of our work on the depth camera. We successfully separated the depth information into 4 threat levels (lv. 0 to 3). The frame on the bottom right is the processed frame, in which the yellow area (a chair in the vicinity) is correctly identified as a level 3 threat. We will filter out the ground (green part) on Sunday before testing.

Below are showcases of our sponge-protected ultrasonic sensors and the partly assembled belt. We expect the sponge to serve as protection as well as cushioning that prevents the vibration of one vibrator from passing through the entire belt. The center of the belt, now covered with a piece of yellow paper, is where the depth camera will be.