This week, we mostly tested the system and optimized it based on the test results.

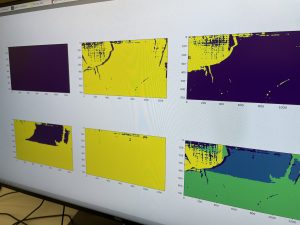

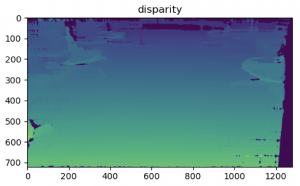

For depth image modeling, we visually examined the threat levels generated from the stereo frames of the depth camera. The disparity frame below was captured in room HH1307.

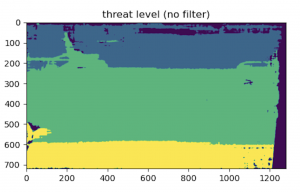

However, the threat levels from our model show that the ground is not recognized as a single surface: because the depth camera points downward, the perceived depth of the floor varies across the frame.

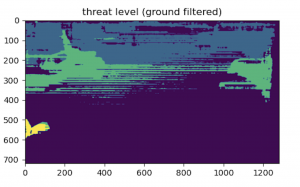

By adding a filter based on a height threshold, we achieve the effect of ground segmentation and thus a more realistic threat level, as shown below (the deeper the color, the smaller the threat).
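To illustrate the idea of a height-threshold filter, here is a minimal sketch, not our exact implementation. It assumes a pinhole camera model with a known vertical focal length `fy` and principal point `cy` (in pixels), a known mounting height, and a known downward pitch. All function names, parameters, and the 0.15 m threshold are hypothetical.

```python
import numpy as np


def height_above_ground(depth_m, fy, cy, camera_height_m, pitch_rad):
    """Per-pixel height above the floor for a camera pitched downward.

    depth_m: 2-D float array of depth along the optical axis, in meters.
    fy, cy: vertical focal length and principal point in pixels (assumed known).
    """
    rows = np.arange(depth_m.shape[0], dtype=np.float64).reshape(-1, 1)
    # Offset of each point along the camera's "down" axis (pinhole model).
    y_cam = (rows - cy) * depth_m / fy
    # Rotate by the pitch to get the vertical drop below the camera.
    drop = y_cam * np.cos(pitch_rad) + depth_m * np.sin(pitch_rad)
    return camera_height_m - drop


def remove_ground(depth_m, fy, cy, camera_height_m, pitch_rad, min_height_m=0.15):
    """Replace ground pixels and invalid (zero) depths with NaN."""
    height = height_above_ground(depth_m, fy, cy, camera_height_m, pitch_rad)
    keep = (depth_m > 0) & (height > min_height_m)
    return np.where(keep, depth_m, np.nan)
```

Pixels that survive the filter can then be passed to the threat model, so that the floor no longer shows up as a set of obstacles at different distances.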

Besides accuracy tests such as the one above, we also looked into latency at every stage of the sensing and feedback loop. To make sure the data from the depth camera and the ultrasonic sensors describe the same time instant, we lowered the data rate from the Arduino to the Raspberry Pi to match that of the depth camera.
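One way to check that the two streams really line up is to pair each depth frame with the nearest ultrasonic reading by timestamp and flag pairs whose skew is too large. The sketch below is a hypothetical test helper, not part of our pipeline; the function name and the 50 ms tolerance are assumptions, and `sensor_times` is assumed to be sorted in ascending order.

```python
from bisect import bisect_left


def pair_by_timestamp(frame_times, sensor_times, max_skew_s=0.05):
    """For each frame time, return the index of the nearest sensor sample.

    Returns None for a frame when no sample lies within max_skew_s seconds.
    sensor_times must be sorted in ascending order.
    """
    pairs = []
    for t in frame_times:
        i = bisect_left(sensor_times, t)
        # The nearest sample is either just before or just after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(sensor_times)]
        best = min(candidates, key=lambda j: abs(sensor_times[j] - t), default=None)
        if best is not None and abs(sensor_times[best] - t) > max_skew_s:
            best = None
        pairs.append(best)
    return pairs
```

A frame that comes back as `None` indicates that the sensor stream was too far out of step with the camera at that moment.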

During user testing, we found it hard to tell the three vibration levels apart, so we reduced the number of threat levels from three to two to make the difference in vibration easier to distinguish.

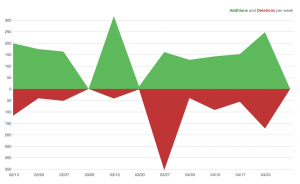
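The two-level scheme can be sketched as a simple mapping from obstacle distance to vibration strength. The distance thresholds, duty cycles, and function names below are illustrative assumptions rather than our tuned values; the point is only that the two active levels sit far apart so they are easy to tell apart on the body.

```python
# Hypothetical duty cycles: 0 = off, 1 = low threat, 2 = high threat.
VIBRATION_DUTY = {0: 0.0, 1: 0.4, 2: 1.0}


def threat_level(distance_m, high_below_m=1.0, low_below_m=2.5):
    """Return 2 (high threat), 1 (low threat), or 0 (nothing in range)."""
    if distance_m < high_below_m:
        return 2
    if distance_m < low_below_m:
        return 1
    return 0


def vibration_duty(distance_m):
    """Map an obstacle distance in meters to a motor duty cycle in [0, 1]."""
    return VIBRATION_DUTY[threat_level(distance_m)]
```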

We also made communication between the Raspberry Pi and the Arduino more robust by explicitly checking for milestones such as “sensors are activated” in our messaging interface instead of relying on rough estimates of execution times. This marked the 49th commit in our GitHub repository. The code frequency analysis of our repo also shows that 100+ additions/deletions were made every week throughout the semester as we iterated on data communication, depth modeling, etc.

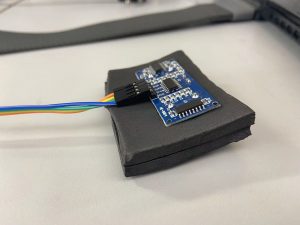

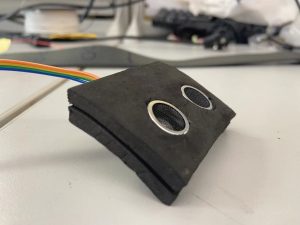

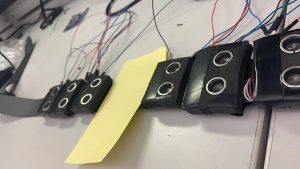
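The milestone check in the messaging interface mentioned above can be sketched as follows. This is a minimal illustration, not our actual code: the function name and the `SENSORS_ACTIVATED` token are hypothetical, and `port` is assumed to be any object whose `readline()` returns bytes and returns an empty result when no data arrives in time (as a serial port opened with a read timeout does).

```python
import time


def wait_for_milestone(port, milestone, timeout_s=5.0):
    """Block until the peer sends the given milestone line, or time out.

    Returns True if the milestone was seen, False if the deadline passed.
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        line = port.readline()
        if not line:
            continue  # the read returned no data; keep waiting
        # Ignore any other status lines and match the milestone exactly.
        if line.decode("ascii", errors="replace").strip() == milestone:
            return True
    return False
```

On the Raspberry Pi side, the caller would only start requesting readings once `wait_for_milestone(port, "SENSORS_ACTIVATED")` returns `True`, instead of sleeping for a fixed time and hoping the Arduino is ready.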

Meanwhile, the physical assembly of the electronic components onto the belt is complete: two batteries, the Raspberry Pi, and the Arduino board have been attached in addition to the ultrasonic sensors and vibrators. We also placed our last order, for a USB to Barrel Jack Power Cable, so that the depth camera can be powered directly by a battery rather than through the Raspberry Pi.

From now until demo day, we will do more testing (latency, accuracy, user experience), design (packaging, a demo with real-time visualization of the sensor-to-feedback loop), and presentation work (final poster, video, presentation).