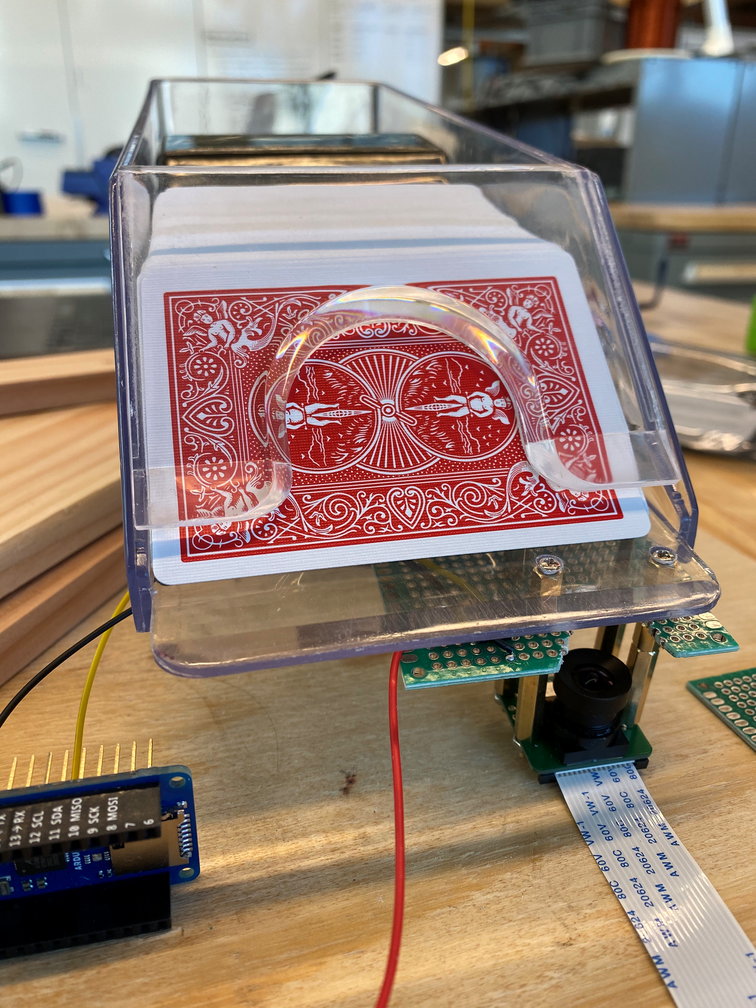

This week, I worked on bringing up the trigger and syncing the camera captures with it. I implemented I2C communications with the ADC evaluation board to sample the analog voltages from the infrared sensor.

The ADC sample rate is currently set to 1600 samples/s, but we can increase it if necessary. Right now, we believe a 0.625 ms sample period (1600 samples/s) is adequate given that the camera shutter time is 16.667 ms. The main Python loop polls the ADC so that it can respond quickly to card triggers.
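As a quick sanity check on those numbers, here is a minimal sketch of the timing arithmetic. The constant names are my own, and the 60 Hz frame rate is taken to be what the 16.667 ms shutter time corresponds to.

```python
# Timing check for the trigger ADC versus the camera (names are illustrative).
SAMPLE_RATE_HZ = 1600   # current ADC sample rate
FRAME_RATE_HZ = 60      # assumed: a 16.667 ms shutter time is one frame at 60 Hz

sample_period_ms = 1000 / SAMPLE_RATE_HZ   # time between ADC samples
frame_period_ms = 1000 / FRAME_RATE_HZ     # time between camera frames

# How many ADC samples fit inside a single frame period.
samples_per_frame = frame_period_ms / sample_period_ms

# Worst-case delay between the card crossing the sensor and the next sample,
# expressed as a fraction of one frame period.
worst_case_latency_fraction = sample_period_ms / frame_period_ms

print(f"sample period:     {sample_period_ms:.3f} ms")
print(f"frame period:      {frame_period_ms:.3f} ms")
print(f"samples per frame: {samples_per_frame:.1f}")
print(f"latency / frame:   {worst_case_latency_fraction:.2%}")
```

With roughly 27 samples per frame, the sampling delay alone is a small fraction of one frame, which is why the current rate seems adequate. This ignores I2C transfer time and any delay in the Python loop itself.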

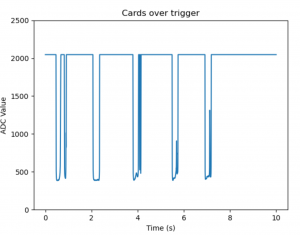

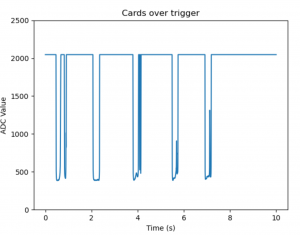

Since the sensor measures infrared reflectance, the measured value depends on the surface’s color. This means that the trigger signal is slightly different for face cards, since contrasting colors (white, black, red) pass over the sensor. However, the white edge of the card, which passes over the trigger first, always creates a voltage that is consistently below the threshold. Therefore, this should not be an issue for our project. The graph below shows the trigger signal when we deal an ace of clubs, five of hearts, nine of spades, jack of spades, and king of hearts. Note that the signal includes spikes for cards with a black rank, but it consistently falls below 500 at the beginning. Perhaps a future revision could learn characteristics of the trigger signal to use as priors when classifying the card, but that is outside the scope of our project.

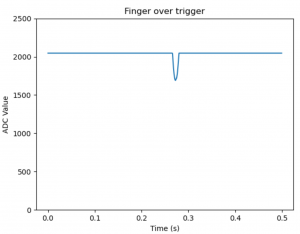

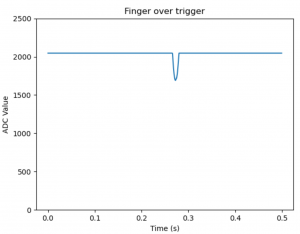

The second graph shows the trigger values when we place a finger directly over the imaging stage, an expected mistake during usage. This signal does not dip below our trigger threshold. We found that holding a phone flashlight directly over the imaging stage will trip the sensor if it is within a few inches, but we have not yet saved those signals.
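The trigger logic described above comes down to a threshold comparison. Below is a minimal sketch of it, assuming a threshold of 500 ADC counts (the level the card traces fall below). The function names, the `read_sample` callable, and the two traces are hypothetical; the traces are made-up numbers shaped like the graphs, not our recorded data.

```python
from typing import Callable, Iterable, Optional

TRIGGER_THRESHOLD = 500  # assumed threshold, in raw ADC counts


def first_trigger_index(samples: Iterable[int],
                        threshold: int = TRIGGER_THRESHOLD) -> Optional[int]:
    """Return the index of the first sample below the threshold, or None."""
    for i, value in enumerate(samples):
        if value < threshold:
            return i
    return None


def wait_for_trigger(read_sample: Callable[[], int],
                     threshold: int = TRIGGER_THRESHOLD,
                     max_samples: int = 10_000) -> bool:
    """Poll read_sample() until a value drops below the threshold.

    read_sample stands in for whatever function performs the I2C read.
    Returns False if no trigger is seen within max_samples reads.
    """
    for _ in range(max_samples):
        if read_sample() < threshold:
            return True
    return False


# Synthetic traces for illustration only (not measured values).
card_trace = [900, 880, 860, 450, 300, 700, 320, 850]   # white edge dips low
finger_trace = [900, 870, 760, 690, 640, 660, 820]      # never crosses 500

print(first_trigger_index(card_trace))    # index of the first low sample
print(first_trigger_index(finger_trace))  # None: a finger does not trigger
```

A plain comparison like this would still be tripped by the flashlight case, so a later version might also require the signal to stay low for a few consecutive samples.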

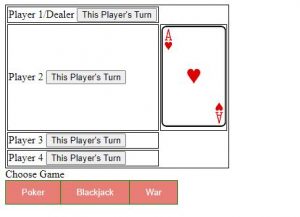

Before we integrated the trigger with the ADC, we used an Arduino to perform A/D conversions. With that prototype, we imaged an entire deck once to obtain a toy dataset for machine learning. Luckily, we found that frame 12 (where frame 0 is the first continuous capture after the trigger) consistently contains the rank and suit in one image. This video loops through each frame-of-interest for each card. This second video shows those frames after preprocessing (cropping and Otsu’s thresholding). This preprocessing is not yet robust enough, since it misses some digits.
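For reference, here is a minimal pure-Python sketch of the two preprocessing steps named above, cropping and Otsu’s thresholding. It is not our actual pipeline: the helper names, crop arguments, and tiny 3x4 "image" are invented for illustration, and in practice a library routine such as OpenCV’s `cv2.threshold` with the `THRESH_OTSU` flag would do the same job.

```python
def crop(image, top, left, height, width):
    """Return the sub-image starting at (top, left) with the given size."""
    return [row[left:left + width] for row in image[top:top + height]]


def otsu_threshold(pixels):
    """Return the 8-bit cutoff t that maximizes between-class variance.

    Pixels <= t form one class (dark ink), pixels > t the other (card).
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_dark, sum_dark = 0, 0
    for t in range(256):
        w_dark += hist[t]
        sum_dark += t * hist[t]
        if w_dark == 0:
            continue            # no dark pixels yet
        w_bright = total - w_dark
        if w_bright == 0:
            break               # every pixel is already in the dark class
        mean_dark = sum_dark / w_dark
        mean_bright = (sum_all - sum_dark) / w_bright
        between_var = w_dark * w_bright * (mean_dark - mean_bright) ** 2
        if between_var > best_var:
            best_var, best_t = between_var, t
    return best_t


# Made-up grayscale patch: bright card stock with a dark stroke.
patch = [
    [210, 205, 30, 200],
    [208, 25, 28, 202],
    [212, 207, 22, 206],
]
region = crop(patch, top=0, left=0, height=3, width=4)  # whole patch here
t = otsu_threshold([p for row in region for p in row])
ink_mask = [[1 if p <= t else 0 for p in row] for row in region]
```

Because Otsu picks one cutoff for the whole crop, a faint stroke that sits close to the background level ends up on the bright side of the cutoff, which matches the missed digits we are seeing.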

Both defocus and motion blur are issues. Even at the camera’s listed minimum object distance of 3 cm, the images are out of focus. The motion blur is due to the 60 Hz frame rate limit, but it only blurs the images in one direction. We can overcome defocus with thresholding, but motion blur is trickier. The “1” in cards of rank “10” is often blurred by motion to the point that it has little contrast in the image. The current global threshold misses that single digit, so I may experiment with adaptive thresholding to see whether it is more sensitive.
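To illustrate why adaptive thresholding might help, here is a small sketch that compares a global cutoff with a local-mean threshold on a made-up row of pixels containing a faint stroke (like the blurred “1”) next to a dark one. The function name, window size, offset, and pixel values are all assumptions for illustration; OpenCV’s `cv2.adaptiveThreshold` is the kind of routine I would likely try on real frames.

```python
def adaptive_threshold(image, window=3, offset=10):
    """Mark a pixel as ink (1) if it is darker than its local mean - offset.

    The local mean is taken over a window x window neighborhood,
    clipped at the image borders.
    """
    h, w = len(image), len(image[0])
    r = window // 2
    mask = []
    for y in range(h):
        row = []
        for x in range(w):
            neighbours = [
                image[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            ]
            local_mean = sum(neighbours) / len(neighbours)
            row.append(1 if image[y][x] < local_mean - offset else 0)
        mask.append(row)
    return mask


def global_threshold(image, cutoff):
    """Mark a pixel as ink (1) if it is darker than one fixed cutoff."""
    return [[1 if p < cutoff else 0 for p in row] for row in image]


# Made-up single-row image: a faint blurred stroke (170) and a dark stroke (40)
# on a bright background (200).
row_image = [[200, 200, 170, 200, 200, 200, 40, 40, 200, 200]]

# 120 is a stand-in for the cutoff a global method would pick here.
global_mask = global_threshold(row_image, cutoff=120)
adaptive_mask = adaptive_threshold(row_image, window=3, offset=10)
```

In this toy case the global mask only keeps the dark stroke, while the adaptive mask also keeps the faint one. The window needs to be wider than the strokes, otherwise the inside of a thick stroke is compared against itself and drops out.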

I was unable to experiment with the lighting system this week since we do not yet have a PCB. While we will continue working without consistent lighting, Ethan plans to work on that next week. I have also not yet finalized the edge detection and cropping to separate the rank and suit, but I do not expect that to take very long. Because of this, I am slightly behind schedule. Now that we have the trigger working, I hope to get back on schedule this week and obtain a larger dataset for Sid to work with.

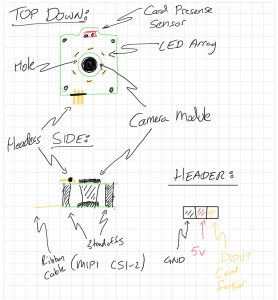

It uses the Arducam module boards we already have, which lets us simplify our design and get the LEDs closer to the base of the card shoe, creating a better lighting environment in the process. There will be an additional header. We are currently debating whether to include a separate PWM-enabled power input for the LEDs to allow for dimming, although this brings its own challenges when it comes to the video and syncing everything together.
