This week, we made a lot of progress on our independent workstreams. The housing prototype has been assembled and is ready for testing. JP is currently testing the cup detection algorithm with the camera positioned the way we want it for our demo. The rear panel of the housing has a support for the touch screen UI that Juan is implementing, and there is space inside for the launching mechanism that Logan is building. We believe the housing is sufficient for our demo, but there will likely be small changes as we continue to test.

We are nearing the point where we can test the integration of our independent workstreams. For our demo, we plan to focus on integrating cup detection and the launcher, since those are the most difficult parts. By the end of this week we should have the following ready to demo:

- Detecting cup rings

- Filtering out erroneous ellipses

- Generating 3D point locations of each detected cup

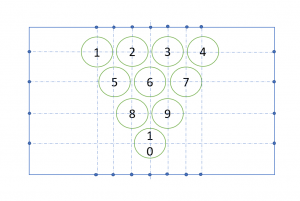

- Mapping each detected cup to the calibration map (linking cup position to cup number: 1, 2, 3, etc.)

- Rotating launcher to aim at specific cup

- Triggering ball launching at specific cup
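
To make the steps in the list a bit more concrete, here is a minimal Python sketch of three of them: rejecting implausible ellipses, matching a 3D point to a numbered cup, and computing a rotation angle for the launcher. Everything specific in it is an assumption for illustration and is not taken from the project code: the calibration coordinates, the thresholds, the function names, and the coordinate frame (launcher at the origin, +z pointing forward, +x to the right, units in metres).

```python
import math

# Hypothetical calibration map: cup number -> (x, y, z) in metres,
# expressed in an assumed launcher-centred frame.
CALIBRATION_MAP = {
    1: (0.00, 0.0, 2.00),
    2: (-0.10, 0.0, 2.17),
    3: (0.10, 0.0, 2.17),
}


def is_plausible_rim(major, minor, min_ratio=0.2, max_ratio=0.9):
    """Keep ellipses whose minor/major axis ratio fits a rim seen at an angle.

    The ratio bounds are placeholder values, not measured ones.
    """
    if major <= 0 or minor <= 0:
        return False
    ratio = minor / major
    return min_ratio <= ratio <= max_ratio


def match_cup(point, calibration=CALIBRATION_MAP, max_dist=0.08):
    """Return the number of the nearest calibrated cup, or None.

    A detection farther than max_dist from every calibrated cup is
    treated as unmatched rather than forced onto the closest one.
    """
    best_id, best_dist = None, max_dist
    for cup_id, ref in calibration.items():
        d = math.dist(point, ref)  # Euclidean distance (Python 3.8+)
        if d <= best_dist:
            best_id, best_dist = cup_id, d
    return best_id


def yaw_to_target(point):
    """Yaw angle in degrees from the forward (+z) axis toward +x."""
    x, _, z = point
    return math.degrees(math.atan2(x, z))
```

For example, `match_cup((0.01, 0.0, 2.01))` resolves to cup 1 under these made-up coordinates, and `yaw_to_target` on that cup's calibrated position gives the angle the launcher would rotate to. Matching by nearest neighbor with a distance cutoff is just one simple option; it keeps a stray detection from being assigned to a real cup.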