I spent the first half of the week converting our build system from MSBuild to CMake. Until the Jetson arrived I was testing our application on my laptop, so MSBuild from Visual Studio was sufficient at the time. CMake lets us compile the same code for both Windows x64 (laptop) and Linux ARM64 (Jetson Nano). The conversion took much longer than I expected, mostly because of dependency issues with OpenCV. Now I can test the camera and ellipse detection on the Jetson, and the application ran smoothly when I ran our testbench on it.

I spent the second half of the week enhancing the coordinate transformations so that I can send the resulting data to the Arduino. Using the camera intrinsics and extrinsics together with the depth map data, I can map 2D image coordinates to 3D position coordinates, so the launcher knows where to aim relative to the camera’s position. Below is a picture describing the projection between a 2D image and 3D space.

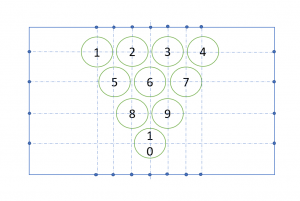
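To make the 2D-to-3D mapping concrete, here is a minimal sketch of the standard pinhole back-projection followed by a rigid camera-to-launcher transform. It is not our actual implementation: the struct and function names (`Intrinsics`, `Extrinsics`, `pixelToCamera`, `cameraToLauncher`) are hypothetical, and it assumes the depth map stores Z distance along the optical axis and that the image has already been undistorted.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;

// Pinhole intrinsics in pixels (hypothetical struct; values come from calibration).
struct Intrinsics {
    double fx, fy;  // focal lengths
    double cx, cy;  // principal point
};

// Extrinsics: rigid transform from the camera frame to the launcher frame,
// p_launcher = R * p_camera + t (hypothetical struct).
struct Extrinsics {
    Mat3 R;  // rotation, row-major
    Vec3 t;  // translation, same units as depth
};

// Back-project pixel (u, v) into the camera frame.
// Assumes `depth` is the Z distance along the optical axis and that lens
// distortion has already been removed from (u, v).
Vec3 pixelToCamera(const Intrinsics& K, double u, double v, double depth) {
    assert(K.fx > 0 && K.fy > 0 && depth > 0);
    return {(u - K.cx) * depth / K.fx,
            (v - K.cy) * depth / K.fy,
            depth};
}

// Express a camera-frame point in the launcher frame: out = R * p + t.
Vec3 cameraToLauncher(const Extrinsics& E, const Vec3& p) {
    Vec3 out{};
    for (int i = 0; i < 3; ++i) {
        out[i] = E.t[i];
        for (int j = 0; j < 3; ++j) out[i] += E.R[i][j] * p[j];
    }
    return out;
}
```

If the intrinsics come from OpenCV's `cv::calibrateCamera`, `fx`, `fy`, `cx` and `cy` are the corresponding entries of the returned camera matrix, and detected pixel coordinates can be undistorted first with `cv::undistortPoints`.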

I also started developing the calibration technique that helps the UI pinpoint which cups in a given formation have been identified. We decided to use a map with dots along its four edges. Connecting the dots on opposite edges forms horizontal and vertical lines, and their intersections mark the predefined cup locations for each formation. This is how our application will identify specific cups to select in the UI. Below is an example of our calibration map.

This coming week I plan to work almost exclusively on testing our detection algorithm on the Jetson Nano. The housing should be dry enough by then to mount the camera on it, so I can do real-time testing on the table we will be using. Until now I have been mounting the camera on a tripod, but the housing will give a more accurate representation of the exact camera angle we will be capturing images from. By the end of the coming week, our application should be able to:

- take images

- detect cups

- get 3D cup coordinates

- link detected cups to predefined cup targets for selection
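The last step, linking detections to predefined targets, could be as simple as a nearest-neighbor match in pixel space. The sketch below is one possible approach, not our implementation: `Pixel`, `linkTargets` and the `maxDistPx` threshold are hypothetical. For each target it returns the index of the closest detected cup center within the threshold, or -1 if that cup was not found.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pixel { double x, y; };

// For each predefined target (e.g. a grid intersection for the current
// formation), return the index of the closest detected cup center within
// maxDistPx, or -1 if no detection is close enough.
std::vector<int> linkTargets(const std::vector<Pixel>& targets,
                             const std::vector<Pixel>& detections,
                             double maxDistPx) {
    assert(maxDistPx >= 0);
    std::vector<int> match(targets.size(), -1);
    for (std::size_t t = 0; t < targets.size(); ++t) {
        double best = maxDistPx;
        for (std::size_t d = 0; d < detections.size(); ++d) {
            const double dist = std::hypot(detections[d].x - targets[t].x,
                                           detections[d].y - targets[t].y);
            if (dist <= best) {  // closer than anything seen so far
                best = dist;
                match[t] = static_cast<int>(d);
            }
        }
    }
    return match;
}
```

Keeping `maxDistPx` below half the spacing between neighboring targets ensures a single detection cannot be linked to two different cups.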