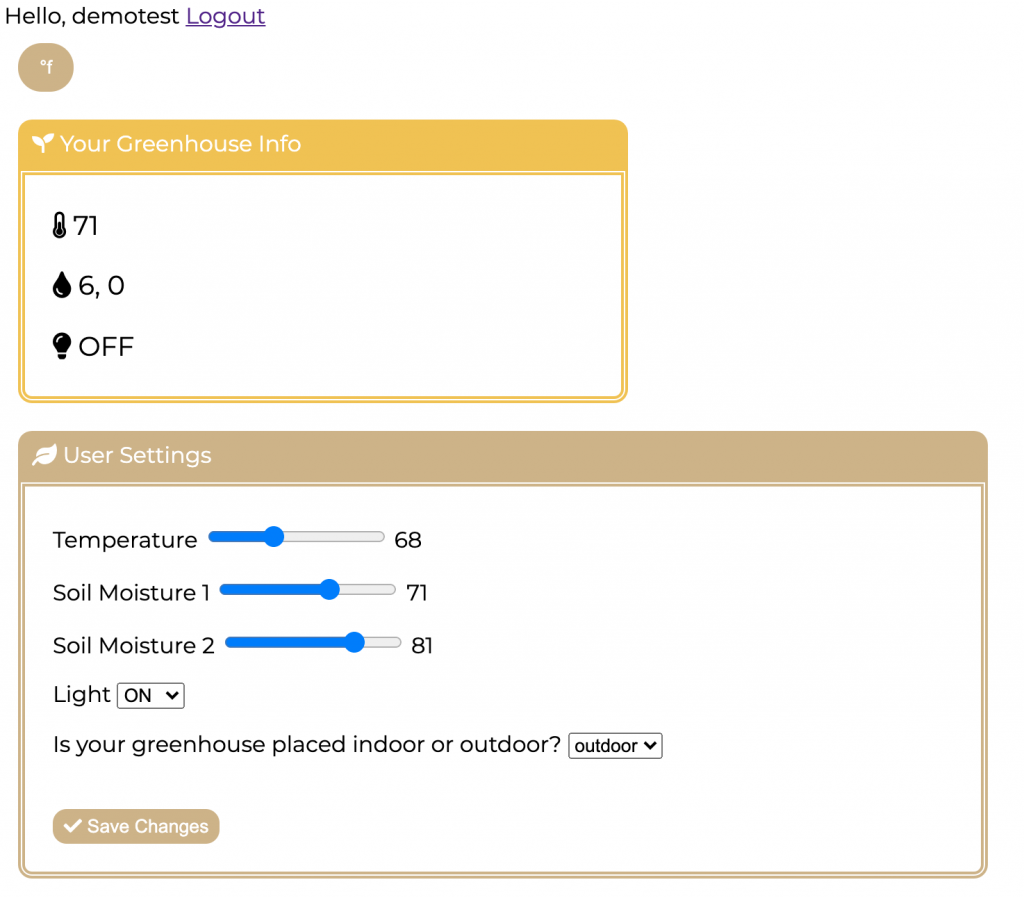

This week, we all met in person and focused on combining our individual parts into our final product. I also implemented a feature on the website that lets users set their lighting schedule (screenshot: https://www.dropbox.com/s/vtao0fk6bdl1m7b/Screen%20Shot%202021-05-08%20at%201.06.46%20AM.png?dl=0).

Hiroko and I ran another round of testing to make sure that users can control all parameters, including heating, soil moisture, and the lighting schedule. I also worked with Sarah to integrate her live stream into the website.

Because our final demo is coming up next week, we also created our final poster. We had a bit of a hard time fitting all the information onto one page because we had a lot of material that we wanted to cover. We also started making our video and hope to finish it by Sunday.

Next week, I will work on the final report and summarize how much of the AWS credits I have used.