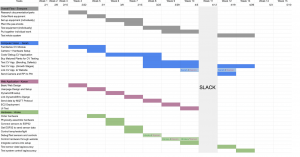

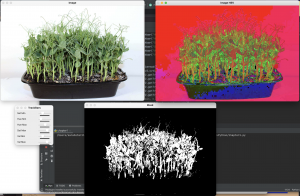

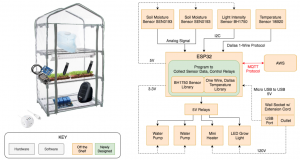

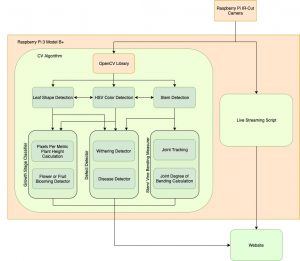

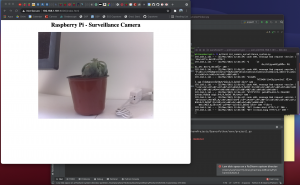

This week, the team and I continued to work on our individual parts. I completed the live-streaming script for 24/7 monitoring of the greenhouse, and I hope to link it to our website once the RPi is sent to Kanon and Hiroko. Instead of running HSV Color Detection and Edge Detection on online images of pea shoots, I ran them on images captured with my RPi of some succulents I have at home, so that the CV is applied under realistic greenhouse/outdoor lighting. Currently, I am working on getting the CV to distinguish leaves from flowers. I’ve also completed my pixels-per-metric calibration by comparing the bounding box that spans the stem and top of the plant in the image against the real size of the succulent I was testing. I figured out a way to work with the RPi without a monitor or keyboard: a VNC viewer connects to my RPi over Wi-Fi and displays the RPi OS on my computer, which lets me test my CV analysis on real-time video from the RPi instead of still RPi images. I also planted some of the pea shoots so that some can sprout by next week and I can properly test my growth stage categorization algorithm on pea shoots rather than succulents.
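To illustrate the pixels-per-metric idea described above, here is a minimal sketch in plain Python. It is not the actual script running on the RPi: the `BoundingBox` type, the function names, and especially the growth-stage thresholds are hypothetical placeholders. It assumes the bounding box has already been found by the CV step and that the real height of the reference plant was measured by hand.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """Axis-aligned box in image coordinates (hypothetical helper type)."""
    x: int
    y: int
    width: int
    height: int


def pixels_per_metric(reference_box: BoundingBox, real_height_cm: float) -> float:
    """Calibrate: how many pixels correspond to one centimetre.

    reference_box is the box around a plant whose real height is known.
    """
    if real_height_cm <= 0:
        raise ValueError("real_height_cm must be positive")
    return reference_box.height / real_height_cm


def estimate_height_cm(box: BoundingBox, ppm: float) -> float:
    """Convert a box height in pixels to centimetres using the calibration."""
    if ppm <= 0:
        raise ValueError("ppm must be positive")
    return box.height / ppm


def growth_stage(height_cm: float) -> str:
    """Map an estimated height to a stage.

    The thresholds below are made up for illustration only; real values
    would have to come from measuring actual pea shoots.
    """
    if height_cm < 2.0:
        return "sprout"
    if height_cm < 8.0:
        return "seedling"
    return "harvest-ready"


if __name__ == "__main__":
    # Reference plant: 300 px tall in the image, 6.0 cm tall in reality.
    ppm = pixels_per_metric(BoundingBox(0, 0, 120, 300), 6.0)
    # Another plant at the same distance from the camera: 450 px tall.
    height = estimate_height_cm(BoundingBox(200, 50, 100, 450), ppm)
    print(ppm, height, growth_stage(height))
```

The calibration only holds while the camera distance and angle stay the same as when the reference plant was measured, so a fixed camera mount in the greenhouse matters for this approach.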

I am slightly behind schedule, as I wanted to test the night vision and get the flower and leaf recognition implemented this week. However, I completed the growth stage pixels-per-metric algorithm and refined my HSV Color Detection and Edge Detection. My RPi stopped working at the beginning of the week, so I had to borrow my friend’s RPi until my new one arrived.

Next week, I hope to test my growth stage classifier on the sprouting pea shoots. I would also like to finish implementing leaf and flower recognition, so that I have the minimum needed to test defect detection, which would apply another layer of HSV Color Detection to the leaf and flower regions. I will also make sure that the 24/7 monitoring works at night.
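As a rough idea of what the second HSV layer for defect detection could look like, here is a small sketch using only Python's standard `colorsys` module. It is an assumption about the approach, not the planned implementation: the function names, the hue and saturation ranges, and the idea of scoring a leaf by its fraction of discolored pixels are all hypothetical. It assumes the leaf/flower recognition step has already produced a boolean mask for the region of interest.

```python
import colorsys

# Hypothetical ranges: hue in degrees (0-360), saturation in 0-1.
# Yellow-to-brown hues are treated as "discolored"; these numbers are
# illustrative and would need tuning under real greenhouse lighting.
DISCOLORED_HUE_RANGE = (15.0, 70.0)
MIN_SATURATION = 0.25


def to_hsv_degrees(rgb):
    """Convert an (R, G, B) tuple of 0-255 ints to (hue in degrees, s, v)."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return h * 360.0, s, v


def is_discolored(rgb):
    """True if the pixel is a saturated yellow/brown under the ranges above."""
    hue, sat, _ = to_hsv_degrees(rgb)
    low, high = DISCOLORED_HUE_RANGE
    return sat >= MIN_SATURATION and low <= hue < high


def defect_fraction(pixels, region_mask):
    """Fraction of masked pixels that look discolored.

    pixels: rows of (R, G, B) tuples.
    region_mask: rows of booleans, True where the first layer found a leaf
    or flower. Returns 0.0 when the mask selects no pixels.
    """
    total = 0
    discolored = 0
    for pixel_row, mask_row in zip(pixels, region_mask):
        for rgb, inside in zip(pixel_row, mask_row):
            if not inside:
                continue
            total += 1
            if is_discolored(rgb):
                discolored += 1
    return discolored / total if total else 0.0


if __name__ == "__main__":
    image = [
        [(40, 160, 40), (200, 180, 40)],  # healthy green, yellowish
        [(40, 160, 40), (0, 0, 0)],       # healthy green, background
    ]
    mask = [
        [True, True],
        [True, False],
    ]
    print(defect_fraction(image, mask))
```

With a real camera feed, the same thresholding would normally be done on whole arrays (for example with OpenCV) rather than pixel by pixel; the loop here just keeps the logic visible.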
