This week we worked on further integrating the web app, gantry, and computer vision pieces. We have refined and calibrated the x-y motion of the gantry system and are now integrating the z-motion, which works on its own. The computer vision is still somewhat unreliable, so we plan to spend much of this week improving its accuracy. We also plan to work on fully integrating our three subsystems.

Lillie’s Status Report for 04/24/2021

This week I worked on refining the gripper mechanism and redesigned the plate for the y-front axis so that the pulley that raises and lowers the gripper stays on more securely. I also worked a bit on the website and have been researching WebSockets, since updating multiple pages at once with AJAX wasn't working.

This week I plan to finalize the web app's interface and functionality and do more movement testing with Juan.

Team Status Report for 04/10/2021

This week we worked on attaching the rest of the gantry parts. In the pictures below you can see the gantry on its stands, with the Raspberry Pi and camera mounted on top. We had been having issues with weight distribution, but Lillie's stands and mounts resolved them. We have also been testing our gripper mechanism along with the Z-axis, but we did not take a picture before leaving and will show it during the demo instead. Interfacing between subsystems has improved: we are now sending data between the Pi, the Arduino, and the web server. We will continue working on the interfacing this week.

Juan’s Status Report for 04/10/2021

This week I worked on the control of the gantry and on the website. For gantry control, I am able to move to every square using the Universal Gcode Sender. As I mentioned before, this serves more as a testing platform, since we need to continuously stream commands from our Raspberry Pi to the Arduino. To do this, I have been writing an Arduino script that uses the AccelStepper and MultiStepper libraries to drive the motors to desired locations. Currently it moves to fixed locations while we test the Z mechanism together with the X-Y motion.
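To illustrate what the Pi might stream to that Arduino script, here is a minimal sketch. It assumes a hypothetical line protocol in which the Arduino reads `X<steps> Y<steps>` terminated by a newline and passes the two absolute targets to `MultiStepper::moveTo()`. The geometry constants (`SQUARE_MM`, `STEPS_PER_MM`, `ORIGIN_MM`) and the helper names are placeholders, not measured values or our actual code.

```python
SQUARE_MM = 50.0          # hypothetical: edge length of one board square
STEPS_PER_MM = 80.0       # hypothetical: motor steps per mm of carriage travel
ORIGIN_MM = (25.0, 25.0)  # hypothetical: carriage position over the centre of a1
FILES = "abcdefgh"


def square_to_steps(square: str) -> tuple[int, int]:
    """Convert an algebraic square such as 'e4' into absolute (x, y) step targets."""
    if len(square) != 2:
        raise ValueError(f"bad square: {square!r}")
    file_idx = FILES.index(square[0])  # raises ValueError for files outside a-h
    rank_idx = int(square[1]) - 1      # raises ValueError for non-digit ranks
    if not 0 <= rank_idx < 8:
        raise ValueError(f"bad square: {square!r}")
    x_mm = ORIGIN_MM[0] + file_idx * SQUARE_MM
    y_mm = ORIGIN_MM[1] + rank_idx * SQUARE_MM
    return round(x_mm * STEPS_PER_MM), round(y_mm * STEPS_PER_MM)


def encode_target(square: str) -> bytes:
    """Build one newline-terminated command in the hypothetical 'X<steps> Y<steps>' format."""
    x, y = square_to_steps(square)
    return f"X{x} Y{y}\n".encode("ascii")
```

With these placeholder constants, `encode_target("e4")` yields `b"X18000 Y14000\n"`. On the Pi, the bytes could be written with pyserial's `Serial.write()`. Sending absolute step targets, rather than relative moves, keeps a dropped or repeated command from accumulating position error.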

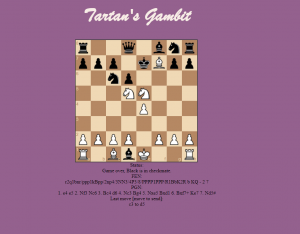

For the website, I have used the chess.js library to implement a full chess game. Currently the same user has to make the moves for both sides, but only legal moves are allowed. Moves are logged in standard chess notation, and the previous move is displayed. The previous move is the output that the Pi will use to determine the next stepper input. The FEN is the literal string that chessboard.js uses to display the board position; we are using it as a debugging tool.
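One way the Pi could turn the previous move into gantry work is sketched below. It assumes the web app forwards the `from`, `to`, and `flags` fields of chess.js's verbose move object instead of the notation string. In chess.js, the flag `c` marks a capture, `e` an en passant capture, and `k`/`q` kingside or queenside castling. The action names and the off-board `GRAVEYARD` spot are hypothetical, and promotion is not handled.

```python
from typing import List, Tuple

GRAVEYARD = "off"  # hypothetical off-board spot for captured pieces

Action = Tuple[str, str]  # (verb, square)


def pick_and_place(src: str, dst: str) -> List[Action]:
    """One piece transfer: travel to src, grip, travel to dst, release."""
    return [("move", src), ("grip", src), ("move", dst), ("release", dst)]


def plan_move(frm: str, to: str, flags: str) -> List[Action]:
    """Expand a chess.js-style move (from, to, flags) into gantry actions."""
    actions: List[Action] = []
    if "c" in flags:
        # Clear the destination square before the moving piece arrives.
        actions += pick_and_place(to, GRAVEYARD)
    elif "e" in flags:
        # En passant: the captured pawn sits on the destination file, origin rank.
        actions += pick_and_place(to[0] + frm[1], GRAVEYARD)
    actions += pick_and_place(frm, to)
    if "k" in flags:
        # Kingside castling: the rook jumps from the h-file to the f-file.
        actions += pick_and_place("h" + frm[1], "f" + frm[1])
    elif "q" in flags:
        # Queenside castling: the rook moves from the a-file to the d-file.
        actions += pick_and_place("a" + frm[1], "d" + frm[1])
    return actions
```

For example, `plan_move("e1", "g1", "k")` yields eight actions: the king's transfer followed by the rook's. Relying on the move flags avoids re-deriving captures and castling from the notation on the Pi.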

This week I will be further developing the Arduino code so that we can fully control the gantry positioning through the serial stream. I will also be working on improving the website interface and user experience.

Luis’ Status Report for 04/10/2021

This week I worked with my team to do some initial system integration. We found a few kinks that we were able to work through. The OpenCV algorithm requires some adjustments, which I’ve been working on.

First, the line detection needs refining. Running it in real time produced unstable lines, so the current plan is to detect the line locations once on a clear board before the game starts. Getting all the lines to come out cleanly this way has been more difficult than expected. My current approach uses adaptive thresholding to isolate the board squares as solid colors; unfortunately, the camera picks up slight variations in the wood grain and those carry through, so this is still a work in progress.
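To show why grain can survive adaptive thresholding, here is a pure-Python re-implementation of the mean-minus-C rule. It is the rule OpenCV's `cv2.adaptiveThreshold` applies with `ADAPTIVE_THRESH_MEAN_C` and `THRESH_BINARY`: a pixel turns white when it is brighter than its local mean minus `C`. The toy image and parameter values are made up for illustration, and border handling differs slightly from OpenCV's.

```python
from typing import List

Image = List[List[int]]


def adaptive_mean_threshold(img: Image, block: int, c: float) -> Image:
    """Binarise img: 255 where a pixel exceeds (mean of its block x block window - c), else 0.

    Windows are clipped at the image border; OpenCV replicates the border
    instead, so edge pixels can differ slightly from cv2.adaptiveThreshold.
    """
    if block % 2 == 0:
        raise ValueError("block size must be odd")
    h, w = len(img), len(img[0])
    r = block // 2
    out: Image = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - r), min(h, y + r + 1))
                    for i in range(max(0, x - r), min(w, x + r + 1))]
            mean = sum(vals) / len(vals)
            row.append(255 if img[y][x] > mean - c else 0)
        out.append(row)
    return out


# Toy patch: light wood with a faint 180/184 grain and a dark grid line in column 3.
grain = [[180 if (x + y) % 2 else 184 for x in range(7)] for y in range(5)]
for row in grain:
    row[3] = 40
```

On this patch, `c=0` turns the darker grain pixels black, producing speckle. With `c=5`, every row becomes `[255, 255, 255, 0, 255, 255, 255]`, so only the line survives. The OpenCV equivalent would be `cv2.adaptiveThreshold(gray, 255, cv2.ADAPTIVE_THRESH_MEAN_C, cv2.THRESH_BINARY, 3, 5)`. Raising `C`, or blurring before thresholding, trades grain suppression against sensitivity to faint lines.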

I also began work on move detection. The current approach, which uses image subtraction, contour finding, and the contours' centers, looks very promising. The issue is that shadows break the contours apart, so it still needs some refining.
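As a possible fallback for the shadow problem, the sketch below keeps the image-subtraction step but swaps contour finding for a simpler per-square tally of changed pixels. Because it never needs a contour to stay connected, a shadow that splits a blob matters less, although shadows can still add counts to neighbouring squares. It assumes grayscale frames that are equally sized, already cropped to the board, and oriented with a1 at the bottom-left. `changed_squares` and its thresholds are hypothetical.

```python
from typing import Dict, List

Image = List[List[int]]
FILES = "abcdefgh"


def changed_squares(before: Image, after: Image, diff_thresh: int = 30,
                    top: int = 2) -> List[str]:
    """Return up to `top` squares with the most pixels whose brightness changed.

    The frames are subtracted pixel by pixel. Each pixel whose absolute
    difference exceeds diff_thresh is credited to the square it falls in.
    """
    h, w = len(before), len(before[0])
    counts: Dict[str, int] = {}
    for y in range(h):
        for x in range(w):
            if abs(after[y][x] - before[y][x]) > diff_thresh:
                file_idx = x * 8 // w
                rank_idx = 7 - (y * 8 // h)  # image row 0 is rank 8
                sq = FILES[file_idx] + str(rank_idx + 1)
                counts[sq] = counts.get(sq, 0) + 1
    return sorted(counts, key=counts.get, reverse=True)[:top]
```

For a normal move, the two returned squares are the origin and destination. Telling which is which still needs extra information, such as which square is now empty on the reference clear-board image.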

Unfortunately, I forgot to save screenshots while testing the code, so I will have to show the results during the demo on Monday.

This upcoming week I plan to continue refining these two parts of the OpenCV code. I also plan to begin transitioning the code from its current testing form, a single large loop, into a more usable code base. I previously mentioned that I wanted to figure out what to send the Arduino to control the gantry; this has been pushed back, and I will work with Juan to make sure our parts interface well.

Lillie’s Status Report for 04/10/2021

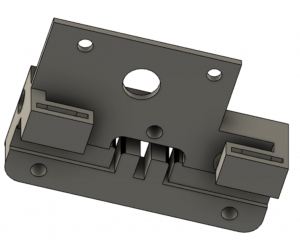

This week I worked on remodeling the y-front attachment (pictured above), assembling the gripper, and designing the z-motion mechanism. I was able to do some testing with the gripper attached to the gantry, and the elevator mechanism for the z motion works.

I also designed and laser cut the camera and Raspberry Pi mount.

This week I am going to figure out how to make the gripper arms better suited to picking up pieces without slipping. I am also going to work on refining the website and look into Django Channels to figure out how to send information to and receive it from the Raspberry Pi.

Lillie’s Status Report for 04/03/2021

This week I spent a lot of time in TechSpark prototyping the camera mount and gripper mount for the gantry, as well as refining the gripper model itself. Juan and I ran into an issue with the stepper motor we planned to use to raise and lower the gripper: its maximum RPM is far too low and would prevent us from meeting the timing requirements we defined as our measure of successful execution.

This week I will look into an alternative motor for the z motion of the gripper. I will also be implementing the website layout Luis has drawn up. Juan and I will set up the timing belt in the gantry system and test the x-y motion. I will also finish laser cutting and assembling the camera mount this week.

Team Status Report for 04/03/2021

This week the team worked in TechSpark to test motor control on the gantry. The Z-axis mechanism has also been assembled and attached to the gantry, and it is being tested. This coming week we will be attaching the Z-axis to the rest of the gantry and testing the combined movement for stability and accuracy. We will also further develop the interfacing between the Pi and the web server.

Juan’s Status Report for 04/03/2021

This week I have worked on interfacing and programming the stepper motors for the gantry. We are now able to send G-code commands to our motors through GRBL using the Universal Gcode Sender. This serves as a testing platform to ensure that we can move our motors to the desired locations. We had some hardware issues, but after debugging I found that one of the motor driver potentiometers was damaged.

This week I will be finding the G-code commands needed for each square position, as well as beginning to develop a way to continuously send G-code to the CNC shield.
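Both tasks can be sketched together. The first function generates an absolute-mode `G1` move to a square's centre. The second streams lines to GRBL using its simple send-response approach: send one line, then wait for `ok` (or `error:`/`ALARM`) before sending the next. The square size, origin, and feed rate are hypothetical. The serial port is passed in as `write`/`readline` callables so the sketch does not depend on any particular port name.

```python
from typing import Callable, Iterable

SQUARE_MM = 50.0          # hypothetical square edge length
ORIGIN_MM = (25.0, 25.0)  # hypothetical machine coordinates of the centre of a1
FILES = "abcdefgh"


def square_gcode(square: str, feed_mm_min: int = 3000) -> str:
    """Absolute-mode (G90) linear move (G1) to the centre of an algebraic square."""
    x = ORIGIN_MM[0] + FILES.index(square[0]) * SQUARE_MM
    y = ORIGIN_MM[1] + (int(square[1]) - 1) * SQUARE_MM
    return f"G90 G1 X{x:.1f} Y{y:.1f} F{feed_mm_min}"


def stream(lines: Iterable[str], write: Callable[[bytes], object],
           readline: Callable[[], bytes]) -> None:
    """Send G-code one line at a time, waiting for GRBL's reply before the next."""
    for line in lines:
        write((line.strip() + "\n").encode("ascii"))
        while True:
            raw = readline()
            if not raw:
                # pyserial's readline() returns b"" when its timeout expires.
                raise TimeoutError(f"no reply to {line!r}")
            reply = raw.decode("ascii", "replace").strip()
            if reply == "ok":
                break
            if reply.startswith(("error", "ALARM")):
                raise RuntimeError(f"GRBL rejected {line!r}: {reply}")
            # Other output (startup banner, [MSG:...] lines) is skipped.
```

With pyserial, the sketch could be driven as `ser = serial.Serial("/dev/ttyACM0", 115200, timeout=5)` followed by `stream([square_gcode("e4")], ser.write, ser.readline)`. The port name is an assumption. Waiting for each `ok` is slower than GRBL's character-counting scheme, but it is the simplest way to keep the Pi and the planner in step.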

Luis’ Status Report for 04/03/2021

This week I continued my work on piece detection. Currently we are using HoughCircles and HoughLines to determine board and piece locations. I've also been working with Lillie on the website: I've created a basic design for it, which Lillie will then implement.

My main goal this upcoming week is to use image subtraction to detect movement. I anticipate that this will require some changes to the Hough algorithms, and I will adapt them to work best with motion detection. I may also change the way board line detection is performed so that we detect only the outermost edges and divide their lengths to find the inner squares; this should use less memory and video processing power. After this, which may have to wait until the following week, I hope to start interfacing this with the Arduino and CNC controller. I anticipate that connecting the two may require the Raspberry Pi to output different information about the locations, and I will adjust it accordingly.
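The edge-division idea can be sketched as bilinear interpolation between the board's four outer corners, which gives all 64 square centres from just four detected points. The corner names and pixel coordinates are hypothetical. Bilinear interpolation is exact only when the board appears as a parallelogram (camera looking straight down). A tilted camera would call for a perspective transform (homography) instead.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]
FILES = "abcdefgh"


def square_centres(a1: Point, h1: Point, h8: Point, a8: Point) -> Dict[str, Point]:
    """Interpolate the 64 square centres from the board's four outer corner pixels.

    Each argument is the outer corner of the board nearest that named square.
    """
    centres: Dict[str, Point] = {}
    for f in range(8):
        u = (f + 0.5) / 8  # fraction of the way from the a-file edge to the h-file edge
        for r in range(8):
            v = (r + 0.5) / 8  # fraction of the way from the rank-1 edge to the rank-8 edge
            x = ((1 - u) * (1 - v) * a1[0] + u * (1 - v) * h1[0]
                 + u * v * h8[0] + (1 - u) * v * a8[0])
            y = ((1 - u) * (1 - v) * a1[1] + u * (1 - v) * h1[1]
                 + u * v * h8[1] + (1 - u) * v * a8[1])
            centres[FILES[f] + str(r + 1)] = (x, y)
    return centres
```

For an 800 x 800 px board seen straight on, with image y pointing down, `square_centres((0, 800), (800, 800), (800, 0), (0, 0))` places a1 at (50, 750) and e4 at (450, 450). Because only four points are detected, per-frame cost stays low. The trade-off is that any error in one corner shifts a whole region of squares.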