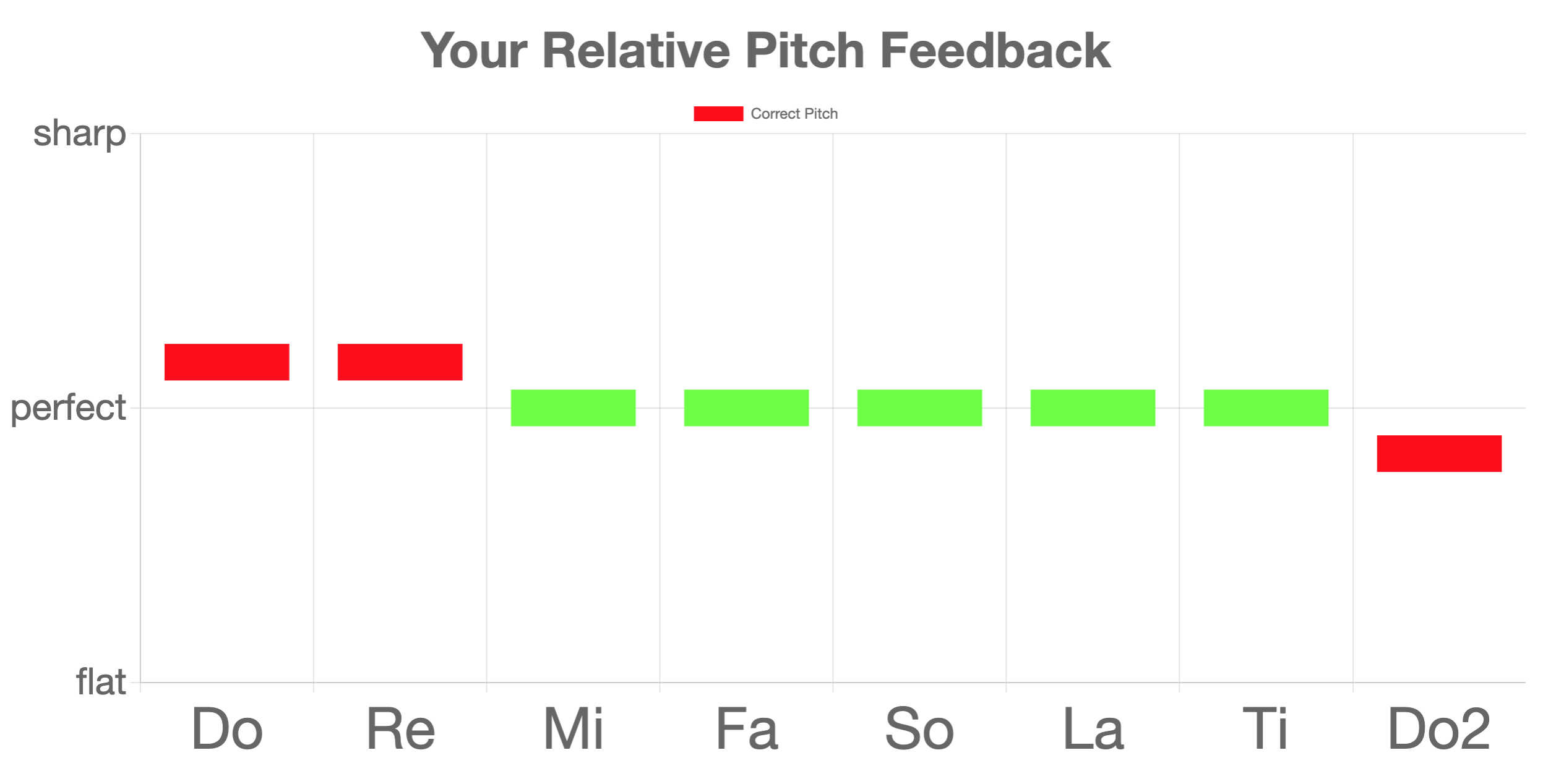

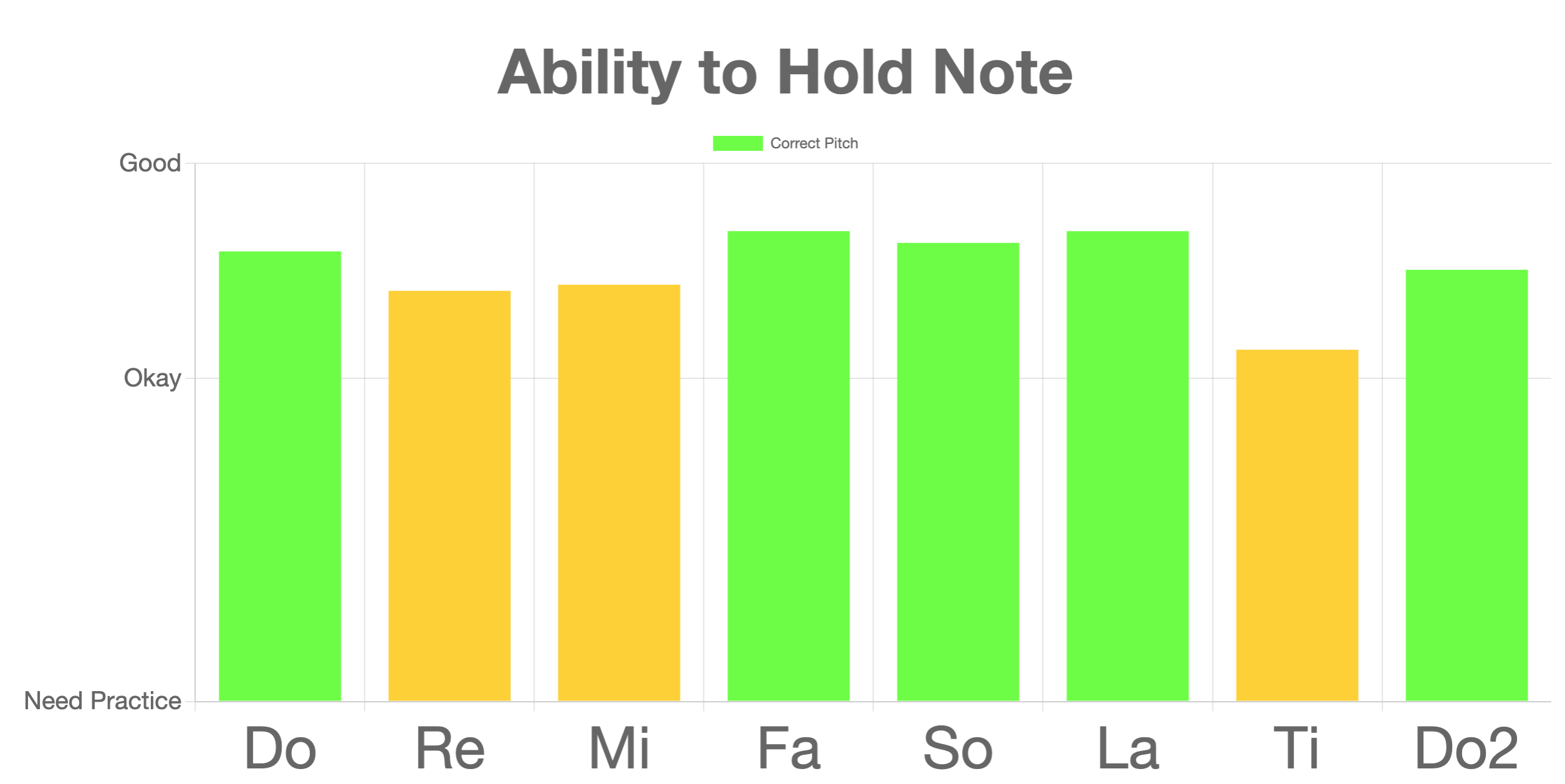

This week, I was able to finalize the note and clap detection algorithms, as well as the singing feature extraction and vocal range calculation. The singing features I chose in the end were: scale transposition, relative pitch difference per note, relative pitch transition difference, note duration, and interquartile range. Scale transposition measures by how many half-steps the user's performance most commonly differs from the exercise, and is calculated by taking the mode of the absolute pitch differences. The relative pitch difference per note measures how sharp or flat a user is with respect to the transposed scale. The relative pitch transition difference per note measures how the user's pitch changes from note to note. The note duration is simply how long the user holds each note. Finally, the interquartile range measures how much the user's pitch varies within each note; too much variance indicates that the singer is not doing a good job of holding the note. Vocal range is calculated by having users record their lowest and highest tones; it is used to guide the reference tones in the exercises and can be recalibrated by the user at any time. Funmbi, Sai, and I have successfully integrated our parts for the pitch and clap exercises and are now working on finishing touches and web deployment.
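A minimal sketch of how these features could be computed, assuming each sung note has already been reduced to a fractional MIDI note number (for example, the median of its pitch frames) and that the exercise is given as integer MIDI notes. The function names, the use of cents, and the exact definition of the transition difference are illustrative assumptions rather than the exact implementation in our app.

```python
import math
from collections import Counter
from statistics import quantiles


def hz_to_midi(freq_hz):
    """Convert a frequency in Hz to a fractional MIDI note number (A4 = 440 Hz = 69)."""
    return 69.0 + 12.0 * math.log2(freq_hz / 440.0)


def scale_transposition(sung, expected):
    """Most common whole-semitone offset (the mode) between sung and expected notes."""
    offsets = [round(s - e) for s, e in zip(sung, expected)]
    return Counter(offsets).most_common(1)[0][0]


def relative_pitch_diff_cents(sung, expected, shift):
    """Per-note deviation from the transposed scale: positive = sharp, negative = flat."""
    return [100.0 * (s - (e + shift)) for s, e in zip(sung, expected)]


def transition_diff_cents(sung, expected):
    """How much each sung note-to-note interval differs from the expected interval."""
    return [
        100.0 * ((sung[i + 1] - sung[i]) - (expected[i + 1] - expected[i]))
        for i in range(len(sung) - 1)
    ]


def pitch_iqr_cents(frames_midi):
    """Interquartile range of the pitch frames within one held note, in cents."""
    q1, _, q3 = quantiles(frames_midi, n=4)
    return 100.0 * (q3 - q1)
```

For a user who sings C-D-E (MIDI 60, 62, 64) as 62.1, 63.9, 66.2, the transposition comes out as 2 half-steps, and the per-note differences are then about +10, -10, and +20 cents relative to the transposed scale. Working in cents keeps the "sharp/flat" numbers independent of which key the user happened to start in.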

Funmbi’s Status Report: 5/8/2021

This week I finished organizing all the feedback for the rhythm and theory exercises, including the navigation from completing an exercise to viewing its feedback. I also began working on the cloud deployment. However, I ran into an issue there that I haven't been able to solve yet, so I will be working on it through the weekend until Monday to hopefully get it fixed.

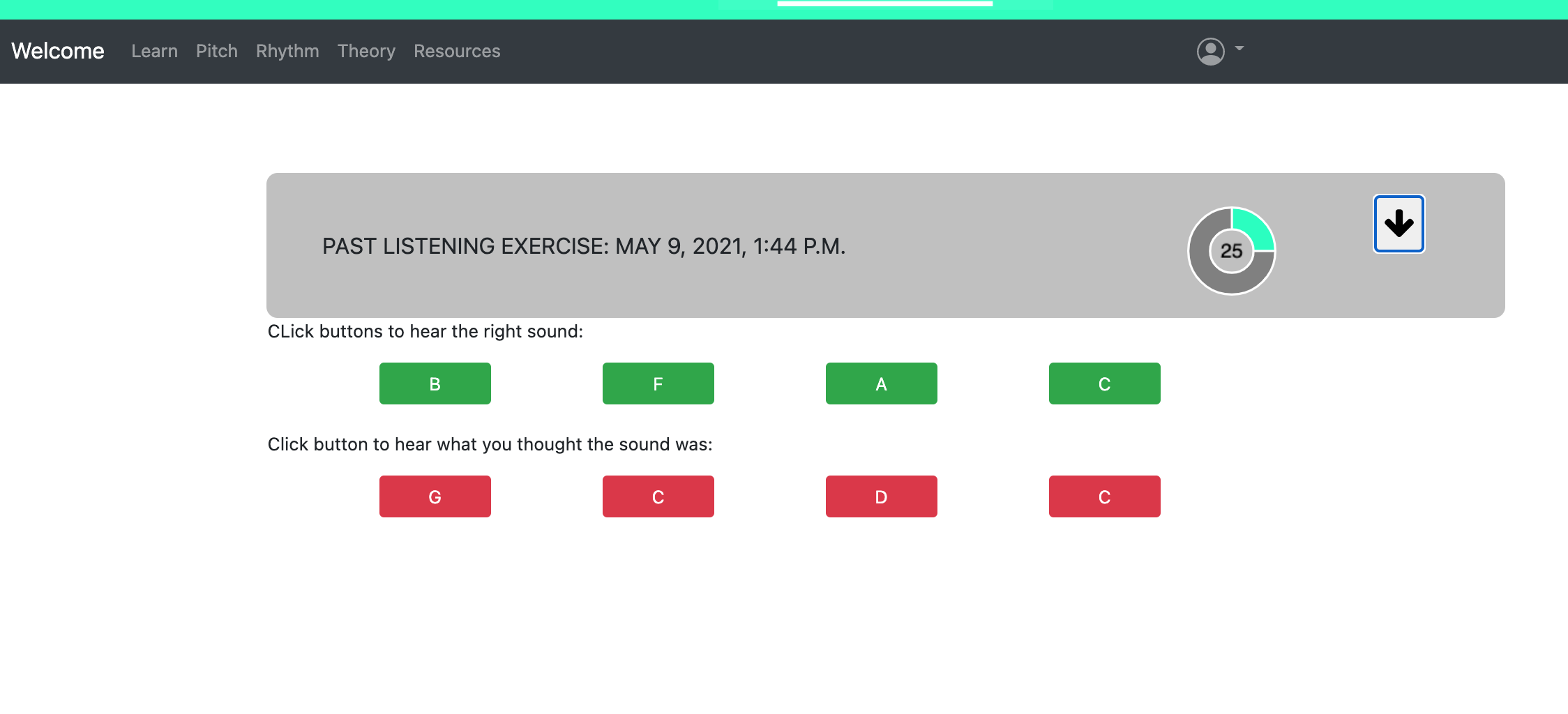

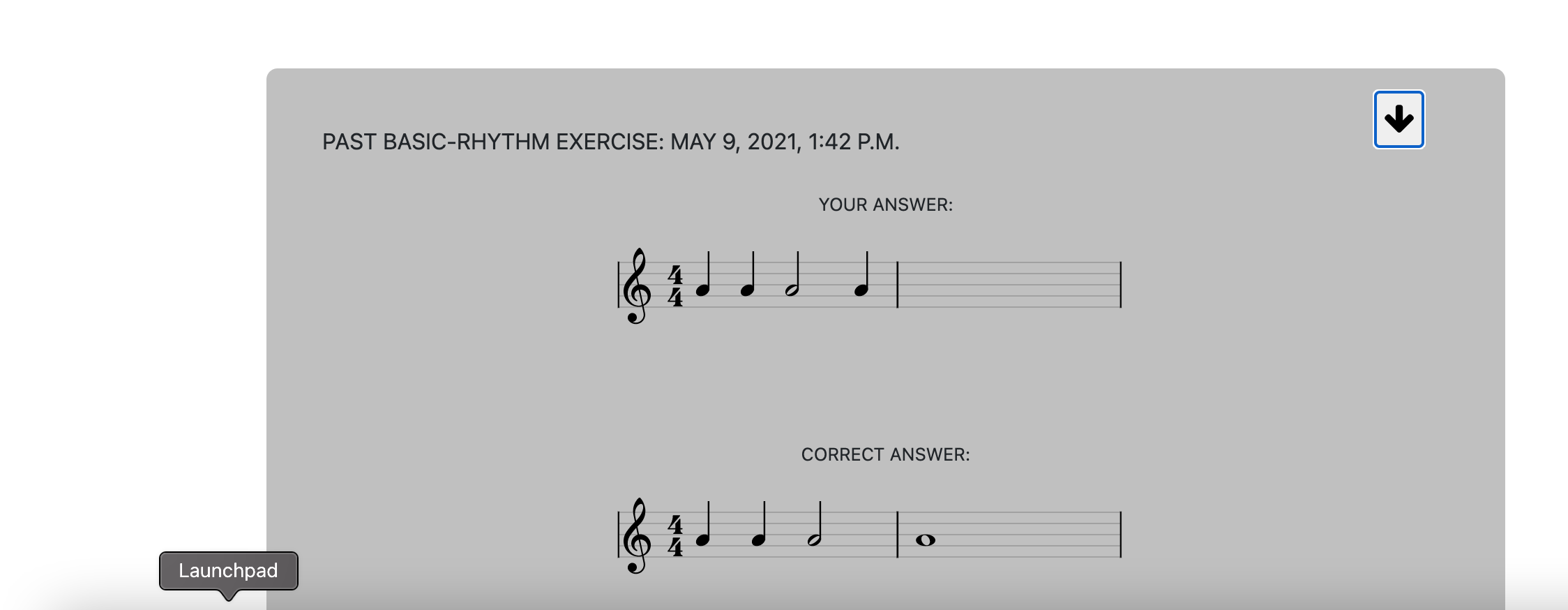

Below are pictures of all the final feedback displays that I worked on:

We have also integrated all the parts together and now have a fully functioning application. The last step is fixing the deployment bug. The biggest issue is that we can't pinpoint where the bug is coming from; my fear is that it may be caused by one of the APIs we are using, so I am reading more about how to handle that and hope to have it fixed by the demo.

The last things to accomplish are deployment and adding more styling to our pages, so I will be cleaning up the resources and theory pages, and that should be all.

Sai’s Status Report for 5/8/2021

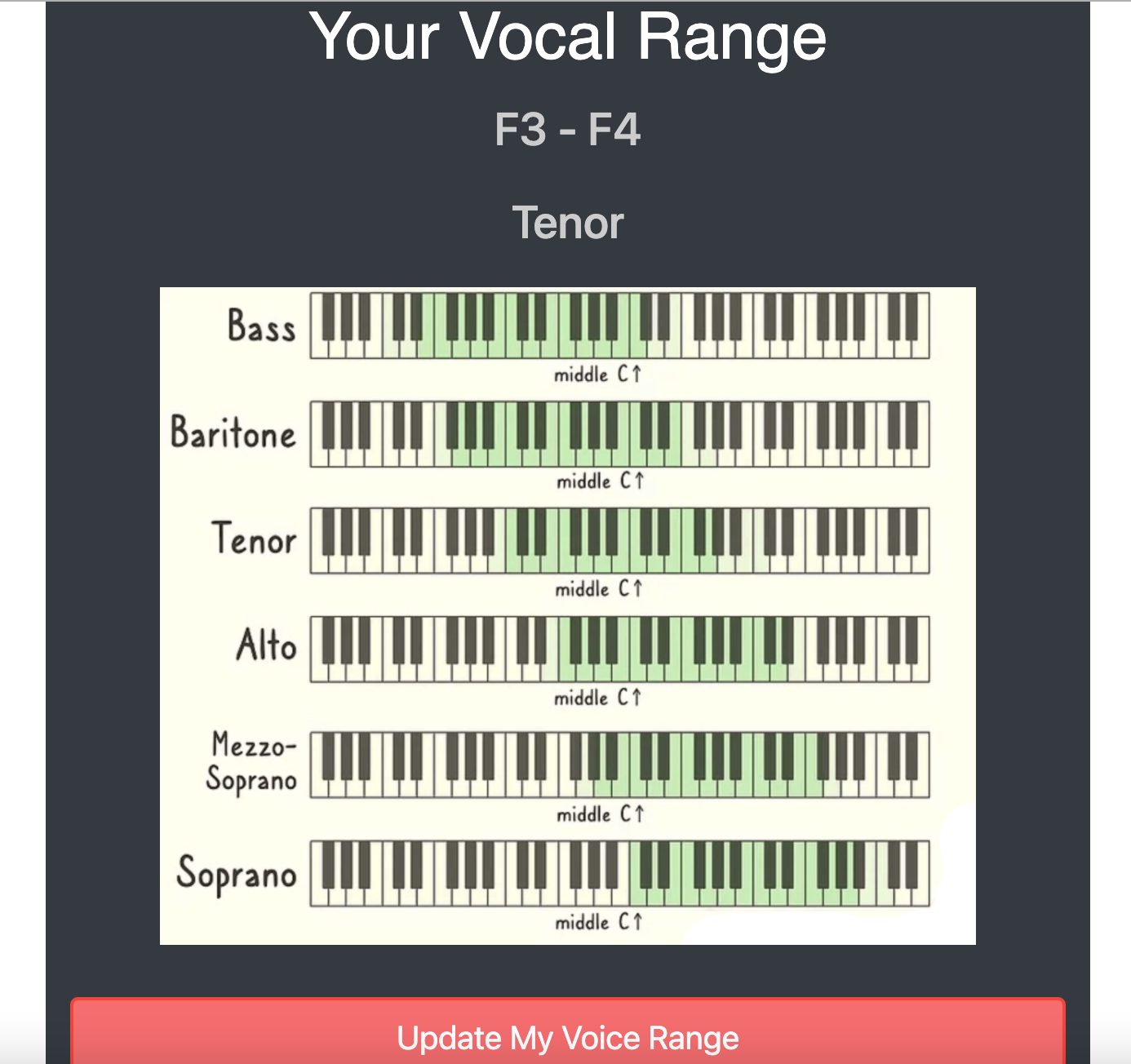

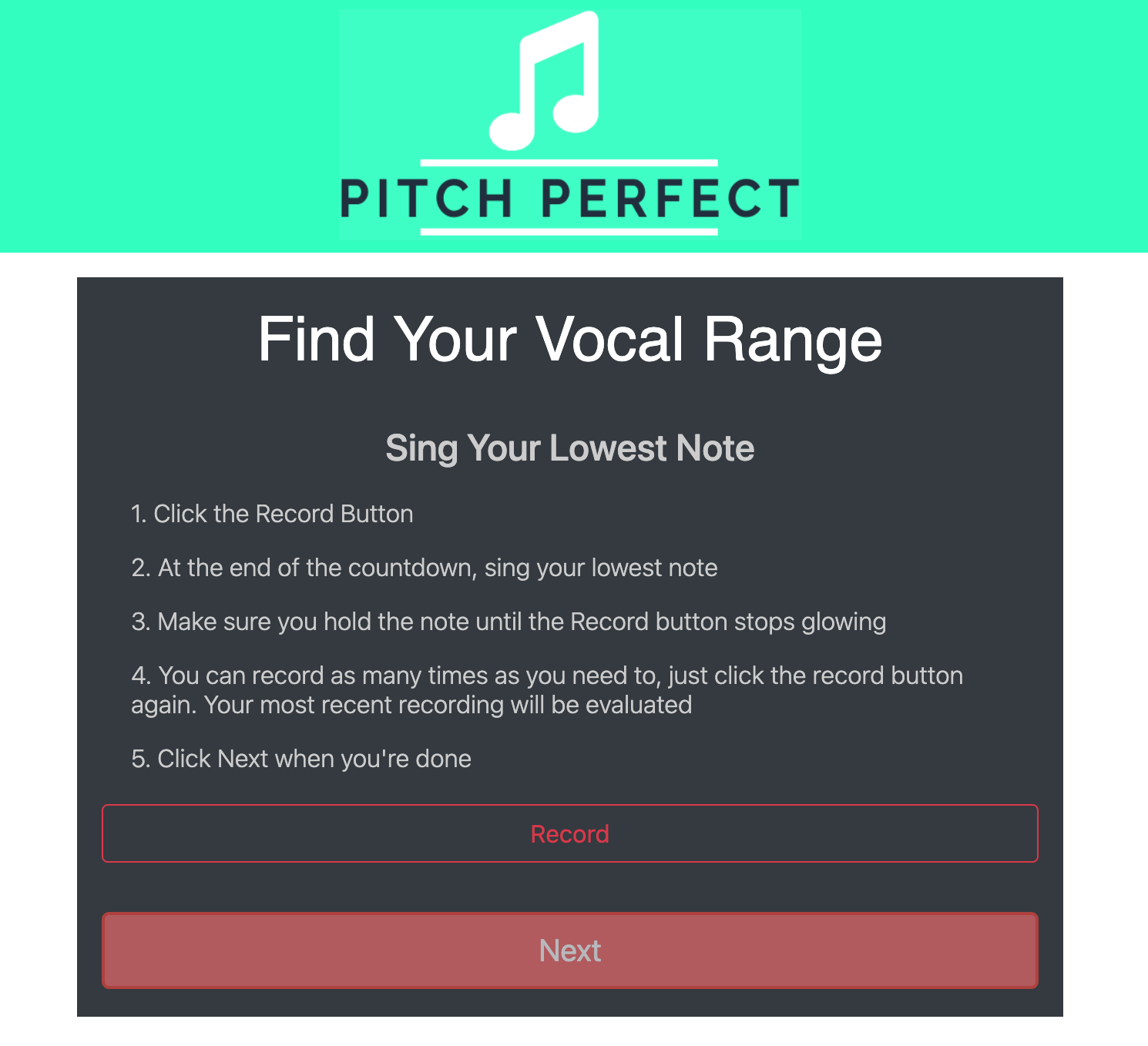

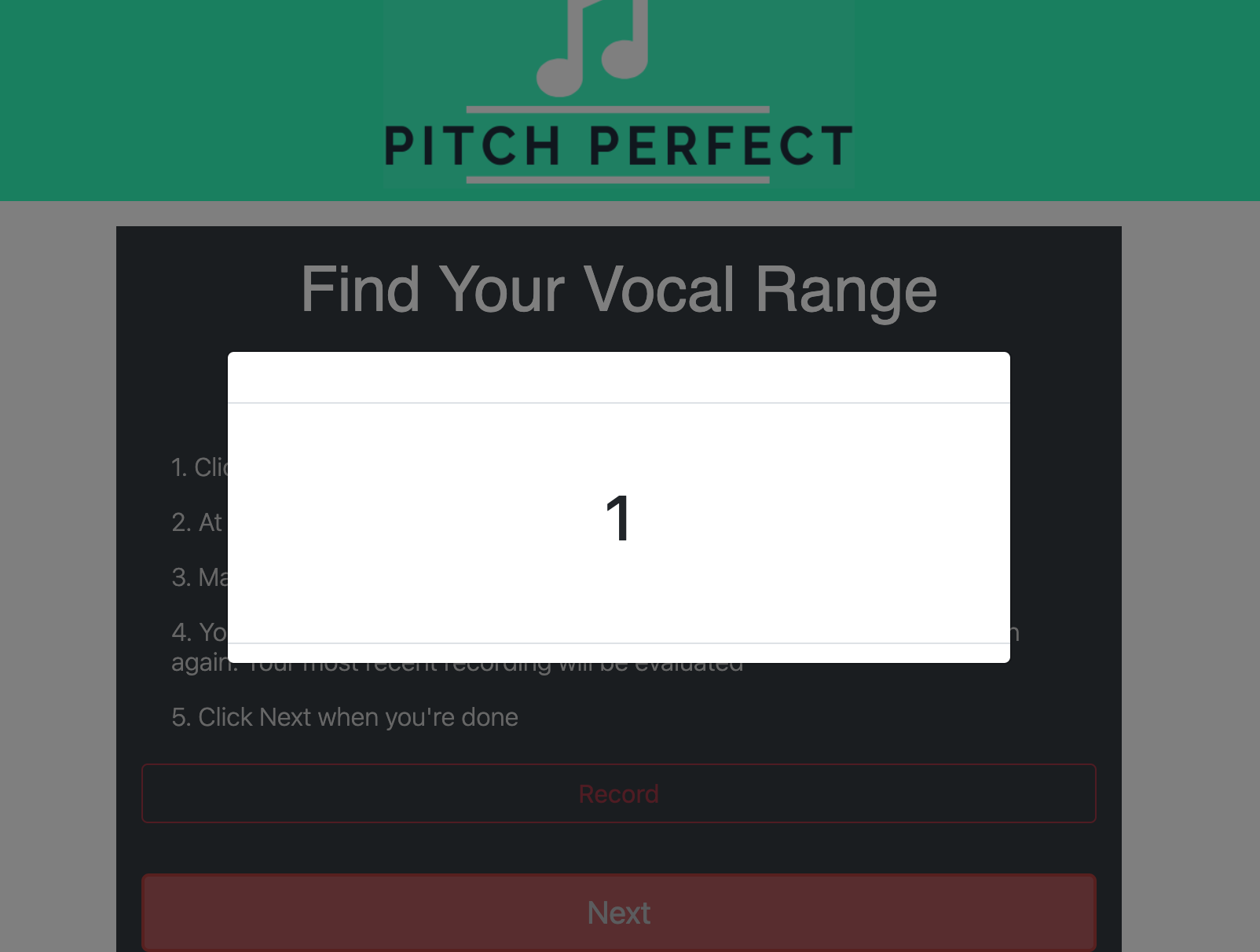

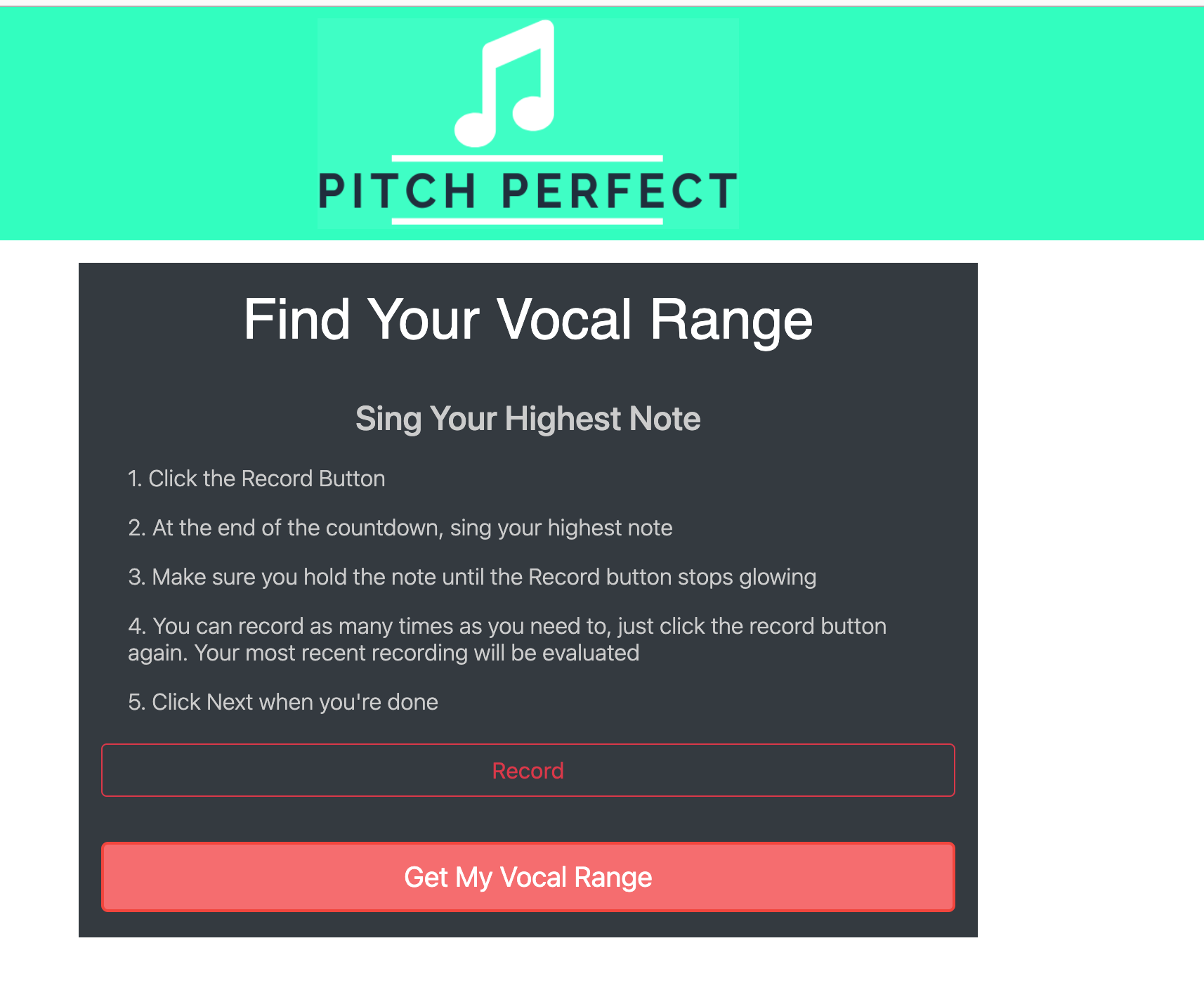

This past week, I integrated Carlos’ pitch detection and feedback algorithm with the user interface. I used the metrics from the algorithm to build the voice range evaluation: the algorithm outputs a note range, and I wrote code that maps that note range to a voice range category (“Soprano”, “Alto”, “Bass”, etc.) to show to the user. I also wrote code that uses this category to generate pitch lessons and to send expectancy metrics to the pitch feedback, so that each user gets a custom lesson and evaluation based on their voice range. I designed the web app so that users can update their voice range at any time. I used the metrics from the pitch detection/feedback algorithm to show users how sharp or flat they sang and how well they held each note, using charts from the Chart.js library. The pictures of this feedback can be found in the team status report. I’ve attached a picture of the example voice range feedback I got for my own voice.

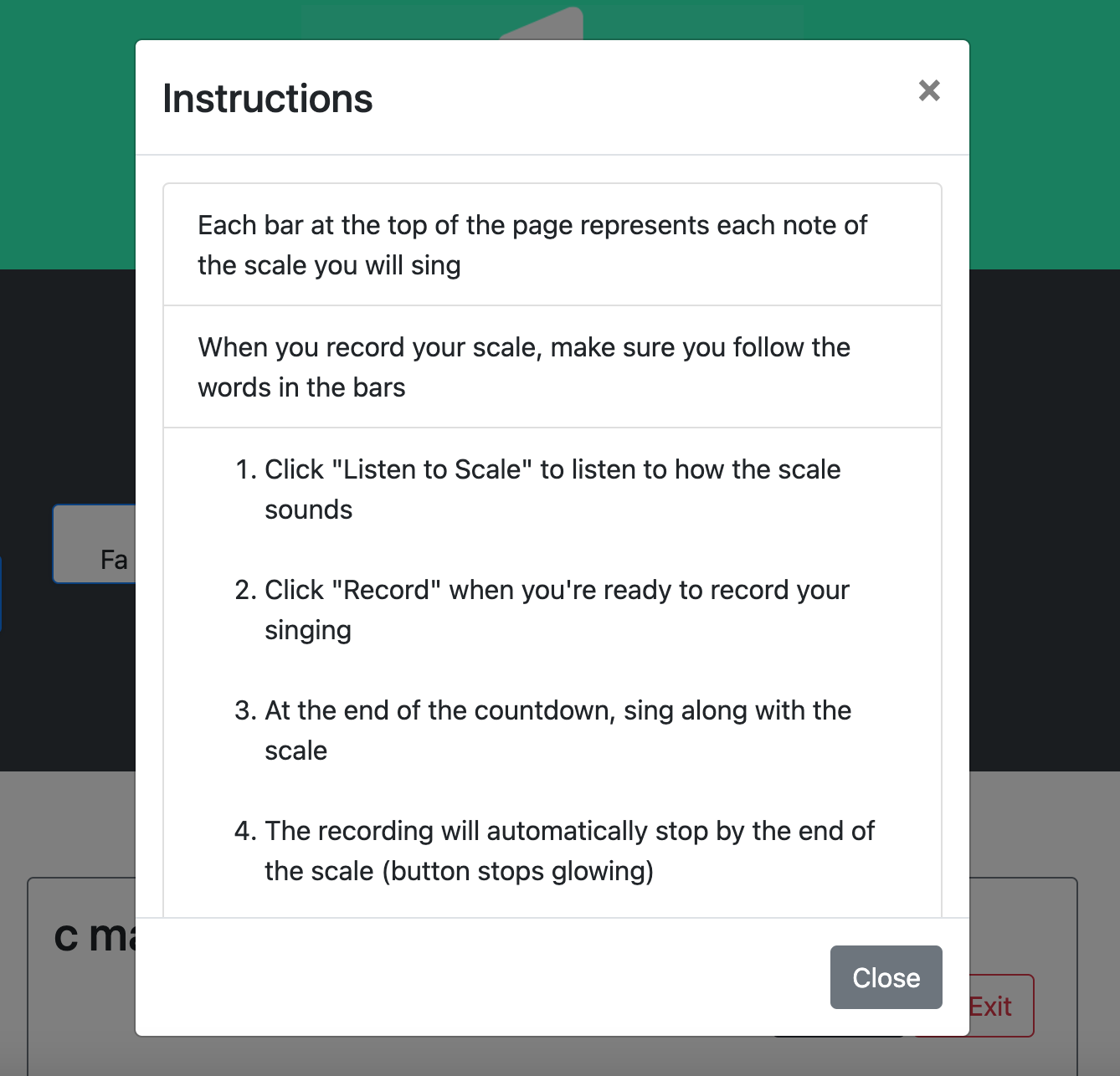
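The mapping from a measured note range to a voice category could look something like the sketch below. The range table is a hypothetical one based on commonly cited approximate ranges for each voice type, and the nearest-midpoint rule is just one simple way to choose a category; the web app's actual boundaries and categories may differ.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Hypothetical table of approximate voice-type ranges as (lowest, highest) MIDI notes.
VOICE_RANGES = {
    "Soprano": (60, 84),        # C4-C6
    "Mezzo-soprano": (57, 81),  # A3-A5
    "Alto": (53, 77),           # F3-F5
    "Tenor": (48, 72),          # C3-C5
    "Baritone": (45, 69),       # A2-A4
    "Bass": (40, 64),           # E2-E4
}


def midi_to_name(midi):
    """MIDI number to scientific pitch name, e.g. 60 -> 'C4'."""
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


def classify_voice(lowest, highest):
    """Pick the voice type whose range midpoint is closest to the user's midpoint."""
    user_mid = (lowest + highest) / 2
    return min(
        VOICE_RANGES,
        key=lambda name: abs(sum(VOICE_RANGES[name]) / 2 - user_mid),
    )
```

For example, a user whose lowest and highest recorded tones are F2 (41) and D4 (62) would be classified as "Bass". Comparing midpoints rather than requiring an exact fit is forgiving of beginners, whose measured range is often narrower than a trained singer's.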

Based on the feedback from last week's user experience surveys, I modified the pitch exercise so that it now has pop-up instructions and more navigation buttons (exit buttons, back buttons, etc.).

The pitch detection algorithm can return empty metrics if the recording is flawed, so for both the pitch exercises and the voice range evaluation, I added a check that displays an error message asking the user to record again whenever the detection algorithm detects a faulty recording. This was the best alternative to the page simply crashing.
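A minimal sketch of this guard, assuming (hypothetically) that the detection step returns a dictionary whose `"notes"` entry is empty or missing when the recording is flawed. The names `build_pitch_feedback` and `FAULTY_RECORDING_MESSAGE` are illustrative, not taken from our codebase.

```python
FAULTY_RECORDING_MESSAGE = (
    "We couldn't detect a clear voice in your recording. Please try recording again."
)


def build_pitch_feedback(metrics):
    """Turn detection metrics into a response, falling back to an error for bad recordings.

    An empty or missing result is treated as a faulty recording, so the page
    shows a retry message instead of crashing on missing data.
    """
    if not metrics or not metrics.get("notes"):
        return {"ok": False, "error": FAULTY_RECORDING_MESSAGE}
    return {"ok": True, "notes": metrics["notes"]}
```

The template can then branch on `"ok"` and render either the charts or the retry message.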

I’ve actually been able to accomplish everything I’ve wanted to put into the web application, and am on schedule. Right now I am just hoping deployment works out.

For the upcoming week, I’ll keep cleaning up and refining the user interface, adding more instructions and navigation for the exercises, and working on the welcome page.

Team Status Report For 5/8/21

This past week, our team successfully integrated the user interface with the pitch detection and clap detection algorithms, and the web application now displays and stores pitch and rhythm feedback for a user’s audio recordings. Therefore, we no longer have any integration risks. The only risk we face before this upcoming Monday is not being able to deploy our web application on the EC2 instance. We tried to deploy more than once on the EC2 instance using Amazon S3 for storage; however, the web application is unresponsive at the public IP address on the HTTP/HTTPS ports. We are still trying to make this deployment work, but our risk mitigation is to deploy on port 8000, since that seems to load the web application on the EC2 instance. However, we also need to use SSL for this option in order to get the audio recording functionality working, since browsers only allow microphone access from secure (HTTPS) pages. Another risk mitigation will be to use SQL for database storage instead of S3, since S3 with the EC2 instance is not as straightforward to deploy as SQL. This could mean a design change for our product, although no other parts of the design would be affected; the database storage is the only thing that would be replaced. Until Monday, we will be attempting to get deployment to work, working on the poster and the demo video, and making some user interface refinements. Our schedule remains the same. Below are some pictures of the integrated feedback for the exercises.

Sai’s Status Report for 5/1/21

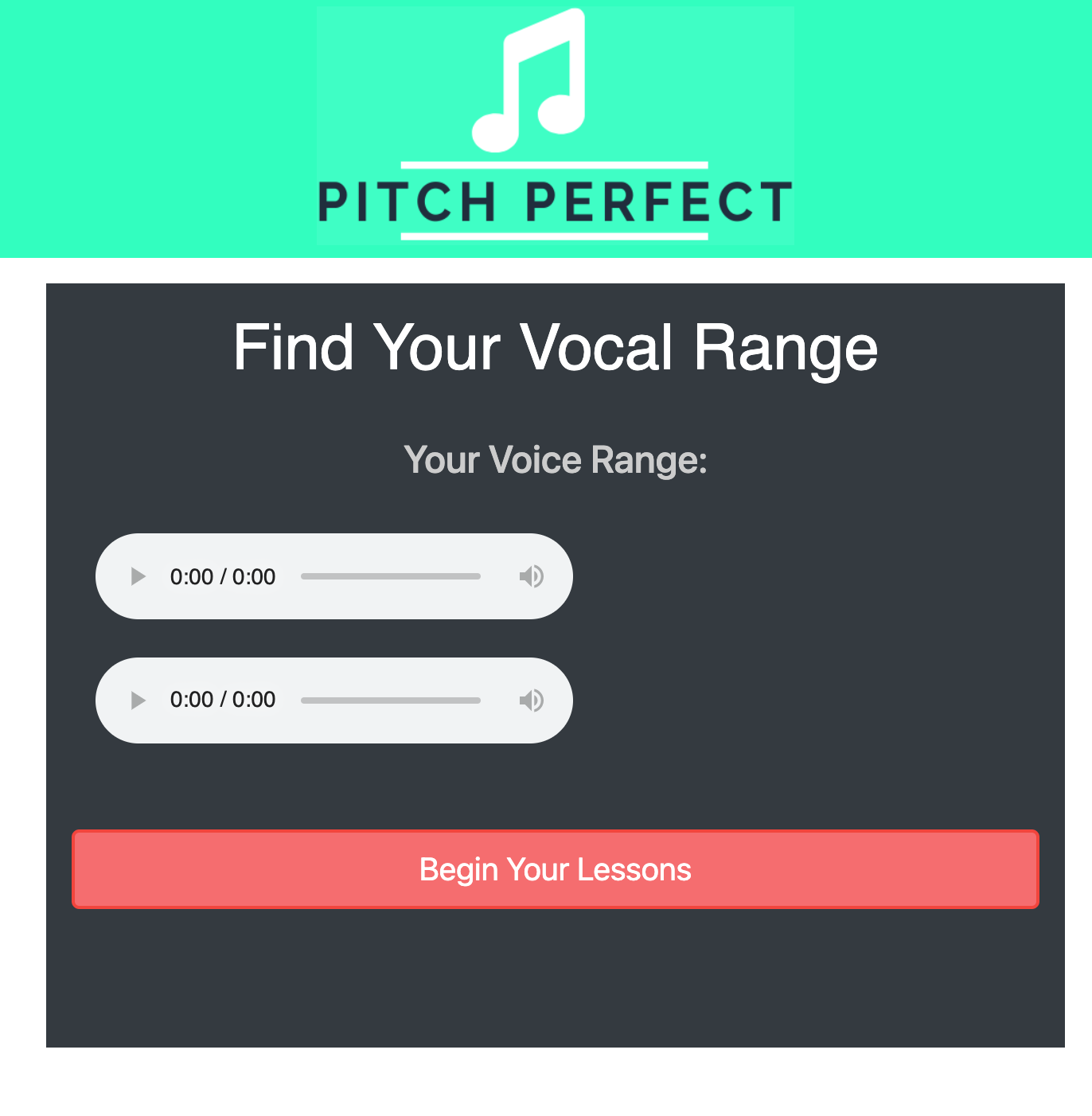

This week, I started on the voice range evaluation component of the web app. This was a bit tricky because I wanted to reuse the recording code I already had for the pitch lessons, but that code did not scale well to the voice range. So a good amount of time went into making that code reusable for the voice range evaluation as well as the rhythm exercises. The voice range evaluation frontend, with its recording capability, is complete; however, it still needs to be integrated with the audio processing component, which maps the audio files to a voice range to output to the user. Funmbi and I were able to integrate both of our parts of the web app. We integrated the VexFlow music notes and the play-rhythm + recording functionality to complete the clapping exercises. We also worked on the dashboard that brings all the different components together and links them. The dashboard picture can be found in the team status report. For the upcoming week, I’ll be working with Funmbi on deploying what we have so far, since it might be a bit tricky with the audio files, external APIs, and packages we are using. I will also try to finish the voice range exercise and integrate it with Carlos’s audio processing algorithms so that I can actually display feedback to users for the pitch lessons, rhythm lessons, and voice range evaluation. There’s still a good amount of work to do, so I feel that I may be behind, but hopefully the integration won’t take too long. I’ve attached photos of the voice range evaluation run-through.

Carlos’s Status Report for 5/1/2021

This week I’ve been applying the final set of post-processing steps to the pitch and clap detection algorithms and have continued testing both. Our pitch exercises, which are based on solfege, never jump by more than an octave. We leverage this fact to filter out unreasonable pitch measurements, or pitch measurements that are not the target of the exercise, such as those generated by fricatives. I have also been working on extracting metrics from pitch exercises to evaluate a user’s performance. This task proved much more difficult than anticipated for many reasons, but most importantly, we were initially thinking about grading users with respect to absolute pitch instead of relative pitch. When implementing the evaluation metric, we decided it would be too harsh a constraint to require singers to hit an exact pitch, so we switched to judging their performance against a transposition of the original scale, defined by the user’s first note.
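One way to implement both ideas is sketched below, assuming pitch frames are fractional MIDI numbers with `None` for unvoiced frames. Measuring each frame's distance from the recording's median pitch is our assumption about how the octave constraint could be applied; the report only states that jumps larger than an octave are filtered out. The function names are hypothetical.

```python
from statistics import median

OCTAVE = 12.0  # semitones


def filter_pitch_frames(frames_midi, max_distance=OCTAVE):
    """Drop unvoiced frames and pitch estimates implausibly far from the sung melody.

    Because no exercise jumps by more than an octave, a frame more than
    `max_distance` semitones from the median pitch is treated as an outlier
    (e.g. noise from a fricative) and discarded.
    """
    voiced = [p for p in frames_midi if p is not None]
    if not voiced:
        return []
    center = median(voiced)
    return [p for p in voiced if abs(p - center) <= max_distance]


def translate_to_first_note(expected, first_sung):
    """Shift the exercise so that its first note matches the user's first sung note."""
    shift = first_sung - expected[0]
    return [e + shift for e in expected]
```

With frames `[60, 62, None, 64, 95, 20]`, the median of the voiced frames is 62, so the outliers 95 and 20 are removed and `[60, 62, 64]` remains. The median is used as the reference because a few wild estimates barely move it.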

Next week, we will be integrating the detection algorithms with the front end, so we will hopefully be able to get feedback from users on the functionality of our application, which can drive a final set of changes if necessary.

Team Status Report for 5/1/2021

As the semester comes to an end, we are continuing to integrate our subsystems and test as we go. We have been integrating our front-end for the different exercises and creating dashboards to link them.

As of now, our most significant risks are successful web deployment and frontend/backend integration. The functionality of much of our code depends on the use of external libraries, so it is critical that in deploying our code we are able to include these packages. To mitigate this, we will start deployment on Monday when the front-end is almost entirely integrated, and test to see if there are any package import issues. Worst case scenario, we may have to run our website locally, but we are confident that we will be able to deploy in the time we have left.

As for frontend/backend integration, we are starting to integrate the exercises with their corresponding backend code. Ideally, we would have started this earlier, but there were some edge cases that needed to be resolved in extracting metrics for pitch exercise evaluation. We will continue to integrate and test the disjoint subsystems, hopefully with enough time to get user feedback on the functionality of our app and its usefulness as a vocal coach.

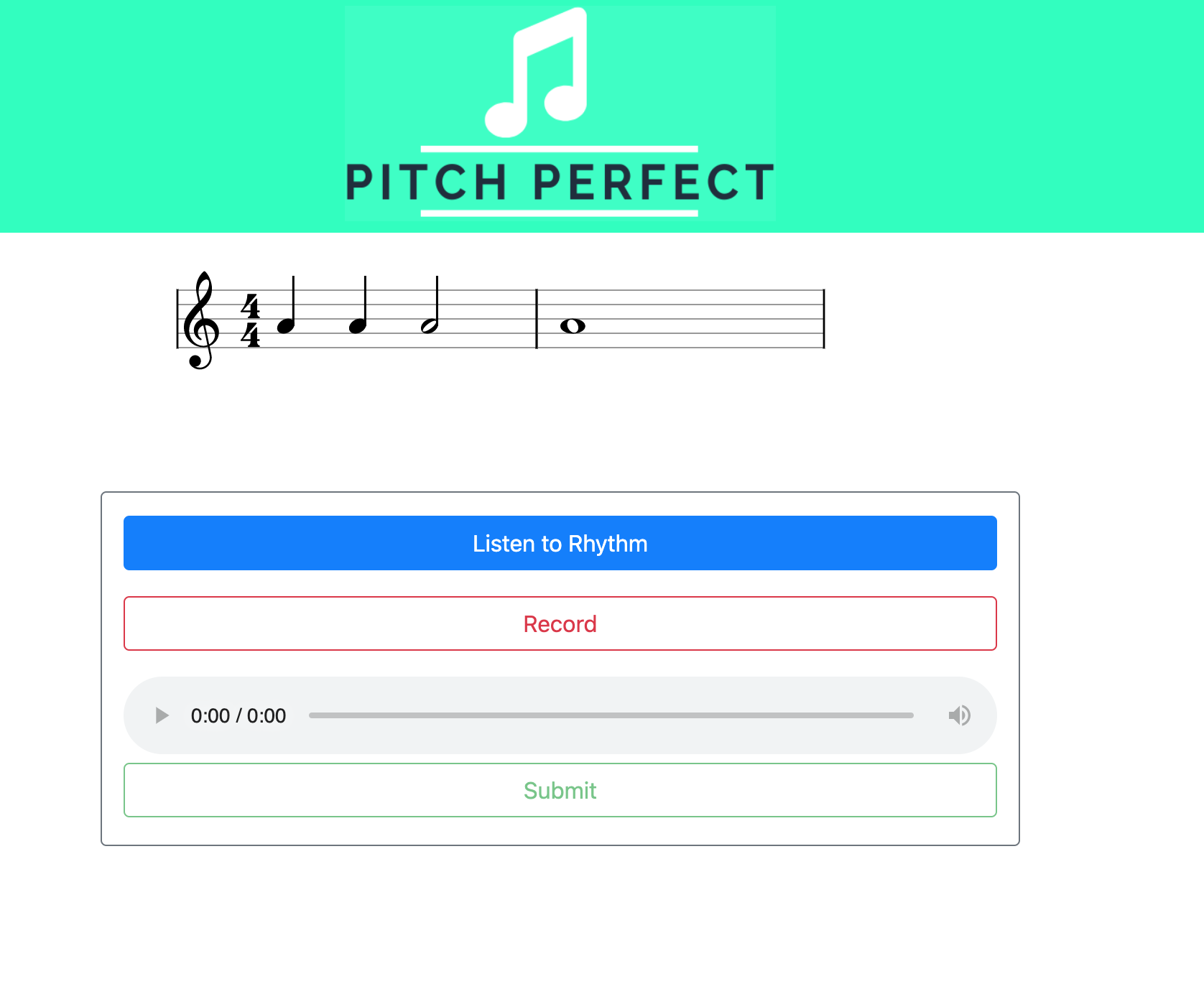

Photos of the web app’s dashboard and the rhythm exercise can be seen below:

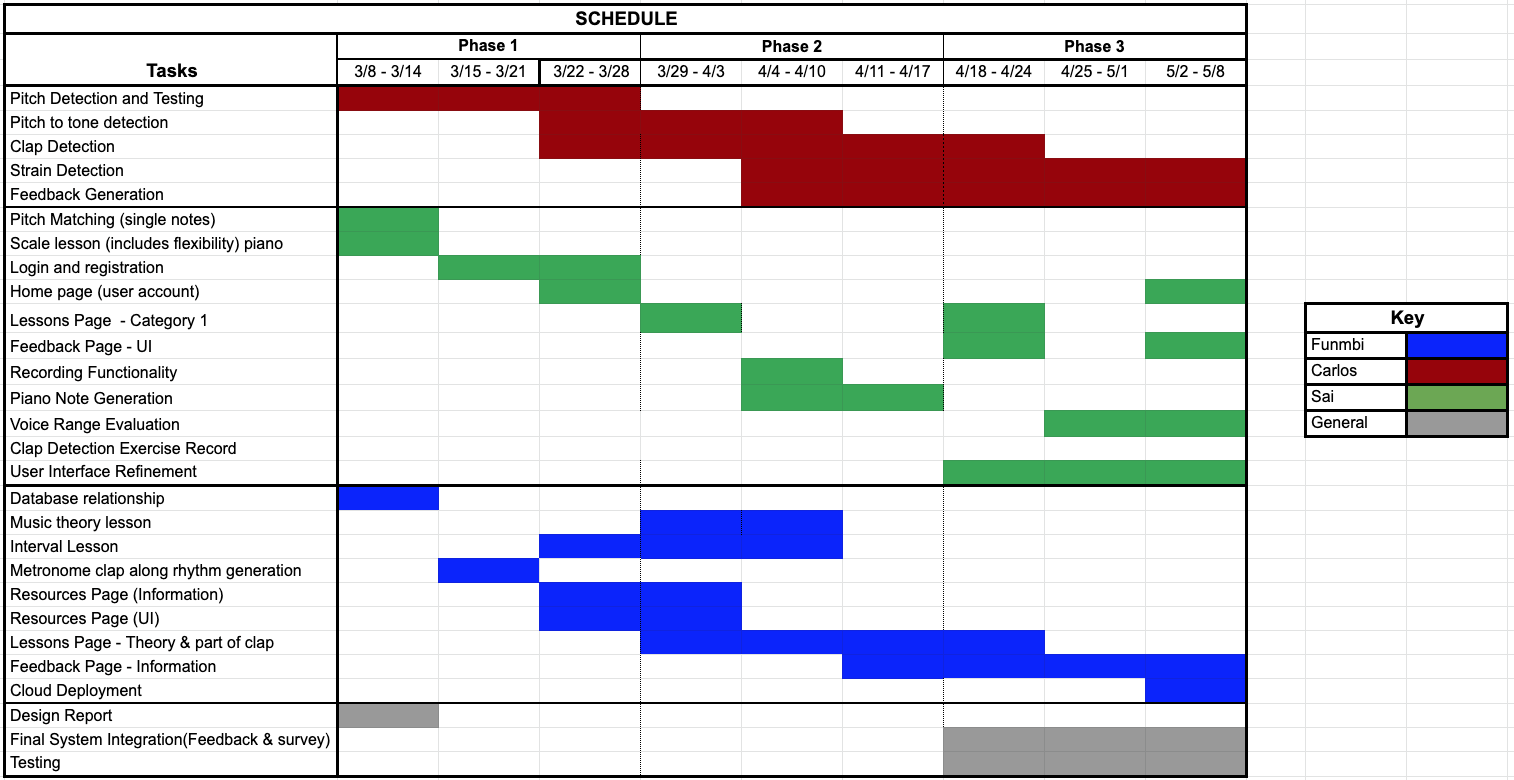

We also uploaded our updated schedule:

Funmbi’s Status Report: 5/1/2021

This week, I completed the integration of my files with one of my partner’s files, so right now most, if not all, of our frontend is complete, with minor changes still to be implemented. My final task was creating the rhythm exercise using the VexFlow API and integrating it with the rest of the application, which I was able to finish. There are a few pictures below to represent what we have so far.

Sai’s Status Report for 4/24/2021

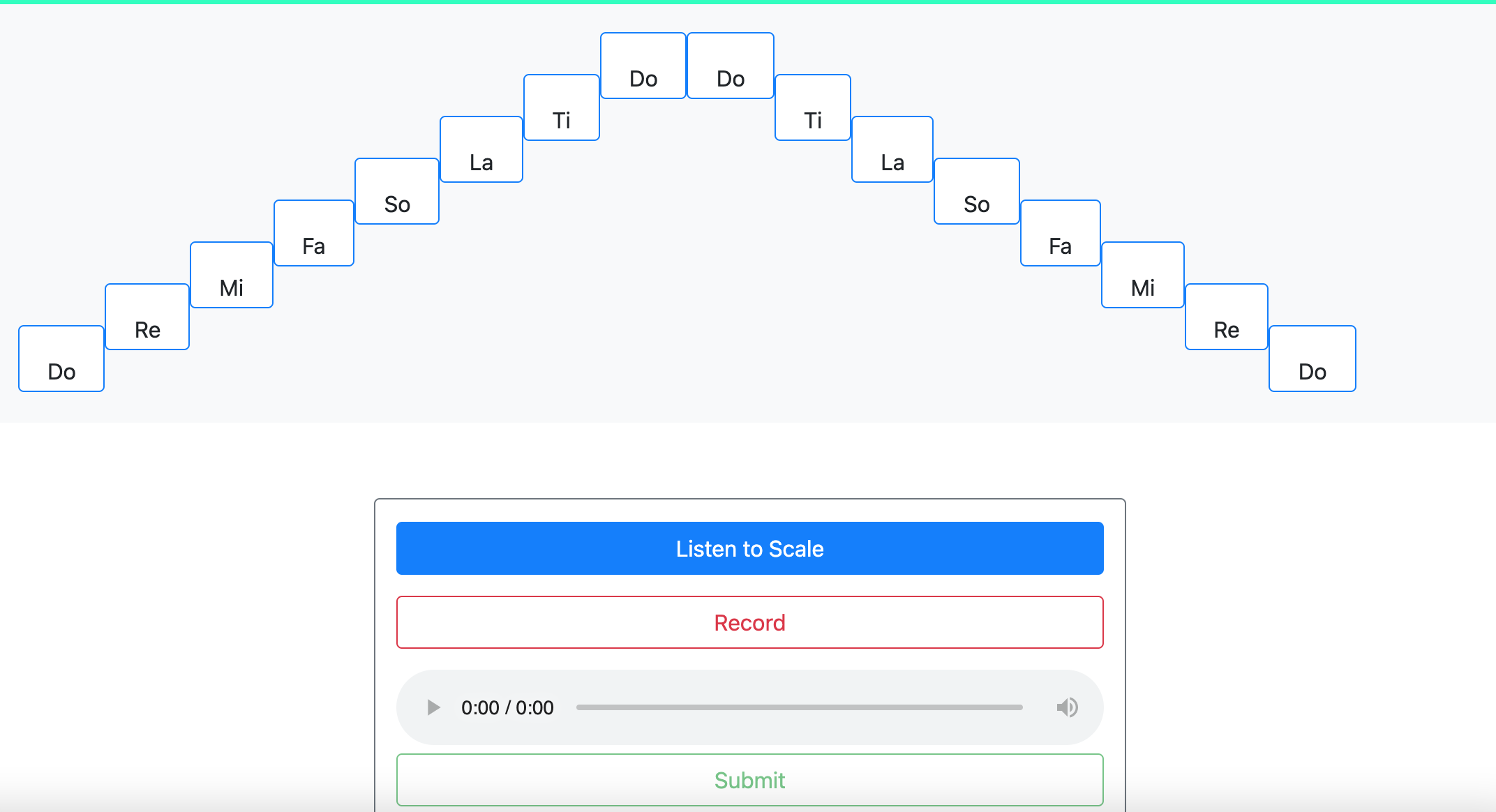

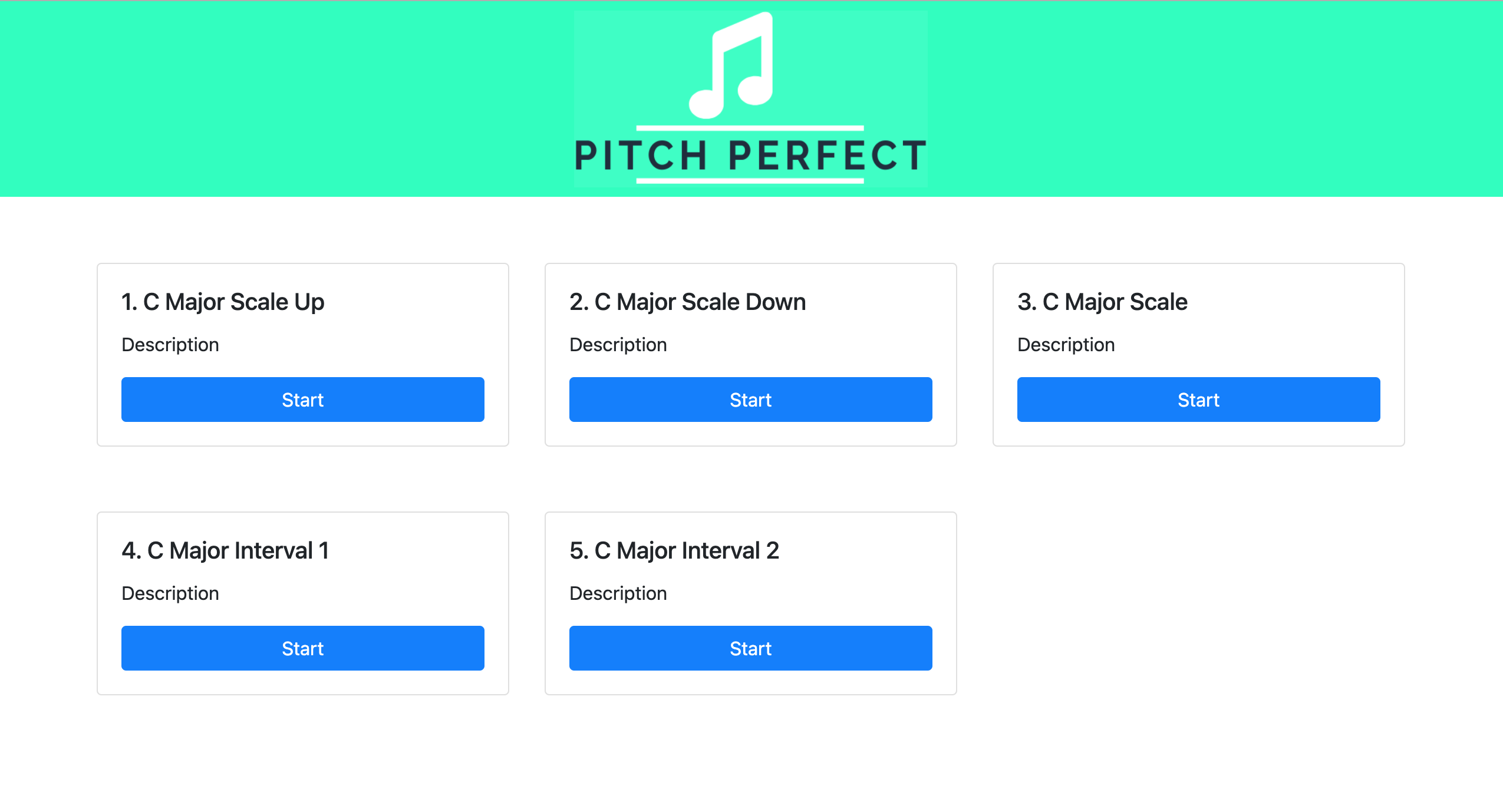

This week, there were some file formatting issues with the recorded audio files submitted through the web application. I found out that the API I’m using to record a user’s audio input in the browser doesn’t produce clean .wav files that can be opened or processed on the backend, but it does produce clean .webm files. I found an ffmpeg package that I can call on the server to convert .webm files to .wav files; this has worked, and it produces clean .wav files. We also made some design changes to our pitch exercises: there is now a countdown when you press the record button, and we wanted to add breaks between notes when listening to how the scale/interval sounds via piano notes. I had to change the algorithm that produces the sequence of piano notes and displays/animates the solfege visuals. There is now a dashboard/list of some of the different pitch scale/interval exercises, and new exercises can be easily added by just adding a model with its sequence of notes, durations, and solfege. I also spent some time refining the solfege animation/display, since some edge cases came up when adding more pitch exercises. This upcoming week, I want to make the solfege display more refined using some Bootstrap features. Another minor edit I made was adding a logo for the web app and making the title bar more engaging and bright. I feel like I’m behind schedule, since I thought integration would happen this past week, but we will spend a lot more time on this project together to make sure the integration gets done before next week.
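As a rough sketch, the conversion can be done by invoking the ffmpeg command-line tool from the server, assuming ffmpeg is installed and on the PATH. The report does not say which ffmpeg package was used, so this version calls the CLI directly through `subprocess`; the mono downmix and 44.1 kHz sample rate are assumptions about what the pitch detector expects, and the function names are hypothetical.

```python
import subprocess
from pathlib import Path


def ffmpeg_webm_to_wav_cmd(src, dst, sample_rate=44100):
    """Build an ffmpeg command that decodes a .webm recording into a mono PCM .wav file."""
    return [
        "ffmpeg",
        "-y",                     # overwrite dst if it already exists
        "-loglevel", "error",     # only print errors
        "-i", str(src),           # input .webm from the browser
        "-ac", "1",               # downmix to one channel
        "-ar", str(sample_rate),  # resample to a fixed rate
        str(dst),                 # the .wav extension selects the WAV container
    ]


def convert_webm_to_wav(src):
    """Convert `src` (a .webm path) to a .wav file next to it and return the new path."""
    src = Path(src)
    dst = src.with_suffix(".wav")
    # check=True raises CalledProcessError if ffmpeg fails, e.g. on a truncated upload.
    subprocess.run(ffmpeg_webm_to_wav_cmd(src, dst), check=True)
    return dst
```

Keeping the command construction separate from the `subprocess.run` call makes it easy to inspect or log the exact command when a conversion fails on the server.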

For the upcoming week, I’ll be working on creating the voice range evaluation that will be part of the registration process, integrating with Funmbi’s features, and working with her to create the web app’s main dashboard. I will also integrate my code with Carlos’ pitch detection algorithm to receive feedback from the audio recordings. I will also try to plan out what the user experience survey questions/prompts will look like.

Below are some pictures of the new exercise format and the pitch exercise dashboard.

Carlos’s Status Report for 4/24/2021

These past two weeks, I’ve been testing my pitch-to-note mapping and clap detection algorithms. In testing the pitch-to-note mapping, I came across several potential issues that I had not considered before implementation. For one, I observed that when some singers attempt to hold a note, their pitch can drift significantly, in some cases by more than … cents. This pitch drift introduces tone ambiguity, which can drastically affect classification. Since this app is targeted at beginners, we expect a lot of pitch drift, so this is a case we have to prioritize.
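Pitch drift is easiest to reason about in cents, where the interval from frequency f1 to f2 is 1200·log2(f2/f1) and 100 cents make one equal-tempered semitone. The sketch below, assuming per-frame pitch estimates in Hz for a single held note, measures the drift and assigns the note from the median frame; using the median is our illustrative choice for reducing tone ambiguity, not necessarily the approach in the app, and the function names are hypothetical.

```python
import math
from statistics import median


def cents_between(f1_hz, f2_hz):
    """Signed interval from f1 to f2 in cents (100 cents = one equal-tempered semitone)."""
    return 1200.0 * math.log2(f2_hz / f1_hz)


def note_drift_cents(frames_hz):
    """Spread between the lowest and highest pitch while a note is held, in cents."""
    return cents_between(min(frames_hz), max(frames_hz))


def classify_held_note(frames_hz):
    """Assign a held note to the nearest MIDI note using the median frame,
    which is less affected by a few extreme frames than the mean."""
    center = median(frames_hz)
    return round(69 + 12 * math.log2(center / 440.0))
```

A drift of more than 100 cents means the held note crosses into a neighboring semitone, which is exactly where a frame-by-frame classifier starts flip-flopping between two notes.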
