This week, I originally planned to implement and start testing the clap detection algorithm, but instead I started testing the YIN pitch detection algorithm (PDA), using an implementation I found online. I have implemented a pitch detection algorithm before with a cepstrum-based approach, so I have some familiarity with this class of algorithms and the problems that commonly arise in pitch detection.
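For reference, the core per-frame steps of YIN (de Cheveigné and Kawahara, 2002) can be sketched in plain Python: a difference function, its cumulative mean normalization, an absolute threshold, and parabolic interpolation. This is not the third-party implementation being tested. The function name, the 60 to 500 Hz search range, and the 0.1 threshold are assumptions chosen for singing voice, and the sketch skips the paper's final "best local estimate" step.

```python
import math


def yin_pitch(frame, sample_rate, fmin=60.0, fmax=500.0, threshold=0.1):
    """Estimate the f0 (Hz) of one frame with the core YIN steps.

    Returns None when no lag dips below `threshold` (frame treated as unvoiced).
    fmin, fmax and threshold are assumed defaults, not values from the source.
    """
    tau_min = max(1, int(sample_rate / fmax))
    tau_max = int(sample_rate / fmin)
    window = len(frame) - tau_max  # integration window W
    if window <= 0:
        raise ValueError("frame is too short for the requested fmin")

    # Difference function: d(tau) = sum_j (x[j] - x[j + tau])^2
    diff = [0.0] * (tau_max + 1)
    for tau in range(1, tau_max + 1):
        diff[tau] = sum((frame[j] - frame[j + tau]) ** 2 for j in range(window))

    # Cumulative mean normalized difference: d'(tau) = d(tau) * tau / sum_{k<=tau} d(k)
    cmnd = [1.0] * (tau_max + 1)
    running = 0.0
    for tau in range(1, tau_max + 1):
        running += diff[tau]
        cmnd[tau] = diff[tau] * tau / running if running > 0 else 1.0

    # Absolute threshold: first lag below the threshold, then slide to the
    # bottom of that dip.
    tau = tau_min
    while tau < tau_max and cmnd[tau] >= threshold:
        tau += 1
    if tau >= tau_max:
        return None
    while tau + 1 < tau_max and cmnd[tau + 1] < cmnd[tau]:
        tau += 1

    # Parabolic interpolation around the chosen lag for sub-sample precision.
    a, b, c = cmnd[tau - 1], cmnd[tau], cmnd[tau + 1]
    denom = a - 2 * b + c
    shift = 0.5 * (a - c) / denom if denom != 0 else 0.0
    return sample_rate / (tau + shift)
```

A full pitch tracker would slide this over overlapping frames and keep the `None` frames as unvoiced gaps in the contour.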

In my previous implementation, though, the intensity of the pitch was an output parameter, whereas the third-party implementation does not report it. I believe this is because of the several post-processing steps that follow the extraction of raw pitch contours with the autocorrelation method. I will, however, need this intensity value to determine how loudly a singer can carry a given note, so it is crucial that I implement an intensity detector myself. To calculate intensity, I will use the method described in ‘Speech signal processing by Praat’.
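As a rough stand-in, frame-wise intensity can be sketched as mean-square energy converted to decibels. This is not Praat's algorithm: Praat's intensity analysis applies a smooth analysis window whose length is tied to a minimum-pitch setting, whereas this sketch uses plain rectangular frames. The 40 ms frame, 10 ms hop, and -100 dB floor are assumptions. The 20 µPa reference only yields true sound pressure levels if the samples are calibrated in pascals; for uncalibrated microphone input the values are still useful for comparing loudness between notes.

```python
import math

P_REF = 2e-5  # 20 µPa, the usual reference for sound pressure level


def intensity_db(samples, sample_rate, frame_sec=0.04, hop_sec=0.01, floor_db=-100.0):
    """Frame-wise intensity in dB re 20 µPa, assuming samples are in pascals.

    Simplified sketch: rectangular frames, not Praat's windowed analysis.
    """
    frame = round(frame_sec * sample_rate)
    hop = round(hop_sec * sample_rate)
    out = []
    for start in range(0, len(samples) - frame + 1, hop):
        seg = samples[start:start + frame]
        mean_square = sum(x * x for x in seg) / frame
        # Silent frames have no finite dB value, so clamp them to a floor.
        out.append(10 * math.log10(mean_square / P_REF ** 2) if mean_square > 0 else floor_db)
    return out
```

A sine with a 1 Pa peak amplitude has a mean square of 0.5 Pa², which this function reports as roughly 91 dB per frame.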

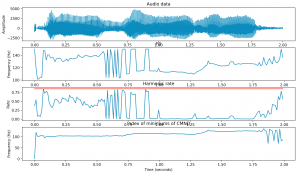

I tested the PDA on a valiant effort of me “singing” do-re-mi as C3-D3-E3, which are about 130 Hz, 146 Hz, and 164 Hz respectively. I did not sing it very well: my pitch readings were 103 Hz, 112 Hz, and 124 Hz, which correspond roughly to G#2, A2, and B2. Here is a diagram of my pitch as output by the YIN PDA:
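The note names above follow from the standard equal-temperament mapping n = 69 + 12·log2(f / 440 Hz), where n is the MIDI note number (69 is A4, 48 is C3). A small helper with a hypothetical name can report the nearest note and the offset in cents, which also shows how far each sung note landed from its target:

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def hz_to_note(freq_hz, a4_hz=440.0):
    """Return (nearest note name, offset in cents) in 12-tone equal temperament."""
    midi = 69 + 12 * math.log2(freq_hz / a4_hz)  # fractional MIDI note number
    nearest = round(midi)
    cents = 100 * (midi - nearest)  # 100 cents per semitone
    octave = nearest // 12 - 1  # MIDI 60 is C4, so 48 is C3
    return f"{NOTE_NAMES[nearest % 12]}{octave}", cents


for target, sung in [(130.81, 103), (146.83, 112), (164.81, 124)]:
    name, cents = hz_to_note(sung)
    gap = 1200 * math.log2(sung / target)  # negative means flat of the target
    print(f"target {hz_to_note(target)[0]}: sang {sung} Hz = {name} "
          f"{cents:+.0f} cents, {gap:+.0f} cents from target")
```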

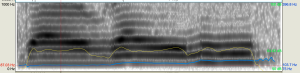

To confirm that the pitch readings were accurate and that my singing was actually at fault, I cross-validated them against the pitch detector in Praat, which you can see here:

After this, I decided to stick to testing with pure tones for now. I’m also looking for datasets of singing with labeled notes, which would allow more interesting simulations. I hope to finish verifying the PDA by the end of next week and then start on the intensity and clap detectors. Before that, though, I have to complete my portions of the design report, which is due Wednesday night.
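Pure-tone testing could be automated along these lines: synthesize a tone at each target frequency, run a detector on it, and measure the error in cents, 1200·log2(estimate / reference). The same `cents_error` helper could also compare a YIN track frame by frame against Praat's output. All names here are hypothetical, the zero-crossing estimator is a deliberately naive stand-in that only works on clean sinusoids, and the 50-cent tolerance (half a semitone) is an assumed pass threshold.

```python
import math


def pure_tone(freq_hz, sample_rate=16000, duration_sec=0.5, amplitude=0.5):
    """Synthesize a sine wave at a known frequency."""
    n = round(duration_sec * sample_rate)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def cents_error(estimate_hz, reference_hz):
    """Signed pitch error in cents (100 cents = 1 semitone)."""
    return 1200 * math.log2(estimate_hz / reference_hz)


def zero_crossing_pitch(samples, sample_rate):
    """Naive stand-in detector: only valid for a clean single sinusoid."""
    crossings = []
    for i in range(1, len(samples)):
        a, b = samples[i - 1], samples[i]
        if a < 0 <= b:  # upward zero crossing between samples i-1 and i
            crossings.append(i - 1 + (-a) / (b - a))  # linearly interpolated position
    if len(crossings) < 2:
        return None
    return sample_rate * (len(crossings) - 1) / (crossings[-1] - crossings[0])


def check_detector(detect, freqs_hz, sample_rate=16000, tolerance_cents=50.0):
    """Run detect(samples, sample_rate) on a pure tone at each frequency.

    Returns (frequency, estimate, cents error, passed) tuples.
    """
    results = []
    for f in freqs_hz:
        estimate = detect(pure_tone(f, sample_rate), sample_rate)
        if estimate is None:
            results.append((f, None, None, False))
            continue
        err = cents_error(estimate, f)
        results.append((f, estimate, err, abs(err) <= tolerance_cents))
    return results


for row in check_detector(zero_crossing_pitch, [130.81, 146.83, 164.81]):
    print(row)
```

To test the real PDA, `detect` would be a wrapper that runs it over the tone and returns a single frequency, for example the median of its voiced frames.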