This week, I finalized the note and clap detection algorithms, as well as the singing feature extraction and vocal range calculation. The singing features that I chose in the end were: scale transposition, relative pitch difference per note, relative pitch transition difference, note duration, and interquartile range. Scale transposition is a measure of how many half-steps the user’s performance most commonly differs from the exercise, which is calculated by taking the mode of the absolute pitch differences. The relative pitch difference per note is a measure of how sharp or flat a user is with respect to the transposed scale. The relative pitch transition difference per note is a measure of how the user’s pitch changes from note to note. The note duration is simply a measure of how long the user holds a note. Finally, the interquartile range is a measure of how much the user’s pitch varies within a note; too much variance indicates that the singer is not holding the note steadily. Vocal range is calculated by having users record their lowest and highest tones; it is used to guide the reference tones in the exercises and can be recalibrated by the user at any time. Funmbi, Sai, and I have successfully integrated our parts for the pitch and clap exercises and are now working on finishing touches and web deployment.
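To make the feature definitions above concrete, here is a minimal sketch of how they could be computed. It is not the project's actual code: the function names, the use of semitone values (one unit per half-step) as input, and the choice to round each per-note offset before taking the mode are all assumptions made for illustration.

```python
import math
from statistics import mode, quantiles


def hz_to_semitones(freq_hz, ref_hz=440.0):
    """Convert a frequency to semitones relative to ref_hz (assumed reference: A4)."""
    return 12.0 * math.log2(freq_hz / ref_hz)


def scale_transposition(sung, reference):
    """Most common whole half-step offset between sung and reference notes."""
    offsets = [round(s - r) for s, r in zip(sung, reference)]
    return mode(offsets)


def relative_pitch_differences(sung, reference):
    """How sharp (+) or flat (-) each note is against the transposed scale."""
    shift = scale_transposition(sung, reference)
    return [s - (r + shift) for s, r in zip(sung, reference)]


def transition_differences(sung, reference):
    """Difference between sung and reference note-to-note steps."""
    sung_steps = [b - a for a, b in zip(sung, sung[1:])]
    ref_steps = [b - a for a, b in zip(reference, reference[1:])]
    return [s - r for s, r in zip(sung_steps, ref_steps)]


def interquartile_range(frame_pitches):
    """Spread of the pitch frames inside one held note (Q3 minus Q1)."""
    q1, _, q3 = quantiles(frame_pitches, n=4)
    return q3 - q1
```

For example, with a reference of `[60, 62, 64, 65]` and a sung sequence of `[58.1, 59.9, 62.0, 63.4]`, `scale_transposition` returns `-2`: the singer performed the exercise two half-steps lower, and the remaining features are then measured against that shifted scale.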

Carlos’s Status Report for 5/1/2021

This week I’ve been applying the final set of post-processing steps to the pitch and clap detection algorithms, and have been continuing to test both. Our pitch exercises, which are based on solfege, do not contain jumps between tones of more than an octave. We leverage this fact to filter out unreasonable pitch measurements, or pitch measurements that are not the target of the exercise, like those generated by fricatives. I have also been working on extracting metrics from pitch exercises to evaluate a user’s performance. This task proved to be much harder than anticipated for many reasons, but most importantly, we were initially thinking about grading users with respect to some absolute pitch instead of relative pitch. When implementing the evaluation metric, we figured it would be too harsh a constraint to have singers try to match an exact pitch, so we have switched to judging their performance based on a translation of the original scale, which is defined by the user’s first note.
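The two ideas in this report, the octave-based filter and the scale translation anchored at the first sung note, can be sketched as follows. This is only an illustration under stated assumptions: the function names are hypothetical, unvoiced frames are assumed to arrive as `None` or non-positive values, and each estimate is compared against the last kept estimate, which may differ from how our post-processing actually works.

```python
import math


def filter_octave_jumps(pitches_hz, max_jump_semitones=12.0):
    """Drop pitch estimates that jump more than an octave from the last kept one."""
    kept = []
    for freq in pitches_hz:
        if freq is None or freq <= 0:
            continue  # skip unvoiced or invalid frames
        if kept and abs(12.0 * math.log2(freq / kept[-1])) > max_jump_semitones:
            continue  # implausible jump, e.g. an estimate caused by a fricative
        kept.append(freq)
    return kept


def translate_scale(reference_semitones, first_sung_semitone):
    """Shift the reference scale so that it starts on the user's first note."""
    shift = first_sung_semitone - reference_semitones[0]
    return [note + shift for note in reference_semitones]
```

One limitation of this simple version is that it trusts the first voiced frame: if that frame is itself an outlier, the frames after it are judged against the wrong anchor.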

Next week, we will be integrating the detection algorithms with the front end, so we will hopefully be able to get feedback from users on the functionality of our application, which can drive a final set of changes if necessary.

Team Status Report for 5/1/2021

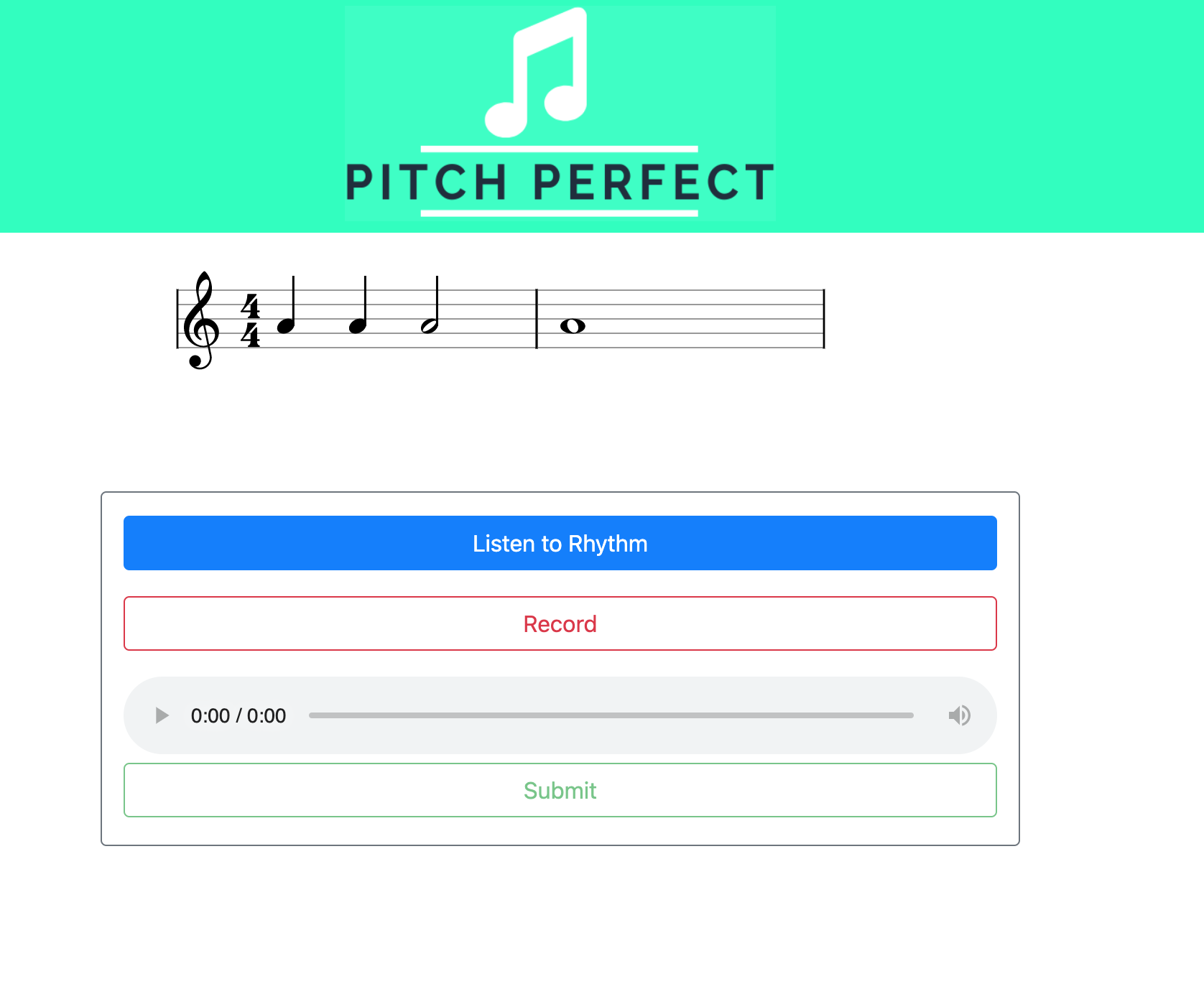

As the semester comes to an end, we are continuing to integrate our subsystems and testing as we go. We have been integrating our front-end for the different exercises and creating dashboards to link them.

As of now, our most significant risks are successful web deployment and frontend/backend integration. The functionality of much of our code depends on the use of external libraries, so it is critical that in deploying our code we are able to include these packages. To mitigate this, we will start deployment on Monday when the front-end is almost entirely integrated, and test to see if there are any package import issues. Worst case scenario, we may have to run our website locally, but we are confident that we will be able to deploy in the time we have left.

As for frontend/backend integration, we are starting to integrate the exercises with their corresponding backend code. Ideally, we could have started this before, but there were some edge cases that needed to be resolved in extracting metrics for pitch exercise evaluation. We will continue to integrate and test the disjoint subsystems, hopefully with enough time to get user feedback on the functionality of our app and its usefulness as a vocal coach.

Photos of the web app’s dashboard and the rhythm exercise can be seen below:

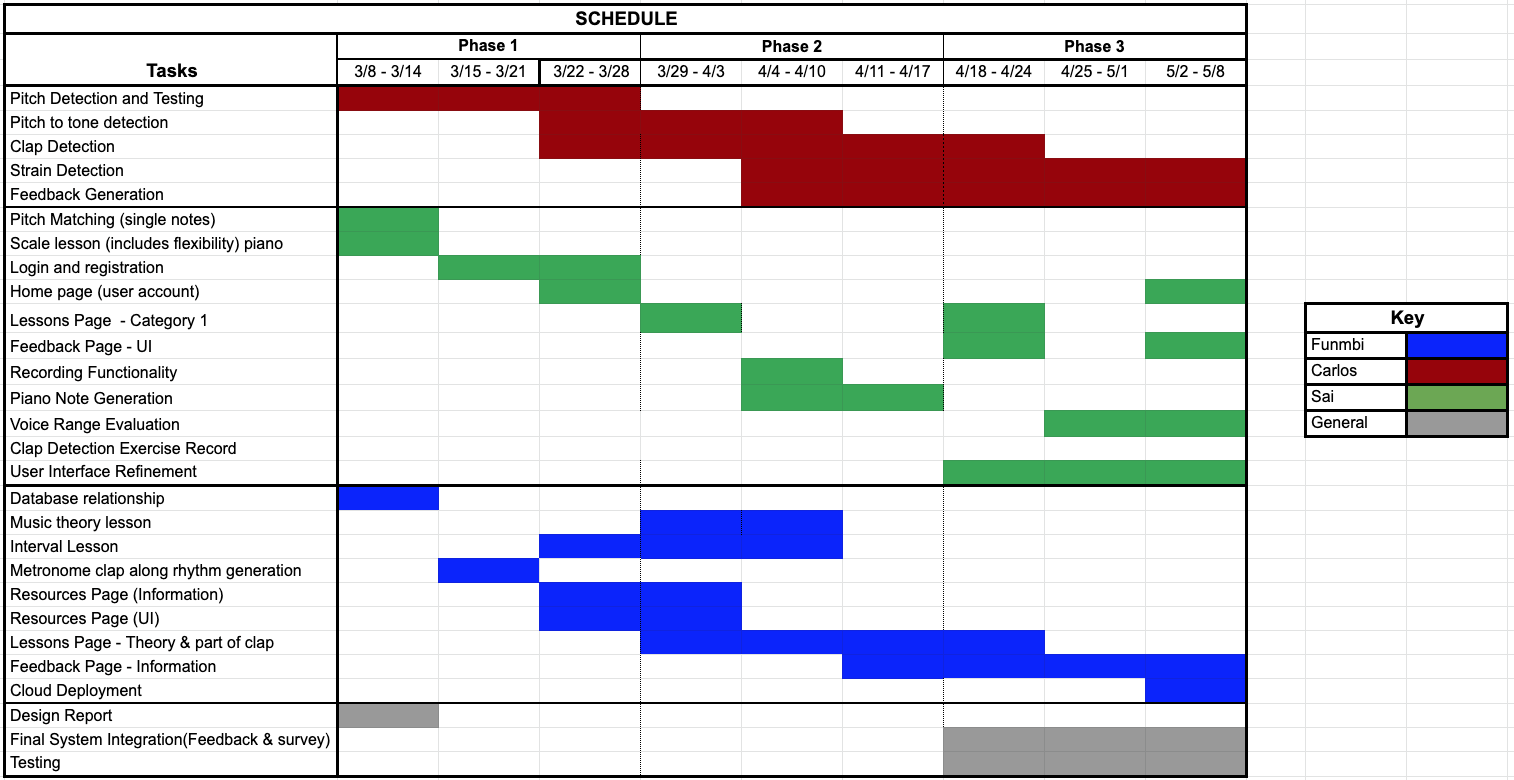

We also uploaded our updated schedule:

Carlos’s Status Report for 4/24/2021

These past two weeks, I’ve been testing my pitch-to-note mapping and clap detection algorithms. In testing the pitch-to-note mapping, I came across several potential issues that I had not considered before implementation. For one, I observed that when some singers attempt to hold a note, their pitch can drift significantly, in some cases varying more than ![]() cents. This pitch drifting introduces tone ambiguity, which can drastically affect classification. Since this app is targeted at beginners, we expect there to be a lot of pitch drifting, so this is a case that we have to prioritize.
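For readers unfamiliar with cents: one half-step is 100 cents, and the interval between two frequencies is 1200 times the base-2 logarithm of their ratio. The sketch below measures drift within a held note this way. The function names are hypothetical, and using the spread between the lowest and highest frame is just one simple way to quantify drift.

```python
import math


def cents_between(freq_hz, ref_hz):
    """Interval from ref_hz to freq_hz in cents (100 cents = one half-step)."""
    return 1200.0 * math.log2(freq_hz / ref_hz)


def drift_in_cents(frame_pitches_hz):
    """Spread between the lowest and highest pitch frame of a held note."""
    return cents_between(max(frame_pitches_hz), min(frame_pitches_hz))
```

As a general point, independent of the drift figures observed in testing: once a pitch sits more than 50 cents from its intended note, it is closer to the neighboring half-step, which is where the tone ambiguity in nearest-note classification comes from.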

Carlos’s Status Report for 4/10/2021

I had hoped to complete the pitch-to-tone mapping algorithm by this week, but it is a much more involved endeavor than I anticipated. I have been having difficulty enumerating the cases that I have to consider when detecting notes, most importantly determining when the user starts singing, whether they keep their time alignment throughout the duration of the song, and how to handle the cases where they are not singing at all. For much of my time developing, I have treated this algorithm as if it were simply performing the pitch-to-tone mapping, but in reality there are several aspects of the user’s performance that I have had to consider. Most recently, I have found more success in taking a top-down approach and sectioning the responsibilities of the algorithm by function.

I am currently trying to finish this algorithm by the end of this weekend so that my team and I can integrate our respective parts and construct a preliminary working system. I am not sure if I will be able to test the algorithm as exhaustively as I should, so I will start with a first round of unit tests on the generated pure tones.

Carlos’s Status Report for 4/3/2021

This week, I continued developing the pitch-to-key mapping, which takes in a pitch contour as generated by the Praat pitch detector and outputs a set of time stamps and tones corresponding to pitch and rhythm. This component turned out to be more intricate than I had initially expected, due to confusion arising from my lack of formal music knowledge. In particular, I was having trouble understanding the relationship between tempo, time signatures, and note duration.
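The relationship between tempo, time signature, and note duration can be sketched in a few lines. This assumes the simple convention that the tempo in beats per minute counts the note named by the time signature's denominator; compound meters such as 6/8 are often counted differently in practice. The function and parameter names are hypothetical.

```python
from fractions import Fraction


def note_duration_seconds(note_value, tempo_bpm, beat_unit=4):
    """Length of a note in seconds.

    note_value: fraction of a whole note, e.g. Fraction(1, 4) for a quarter note.
    beat_unit: time-signature denominator, i.e. which note value gets one beat.
    """
    beats = Fraction(note_value) * beat_unit
    return float(beats * Fraction(60) / Fraction(tempo_bpm))


def measure_duration_seconds(beats_per_measure, tempo_bpm):
    """Length of one measure; beats_per_measure is the time-signature numerator."""
    return beats_per_measure * 60.0 / tempo_bpm
```

For instance, in 4/4 at 120 BPM a quarter note lasts 0.5 seconds, an eighth note 0.25 seconds, and a full measure 2 seconds.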

Team Status Report for 4/3/2021

This week, we continued developing several core components of our project, particularly: lesson creation, web template design, and tone detection from calculated pitch contours. Thus far, we are on schedule and our pacing is generally what we anticipated.

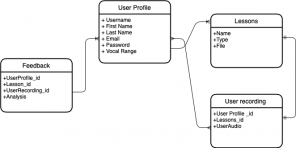

While most of our design has stayed the same, we have made changes to how we will be storing data. As we have iterated over our project design and ideas, the data that we require have changed, and to reflect that we updated our data model scheme.

This new scheme will provide us with more flexibility and reusability in how we represent and store data across users and exercises.

Carlos’s Status Report for 3/27/2021

This week I verified the pitch detection accuracy of the Yin pitch detection algorithm as implemented by a third party. In inspecting their code, I noticed that they did not implement every step of the algorithm as described in the paper, so its accuracy is lower than what the paper reports. While the algorithm has 6 steps, only 4 were implemented. I considered implementing the extra steps myself, but I found the code difficult to work with and sought other options. I found another implementation of the algorithm on GitHub, but it had similar issues: not all steps were implemented, and it was written in Python 2.
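For context, the six steps in the Yin paper are: autocorrelation, the difference function, the cumulative mean normalized difference, an absolute threshold, parabolic interpolation, and a best local estimate. The sketch below is a simplified illustration covering only the difference function, the normalization, and the threshold; it is not either of the third-party implementations discussed above, and it makes no claim about which steps those omitted. The function name, the default frequency bounds, and the 0.1 threshold are assumptions.

```python
import math


def yin_pitch(frame, sample_rate, fmin=80.0, fmax=1000.0, threshold=0.1):
    """Estimate the pitch of one frame in Hz, or return None if no pitch is found."""
    tau_max = int(sample_rate / fmin)
    tau_min = max(1, int(sample_rate / fmax))
    window = len(frame) - tau_max
    if window <= 0:
        raise ValueError("frame is too short for the requested fmin")

    # Difference function: squared difference between the frame and a lagged copy.
    diff = [0.0] * (tau_max + 1)
    for tau in range(1, tau_max + 1):
        diff[tau] = sum((frame[j] - frame[j + tau]) ** 2 for j in range(window))

    # Cumulative mean normalized difference: divide by the running mean so far.
    cmnd = [1.0] * (tau_max + 1)
    running_sum = 0.0
    for tau in range(1, tau_max + 1):
        running_sum += diff[tau]
        cmnd[tau] = diff[tau] * tau / running_sum if running_sum > 0 else 1.0

    # Absolute threshold: first lag below the threshold, refined to its local minimum.
    for tau in range(tau_min, tau_max):
        if cmnd[tau] < threshold:
            while tau + 1 <= tau_max and cmnd[tau + 1] < cmnd[tau]:
                tau += 1
            return sample_rate / tau
    return None
```

Because this version skips parabolic interpolation, the estimated period is limited to whole samples, which is one way accuracy can fall short of the paper's figures when steps are left out.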

Carlos’s Status Report for 3/13/2021

This week, I originally planned to implement and start testing the clap detection algorithm, but I instead started working on testing the Yin pitch detection algorithm I found online. I have implemented a pitch detection algorithm in the past using a cepstrum-based approach, so I have some familiarity with this class of algorithms and the problems that are common in detecting pitch.

Carlos’s Status Report for 3/6/2021

This week, I conducted considerable research into features of a singer’s vocal performance that can be used to discriminate between good and bad singing. I found a few papers that discussed how we can do this, most importantly this one and this one.

In the first paper, the authors described 12 desirable characteristics used to define good singing, as described by experts in the field. They adapted existing methods that measure those traits and aggregated them to generate a metric which they call Perceptual Evaluation of Singing Quality (PESnQ). However, to obtain this measure, a singer’s performance must be compared to an exemplary performance, which is out of the scope of our project. Most literature in this field of singer evaluation follows this methodology of using a template against which to compare a performance.