This week, I continued developing the pitch-to-key mapping, which takes a pitch contour generated by the Praat pitch detector and outputs a set of timestamps and tones corresponding to pitch and rhythm. This component turned out to be more intricate than I had expected because I lack formal music training. In particular, I had trouble understanding the relationship between tempo, time signatures, and note duration.
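As a rough illustration of what such a mapping involves, here is a minimal sketch, not the project's actual implementation. It assumes the contour arrives as parallel lists of frame times and frequencies in Hz, with unvoiced frames given as 0, and it uses the standard tuning of A4 = 440 Hz. All function names are hypothetical.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def hz_to_midi(freq_hz):
    """Nearest MIDI note number for a frequency (A4 = 440 Hz = MIDI 69)."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))

def midi_to_name(midi):
    """Scientific pitch name, using the convention that MIDI 60 is C4."""
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"

def contour_to_notes(times, freqs):
    """Collapse a frame-wise contour into (onset_seconds, note_name) pairs.

    Assumption: a frequency of 0 marks an unvoiced frame. A new note
    starts whenever the quantized pitch changes or voicing resumes.
    """
    notes = []
    previous = None
    for t, f in zip(times, freqs):
        current = hz_to_midi(f) if f > 0 else None
        if current is not None and current != previous:
            notes.append((t, midi_to_name(current)))
        previous = current
    return notes
```

A real contour from a sung voice would likely need smoothing before this step, since vibrato and pitch glides can cross semitone boundaries and produce spurious note onsets.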

To remedy this, I spent a good portion of the week watching YouTube videos and reading articles about rhythm in music. I now understand that 4/4 time means there are 4 beats per measure and that the quarter note gets one beat. I am still a bit confused about how eighth and sixteenth notes are played, since they last only a fraction of a beat in 4/4 time, but I expect to understand this better as I keep working on the project.
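The arithmetic linking tempo and note duration can be sketched as follows. This is a minimal example assuming the tempo is given in beats per minute and the quarter note gets the beat, as in 4/4. The names `note_seconds`, `BEATS_IN_4_4`, and `durations_at` are illustrative and not taken from the project.

```python
def note_seconds(bpm, beats):
    """Seconds for a note lasting `beats` beats at `bpm` beats per minute."""
    return beats * 60.0 / bpm

# In 4/4 the quarter note is one beat, so shorter notes are fractions of it.
BEATS_IN_4_4 = {
    "whole": 4.0,
    "half": 2.0,
    "quarter": 1.0,
    "eighth": 0.5,      # two eighth notes fill one beat
    "sixteenth": 0.25,  # four sixteenth notes fill one beat
}

def durations_at(bpm):
    """Map each note type to its duration in seconds at the given tempo."""
    return {name: note_seconds(bpm, beats) for name, beats in BEATS_IN_4_4.items()}
```

At 120 beats per minute, for example, a quarter note lasts 0.5 seconds, an eighth note 0.25 seconds, and a sixteenth note 0.125 seconds.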

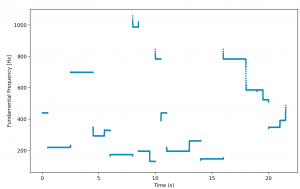

Equipped with these insights, I added a rhythmic component to my random pure tone generator by varying the duration of the notes. The figure above shows an example of the pitch contour of a randomly generated set of pure tones between C3 and B5.
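A generator of this kind might look roughly like the sketch below. It is not the actual generator: the sample rate, the set of allowed note lengths, and all names are assumptions made for illustration. It uses the convention that MIDI 60 is C4, so C3 is 48 and B5 is 83.

```python
import math
import random

SAMPLE_RATE = 16000  # assumed sample rate in Hz
C3_MIDI, B5_MIDI = 48, 83  # MIDI numbers for C3 and B5 (MIDI 60 = C4)
BEAT_CHOICES = [0.25, 0.5, 1.0, 2.0]  # sixteenth, eighth, quarter, half in 4/4

def midi_to_hz(midi):
    """Frequency of a MIDI note under A4 = 440 Hz equal temperament."""
    return 440.0 * 2 ** ((midi - 69) / 12)

def random_melody(n_notes, bpm, seed=None):
    """Return a list of (onset_seconds, midi, duration_seconds) tuples."""
    rng = random.Random(seed)
    melody, onset = [], 0.0
    for _ in range(n_notes):
        midi = rng.randint(C3_MIDI, B5_MIDI)  # inclusive on both ends
        duration = rng.choice(BEAT_CHOICES) * 60.0 / bpm
        melody.append((onset, midi, duration))
        onset += duration
    return melody

def synthesize(melody):
    """Render the melody as float samples in [-1, 1], one sine per note."""
    samples = []
    for _, midi, duration in melody:
        freq = midi_to_hz(midi)
        n = int(round(duration * SAMPLE_RATE))
        # The phase restarts at each note, which can cause audible clicks.
        samples.extend(math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for i in range(n))
    return samples
```

Because each tuple records the onset time and pitch of a note, the same list gives the ground truth against which a detected pitch contour can be checked.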

So far, I have designed the note detector to output a template of notes at expected timestamps against which to compare the user’s performance. By the end of this week, I aim to complete the note detector and integrate it with the web application.