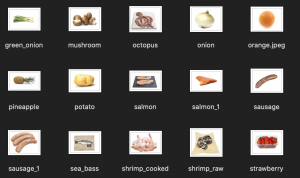

This week our team finished setting up the system in the mini-fridge and tested the integrated system. The most significant remaining risk to the project's success is showcasing its full functionality in the demo video. The live demo is only one part of the video, and our product is meant to play a role in the user's daily routine, so we need a way to present the whole product in just a few minutes. We are managing this risk through our team meetings, where we are drafting a plan that lists the specific steps and content to include in the video. We will also annotate the live demo portion so that each step is explained to the audience.

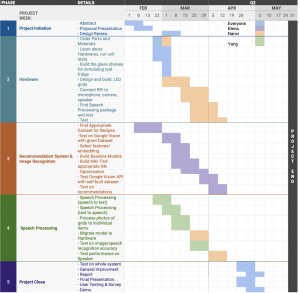

Since this is the last week of class and we have finished implementing our project, we are not making any changes to the existing design. The schedule is also unchanged, because the remaining work is the report, the demo, and the poster.

An image of the mini-fridge is attached.