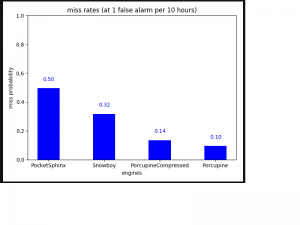

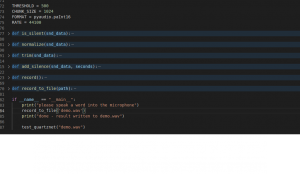

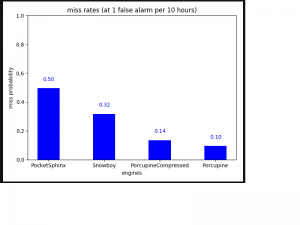

I ran into some issues with speech processing earlier this week: I realized we needed a way to trigger recording instead of simply recording at set intervals. To solve this, I found pvporcupine, a Python module for wake-word detection that, of the free options I looked at, had the best accuracy on trigger words.

With that in place, I set up threading to handle the different portions of our system, and got our speech recognition system to record only after being triggered by the wake word. As a result, I can now get text out of the input audio in the command format we specified, ready to be sent to the recommendation system.
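
As a rough illustration of this structure (a sketch under assumptions, not our actual code), the snippet below wires two threads together with queues: one listens for the wake word and only then captures a command, the other stands in for speech-to-text and the hand-off to the recommendation system. Everything here is hypothetical: the names `listener`, `recognizer`, and `run_pipeline`, the `"WAKE"` marker, and the fixed three-frame command length. Audio frames are simulated as strings so the example runs without a microphone; with pvporcupine, the `is_wake_word` check would be roughly `porcupine.process(frame) >= 0`.

```python
import queue
import threading

WAKE = "WAKE"  # hypothetical marker frame standing in for a detected wake word
STOP = None    # sentinel that tells a worker thread to shut down


def is_wake_word(frame):
    # Stand-in detector (assumption). With pvporcupine this would be
    # roughly `porcupine.process(frame) >= 0` on a frame of PCM samples.
    return frame == WAKE


def listener(frames, commands, record_len):
    """Ignore frames until the wake word, then capture the next record_len frames."""
    recording = None  # None while idle, a list while capturing a command
    while True:
        frame = frames.get()
        if frame is STOP:
            commands.put(STOP)  # propagate shutdown to the next stage
            return
        if recording is None:
            if is_wake_word(frame):
                recording = []
        else:
            recording.append(frame)
            if len(recording) == record_len:
                commands.put(" ".join(recording))
                recording = None  # go back to waiting for the wake word


def recognizer(commands, results):
    """Stand-in for speech-to-text plus hand-off to the recommendation system."""
    while True:
        command = commands.get()
        if command is STOP:
            return
        results.append(command)


def run_pipeline(stream, record_len=3):
    frames, commands, results = queue.Queue(), queue.Queue(), []
    workers = [
        threading.Thread(target=listener, args=(frames, commands, record_len)),
        threading.Thread(target=recognizer, args=(commands, results)),
    ]
    for worker in workers:
        worker.start()
    for frame in stream:  # simulated microphone input
        frames.put(frame)
    frames.put(STOP)
    for worker in workers:
        worker.join()
    return results


if __name__ == "__main__":
    stream = ["noise", WAKE, "play", "some", "jazz", "noise", WAKE, "skip", "this", "song"]
    print(run_pipeline(stream))
```

Using queues between the threads keeps each stage independent: the listener never blocks on recognition, and anything heard before the wake word is simply dropped.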

Next week, I’ll look into parsing that text into an intent that the recommendation system can use, and start building the framework for it.