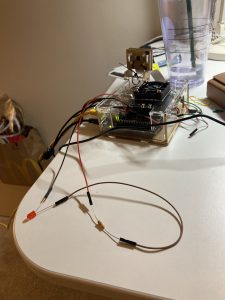

This week I finished building and testing the LED grid, and it works as expected. I might make some final adjustments to the sizing of the grids before taping it, but it is mostly done. The adapter we got for the LED strip is not very stable, so I prefer not to keep it lit for long stretches at a time, so that the strip doesn't burn out like it did last time. This also fits the usage model better, since users will pick up their food from the grids within a minute.

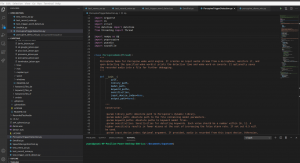

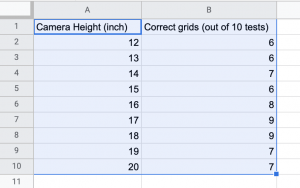

I have been testing the part of the image recognition that handles the placement of each object. This part of the algorithm decides which LED grid each item belongs to. I found that its accuracy with the Google Vision API depends heavily on the camera placement and the lighting, so I tested several camera mounting positions to see which gives the best results. The results are shown in the images above. Based on them, I decided to mount the camera 18 inches above the surface. We will also use this data as part of the testing plan, and more accuracy tests for this step will be added to the plan later.
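To make the "which grid does this item belong to" step concrete, here is a minimal sketch of one way it could work. It assumes the Vision API's object localization feature is used, which returns each detected object's bounding polygon as normalized vertices in [0, 1]. It also assumes the camera frame lines up exactly with a hypothetical 3 x 3 LED grid. The names `Vertex`, `grid_cell`, and `accuracy` are illustrative helpers, not part of our code or the Vision API. The sketch picks the cell that contains the center of the object's bounding box, and it includes a small accuracy helper of the kind the testing plan could use to compare mounting heights.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Hypothetical grid layout (assumption): ROWS x COLS cells that exactly
# fill the camera frame once the camera is mounted. Adjust to the real grid.
ROWS = 3
COLS = 3


@dataclass
class Vertex:
    """A normalized vertex with x and y in [0, 1], shaped like the
    normalized_vertices of a Vision API object annotation."""
    x: float
    y: float


def grid_cell(vertices: Sequence[Vertex], rows: int = ROWS,
              cols: int = COLS) -> Tuple[int, int]:
    """Return the (row, col) of the grid cell containing the center
    of the object's axis-aligned bounding box."""
    xs = [v.x for v in vertices]
    ys = [v.y for v in vertices]
    cx = (min(xs) + max(xs)) / 2
    cy = (min(ys) + max(ys)) / 2
    # Scale the center to grid units and clamp, so a center exactly at
    # 1.0 (or slightly outside the frame) still maps to a valid cell.
    col = max(0, min(int(cx * cols), cols - 1))
    row = max(0, min(int(cy * rows), rows - 1))
    return row, col


def accuracy(predicted: List[Tuple[int, int]],
             expected: List[Tuple[int, int]]) -> float:
    """Fraction of trials where the predicted cell matches the cell the
    item was actually placed in."""
    if not expected or len(predicted) != len(expected):
        raise ValueError("need one prediction per trial")
    hits = sum(p == e for p, e in zip(predicted, expected))
    return hits / len(expected)


if __name__ == "__main__":
    # An item whose box center is at (0.5, 0.8) falls in row 2, column 1.
    box = [Vertex(0.4, 0.7), Vertex(0.6, 0.7),
           Vertex(0.6, 0.9), Vertex(0.4, 0.9)]
    print(grid_cell(box))
```

With the real client, the vertices would come from each annotation's `bounding_poly.normalized_vertices`. Using the box center rather than the full polygon keeps the mapping simple, but it may misassign items that straddle two cells. That is one reason camera height and lighting matter for this step.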

Lighting also affects image recognition accuracy. We will put a light source (a floor lamp) directly above the objects to imitate the lighting inside the fridge. This should keep the recognition accuracy stable and make testing more consistent.

I plan to run the integration test next week, after which our final product should be complete.