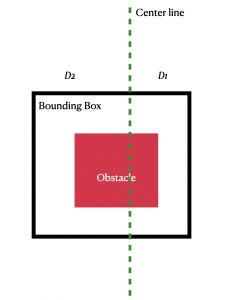

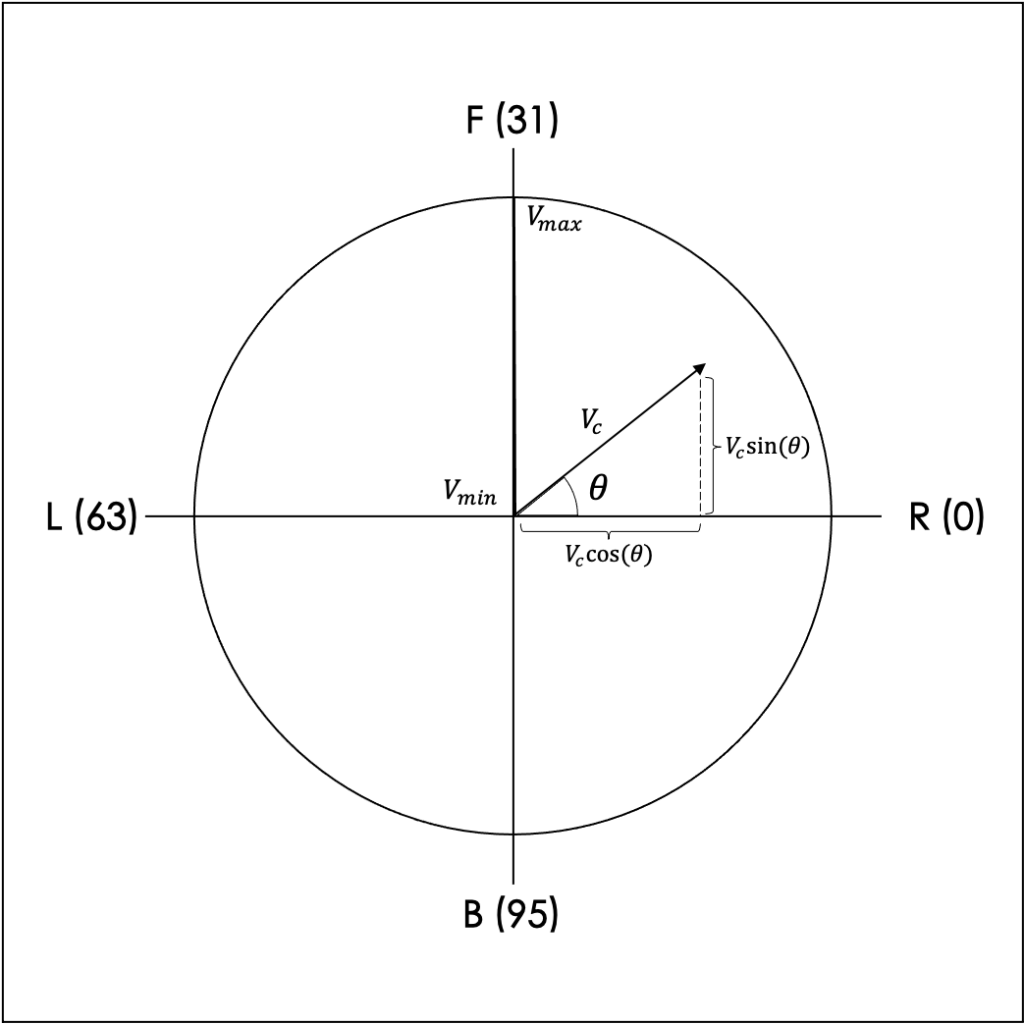

This week I focused on fine-tuning the object detection algorithm and began writing the planning algorithm. I had some trouble downloading all the drivers for the Intel RealSense camera, but managed to get everything installed properly by the middle of the week. I then experimented with extracting RGB frames from the camera and using OpenCV to process them into input that MobileNet v2 can use for object classification and detection. Next, I tested the robustness of the Intel camera by moving it around the room and checking the feed for jitter, in order to find the fastest sampling rate that still gives us clear images. I found that we can sample faster than MobileNet v2 can process the images, which should make our lives easier later on. Starting tomorrow, I will hook up the Intel camera to MobileNet v2 to see what real-time object detection looks like. After that we can start integrating, and I can begin determining heuristic values for the planning algorithm.
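As a rough illustration of the "process frames into something MobileNet v2 can use" step, here is a minimal sketch. It assumes the frame arrives as an HxWx3 `uint8` BGR array (OpenCV's channel order), that the model expects a 224x224 RGB input, and that pixels are scaled to [-1, 1] the way Keras' `mobilenet_v2.preprocess_input` does. The function name `preprocess_frame` is hypothetical, and the resize is done with plain NumPy nearest-neighbor indexing so the sketch has no OpenCV dependency; the comments note the OpenCV calls that would do the same job.

```python
import numpy as np

# Assumed MobileNet v2 input size (the common 224x224 ImageNet configuration).
INPUT_SIZE = 224


def preprocess_frame(frame_bgr: np.ndarray, size: int = INPUT_SIZE) -> np.ndarray:
    """Convert an HxWx3 uint8 BGR frame into a (1, size, size, 3) float32 batch.

    Hypothetical helper: assumes the detector wants RGB input scaled to [-1, 1].
    """
    if frame_bgr.ndim != 3 or frame_bgr.shape[2] != 3:
        raise ValueError("expected an HxWx3 color frame")

    # Reorder channels BGR -> RGB.
    # OpenCV equivalent: cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB)
    rgb = frame_bgr[:, :, ::-1]

    # Nearest-neighbor resize to size x size by picking evenly spaced rows/cols.
    # OpenCV equivalent: cv2.resize(rgb, (size, size), interpolation=cv2.INTER_NEAREST)
    h, w = rgb.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    resized = rgb[rows[:, None], cols[None, :]]

    # Scale pixel values from [0, 255] to [-1, 1].
    scaled = resized.astype(np.float32) / 127.5 - 1.0

    # Add a leading batch dimension: (1, size, size, 3).
    return scaled[np.newaxis, ...]


if __name__ == "__main__":
    # A blank 640x480 frame stands in for a real RealSense color frame.
    frame = np.zeros((480, 640, 3), dtype=np.uint8)
    batch = preprocess_frame(frame)
    print(batch.shape)
```

In the real pipeline the frame would come from the RealSense color stream instead of `np.zeros`, and OpenCV's default bilinear resize would likely give smoother input than nearest-neighbor.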
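Because the camera can deliver frames faster than MobileNet v2 can process them, the detector would fall further and further behind if it tried to handle every frame. One simple way to reason about this is to measure the average inference time and then only process every Nth frame. The sketch below assumes a fixed camera frame rate and a roughly constant inference time; `mean_latency` and `frame_stride` are hypothetical helpers, not part of any camera or model API.

```python
import math
import time
from typing import Callable


def mean_latency(fn: Callable[[], object], runs: int = 20) -> float:
    """Average wall-clock seconds per call of fn (e.g. one model inference)."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) / runs


def frame_stride(camera_fps: float, inference_seconds: float) -> int:
    """Process every Nth frame so the detector keeps pace with the camera.

    If one inference fits inside one frame interval, every frame can be used (N = 1).
    Otherwise N = ceil(camera_fps * inference_seconds). The product is rounded
    first so floating-point noise (e.g. 30 * 0.1 = 3.0000000000000004) does not
    bump N up by one.
    """
    if camera_fps <= 0 or inference_seconds < 0:
        raise ValueError("camera_fps must be positive and inference_seconds non-negative")
    frames_per_inference = round(camera_fps * inference_seconds, 9)
    return max(1, math.ceil(frames_per_inference))


if __name__ == "__main__":
    # Example numbers only: a 30 fps stream and a 100 ms inference.
    print(frame_stride(30, 0.1))
```

With real hardware, `inference_seconds` would come from something like `mean_latency(lambda: model(batch))`, where `model` and `batch` stand in for the actual detector and a preprocessed frame. An alternative design is to always grab the most recent frame and discard the rest, which adapts automatically if inference time varies.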

Additionally, we plan to meet up to figure out what the obstacles will look like and to generate realistic feeds from the car, so we can further test the robustness of the object detection algorithm.

Honestly, what does it even matter? You work so hard, only to have Jalen Suggs hit a half-court shot to win the game.