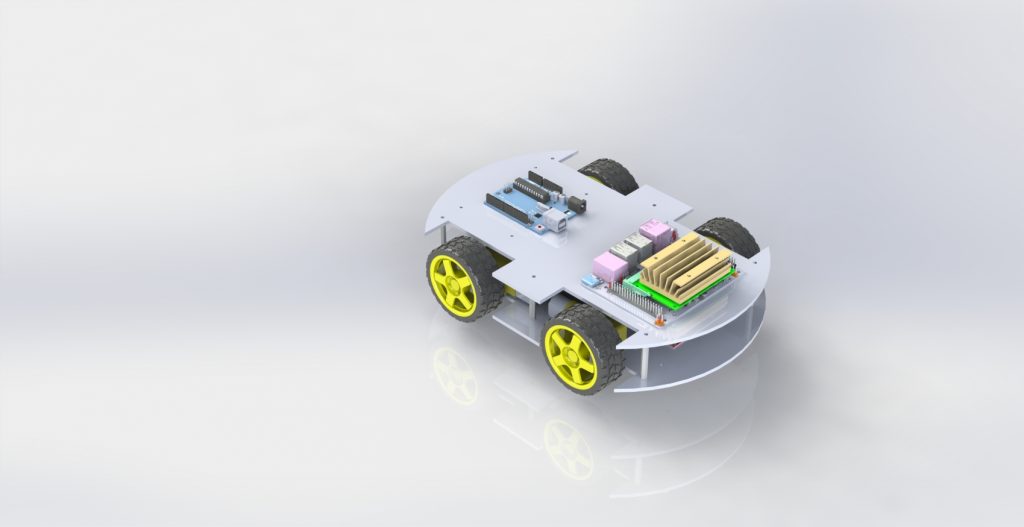

This week focused on pinning down more concrete details about the technology to be used in the project, as well as its design and integration. In particular, I was able to verify the feasibility of our planned design on the mechanical side. One concern resolved this week was how the motor controllers will manage the motor system: we have settled on two L298N H-bridge modules, one for each motor pair (front and rear). This gives us fine-grained control of each wheel, which should make the car reasonably agile. We have also finalized almost all of the components we will need for each vehicle (including building materials, fasteners, and screws); the exceptions are the exact sensor units for odometry and some power supply units. With our current vehicle configuration and parts list, we anticipate being able to fully afford three vehicles, with some budget left over for miscellaneous items and potentially some experimentation.
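To make the per-wheel control idea concrete, here is a minimal sketch of how a signed wheel speed could be mapped onto one H-bridge channel. It assumes the usual L298-style interface of two direction inputs plus a PWM signal on the enable pin per channel. The names `ChannelSignal`, `channel_signal`, and `drive` are hypothetical, and nothing here touches real GPIO pins; it is not our final motor interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelSignal:
    """Logic levels for one H-bridge channel (hypothetical helper type)."""
    in_a: bool   # first direction input (e.g. IN1 or IN3)
    in_b: bool   # second direction input (e.g. IN2 or IN4)
    duty: float  # PWM duty cycle on the enable pin, 0.0 to 1.0


def channel_signal(speed: float) -> ChannelSignal:
    """Map a signed speed in [-1, 1] to direction inputs and a duty cycle."""
    speed = max(-1.0, min(1.0, speed))  # clamp out-of-range requests
    if speed > 0:
        return ChannelSignal(True, False, speed)    # forward
    if speed < 0:
        return ChannelSignal(False, True, -speed)   # reverse
    return ChannelSignal(False, False, 0.0)         # enable low: motor coasts


def drive(front_left: float, front_right: float,
          rear_left: float, rear_right: float) -> dict:
    """Group the four wheel commands by H-bridge module (front, rear).

    Assumes each module drives the left and right motor of its pair.
    """
    return {
        "front": (channel_signal(front_left), channel_signal(front_right)),
        "rear": (channel_signal(rear_left), channel_signal(rear_right)),
    }
```

For example, `drive(0.5, -0.5, 0.5, -0.5)` would spin the left wheels forward and the right wheels backward at half duty, turning the car in place. The actual pin assignments and PWM frequency would still need to be chosen for our controller board.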

This week the team also worked together to define more concrete metrics and testing strategies for evaluating our progress. This has allowed us to narrow the scope and keep a more focused approach as we continue to fine-tune our design.

Moving forward, I need to focus on fabricating the vehicles once we have all the parts. In addition, I will develop the motor system interface that enables the car to drive.

RC Unit (No Camera)