This week I mainly focused on the planning aspect of the project. I had to work out how to use the bounding boxes produced by the object detection algorithms to decide what angle to set the wheels to so that the car avoids the obstacle. The issue is that the bounding box around the object does not contain depth information, so we don't know how far away the object is. We could assume that the object is a short distance from the car, but this would lead to wide turns around obstacles and may affect planning if the course is dense with obstacles. Therefore we needed some way to approximate the horizontal distance necessary to avoid the obstacle. Once this is determined, we can use the depth map from the Intel RealSense to form a right-angled triangle with the depth and the horizontal distance as its legs; the angle between the hypotenuse and the depth leg is the angle to turn the wheels.
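As a rough sketch of the triangle described above: with the depth and the horizontal distance as the two legs, the angle between the hypotenuse and the depth leg is the arctangent of horizontal distance over depth. The function name `steering_angle_deg` and the choice of metres and degrees are my own assumptions for illustration, not part of the actual planner.

```python
import math


def steering_angle_deg(depth_m: float, horizontal_m: float) -> float:
    """Angle (degrees) between the depth leg and the hypotenuse.

    depth_m:      distance to the obstacle from the depth map (assumed metres)
    horizontal_m: sideways clearance needed to pass the obstacle (assumed metres)
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    # tan(angle) = opposite / adjacent = horizontal distance / depth
    return math.degrees(math.atan2(horizontal_m, depth_m))


if __name__ == "__main__":
    # Equal legs give a 45 degree turn; a closer obstacle needs a sharper one.
    print(steering_angle_deg(1.0, 1.0))
    print(steering_angle_deg(0.2, 0.15))
```

For a fixed horizontal distance, the angle grows as the depth shrinks, which is why assuming a short depth for every obstacle produces wide turns.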

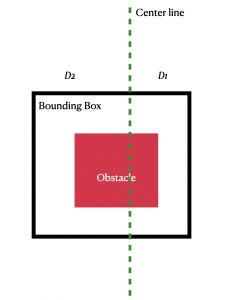

In the diagram above, I let D1 and D2 be the distances from the centre of the image to the edges of the bounding box. I figured that if we scale these distances linearly, i.e. apply some affine transformation to them, we can approximate the horizontal distance necessary. This works because the planning algorithm only accounts for the leftmost or rightmost bounding box when making decisions, and because the closest obstacle will be detected at a range of around 0.2 m, we can choose a transformation such that the approximated horizontal distance is not too far off the true distance and the vehicle never collides with the obstacle.
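A minimal sketch of this idea, under stated assumptions: I take D1 to be the pixel offset from the image centre to the left edge of the box and D2 the offset to the right edge, and I map a pixel offset to metres with an affine function `scale * offset + margin`. The values of `scale_m_per_px` and `margin_m` below are placeholders, not measured constants; in practice they would have to be tuned on the car.

```python
def edge_offsets_px(x_min: float, x_max: float, image_width: int) -> tuple[float, float]:
    """Return (D1, D2): offsets from the image centre to the box edges.

    Assumes D1 is measured to the left edge and D2 to the right edge.
    An offset is negative if that edge lies on the other side of the centre.
    """
    centre = image_width / 2.0
    d1 = centre - x_min  # centre to left edge
    d2 = x_max - centre  # centre to right edge
    return d1, d2


def horizontal_distance_m(offset_px: float,
                          scale_m_per_px: float = 0.001,  # placeholder value
                          margin_m: float = 0.05) -> float:  # placeholder value
    """Affine map from a pixel offset to an approximate horizontal distance."""
    return scale_m_per_px * offset_px + margin_m


if __name__ == "__main__":
    d1, d2 = edge_offsets_px(x_min=200, x_max=500, image_width=640)
    print(d1, d2)
    print(horizontal_distance_m(d1), horizontal_distance_m(d2))
```

The constant term acts as a safety margin, so the approximation errs on the side of a slightly wider turn rather than clipping the obstacle.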

For next week, I will go back to object detection, continue experimenting with MobileNet on my laptop, and try to integrate MobileNet with the Intel RealSense camera.