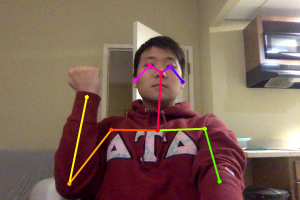

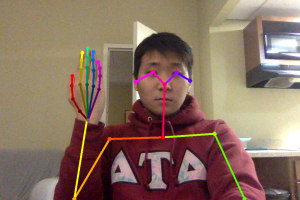

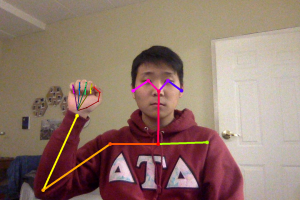

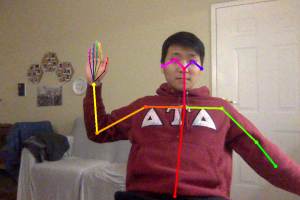

Hello from Team *wave* Google!

This week we focused on getting resettled and refocusing our project given the switch to remote capstone. Our project is mostly intact, with some small changes. We cut the physical enclosure, given that TechSpark is closing, but this was not an essential part of the project. We also eliminated live testing and will instead focus solely on video streams of gestures, which we hope to gather remotely by asking friends.

To facilitate remote capstone, we segmented our project into stages that each of us can work on remotely. We narrowed down the inputs and outputs of each stage so that no one has to rely on another person. For example, we determined that the input to OpenPose will be images and that the output will be the positional distances from the wrist point to each of the other points, as JSON, something that OpenCV will also output in the future. We also set up the Google Assistant SDK so that its text inputs and outputs work and are defined. These inputs and outputs will also be the inputs to our web application. This will allow us to do pipeline testing at each stage.
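As a rough illustration of that stage boundary, here is a minimal sketch of turning a set of keypoints into wrist-relative distances serialized as JSON. The function name `wrist_relative_features`, the field names, and the assumption that the wrist is at index 0 of a list of `(x, y)` pairs are all ours for illustration; the actual schema we settle on may differ, and this does not call OpenPose itself.

```python
import json
import math


def wrist_relative_features(keypoints, wrist_index=0):
    """Return a JSON string of offsets and distances from the wrist to every other point.

    keypoints: list of (x, y) pixel coordinates (hypothetical input format).
    wrist_index: position of the wrist in that list (assumed to be 0 here).
    """
    wx, wy = keypoints[wrist_index]
    features = []
    for i, (x, y) in enumerate(keypoints):
        if i == wrist_index:
            continue  # the wrist is the reference point, so skip it
        dx, dy = x - wx, y - wy
        features.append(
            {"point": i, "dx": dx, "dy": dy, "distance": math.hypot(dx, dy)}
        )
    return json.dumps({"wrist_index": wrist_index, "features": features})


if __name__ == "__main__":
    # Three made-up points: the wrist plus two others.
    sample = [(10.0, 10.0), (13.0, 14.0), (10.0, 20.0)]
    print(wrist_relative_features(sample))
```

Because each stage only needs to agree on a JSON shape like this, someone working on the web application can test against hand-written JSON files without waiting for the OpenPose or OpenCV stage to be finished.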

Finally, we decided to order another Jetson Nano, since we have enough budget. This eliminates another dependency, as OpenCV can be tested directly on the new Nano.

More detail on the refocused project is in our document on Canvas.

PS: We also wish our team member Sung a good flight back to Korea, where he will be working remotely for the rest of the semester.