Given spring break and coronavirus, I did not do much this week for capstone.

Carnegie Mellon ECE Capstone, Spring 2020 – Claire Ye, Jeffrey Huang, Sung Jun Hong

This week has been very unfortunate with all the midterms and projects I had due, so I wasn’t able to work on Capstone meaningfully. Aside from things I set in motion last week coming to fruition this week (e.g. requesting full access to Google Cloud Platform within andrew.cmu.edu), I didn’t really start anything new. I will be traveling during spring break, but here are some deliverable tasks that I can achieve remotely:

This week, we started going deeper into the machine learning aspect of things. After some experimentation with OpenPose on the Nano, it became abundantly clear that we should not run it locally if we want to meet our speed requirements. It’s good to know this early on: AWS EC2 is the only way forward if we want to keep our current design of using both OpenPose and OpenCV.

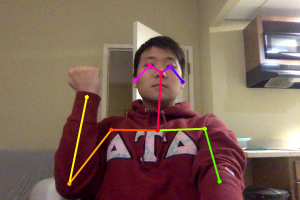

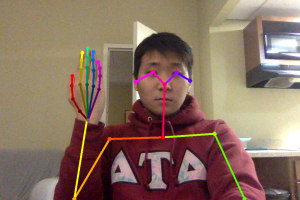

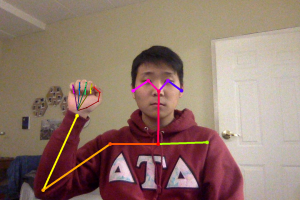

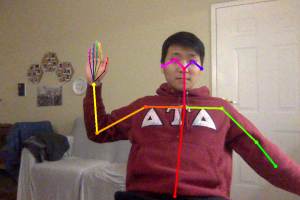

We also found out that OpenPose doesn’t recognize the back of the hand well, especially for gestures where the fingers are not visible (like a closed fist with the back of the hand facing the camera). We are going to re-map some of our gestures so that each gesture is, at minimum, recognized by OpenPose. This greatly reduces the risk of ending up with a gesture that is never recognized later on, or of needing to add more machine learning algorithms to the existing infrastructure.

(OpenPose can detect an open hand with its back to the camera, but not a closed fist with its back to the camera)

We are quickly realizing the limitations of the Nano and seriously considering switching to the Xavier. We are in contact with our advisor about this situation, and he is ready to order a Xavier for us if need be. Within the next two weeks, we can probably make a firmer decision on how to proceed. So far, only the CPU has shown serious limitations (overheating while running basic install commands, running OpenPose, etc.). Once OpenCV is installed and running, we can make a more accurate judgement.

This week I continued to work on the web application, working again on setting up the channel layer and web socket connections. I also decided to work more on setting up the Jetson Nano to run OpenPose and OpenCV, as finalizing the web application was less important than catching up to the gesture recognition parts of the project.

Getting OpenPose installed on the Jetson Nano was mostly smooth, but there were some hiccups along the way from errors in the installation guide, which I was able to solve with the help of some other groups that had installed it on the Xavier. I was also able to install OpenCV, which went smoothly. After installing OpenPose, I tried to get video streaming working to test the FPS we would get once we finally have our camera, but I had difficulties getting that set up. Instead, I just experimented with running OpenPose in a similar fashion to what Sung had been doing on his laptop. Initial results are not very promising, but I am not sure if OpenPose was making full use of the GPU.

Next week is spring break, so I do not anticipate doing much, but after break I hope to continue working on the Nano and begin the OpenCV + glove part.

Hello from Team *wave* Google!

This week we presented our design review (great job Claire!!) and worked on our design report. After the presentation, we received useful feedback regarding confusion matrices, which would be a useful addition to our existing design. We had already decided to measure the classification accuracy of each gesture individually, and by combining that information with a confusion matrix, we hope to achieve better results.
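
To illustrate how per-gesture accuracy and a confusion matrix fit together, here is a minimal sketch in plain Python. It is not our evaluation code: the gesture names and the sample predictions are made up for illustration only.

```python
from collections import Counter


def confusion_matrix(actual, predicted, labels):
    """Return matrix[i][j] = count of samples with true label i predicted as label j."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


def per_gesture_accuracy(matrix, labels):
    """Fraction of each true gesture that was classified correctly."""
    result = {}
    for i, label in enumerate(labels):
        total = sum(matrix[i])
        result[label] = matrix[i][i] / total if total else 0.0
    return result


# Hypothetical gesture names and results, for illustration only.
labels = ["fist", "palm", "peace"]
actual = ["fist", "fist", "palm", "palm", "peace", "peace"]
predicted = ["fist", "palm", "palm", "palm", "peace", "fist"]

matrix = confusion_matrix(actual, predicted, labels)
accuracy = per_gesture_accuracy(matrix, labels)

for label, row in zip(labels, matrix):
    print(f"{label:>6}: {row}  accuracy={accuracy[label]:.2f}")
```

The diagonal of the matrix gives the per-gesture accuracy we had already planned to measure, while the off-diagonal entries show which gestures get mistaken for which, something the accuracy numbers alone do not reveal.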

Another important piece of feedback goes along with one of the bigger risks of our project, and the one that has put us a bit behind schedule: hardware. This week all the hardware components that we ordered finally arrived, allowing us to fully access the Jetson Nano’s capabilities. We had already determined that OpenPose was unlikely to run successfully on the Nano given the performance other groups saw on the Xavier, and we have thus chosen to minimize the dependencies on the Nano by running OpenPose on a p2 EC2 instance instead. We should know much more confidently next week whether OpenCV will have acceptable performance on the Nano, and if not we will strongly consider pivoting to TK or Xavier.

Regarding the other component of the project, the Google Assistant SDK and Web Application, we have made good progress figuring out how to link the two using simple Web Sockets. We know that we can get the text response from Google Assistant and relay that information to the Web Application over a Web Socket connection. Further experimentation next week will determine in more detail the scope and capabilities of the Google Assistant SDK.

All in all, we are a bit behind schedule, which is exacerbated by Spring Break approaching. However, we still have a good amount of slack, and with clear tasks for next week we hope to make good progress before Spring Break.

This week, I did the Design Review presentation and worked on the report. I also spent a long time exploring the Google Assistant SDK and gRPC basics.

For the Google Assistant SDK, I got to the point where I was almost able to run the sample code on the Nano. I bumped into a lot of unforeseen permissions issues on the Nano, which took a few hours to resolve.

Now, I am stuck at the point where I need to register the device with Google Assistant, and despite a few hours of probing around I cannot get a good answer on why registration is failing. It seems like there is, again, a permissions issue. There are not too many online resources for debugging this because the use case is a little niche and Google’s tutorial for it is quite incomplete.

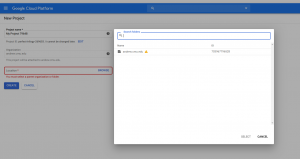

I have also contacted the school’s IT desk so I can create a project under my school Gmail account rather than my personal one. Creating it under the school’s Gmail would make my project “internal” within the CMU organization and let me skip some authentication steps later on in the process (i.e. having to provide proof of owning a website for terms and agreements). The IT desk and I are arranging additional permissions for my account so I can create actions with my Andrew email (CMU emails are normally denied that privilege).

For gRPC, I was able to run some code based on the samples. I think it has the potential to be very useful for communicating with either of the AWS servers we have. For the WebApp, it could pass along the results of a command so they can be displayed on screen.

For the deliverables next week, I will be completing the introduction, system specification, and project management sections of the design report. I will also continue working on the Google Assistant SDK samples on the Nano and try to get the issues resolved as soon as possible. I should also have a new project created on my school email instead by next week. Aside from that, I will be installing the WiFi card on to the Nano.

I was not able to do anything for Capstone this week. I was hit with 3 assignments from 15440 and even though I spread out all the work to be done by Friday, the project that was due on Friday was too much and I am currently using my late day and about to use my 2nd late day to finish this project. As soon as I finish this I will transition to Capstone and do the things I was supposed to this week.

This week, I worked on the Design Report. I also began making more progress on the web application, finalizing some design choices and creating a rough prototype.

The key design goal of the web application is to emulate the Google Assistant on the phone, which displays visual data for Google queries in a chat-style format. The “messages” would be the responses from the Jetson containing the information. We are still experimenting with the Google Assistant SDK to determine exactly what information is received, but at minimum it should include the verbal content that the Assistant usually states.

In addition, due to the nature of this application, it is important that the “messages” from the Jetson be updated in real time, i.e. without the need to constantly refresh the page for new messages to appear. To do this, I decided on using Django Channels, which allows asynchronous code and handles HTTP as well as Web Sockets. By creating a channel layer, consumer instances can then send information. The basic overall structure has been written, and I am currently finishing up the channel layer and experimenting with using simple Python scripts to send “messages” to our web application.
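
To make the idea concrete, here is a library-free sketch of the fan-out pattern that a channel layer provides: one sender pushes a message and every connected listener receives it without polling. This is not Django Channels code; `InMemoryGroup`, the message fields, and the message text are all hypothetical stand-ins built on `asyncio` queues.

```python
import asyncio


class InMemoryGroup:
    """Toy stand-in for a channel-layer group: fans each message out to all subscribers."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self):
        # Each subscriber (e.g. one open browser tab) gets its own queue.
        queue = asyncio.Queue()
        self._subscribers.append(queue)
        return queue

    async def send(self, message):
        # Deliver the same message to every subscriber.
        for queue in self._subscribers:
            await queue.put(message)


async def demo():
    group = InMemoryGroup()
    browser_a = group.subscribe()
    browser_b = group.subscribe()
    # Hypothetical message shape; the real payload depends on the Assistant response.
    await group.send({"sender": "jetson", "text": "hello"})
    return await browser_a.get(), await browser_b.get()


received = asyncio.run(demo())
print(received)
```

In the real application the queues would be replaced by Web Socket connections, so each browser sees new “messages” as soon as they are sent.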

This week, I was working on the design review in preparation for the design presentation. As such, a lot of time was devoted to thinking about our design decisions and whether or not they were the best way to approach our problem/project.

I was hesitant about using OpenCV and whether it could be accurate enough. We recognized this as a risk factor, so Jeff and I decided to use OpenPose as a backup and have it running alongside OpenCV. We realized that OpenPose takes up a lot of GPU power and would not work well on the Nano, given that the Jetson Xavier (which has about 8 times the GPU capabilities) managed only 17 fps with OpenPose video capture. As such, we decided to use AWS to run OpenPose, and I am in the process of setting that up. We have received AWS credit and we just need to see if AWS can meet our timing and GPU requirements.
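
A back-of-the-envelope version of that reasoning, assuming (optimistically) that OpenPose throughput scales linearly with GPU capability. The 17 fps and 8x figures come from the paragraph above; the linear-scaling assumption is only a rough guide, not a measurement.

```python
xavier_fps = 17.0  # OpenPose video capture rate reported on the Xavier
gpu_ratio = 8.0    # Xavier has roughly 8x the GPU capability of the Nano

# Assumption: throughput scales linearly with GPU capability.
estimated_nano_fps = xavier_fps / gpu_ratio
seconds_per_frame = 1.0 / estimated_nano_fps

print(f"Estimated Nano rate: {estimated_nano_fps:.1f} fps "
      f"({seconds_per_frame:.2f} s per frame)")
```

Under that assumption the Nano would manage only about 2 fps, which is why running OpenPose remotely looked like the safer choice.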

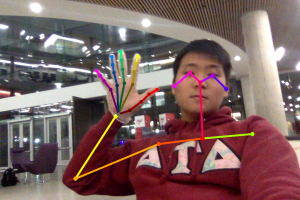

Our initial idea revolves around a glove that we use to track joints. We were originally thinking of a latex glove with the joint locations marked with a marker, but we worried that the glove would interfere with OpenPose tracking. We tested this and found that OpenPose is not hindered by the glove, as shown in the picture below.

This week, I have to make a glove joint tracker with OpenCV. I’ve installed OpenCV and have been messing around with it, but now I will have to implement a tracker that gives me a list of joint locations. This will probably be a really challenging part of the project, so stay tuned for next week’s update!

Hello from Team *wave* Google!

This week, we worked a lot on finalizing our idea/concepts in preparation for the design review. Initially, we were planning on only using OpenCV and having users wear a glove to track joints. However, we weren’t sure how reliable this would be, so to mitigate the risk of misclassifying or not classifying at all, we added a backup: running OpenPose to get joint locations and using that data to classify gestures. With this new approach, we will have OpenCV running on the Nano and OpenPose running on AWS. Gestures in the form of video will be split into frames, and those frames will be tracked with glove tracking on the Nano and OpenPose tracking on AWS. If we don’t get a classification from OpenCV on the Nano, we will use the result from OpenPose on AWS to classify our gestures.
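
The fallback rule described above can be sketched in a few lines. This is only an illustration under assumptions: the function name, the use of `None` to mean "no classification", and the gesture strings are all hypothetical.

```python
from typing import Optional


def choose_gesture(nano_result: Optional[str], aws_result: Optional[str]) -> Optional[str]:
    """Prefer the OpenCV result from the Nano; fall back to the OpenPose result from AWS.

    None means that path produced no classification.
    """
    if nano_result is not None:
        return nano_result
    return aws_result


# Hypothetical gesture names, for illustration only.
print(choose_gesture("palm", "fist"))  # Nano classified the frame, so its result wins
print(choose_gesture(None, "fist"))    # Nano gave nothing, so the AWS result is used
print(choose_gesture(None, None))      # neither path classified the frame
```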

We wanted to see how fast OpenPose could run, since how quickly we can classify gestures is one of our requirements. On a MacBook Pro, we achieved 0.5 fps with video, and tracking one image took around 25-30 seconds. OpenPose on the MacBook Pro was running on a CPU, whereas it would run on a GPU on the Nvidia Jetson Nano. Even so, the fact that it took 25-30 seconds on a CPU to track one image meant that OpenPose might not meet our timing requirements. As such, we decided to use AWS to run OpenPose instead. This should mitigate the risk of classification with OpenPose being too slow.

Another challenge we ran into was processing dynamic gestures, which would require video recognition. We researched online and found that most video recognition algorithms rely on 3D-CNNs because of the higher accuracy they provide compared to 2D-CNNs. However, given that we need fast response/classification times, we decided not to do dynamic gestures, as we thought they would be hard to implement within our time constraints. Instead, we decided to have a set of static gestures and only do recognition on those.

We’ve also modified our Gantt Chart to reflect the changes in our design choices, especially in the gesture recognition aspect of our project.

Next week, we are going to run OpenPose on AWS and start the feature extraction with the results we get from OpenPose tracking so that we can start training our model soon.
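
As a rough idea of what that feature extraction could look like, here is a minimal sketch that turns hand keypoints into a position- and scale-independent feature vector. It assumes each keypoint is an `(x, y, confidence)` triple with the wrist first, which matches how OpenPose reports hand keypoints; the normalization itself is just one common option we might try, and the function name and sample coordinates are hypothetical.

```python
import math


def normalize_hand_keypoints(keypoints):
    """Convert (x, y, confidence) keypoints into a flat, normalized feature vector.

    Assumes keypoints[0] is the wrist. Coordinates are shifted so the wrist is
    the origin and scaled so the farthest keypoint is at distance 1.
    """
    wrist_x, wrist_y, _ = keypoints[0]
    centered = [(x - wrist_x, y - wrist_y) for x, y, _ in keypoints]
    # Guard against a degenerate hand where every point sits on the wrist.
    scale = max(math.hypot(dx, dy) for dx, dy in centered) or 1.0
    features = []
    for dx, dy in centered:
        features.extend((dx / scale, dy / scale))
    return features


# Three made-up keypoints (a real hand has 21), for illustration only.
sample = [(100.0, 100.0, 0.9), (103.0, 104.0, 0.8), (106.0, 108.0, 0.7)]
features = normalize_hand_keypoints(sample)
print(features)
```

Normalizing this way means the same gesture should produce similar features whether the hand is near or far from the camera, which should make the classifier easier to train.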