This week, I prepared for the demo, but the demo did not go as well as I had hoped. I assumed that because the classifier worked well when tested separately, it would work just as well once integrated. My testing accuracy for the classifier was about 97%, so I was expecting high accuracy, but that did not show during the demo. Since the demo I have been trying to fix that, and it is still my goal for the coming week.

Month: April 2020

Jeff’s Status Report For 4/04

Hello from home!

This week, I focused on getting AWS set up and hosting our web application there, switching from purely local hosting. The AWS setup took a while because I had to figure out how to get the credentials, but the web application should now be hosted, and I am experimenting to confirm that all the features still work, i.e. that we can still connect via websockets and send data through. In addition, I’m continuing to tinker with the UI/UX, adding features like parsing the message to add visual elements such as emojis to the output, which is currently text only.
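As a rough illustration of the message parsing idea, here is a minimal sketch in Python. The keyword table and the `decorate_message` function are hypothetical names made up for this example; the report does not say which keywords or emojis the web application actually uses.

```python
# Hypothetical keyword-to-emoji table; the real mapping is not given in the report.
EMOJI_BY_KEYWORD = {
    "weather": "\u2600\ufe0f",   # sun
    "music": "\U0001F3B5",       # musical note
    "timer": "\u23F0",           # alarm clock
}


def decorate_message(message):
    """Append an emoji for each known keyword found in the text message."""
    # Compare on lowercase words with trailing punctuation stripped.
    words = {word.strip(".,!?").lower() for word in message.split()}
    emojis = [emoji for keyword, emoji in EMOJI_BY_KEYWORD.items() if keyword in words]
    if not emojis:
        return message
    return message + " " + " ".join(emojis)
```

Messages without a known keyword pass through unchanged, so the text-only output keeps working while the visual elements are added.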

In addition, for the OpenCV part, after some further investigation and talking it over with Sung, we decided that OpenCV would be better suited to normalizing images for Sung’s image neural network classifier. Even after adding different patterns and colors as suggested during last week’s meeting, we realized that OpenCV with a glove simply did not have the capability to reproduce OpenPose’s feature extraction by itself. Too often the markers were covered by the gesture itself, and without the ability to “infer” where the hidden markers were, OpenCV could not reproduce the same feature list as OpenPose, which would have required a new classifier. Instead, we are using OpenCV just to extract the hands and normalize each image so that it can be passed to the classifier. I have worked on extracting the hands, and I am continuing to work on normalizing the image, first by using the glove with a marker to standardize the image, and hopefully eventually without a glove.
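To make the "extract the hand, then normalize" step concrete, here is a minimal sketch in plain Python on nested lists, so it runs without any libraries. It is not our OpenCV code: the function names, the binary hand mask (assumed to come from something like thresholding the glove color), and the output size are all assumptions for illustration.

```python
def crop_to_mask(image, mask):
    """Crop a grayscale image (list of rows) to the bounding box of nonzero mask pixels."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows or not cols:
        raise ValueError("mask contains no hand pixels")
    top, bottom = rows[0], rows[-1]
    left, right = cols[0], cols[-1]
    return [row[left:right + 1] for row in image[top:bottom + 1]]


def resize_nearest(image, size):
    """Resize to size x size using nearest-neighbour sampling."""
    height, width = len(image), len(image[0])
    return [
        [image[r * height // size][c * width // size] for c in range(size)]
        for r in range(size)
    ]


def normalize_hand(image, mask, size=64):
    """Crop to the hand, resize to a fixed square, and scale pixels to [0, 1]."""
    resized = resize_nearest(crop_to_mask(image, mask), size)
    return [[pixel / 255 for pixel in row] for row in resized]
```

The point of the fixed output size and the [0, 1] scaling is that every image reaching the classifier has the same shape and value range, regardless of where the hand was in the frame.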

Team Status Report for 04/04

Hello.

This week, we continued to work on our respective parts of the project based on the individualized Gantt chart. Everything is going well, and we plan on reconvening next week to “integrate” remotely. We are sharing our progress with each other via GitHub, so that we can take information from each other as needed. We started collecting more data for our hand gestures through social media, but we did not get many replies. Hopefully, next week we will get more submissions.

Claire’s Status Report for 4/4

Hello! This week, I did some tweaking with the testing. To start, I am now using around 20 fps for the testing video. I am still changing some parameters, but I think I am going with this particular setup.

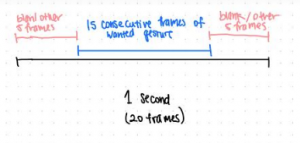

I want to switch away from the approach I was using before, where one gesture cut straight to the next, to better simulate the live testing that I talked about with Marios. If we go for 20 frames per second (which we might tweak depending on how good the results are, but we can go up to 28 fps if necessary), I want at least 3/4 of the frames to be consecutive frames of the gesture I am trying to get the Jetson to read. The five frames before or after can be either blank or another gesture. That way, it should be guaranteed that there will never be more than 15 consecutive frames of one thing at any point in time. Obviously, real-life testing would have more variables; for example, the gesture might not be held consecutively. But I think this is a good metric to start with.
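Here is a minimal sketch of that frame layout in Python, reading the numbers above as 15 gesture frames followed by 5 gap frames for each second of video at 20 fps. The function names and gesture labels are hypothetical, and this only builds the sequence of labels, not the video itself.

```python
import random

FPS = 20                        # frames per second of the testing video
HOLD_FRAMES = 15                # 3/4 of each second shows the target gesture
GAP_FRAMES = FPS - HOLD_FRAMES  # remaining 5 frames are blank or another gesture


def build_frame_labels(gestures, distractors=("blank",), seed=0):
    """Return one label per frame: each gesture held for HOLD_FRAMES, then a gap."""
    rng = random.Random(seed)
    labels = []
    for gesture in gestures:
        labels.extend([gesture] * HOLD_FRAMES)
        # Fill the gap with blank frames (or other gestures, if supplied).
        labels.extend(rng.choice(distractors) for _ in range(GAP_FRAMES))
    return labels


def longest_run(labels):
    """Length of the longest run of identical consecutive labels."""
    best = run = 0
    previous = object()  # sentinel that never equals a real label
    for label in labels:
        run = run + 1 if label == previous else 1
        previous = label
        best = max(best, run)
    return best
```

With only blank frames in the gaps, `longest_run` stays at 15. Once other gestures are allowed as distractors, a gap frame could match the gesture next to it and stretch a run, so that check is worth keeping in the test setup.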

Here is a clip of a video that has the incorporated “empty space” between gestures.

From this video you can see the small gaps of blank space between each gesture. At this point I haven’t incorporated other error gestures into it yet (and I want to think more about that). I think this is pretty much how we would do live testing in a normal scenario: the user holds up the gesture for around a second, quickly switches to the next one, etc.

Next week, I plan on getting the AWS situation set up. I need some help with learning how to communicate with it from the Jetson. As long as I am able to send and receive things from AWS, I would consider it a success (OpenPose goes on it, but that is within Sung’s realm). I also want to test out the sampling for the camera and see if I can adjust it on the fly (e.g. some command -fps 20 to get it sampling at 20 fps and sending those frames directly to AWS).

Sung’s Status Report for 04/04

Hello World!

This week, I worked on getting the 2D convolutional neural network working for the project. I’ve created a GitHub repo where I’ve put everything I’ve done so far.

https://github.com/mrfrosty0430/WaveGoogle

I also collected a lot more data, and currently I have about 5000 images.