This week our backend underwent a refactoring due to limitations surrounding certain elements of the frontend that we wanted to use, and also due to latency concerns. Besides that, I spent time this week finishing up the filter select menu (backend and frontend) as well as testing the code for latency.

Michael’s Status Report 8

This week, I helped Jason implement real-time gyroscope-controlled sound effects and continued my work on integrating the code. During integration, I started with the backend part of the controller portion of our MVC in Python, but because the code was expanding and getting more complicated so quickly, we decided to refactor it into a clean design pattern. Our project's operation flows between two main modes: play mode and edit mode. In play mode, which is the default, the VR inputs are processed and used for sound output and effects toggling. In edit mode, VR controller inputs are used to navigate a series of menus for selecting instruments, changing filters, adjusting filter parameters, etc. Jeffrey is working on the frontend so that the user can interface directly with the website using their remotes. At the moment, I am continuing work on the menu for selecting and changing the filter sets.
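The two-mode flow can be sketched as a small state machine. This is only an illustration of the idea, not our actual controller code: the `Mode`, `Controller`, `toggle_mode`, and `handle` names are hypothetical, and the real handlers do far more than record where an event was routed.

```python
from enum import Enum


class Mode(Enum):
    PLAY = "play"
    EDIT = "edit"


class Controller:
    """Routes VR controller events according to the current mode (hypothetical sketch)."""

    def __init__(self):
        self.mode = Mode.PLAY  # play mode is the default
        self.log = []

    def toggle_mode(self):
        """Switch between play mode and edit mode."""
        self.mode = Mode.EDIT if self.mode is Mode.PLAY else Mode.PLAY

    def handle(self, event):
        """Send the event to sound handling (play) or menu navigation (edit)."""
        target = "sound" if self.mode is Mode.PLAY else "menu"
        self.log.append((target, event))
        return self.log[-1]
```

Keeping the mode in one place means each input handler only has to ask which mode is active, rather than tracking menu state itself.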

Michael’s Status Report 7

This week our team decided to pivot away from using peak detection for note separation and to utilize different sensor data mapped to certain effects instead. As a result, I’ve spent this week working on setting up the gyroscope data to be processed by the tone library. Besides that, I have been working with Jason on implementing effects that have tunable parameters that we can map to motion data.

Michael’s Status Report 6

This week I continued integrating my VR controller code with the sound output code and kept tuning the gesture detection. With regard to integration, I am now able to trigger sounds using just the VR controller. As of now, sounds are hardcoded and will be more usable once integrated with the smartphone. I found that the latency from controller to audio was not as good as we wanted, but after trials with Jeffrey and Jason’s setups, we discovered that the latency was due to my laptop itself. They measured the latency on their systems to be around 40 ms, which was within our requirements.

Team Status Report

Updated Gantt Chart

https://docs.google.com/spreadsheets/d/1f5ktulxfieisyMuqV76F8fRJTVERRSck8v7_xr6ND80/edit?usp=sharing

Control Process

Selecting Notes

The desired note can be selected from the smartphone controller by holding a finger down on the note grid. The eight notes on the grid are the solfege degrees of whichever key and tonality (major or natural minor) is selected from the smartphone menu. For example, selecting the key of C Major produces a grid with the following notes: C, D, E, F, G, A, B, C.
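As a rough sketch of how such a grid could be generated, the snippet below builds the eight notes from a key and tonality using semitone offsets. The `note_grid` function and both tables are hypothetical names for illustration, and for simplicity every accidental is spelled as a sharp.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Semitone offsets from the root for the eight grid positions (root to octave).
SCALE_STEPS = {
    "major": [0, 2, 4, 5, 7, 9, 11, 12],
    "natural_minor": [0, 2, 3, 5, 7, 8, 10, 12],
}


def note_grid(key, tonality="major"):
    """Return the eight note names shown on the grid for a key and tonality."""
    root = NOTE_NAMES.index(key)
    return [NOTE_NAMES[(root + step) % 12] for step in SCALE_STEPS[tonality]]


print(note_grid("C", "major"))
```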

Octave Shift

To shift the range of notes in the smartphone note grid up or down an octave, swipe the thumb right or left on the touchpad of the VR controller (in the right hand). Swiping right once would denote a single octave shift up, and swiping left would denote an octave shift down.

Chromatic Shift

To select a note that may not be in the selected key and tonality, the user can utilize the chromatic shift function. This is done by holding your right thumb on the top or bottom of the VR controller touchpad (without clicking down). Holding up would denote a half step shift up, and holding down would denote a half step shift down. For example, playing an E-flat in the key of C Major would involve selecting the “E” note in the left hand and holding the thumb down on the right hand touchpad. The same note can also be achieved by selecting “D” and holding the thumb up on the touchpad.
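Both shifts amount to simple offsets on a note number. The sketch below assumes notes are represented as MIDI note numbers (E4 = 64, D4 = 62), which is an assumption about the representation rather than a description of our code; `shifted_midi_note` is a hypothetical helper.

```python
def shifted_midi_note(base_midi, octave_shift=0, chromatic_shift=0):
    """Apply octave shifts (12 semitones each) and a half-step shift (-1, 0, or +1)."""
    return base_midi + 12 * octave_shift + chromatic_shift


E4, D4 = 64, 62
# E shifted down a half step and D shifted up a half step land on the same pitch.
print(shifted_midi_note(E4, chromatic_shift=-1), shifted_midi_note(D4, chromatic_shift=+1))
```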

Triggering a Note

To trigger the start of a selected note, pull and hold down the trigger on the VR controller. The selected note will play for as long as the trigger is held down, and any additional notes selected with the left hand while the trigger is held will sound as soon as they are selected. If no notes are selected while the trigger is pulled, no sound is output.

Note Separation

If the user wishes to divide a selected note into smaller time divisions, there are two options:

- Release and pull the trigger repeatedly

- Use motion to denote time divisions

The system recognizes a change in controller direction as a time division. For example, to subdivide held note(s) into two subdivisions, one would initialize the note with a trigger press and initiate the subdivision with a change in controller motion. The same outcome can be accomplished with just repeatedly pulling the trigger.
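One simple way to recognize a change in direction is to watch for sign flips in the motion signal along an axis, ignoring small values near zero. This is a minimal sketch of that idea, not our detection code: `count_direction_changes` and the `deadband` value are hypothetical, and the real signal would need tuning.

```python
def count_direction_changes(samples, deadband=0.5):
    """Count sign flips in a 1-D motion signal, skipping values inside the deadband."""
    changes = 0
    last_sign = 0
    for value in samples:
        if abs(value) < deadband:
            continue  # treat small readings as jitter, not motion
        sign = 1 if value > 0 else -1
        if last_sign != 0 and sign != last_sign:
            changes += 1  # direction reversed: one time division
        last_sign = sign
    return changes
```

Each counted reversal would correspond to one time division of the held note.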

Polyphony

Polyphony can be simply achieved by holding down multiple notes on the smartphone grid while the trigger is pressed.

Toggling Effects

Four different effects can be toggled by clicking on any of the four cardinal directions on the VR controller touchpad.
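A sketch of how a touchpad click could be mapped to one of the four directions is shown below. It assumes click coordinates are centred on (0, 0) with positive y pointing up, and the effect names are placeholders only, since the effects themselves are not fixed here; all function and table names are hypothetical.

```python
# Hypothetical effect names; the actual effect set is not specified.
EFFECT_BY_DIRECTION = {
    "up": "reverb",
    "right": "delay",
    "down": "distortion",
    "left": "tremolo",
}


def cardinal_direction(x, y):
    """Classify a touchpad click at (x, y), with (0, 0) at the pad centre."""
    if abs(x) >= abs(y):
        return "right" if x >= 0 else "left"
    return "up" if y > 0 else "down"


def toggle_effect(active, x, y):
    """Flip the effect under the click on or off and return its name."""
    name = EFFECT_BY_DIRECTION[cardinal_direction(x, y)]
    active.symmetric_difference_update({name})
    return name
```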

Updated Risk Management

In response to the current virtual classroom situation and progress with our project, the risk has changed a bit.

For the phone grip, it doesn’t seem very feasible to build it in the manner we had originally intended. We had planned to build the grip with a combination of laser cutters and maker space materials. Instead, we have decided on a simpler approach for attaching the phone to the user’s left hand: a velcro strap that goes around the user’s hand, with the other end of the velcro attached to the back of the phone.

Another area of risk we found was the limitations of the JavaScript package we are currently using to generate sound. While the library has many features, such as accurate pitch selection and instrument selection, some features we wanted are missing. One of these is the ability to pitch bend. A workaround we have brainstormed is to use a Python library that does support pitch bending. We could run this on our Flask server in parallel with the frontend JavaScript to get the features we want from both libraries.

Michael’s Status Report 5

This week I successfully implemented a working algorithm for peak detection. I first implemented solely the dispersion based algorithm, but it was too sensitive and would detect peaks even when I would hold the controller still. After tuning the parameters a bit and adding some more logic atop the algorithm, I was able to pretty consistently detect peaks with realistic controller motion. I still have work to do in further tuning and testing in this regard.
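For reference, one common dispersion-based scheme is the smoothed z-score: a sample counts as a peak when it lies more than a set number of standard deviations from the mean of a sliding window. The sketch below is an assumption about the general approach, not my exact code. The class name, parameter values, and the `min_std` floor (one possible piece of extra logic to avoid false peaks when the controller is still and the window's deviation collapses toward zero) are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


class ZScorePeakDetector:
    """Streaming smoothed z-score peak detector (illustrative sketch)."""

    def __init__(self, lag=10, threshold=3.5, influence=0.3, min_std=0.5):
        self.lag = lag              # number of past samples in the window
        self.threshold = threshold  # z-score needed to call a peak
        self.influence = influence  # how much a peak affects later statistics
        self.min_std = min_std      # floor so a still controller is not flagged
        self.window = deque(maxlen=lag)

    def update(self, value):
        """Return 1 for a positive peak, -1 for a negative peak, else 0."""
        if len(self.window) < self.lag:
            self.window.append(value)  # still filling the window
            return 0
        avg = mean(self.window)
        std = max(pstdev(self.window), self.min_std)
        if abs(value - avg) > self.threshold * std:
            signal = 1 if value > avg else -1
            # Damp the peak before storing it so it does not skew the window.
            filtered = self.influence * value + (1 - self.influence) * self.window[-1]
        else:
            signal = 0
            filtered = value
        self.window.append(filtered)
        return signal
```

Because `update` takes one sample at a time, it fits data that arrives point by point from the controller.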

I also started integrating the gesture detection code with Jason’s work with MIDI output. This is in progress as of now.

Michael’s Status Report 4

In light of the recent events of COVID-19, we have been spending this week setting up our project to work from three remote settings. The main setback from social distancing is that we no longer have access to the campus labs or facilities, which we usually use to work together in person. We had also planned on fabricating a custom grip for our smartphone by 3D-printing the parts in the facilities that are now closed. Instead, we are using a more makeshift approach involving velcro straps to secure the smartphone to the hand. However, most of our project is software-based and we all have the required parts to get the platform working on our own setups, so no further refocusing was required for the rest of our project.

During this week and the following, I plan on exploring the different algorithms for peak detection found in the paper from my last post. So far, I have altered the logging tool used for my data collection so that its output can be used by the different peak detection algorithms on the Python side of things. Jeffrey is going to be implementing the dispersion-based algorithm for peak detection concurrently alongside me.

Michael’s Status Report 3

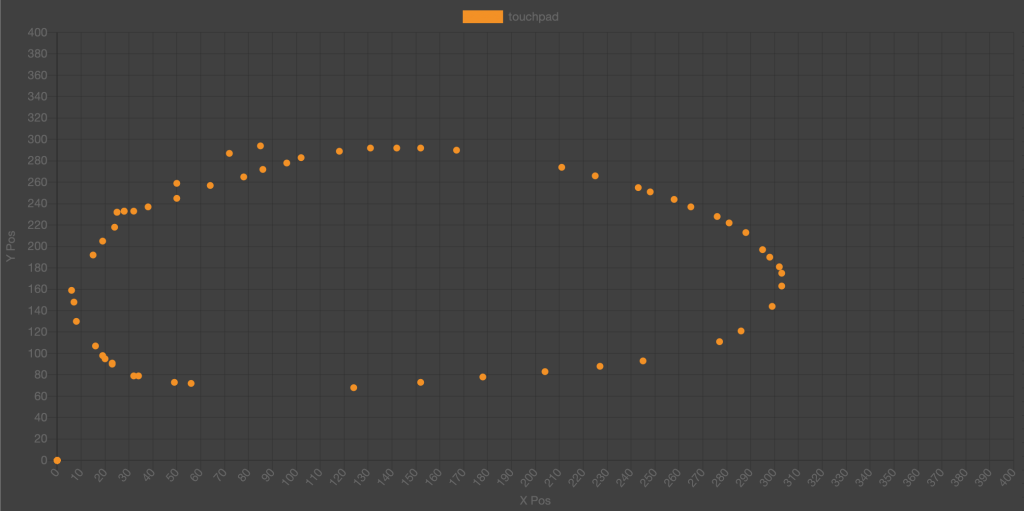

This week I continued working on the sensor data visualization tool. I was able to extend it to the gyroscope and touchpad sensors. Drawing a circle on the touchpad (without pressing down on the buttons) gave this output:

Some further calibration would be needed if we wanted to fine-tune spatial detection on the touchpad, but for the purposes of swipes, it worked rather well. I began working on finding gestures from these datasets and decided to continue working on the tool as needed. With gestures, I was able to see distinct peaks and troughs in acceleration values whenever I made a sharp movement indicative of a note change. The main issue was that the data was coming in one point at a time, so we would need to find a way of analyzing real-time time series data.

I found an article on doing this, and will try implementing a similar sliding window algorithm.

https://pdfs.semanticscholar.org/1d60/4572ec6ed77bd07fbb4e9fc32ab5271adedb.pdf

Michael’s Status Report 2

This week, the team decided to pivot to the web-bluetooth approach using a Flask server. Using this method, we were able to easily connect to the GearVR controller, enable notifications, and write commands to characteristics. With this, I started working on a javascript tool for us to visualize GearVR sensor values received in the notification buffer.

I found a javascript chart-creation package named Chart.js which could make different types of plots based on JSON data. Since our project should only register motion and play notes when the trigger is held on the VR controller, I decided to case on the trigger-on notification during the stream of real-time notification buffers. I tried parsing and plotting for the acceleration values first. Once the trigger was pressed, my code would start logging the sensor values, and would plot the x, y, and z values on a line chart once the trigger was released.
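The trigger-gated logging can be sketched as follows. The tool itself was written in JavaScript; this version is in Python purely for illustration, and the `TriggerLogger` class and its `on_notification` signature are hypothetical. It assumes each notification has already been parsed into a trigger flag and an (x, y, z) acceleration tuple.

```python
class TriggerLogger:
    """Collects acceleration samples only while the trigger is held (sketch)."""

    def __init__(self):
        self.recording = False
        self.samples = []

    def on_notification(self, trigger_down, accel_xyz):
        """Return the finished recording on trigger release, otherwise None."""
        if trigger_down:
            if not self.recording:
                self.recording = True
                self.samples = []  # start a fresh recording on each press
            self.samples.append(accel_xyz)
            return None
        if self.recording:
            self.recording = False
            return self.samples  # ready to be plotted
        return None
```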

In this case, the y-axis is in m/s^2 and the x-axis is in “ticks”, which is approximately .015 seconds (as the average notifications per second was 68.6 notifications). Orange is x, blue is y, and green is z.
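The tick length follows directly from the notification rate: one tick is 1 / 68.6 ≈ 0.0146 seconds. A small helper (hypothetical name, with the measured average rate as its default) converts a tick count to seconds:

```python
def ticks_to_seconds(ticks, notifications_per_second=68.6):
    """Convert a count of notification ticks to elapsed seconds."""
    return ticks / notifications_per_second


print(round(ticks_to_seconds(1), 4))
```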

Michael’s Status Report 1

This week I worked on connecting to the GearVR controller via Python. This would use the laptop as the central device in the Bluetooth Low Energy (BLE) connection, with the controller as the peripheral device.

I tested out several different Python packages that would meet the following requirements:

- Scan for nearby BLE devices and view peripheral services and characteristics

- Connect to device

- Read and write to characteristics

- Enable and receive notifications from device

I ended up choosing a package named Bleak (https://bleak.readthedocs.io/en/latest/). From there, I was able to scan for the GearVR and view the available services. The usual services found in most bluetooth devices were listed, such as battery life and device info, but there was one service with an un-stringified hex. I assumed that was where the sensor related characteristics were located.
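A scan along these lines might look like the sketch below, which uses Bleak's `BleakScanner.discover` and `BleakClient`. The `"Gear VR"` name fragment is an assumption about the advertised device name, and `is_standard_uuid` and `list_services` are hypothetical helpers. Standard services use UUIDs built on the Bluetooth base UUID, so anything else is likely vendor-specific, which is how a service like the one with the raw hex would stand out.

```python
BLUETOOTH_BASE_SUFFIX = "-0000-1000-8000-00805f9b34fb"


def is_standard_uuid(uuid):
    """True for 16-bit UUIDs expanded on the Bluetooth base UUID."""
    uuid = uuid.lower()
    return uuid.startswith("0000") and uuid.endswith(BLUETOOTH_BASE_SUFFIX)


async def list_services(name_fragment="Gear VR", timeout=5.0):
    """Scan for the controller and print each service it exposes."""
    # Imported here so the helper above can be used without bleak installed.
    from bleak import BleakClient, BleakScanner

    devices = await BleakScanner.discover(timeout=timeout)
    # The advertised name is an assumption; adjust name_fragment as needed.
    target = next((d for d in devices if d.name and name_fragment in d.name), None)
    if target is None:
        print("controller not found")
        return
    async with BleakClient(target.address) as client:
        for service in client.services:
            kind = "standard" if is_standard_uuid(service.uuid) else "vendor-specific"
            print(service.uuid, service.description, kind)


# To run (needs bleak and a nearby controller):
#   import asyncio; asyncio.run(list_services())
```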

The next step was to connect to the controller. Using my laptop, I was only able to stay connected for approximately 20-30 seconds before the controller dropped the connection. I also tried writing code to enable notifications and write commands to characteristics while the connection was stable. The final blow came when enabling notifications: I would get a BLE error code that turned out to be a system error from my operating system. After doing some research online, we found out that the controller was specifically designed to be connected to Samsung products through their application, so my Windows system's attempts to enable BLE notifications were being denied.

I tried another package, PyBluez, which also failed due to the same error. The direct bluetooth connection approach with the laptop wasn’t an option for this particular controller.