Team Status

This week was heavy on solidifying our final design and beginning work on some of the core components of the project. Our current development strategy is to build skeleton modules for each component of the overall system first, integrate these modules, and then iteratively improve each module individually. By skeleton module, we mean a barebones implementation of the component such that it can interface with other components in the same way as the final product, but may not have fully developed internals.
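As a rough illustration of what we mean by a skeleton module, the sketch below fixes an interface first and plugs in a placeholder behind it. The names `SpeakerVerifier`, `SkeletonVerifier`, and `handle_login` are hypothetical and chosen only for this example; they are not taken from our codebase.

```python
from abc import ABC, abstractmethod


class SpeakerVerifier(ABC):
    """Hypothetical interface that the rest of the system codes against."""

    @abstractmethod
    def verify(self, user_id: str, audio: bytes) -> bool:
        """Return True if the recording is accepted for this user."""


class SkeletonVerifier(SpeakerVerifier):
    """Barebones stand-in: same interface, no real model behind it yet."""

    def verify(self, user_id: str, audio: bytes) -> bool:
        # Placeholder rule (an assumption for this sketch):
        # accept any non-empty recording.
        return len(audio) > 0


def handle_login(verifier: SpeakerVerifier, user_id: str, audio: bytes) -> str:
    # The caller depends only on the interface, so a trained model
    # can replace the skeleton later without changing this function.
    return "accepted" if verifier.verify(user_id, audio) else "rejected"
```

Because `handle_login` only sees the interface, the integration work can proceed while the internals of each module are still being developed.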

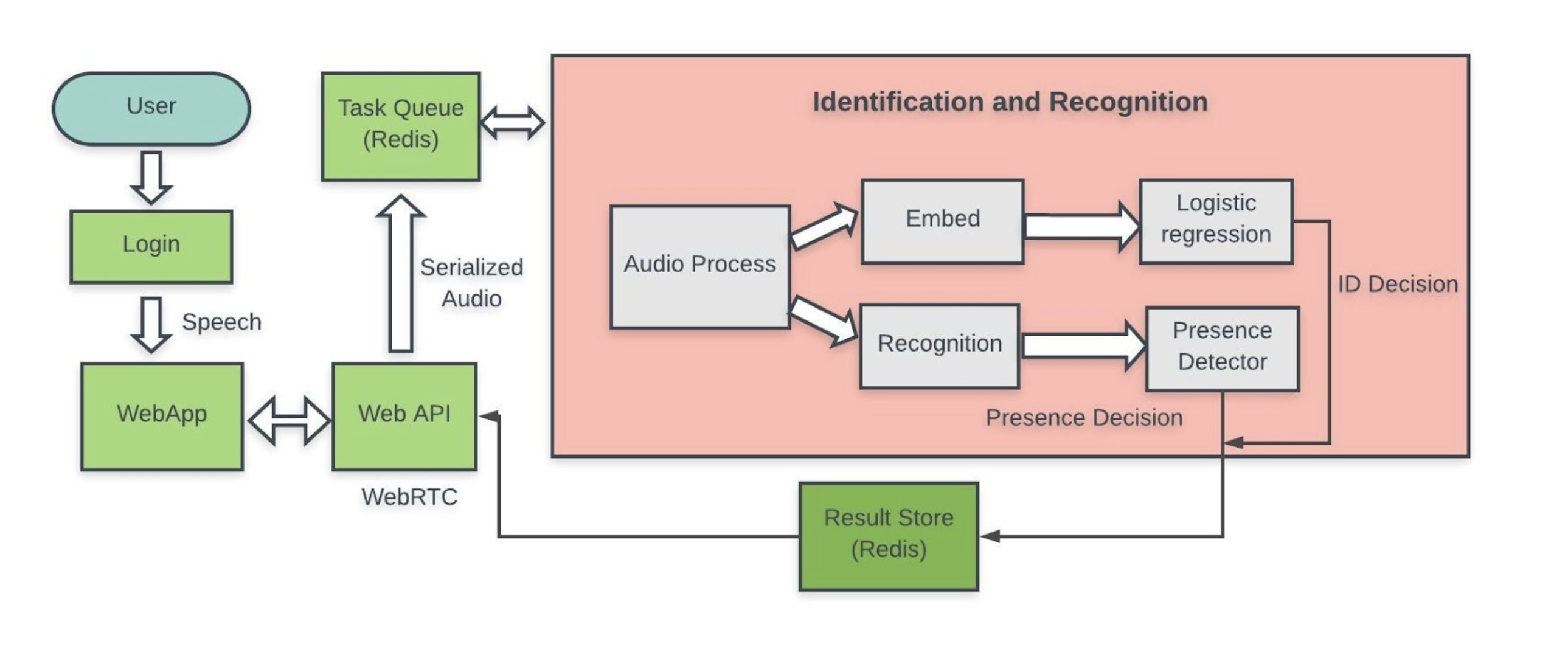

On that note, we finished the design of the backend system this week. The major design decisions in this finalization were settling on a Redis-based queueing system (using a Python library aptly called Redis Queue) to manage background processing tasks, and choosing a remote MySQL instance to maintain the state of the system. The goal is to have a robust backend application that can be deployed and torn down on AWS by running a script. A baseline version of the system will be tested on AWS by the end of the coming week.
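To make the queueing idea concrete, here is a minimal sketch of how a background task might be handed to Redis Queue. The task `verify_recording`, the helper `enqueue_verification`, and the queue name `verification` are assumptions made for illustration; the sketch also assumes the `redis` and `rq` packages are installed and a Redis server is reachable.

```python
def verify_recording(user_id, audio_path):
    """Hypothetical background task; a real one would run the model."""
    return {"user_id": user_id, "audio_path": audio_path, "status": "processed"}


def enqueue_verification(user_id, audio_path, redis_host="localhost"):
    """Put one verification job on a Redis-backed queue.

    Imports are local so the task above can be used without Redis
    being installed (an arrangement chosen only for this sketch).
    """
    from redis import Redis
    from rq import Queue

    queue = Queue("verification", connection=Redis(host=redis_host))
    # enqueue() stores a reference to the function plus its arguments;
    # a separate worker process picks the job up and runs it.
    return queue.enqueue(verify_recording, user_id, audio_path)
```

In this arrangement the web app would call `enqueue_verification`, while a worker started with `rq worker verification` on the processing machine would execute the task. The task function needs to live in a module that the worker can import.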

The most significant risk we currently face is showing that the performance of our models on external data sets transfers to our own data, which we need in order to demonstrate that the system works at demo time. For this reason, we previously listed data collection as an important task, and we are currently behind on its timeline. To catch up, we are spinning up a web app on AWS this weekend that presents users with a text prompt, which they read while the application records them. At the end of the recording, we write the audio file and the user ID into a database. We plan to collect this data over the next week and over break, which should get us back on track. Because the web application can be used anywhere, we will be able to collect data remotely.

Team Member Status

Richa

This week I spent a lot of time thinking about how to design the backend cloud system. I did some research about how to use Redis as a data store.

For the backend of the cloud system, the final design has one EC2 instance for the web app, connected through Redis to a second EC2 instance that hosts the GPU. Since each login and registration will need access to all the data in the database (for each login, the voice recording will be compared to n binaries for n users), we decided that both EC2 instances should have direct access to the database.

I also spent some time debugging the AJAX call that passes the voice recording from the JavaScript in the HTML page to the Django views and stores it in a Django model object. I am currently working on creating an EC2 instance for the web app and switching the database from SQLite to MySQL.

Ryan

This week I:

- Developed and trained a bi-pyramidal LSTM model for speaker verification. I hoped that imposing temporal structure on the network and using a base architecture that is popular for speech recognition would help performance. The model did not perform as well as hoped on Mel features. I plan to experiment with MFCCs this coming week.

- Prepared NIST-SRE data for training. This took longer than planned because the data set is large and we needed to find a way to preprocess it.

- Tested contrastive training and discovered that it does not improve performance out of the box and can in fact degrade the model without careful tuning. This leads me to believe that tuning the verification model needs to start sooner rather than later.
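For readers unfamiliar with contrastive training, the sketch below shows one common pairwise formulation of the loss. It is not necessarily the variant used in the experiments above; the function names and the default `margin` are assumptions for illustration. The margin is one of the hyperparameters that typically needs tuning.

```python
import math


def euclidean_distance(a, b):
    """Distance between two embedding vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(emb_a, emb_b, same_speaker, margin=1.0):
    """Pairwise contrastive loss on two embeddings.

    Same-speaker pairs are pulled together (loss grows with distance).
    Different-speaker pairs are pushed apart until they are at least
    `margin` away, after which they contribute no loss.
    """
    d = euclidean_distance(emb_a, emb_b)
    if same_speaker:
        return d ** 2
    return max(0.0, margin - d) ** 2
```

With a margin that is too small, most different-speaker pairs contribute nothing; with one that is too large, the loss keeps pushing apart pairs that are already well separated.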

Nikhil

This week I worked on implementing a critical part of the speech ID pipeline: using logistic regression to build a one-vs-all binary classification model for each speaker in the embedding space learned for verification.
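The idea can be sketched in plain Python as follows. This is a toy illustration under stated assumptions, not the baseline code itself: the helper names, the learning rate, the epoch count, and the two-dimensional "embeddings" are all made up for the example.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_binary(embeddings, labels, lr=0.5, epochs=300):
    """Fit one logistic regression with batch gradient descent."""
    dim, n = len(embeddings[0]), len(embeddings)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(embeddings, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def train_one_vs_all(embeddings, speaker_ids):
    """One binary model per speaker: that speaker (1) vs everyone else (0)."""
    models = {}
    for speaker in set(speaker_ids):
        labels = [1.0 if s == speaker else 0.0 for s in speaker_ids]
        models[speaker] = train_binary(embeddings, labels)
    return models


def score(model, x):
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def identify(models, x):
    """Return the speaker whose binary model scores the embedding highest."""
    return max(models, key=lambda s: score(models[s], x))


# Toy 2-D embeddings (hypothetical data, two utterances per speaker).
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0],
              [0.1, 0.9], [-1.0, -1.0], [-0.9, -1.1]]
speakers = ["alice", "alice", "bob", "bob", "carol", "carol"]
models = train_one_vs_all(embeddings, speakers)
```

Calling `identify(models, [0.95, 0.05])` then picks the speaker whose model gives the highest score. A login check could instead threshold the score of the claimed speaker's model alone.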

I spent most of the time designing the algorithm and writing the baseline code. I plan to test the performance on real data from VoxCeleb and NIST-SRE this coming week.