This week the team focused on finalizing the design presentation and the design document. We spent a significant amount of time narrowing down our requirements and then finding solutions that meet them. We initially struggled to choose target values for the false positive and false negative rates of the cat door opening. We were unable to find statistics on raccoon behavior or an estimate of how much damage raccoons can cause. We then realized that our design simply needs to outperform a regular cat door. Because a regular cat door has no locking mechanism, it will always let a raccoon in, which is effectively a 100% false positive rate. Therefore, any false positive rate below 100% would be an improvement. Beyond that baseline, we decided to challenge ourselves to achieve a 5% false positive rate, as this rate is achieved by competent facial recognition algorithms. We also chose 5% as our false negative rate. If we assume a cat uses the door four times a day, that is 20 uses over five days, so on average the user would be alerted about once every five days that their cat may be stuck outside, which we consider reasonable.
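The arithmetic behind the false negative target can be checked with a minimal sketch. The function names here are hypothetical, and the sketch assumes each door use is an independent attempt with the same 5% chance of a false negative, which is a simplification rather than a measured property of our system.

```python
def expected_false_alerts(false_negative_rate, uses_per_day, days):
    """Average number of times the cat is wrongly locked out over a period.

    Assumes every door use is independent and has the same error rate.
    """
    return false_negative_rate * uses_per_day * days


def days_per_false_alert(false_negative_rate, uses_per_day):
    """Average number of days between two false alerts."""
    return 1.0 / (false_negative_rate * uses_per_day)


if __name__ == "__main__":
    rate = 0.05        # target false negative rate from the requirements
    uses_per_day = 4   # assumed number of door uses per day

    print(expected_false_alerts(rate, uses_per_day, days=5))
    print(days_per_false_alert(rate, uses_per_day))
```

With these assumed inputs, both values work out to roughly one alert per five days, matching the estimate above. Changing `uses_per_day` shows how sensitive the alert frequency is to how often the cat actually uses the door.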

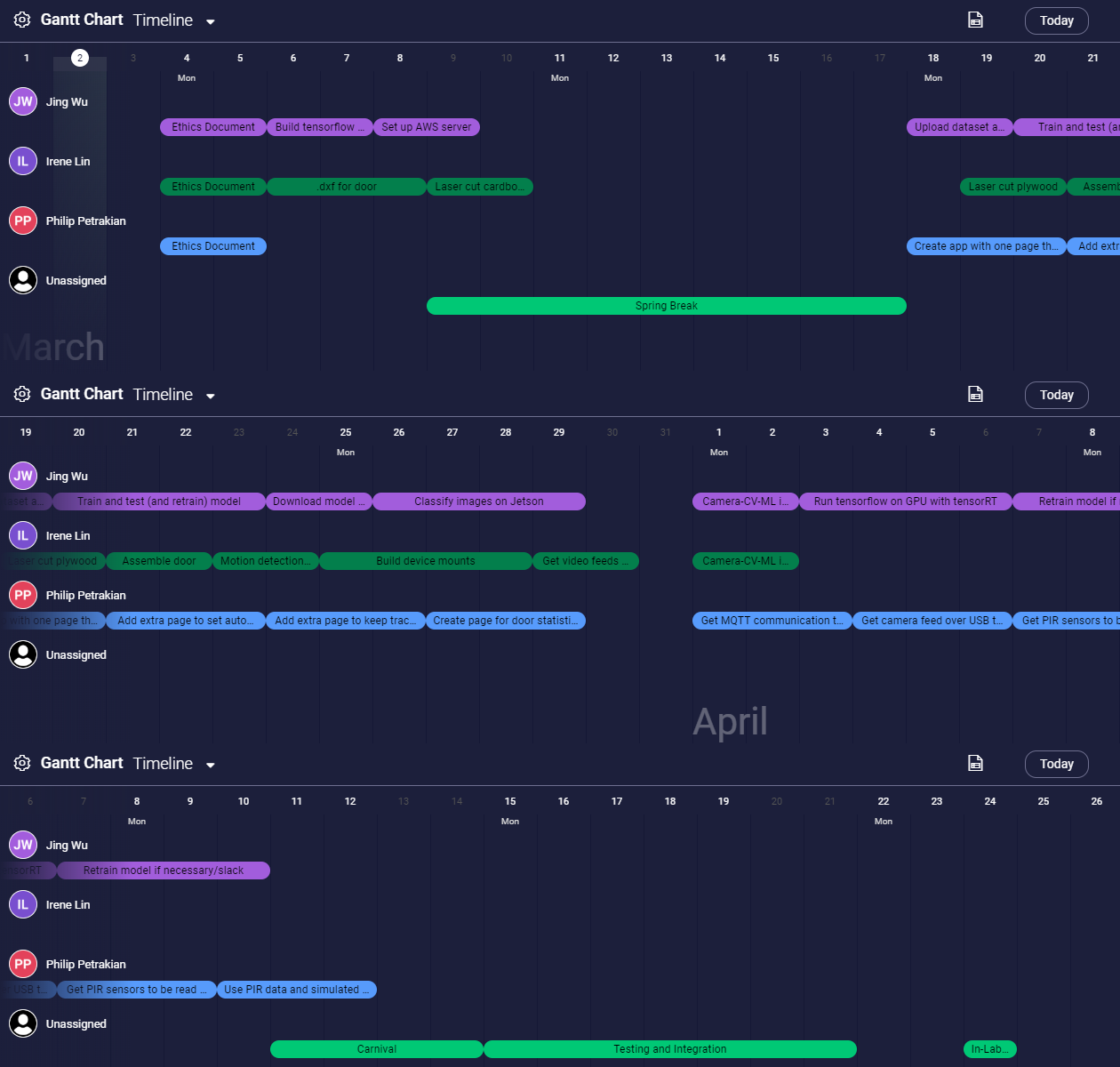

On the project management side, we decided that in addition to our two weekly meetings during class time and our Saturday meeting, we should meet most days for a stand-up. These meetings will be held over Zoom and will let us share what we accomplished in the past 24 hours and what we plan to accomplish in the next 24 hours. We believe this will help us work better as a team, since we will be in touch on a daily basis. This will become especially important later in the semester, when we start implementing our designs.

Our team is currently on track!