One risk that we will have to consider is that our device’s attachment mechanism will not be sufficient or easy enough to use. When looking into how we could create a universal attachment for all types of glasses, we narrowed down our options to using either a hooking mechanism or a magnetic mechanism. With a hooking mechanism, we risk that our users may not be able to easily clasp our device on, and with a magnetic mechanism we risk that our device may not be secure enough. To manage the risk with a hooking mechanism, we can iterate over multiple designs and receive user feedback for which is easiest to use. For the magnetic mechanism, we can try to increase the strength of the magnet so that the attachment is more secure. However, it is worth noting that in the worst case scenario, if neither of these solutions work, the image capturing and audio description functionality of our device will still be testable.

Another risk to consider is the latency of the graph description model; after doing some more research into how exactly the CNN-LSTM model works (see Nithya’s status report), we discovered that the generation of the graph description may take longer than we originally anticipated. Specifically, the sequence processor portion of the model needs to generate the output sequence one word at a time, and the way that a particular word is generated is by performing a softmax over the entire vocabulary and then choosing the highest-probability output. This is discussed more in the “changes” section below, but we can manage this risk by (1) further limiting/decreasing the length of the output description, and (2) modifying our use case and design requirements to accommodate for this change.

The biggest change we made was adding the new functionality of the Canvas scraping software. We figured that it might be unnecessary, annoying, and difficult for the visually-impaired user to have to download the PDF of the lecture from Canvas, email it to themselves to get it on their iPhone, then upload it to the iOS app in order for our ML model to parse it before the lecture. We felt like it also might take too much time and discourage people from using our project, especially if they have lots of back to back classes with short passing periods. So, we decided to add the functionality where the user could simply click a button on the app, and have the Flask server automatically scrape the most recent lecture PDF depending on which button the user clicks. This incurs the following costs:

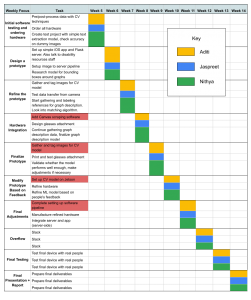

- We need to add an extra week to Aditi’s portion of the schedule to allow her to make the change.

- Professors must be willing to provide their visually-impaired students with an API key that they will put into the app so that the application will have access to the lectures in the Canvas course.

- The visually-impaired user will have to ask their professor for this API key.

To address cost (1), Aditi is already ahead of schedule, and this added functionality will not put her behind. However, if it does, one of the other team members can help take on some of the load. To mitigate (2), we will provide a disclaimer from the app to explain to the professors that the app will only scrape materials that are already available for the user to see, so there will not be a privacy concern. The app will only scrape the most recent lecture under the “Lectures” module, so unpublished files will not be extracted. We felt like it was not necessary to mitigate (3), because asking for an API key will still likely be faster and less time consuming than having to download and upload the new lecture PDF before every class.

Another change is the graph description model latency which was mentioned above. We will need to change the design requirements to be slightly more relaxed for this specific portion of the before-class latency, which we set at 10 seconds. We still don’t have a specific estimate for how long the CNN-LSTM model will actually take given some input graph, but we may need to increase this time bound; however, this should not be a problem as we have a lot of wiggle room. In our use-case requirements, we stated that the student should upload the slides 10 minutes before class, so the total latency before class need only be under 10 minutes, and we are confident that we will be able to process the slides in less than this amount of time.

We have adjusted our schedule based on the changes listed above, and have highlighted the schedule changes in red.