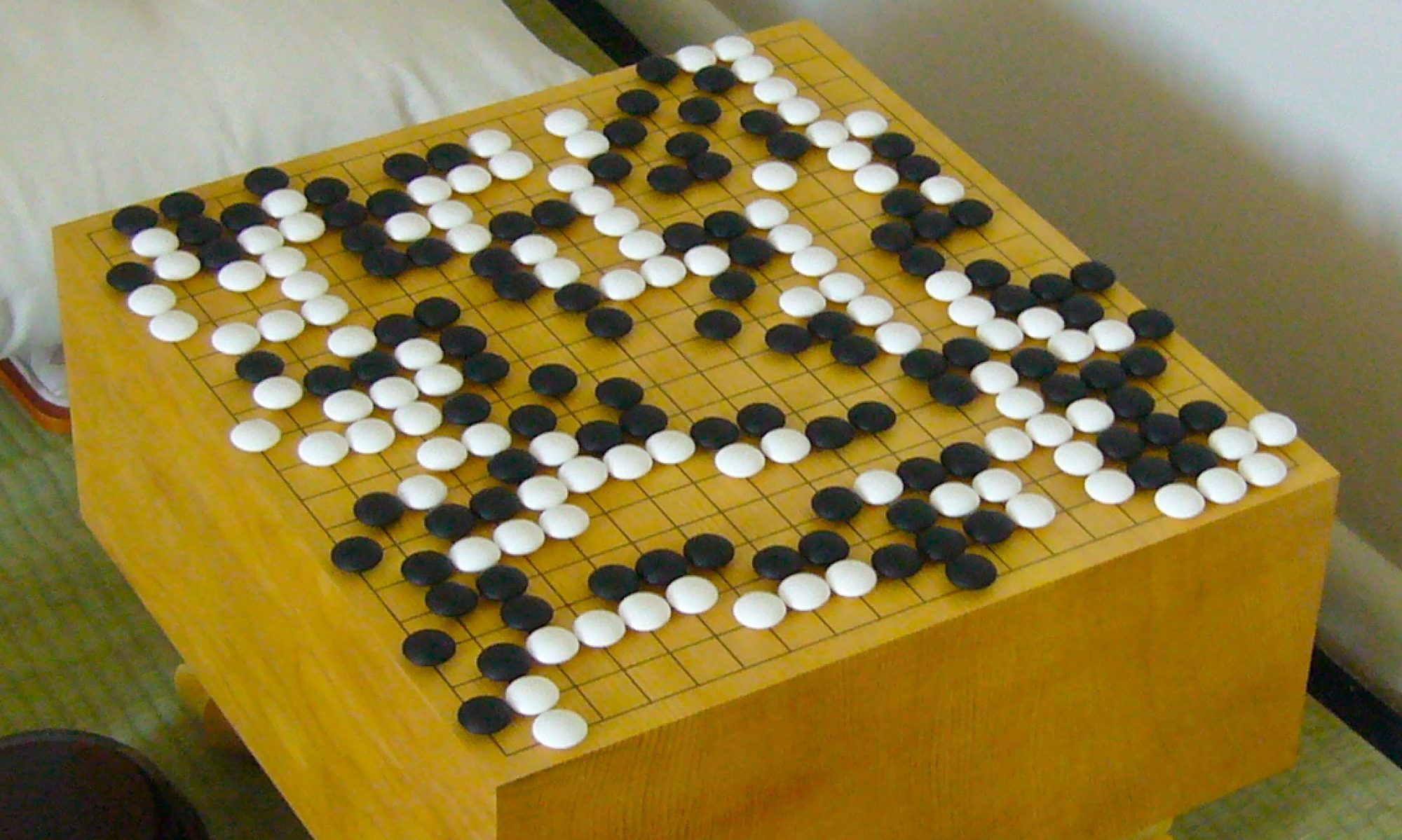

As mentioned in our team status report for the week, we have moved our project from Mancala to Go. Fortunately, the division of labor remains largely the same, and I am still broadly responsible for training a reinforcement-learning engine that will eventually give move suggestions and positional evaluations to our users.

Accordingly, almost all of the research I did last week still applies: the same self-play techniques can be used and, in fact, have been shown to work in AlphaZero and MuZero. After the switch to Go this week, I had to catch up quickly on the rules and gameplay. With that done, and the two papers linked above read, the research phase of my project has come to a close.

Of course, with the design presentations coming up next week, a good amount of my time this week went to preparing for them, and the rest was spent building the platform for the reinforcement learning. The current consensus for building a strong Go engine is a combination of deep learning and Monte Carlo Tree Search (MCTS). MCTS works by using self-play to simulate many game paths from a given position and choosing the move that gives the best overall outcome. I have started work on the framework that will run these simulations as quickly as possible (holding game state, letting the candidate engine make moves against itself, returning the new board, etc.).
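To make the simulation idea concrete, here is a minimal sketch of the rollout step only. It is not the full tree search and not my actual framework: it uses a toy Nim game (take 1 to 3 stones, whoever takes the last stone wins) as a stand-in for Go, and every name in it (`NimState`, `rollout`, `best_move`) is a placeholder I made up for illustration. Each candidate move is scored by the fraction of random playouts that the player to move goes on to win.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class NimState:
    """Toy stand-in for a Go board: one pile of stones, players alternate."""
    stones: int
    to_move: int  # 0 or 1

    def legal_moves(self):
        return [n for n in (1, 2, 3) if n <= self.stones]

    def play(self, move):
        # Return a NEW state instead of mutating, so simulations can branch freely.
        return NimState(self.stones - move, 1 - self.to_move)

    def is_terminal(self):
        return self.stones == 0

    def winner(self):
        # Whoever took the last stone wins, i.e. the player who is NOT to move.
        return 1 - self.to_move


def rollout(state, rng):
    """Play uniformly random moves until the game ends; return the winner."""
    while not state.is_terminal():
        state = state.play(rng.choice(state.legal_moves()))
    return state.winner()


def best_move(state, n_rollouts=200, seed=0):
    """Score each legal move by its win rate over random playouts."""
    rng = random.Random(seed)
    player = state.to_move
    scores = {}
    for move in state.legal_moves():
        child = state.play(move)
        wins = sum(rollout(child, rng) == player for _ in range(n_rollouts))
        scores[move] = wins / n_rollouts
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    move, scores = best_move(NimState(stones=3, to_move=0))
    print(move, scores)
```

With three stones left, taking all three wins immediately, so that move scores a win rate of 1.0 and is the one chosen. A real MCTS would additionally keep a tree of visited positions and, in the AlphaZero style, replace the random playouts with a learned network; the immutable `play` method returning a new state is the part that carries over to a Go board.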

With regard to classwork that helped me prepare for this project, I think the two ECE classes that helped the most are 18213 and 18344. I have not taken any classes in reinforcement learning or machine learning in general, but the research I did in CyLab with ECE Prof. Vyas Sekar certainly helped a huge amount, both in its subject matter (deep learning) and in the experience of reading scholarly papers closely enough to fully understand the techniques you are considering using. What 18213 and 18344 provided was the “correct” way of thinking about setting up my framework. I need my simulations to be as efficient as possible while remaining accurate, and I need my system to be as robust as possible, since I will be making frequent changes, tuning parameters, and so on. These classes, together with the research papers I read last week and the two papers above, are what influenced my portion of the design the most.

Finally, in the next week I plan to finish the Go simulation framework and begin setting up the reinforcement learning architecture, so that training can begin the week after. MCTS simulation is quite efficient, but given the distinct limit on my computational resources, allocating enough time is vital.