As I’m sure is common among many groups, this week our team focused almost exclusively on getting our project ready for demo. On that front:

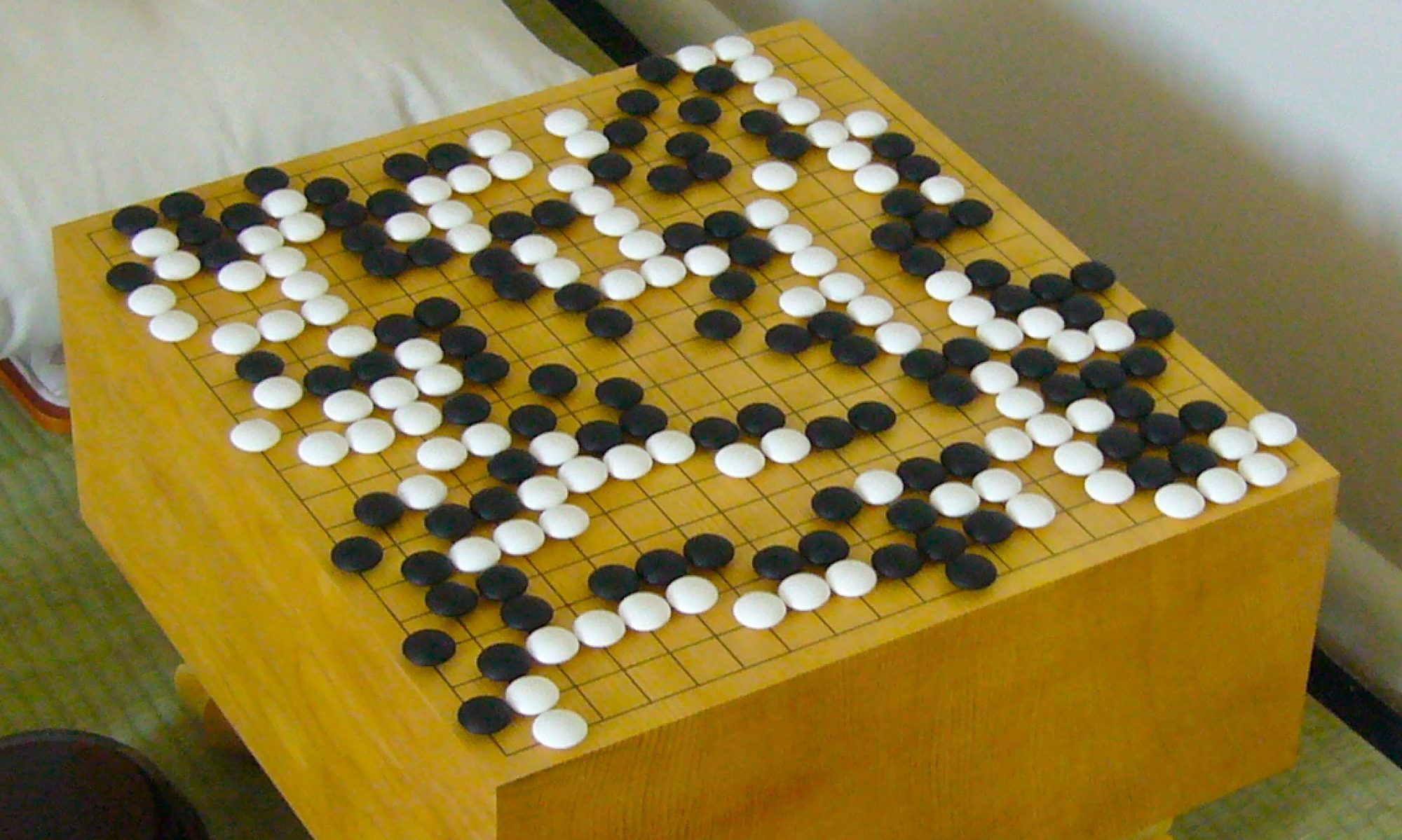

Engine: The engine is fully operational, with high-accuracy value and policy networks. The demo version uses a simulation depth of 25, meaning the engine looks up to 25 moves into the future (though the average tends to be between 8 and 10, with a minimum of 4). The engine has been fully integrated into the web app, and both real-time and historical analysis work as intended.

Web-App: The web-app is fully operational on its own. Integration with the engine is complete, as mentioned above, but integration with the physical board is not quite finished. The analysis function works as intended, and communication with the physical board is almost complete, pending a full debug of the board.

Physical Board: The physical board is almost complete. The LEDs have been soldered, so the only remaining issue is debugging 2 of the muxes that are misbehaving. This should be done comfortably before demo, as it is a known issue that has already been fixed on 2 of the other muxes. The board has been integrated with the web-app, but the overall product has not been fully tested end to end, since that is not possible without working muxes. With the outputs the muxes currently produce, the web-app and engine behave exactly as expected, so we expect little to no further debugging once the final two muxes are fixed.

Once the muxes are debugged we will be ready for demo, and next week will be spent on the video and the final report.

ABET:

Unit Tests:

Engine:

Basic board positions to ensure all suggestions are legal moves. Found an error in how passes were signified on a 9×9 board: a pass is now signified by an index of 81 (9 × 9) rather than 361 (19 × 19).
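
As a rough illustration of the pass fix (this is not our actual engine code; the function names are made up for this post, and the legality check is deliberately simplified), deriving the pass index from the board size avoids hard-coding the 19×19 value:

```python
from __future__ import annotations


def pass_index(board_size: int) -> int:
    """Index reserved for a pass: one past the last intersection."""
    return board_size * board_size


def move_to_index(row: int, col: int, board_size: int) -> int:
    """Flatten a (row, col) intersection into a single move index."""
    if not (0 <= row < board_size and 0 <= col < board_size):
        raise ValueError("intersection is off the board")
    return row * board_size + col


def is_legal_index(index: int, occupied: set[int], board_size: int) -> bool:
    """Simplified legality: a pass, or an empty on-board intersection.

    Ignores ko and suicide, which a real legality check would also need.
    """
    if index == pass_index(board_size):
        return True
    return 0 <= index < board_size * board_size and index not in occupied
```

With this layout, `pass_index(9)` is 81 and `pass_index(19)` is 361, so a 361 coming back on a 9×9 board is flagged as illegal instead of being silently treated as a pass.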

Complex board positions to ensure the engine maximizes or minimizes depending on whose turn it is (minimize for black, maximize for white). Found an error where the exploration term wasn’t inverted, so the optimization wasn’t working correctly for black’s moves. Fixed.
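
To show the kind of sign handling involved, here is a minimal sketch that assumes a PUCT-style selection rule with values stored from white's perspective. The `Child` class, `select_child`, and the `c_puct` constant are all hypothetical names for this post, and our engine's actual formula may differ. Maximizing `sign * Q + U` is the same as minimizing `Q - U` for black, so the exploration bonus pushes in the right direction for both players:

```python
import math
from dataclasses import dataclass


@dataclass
class Child:
    move: int
    prior: float      # policy network probability for this move
    visits: int       # number of simulations through this child
    value_sum: float  # accumulated value, from white's perspective

    @property
    def q(self) -> float:
        """Mean value from white's perspective (0 if unvisited)."""
        return self.value_sum / self.visits if self.visits else 0.0


def select_child(children, white_to_move, c_puct=1.5):
    """Pick the child with the best value-plus-exploration score."""
    total_visits = sum(c.visits for c in children)
    # White maximizes Q; black minimizes it, so flip Q's sign for black.
    sign = 1.0 if white_to_move else -1.0

    def score(c):
        # The exploration bonus always favors less-visited moves,
        # no matter whose turn it is.
        u = c_puct * c.prior * math.sqrt(total_visits + 1) / (1 + c.visits)
        return sign * c.q + u

    return max(children, key=score)
```

The bug described above would correspond to minimizing `Q + U` for black, where the bonus ends up steering the search away from unexplored moves.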

Physical Board:

Sensors were tested manually while disconnected from the board (i.e. connected only to the Arduino) to make sure they would fit our purposes. They turned out to have thresholding issues, which led us to change from 1 MΩ to 10 MΩ resistors in series with the photoresistors.

Tested a multitude of board positions to ensure the correct board state is sent to the Arduino. Found an issue with the muxes, which is currently being debugged.

(Each intersection was tested individually)

Tested each button individually to make sure it signalled the web-app in the expected fashion.

Web-app:

Tested input states to make sure they were rendered as intended.

Tested responses to RPi signals (from Arduino) to ensure intended behavior.

Pytest suite for endpoints and web-socket.

Integration Tests:

Web-App -> Engine:

Tested interactions to make sure a suggestion is delivered promptly and that all suggested moves are legal (implying the sent state is processed correctly). This identified a second issue with how passes were being conveyed, which was also fixed.

Timing analysis was performed to be sure that the engine and communication components complied with our use case requirements.
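
A timing check of this sort can be sketched as below. This is only an illustration: the helper names are hypothetical, the callable being timed stands in for a full request to the engine, and the budget passed to `within_budget` would come from the use case requirements rather than from this snippet.

```python
import time


def measure_latency(fn, runs=20):
    """Call fn repeatedly and return each call's wall-clock time in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return samples


def summarize(samples):
    """Mean and worst-case latency for a list of samples."""
    return {"mean_s": sum(samples) / len(samples), "max_s": max(samples)}


def within_budget(samples, budget_s):
    """True if even the slowest sample met the latency budget."""
    return max(samples) <= budget_s
```

Checking the worst case rather than the mean is the stricter choice, since a single slow suggestion is what a player at the board would actually notice.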

Board->Web-App:

Extensive tests consisting of setting up the board and making sure the state on the board exactly matches the state on the web app. This caught issues with the muxes, which are currently being debugged, so I can’t give a super detailed writeup on the solution yet.
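
The comparison itself can be sketched as a simple diff between the position we physically set up and the state the web app reports. The encoding (0 for empty, 1 for black, 2 for white) and the `diff_states` helper are assumptions made for this example, not our actual data format:

```python
EMPTY, BLACK, WHITE = 0, 1, 2


def diff_states(expected, actual):
    """Return (row, col, expected, actual) for every mismatched intersection."""
    if len(expected) != len(actual) or any(
        len(e_row) != len(a_row) for e_row, a_row in zip(expected, actual)
    ):
        raise ValueError("board dimensions differ")
    return [
        (r, c, e, a)
        for r, (e_row, a_row) in enumerate(zip(expected, actual))
        for c, (e, a) in enumerate(zip(e_row, a_row))
        if e != a
    ]
```

Listing the mismatched coordinates, rather than just reporting pass or fail, makes it easier to see whether the bad intersections cluster on the lines served by a single mux.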