This status report covers the last two weeks of work, since we were off for Fall Break last week.

Since Fall Break, we’ve made significant progress on several aspects of the project. We’ve met our interim Proof of Concept milestones for the Physical Interface. Originally, we intended to build a prototype of the physical interface as a proof of concept for the final build. However, timing constraints and the fact that we could only get 5N solenoids on hand quickly through Amazon made us pivot toward a series of tests that would give us the confidence we needed to commit to the final build.

John, Angela, and I developed some Arduino sketches and breadboard test circuits that use shift registers to control the solenoids we ordered. The goal was to prove we could control multiple solenoids at once using a single output pin from an Arduino. We met this goal; check out this video where John shows the solenoids being actuated to different bit patterns (e.g., 01010, 11111, 00101, …).
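To make the shift-register idea concrete, here is a small Python simulation of how a bit pattern like 01010 gets clocked into a serial-in, parallel-out register one bit at a time. This is not our Arduino sketch: the `ShiftRegister` class, the `write_pattern` helper, and the 5-bit width are all made up for illustration. On typical parts (the 74HC595, for example) the data line is accompanied by clock and latch lines, which this model folds into the `shift_in` and `latch` calls.

```python
class ShiftRegister:
    """Toy model of a serial-in, parallel-out shift register (hypothetical)."""

    def __init__(self, width):
        self.width = width
        self._stage = [0] * width    # bits shifted in so far, not yet visible
        self.outputs = [0] * width   # latched outputs, one per solenoid

    def shift_in(self, bit):
        # One clock pulse: the new bit enters at position 0, the oldest falls off.
        self._stage = [bit] + self._stage[:-1]

    def latch(self):
        # Copy all staged bits to the outputs at once, so solenoids switch together.
        self.outputs = list(self._stage)


def write_pattern(register, pattern):
    """Send a pattern such as "01010"; the leftmost character drives output 0."""
    if len(pattern) != register.width or set(pattern) - {"0", "1"}:
        raise ValueError("pattern must be a string of 0s and 1s matching the width")
    # Shift the last character first so the first character ends up on output 0.
    for ch in reversed(pattern):
        register.shift_in(int(ch))
    register.latch()
    return register.outputs


reg = ShiftRegister(5)
for pattern in ["01010", "11111", "00101"]:
    print(pattern, "->", write_pattern(reg, pattern))
```

Each 1 in the latched outputs corresponds to an energized solenoid. Because the outputs only change on `latch`, the solenoids never see the half-shifted intermediate patterns.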

Our next goal was figuring out how many times per second we could actuate a single solenoid. In our previous posts, we talked about how most high-end piano keys can be pressed 15 times per second, and how the average human speaker pronounces 7 syllables per second. This means our physical system needs to be able to play the keys on our piano at least 7 to 15 times per second. Using the 5N solenoids we got from Amazon, we found that we can actuate a solenoid 16 times per second without any significant temperature rise! Check out this video where John demonstrates a solenoid being actuated 16 times per second.
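For a sense of what those rates mean in timing terms, here is a quick back-of-the-envelope calculation in Python. The function names are ours for illustration, and the 50% duty cycle (equal time energized and released) is an assumption, not something we measured.

```python
def actuation_period_ms(rate_hz):
    """Time available for one full press-and-release cycle, in milliseconds."""
    if rate_hz <= 0:
        raise ValueError("rate must be positive")
    return 1000.0 / rate_hz


def on_off_times_ms(rate_hz, duty_cycle=0.5):
    """Split one cycle into energized and released time (duty cycle is assumed)."""
    period = actuation_period_ms(rate_hz)
    on_time = period * duty_cycle
    return on_time, period - on_time


def meets_target(measured_hz, required_hz=15):
    """True if the measured rate covers the fastest rate we need."""
    return measured_hz >= required_hz


for rate in (7, 15, 16):
    on_ms, off_ms = on_off_times_ms(rate)
    print(f"{rate:>2} Hz: period {actuation_period_ms(rate):.1f} ms, "
          f"on {on_ms:.1f} ms, off {off_ms:.1f} ms")

print("16 Hz covers the 15 Hz target:", meets_target(16))
```

At 16 actuations per second, each press-and-release cycle has 62.5 ms to complete, which is slightly tighter than the roughly 66.7 ms available at the 15-per-second piano key limit.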

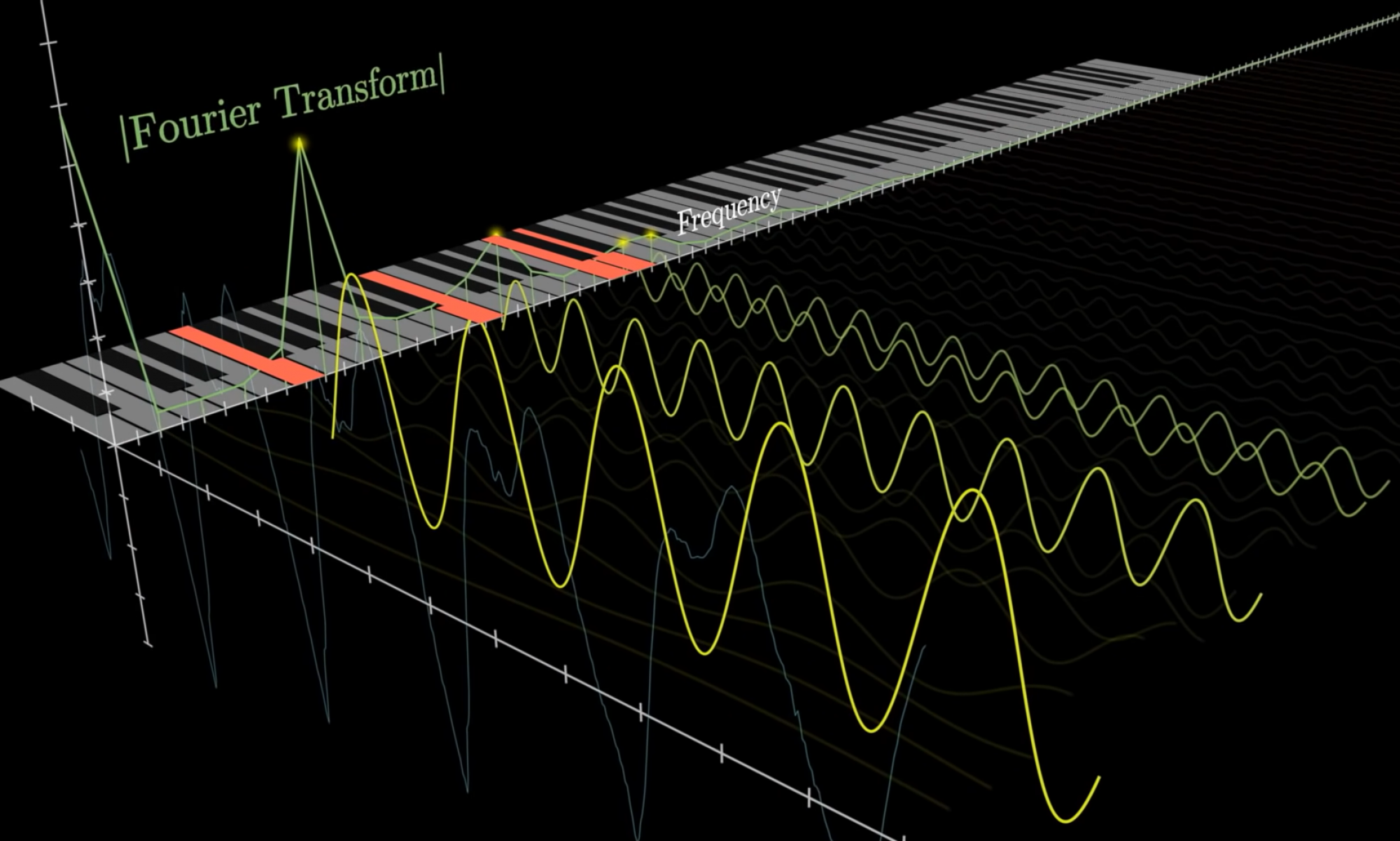

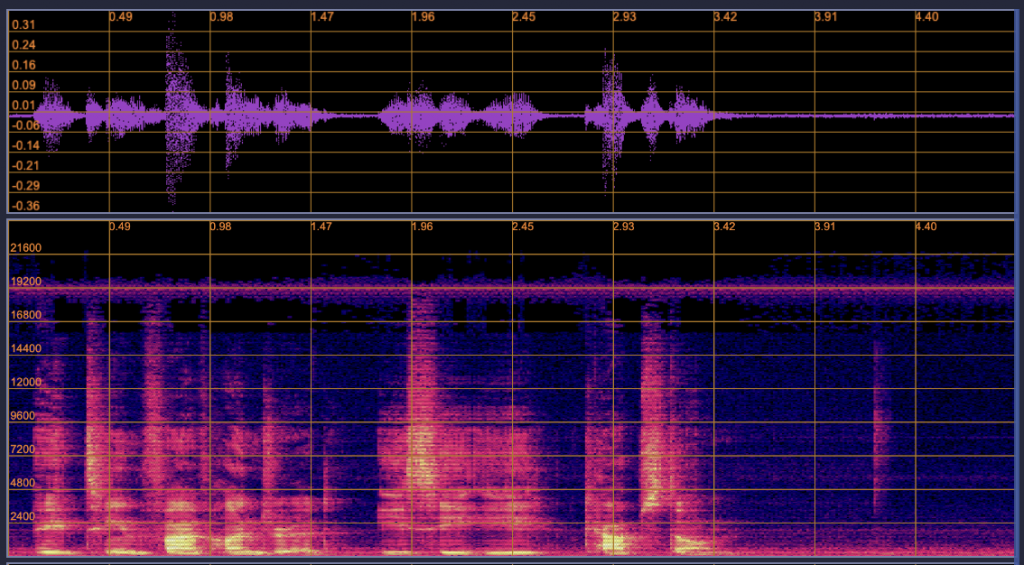

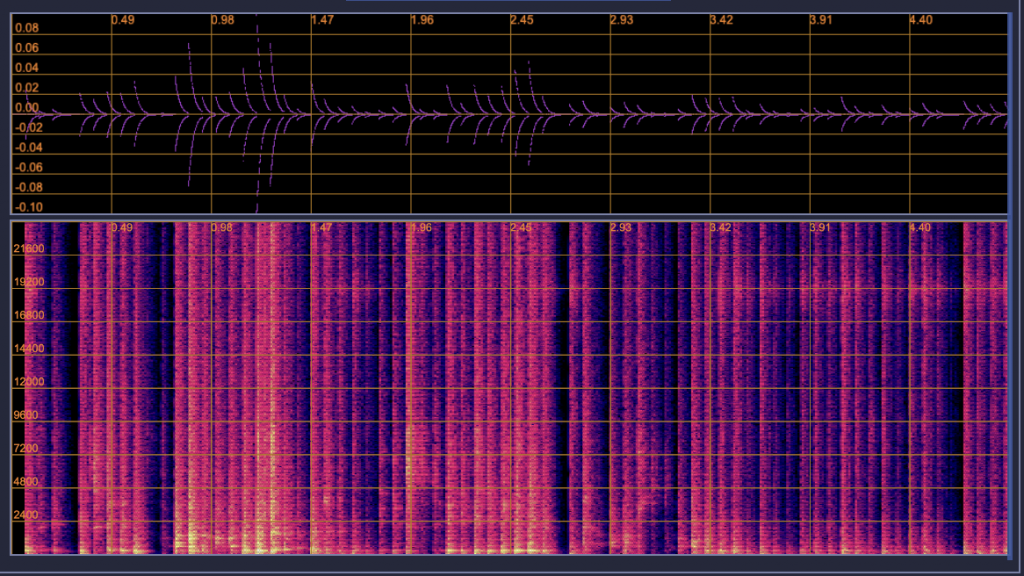

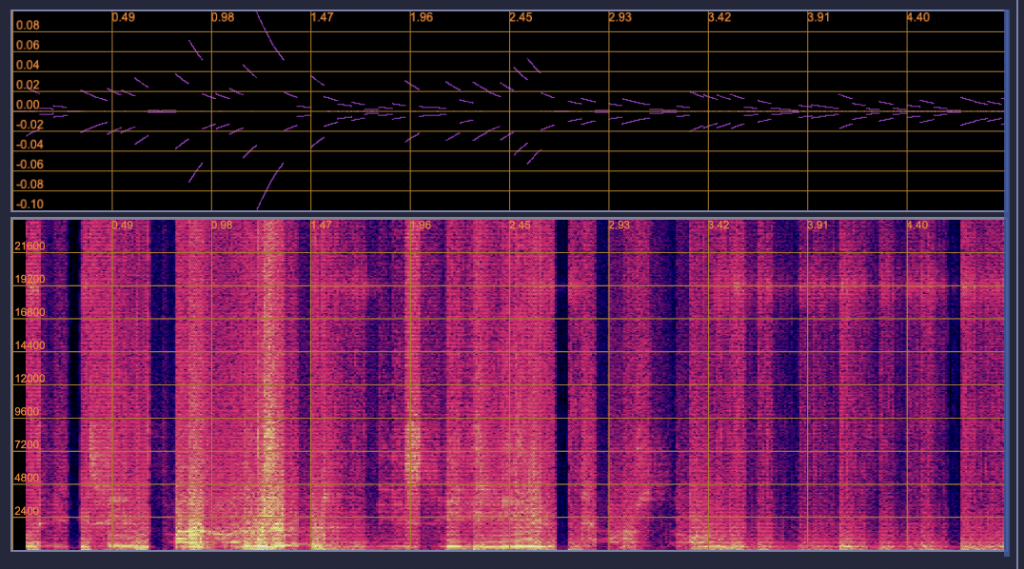

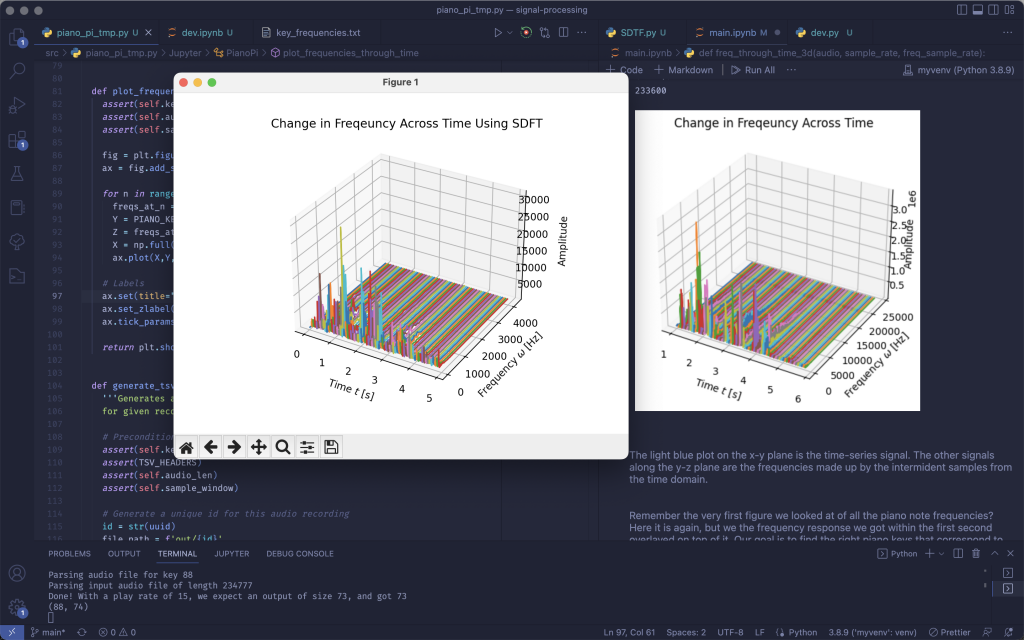

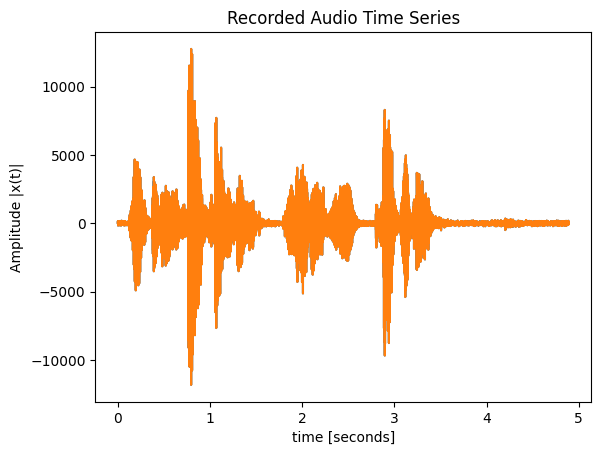

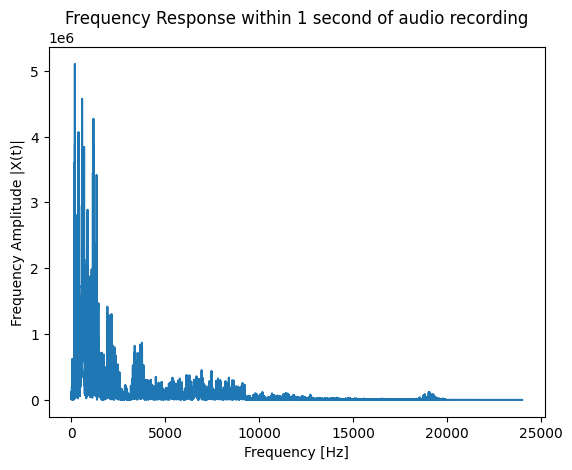

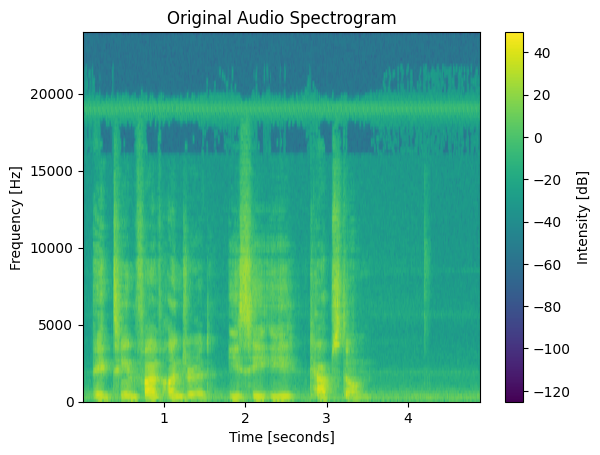

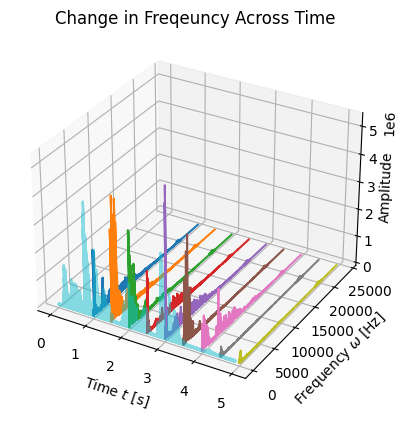

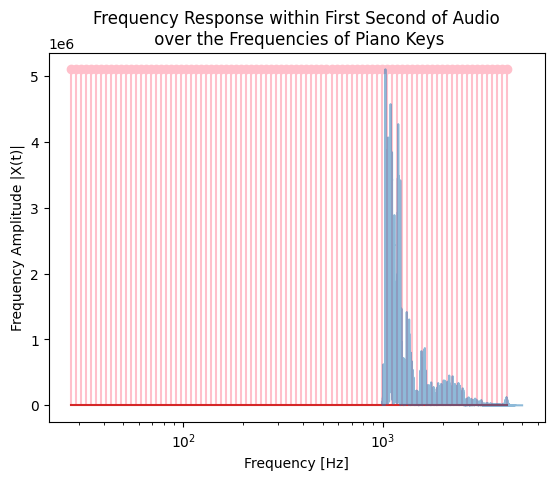

We’ve also made progress on the audio processing module that controls the keys being played! Marco’s been working on making a Jupyter Notebook that walks us through all of the signal processing involved with the audio processing module. If you’re interested in learning more about that, the notebook file can be found here!

This week we also worked through some safety concerns with the physical interface. Each solenoid is rated for 400mA at 12V, so with 69 solenoids we’d need 69 × 0.4A = 27.6A of current to power all of them at once. Running ~30A along one bus is dangerous: at that current, a short circuit or an undersized wire can overheat quickly and cause burns or a fire. To make sure we’re being safe while building our physical interface, we’ve divided the 69-solenoid array into 9 segments of 8 solenoids (the last segment will only have 5). Each segment will have its own power source, and with 9 segments, each one needs 27.6A / 9 ≈ 3.1A on average (a full 8-solenoid segment with every solenoid on draws 8 × 0.4A = 3.2A). 3A at 12V is a very common power supply specification, which means we can plug an array of nine 3A/12V power supplies into a power strip and still power our entire system safely.
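The power budget above can be checked with a few lines of Python. This is only a sketch: the constant and function names are made up for illustration, and it assumes the worst case where every solenoid in a segment draws its rated 0.4A at the same time.

```python
NUM_SOLENOIDS = 69
CURRENT_PER_SOLENOID_A = 0.4   # rated 400mA per solenoid
SEGMENT_SIZE = 8               # solenoids per power supply


def segment_sizes(total, size):
    """Split `total` solenoids into full segments plus one smaller remainder."""
    full, rest = divmod(total, size)
    return [size] * full + ([rest] if rest else [])


def segment_currents(sizes, amps_each):
    """Worst-case current per segment, assuming every solenoid is on at once."""
    return [round(n * amps_each, 2) for n in sizes]


sizes = segment_sizes(NUM_SOLENOIDS, SEGMENT_SIZE)
currents = segment_currents(sizes, CURRENT_PER_SOLENOID_A)
total = round(NUM_SOLENOIDS * CURRENT_PER_SOLENOID_A, 1)

print("segments:", len(sizes), sizes)
print("total current (A):", total)
print("average per segment (A):", round(total / len(sizes), 2))
print("worst-case per segment (A):", currents)
```

The eight full segments come out at 3.2A each in the worst case and the last 5-solenoid segment at 2.0A, so how often all eight solenoids in a segment fire together matters when sizing each supply.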