1. What are the most significant risks that could jeopardize the success of the project?

This week, our team focused on solidifying the technical details of our design. One of our main blockers was flashing the AGX Xavier board and getting a clean install of the newest OS. Because the necessary software was not available on the host devices we had access to, we spent some time temporarily setting up the Xavier on the board itself. During this process, we also weighed the pros and cons of the Xavier against the more portable, energy-efficient Nano.

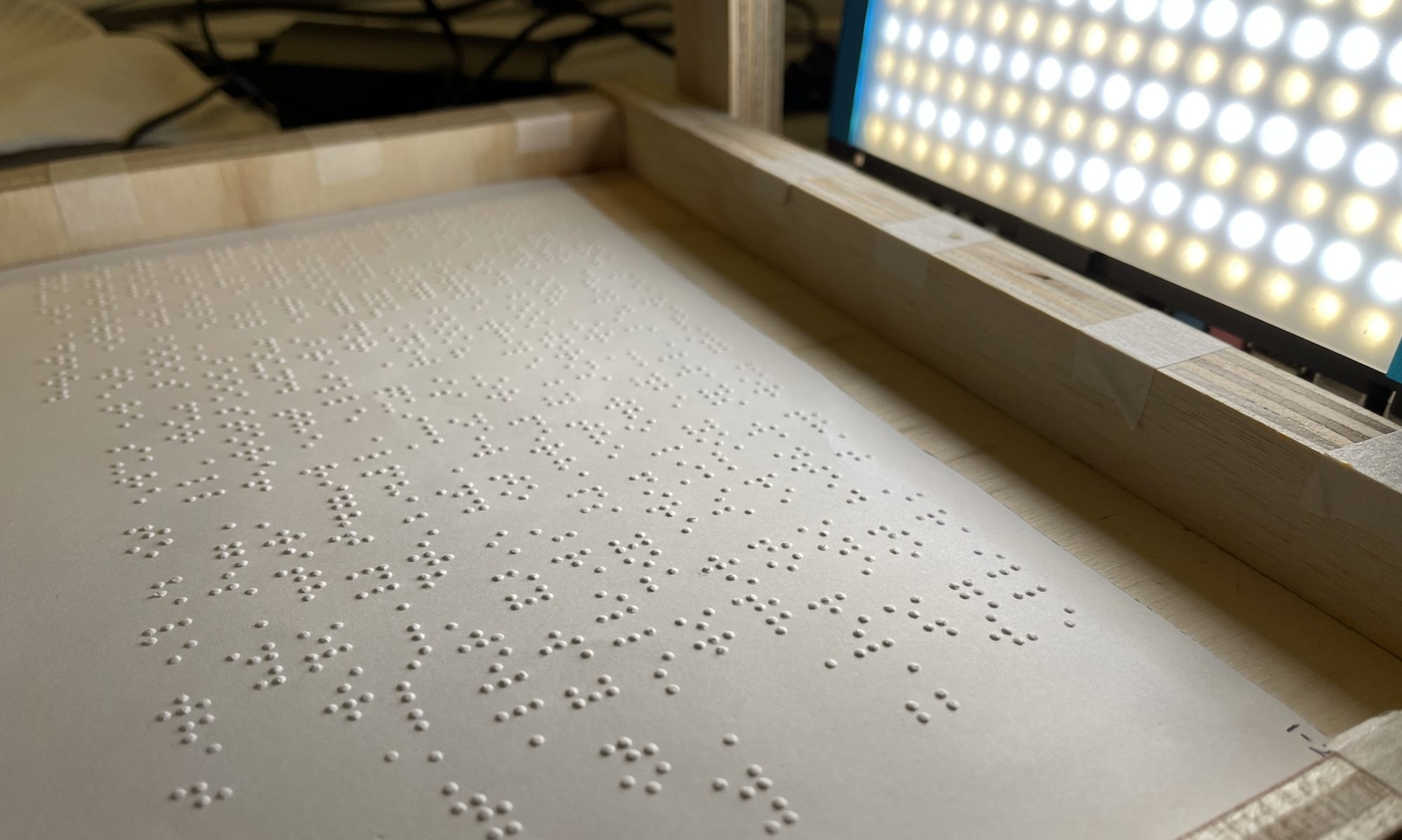

Our work is split into three phases: pre-processing, recognition, and post-processing. In pre-processing, the initial picture is taken and processed. Because natural-scene braille detection is difficult, we are starting this phase with reasonably cropped images of braille text. However, since our use-case requirements and apparatus model call for a headwear-mounted camera, we may need to consider different ways of mounting the camera so that photos are captured at more reliable angles, in case ML-based natural-scene braille detection does not reach the 90% accuracy set by our use-case requirements.

The second phase of our algorithm is the recognition phase. For this phase, because we want to work with ML, the greatest risks are poorly labeled data and compatibility with the pre-processing phase. For the former, we encountered a public dataset that was not labeled correctly for English Braille. Therefore, we had to look for alternatives that could be used instead. To make sure that this phase is compatible with the phase before it, Jay has been communicating with Kevin to add the pre-processing output to the classifier’s training dataset.

The final phase of our algorithm is post-processing, which includes spellcheck and text-to-speech in our MVP. One design decision was whether to use an external text-to-speech API or build our own in-house software. We decided against an in-house solution because we think the final product would be better served by a tried-and-true, publicly available package, particularly with respect to our latency metrics.
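As an illustration of the spellcheck step, here is a minimal sketch that snaps each recognized word to the closest entry in a word list using Python's standard `difflib` module. The names `VOCABULARY`, `correct_word`, and `correct_text`, the tiny word list, and the 0.6 similarity cutoff are all assumptions made for this example; it is not the spellchecker our MVP is committed to.

```python
import difflib

# Hypothetical mini vocabulary; a real system would load a full word list.
VOCABULARY = ["braille", "reader", "camera", "hello", "world", "text"]


def correct_word(word, vocabulary=VOCABULARY, cutoff=0.6):
    """Return the closest vocabulary word, or the input unchanged if none is close."""
    lowered = word.lower()
    if lowered in vocabulary:
        return lowered
    # get_close_matches ranks candidates by similarity ratio, best first.
    matches = difflib.get_close_matches(lowered, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else word


def correct_text(text, vocabulary=VOCABULARY):
    """Spellcheck a whitespace-separated string word by word."""
    return " ".join(correct_word(word, vocabulary) for word in text.split())
```

For example, `correct_text("hellp wrld")` would be passed to text-to-speech as `"hello world"`, while a word with no close match is left untouched so that the classifier output is never silently replaced with an unrelated word.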

2. How are these risks being managed?

We are mitigating these risks by working through the process of getting the Xavier running with a newly flashed environment. This lets us address some of the direct technical challenges, such as connecting to Ethernet, storage capacity, and general processing power. By staying proactive and looking ahead, we can scale down to the Nano if necessary; if we make steady progress on the Xavier, we will be able to demo and use it for our final product. Overall, we have divided our work so that none of us is heavily reliant on the others or on the hardware working perfectly (although the hardware is of course necessary for testing and for meeting our requirements).

3. What contingency plans are ready?

As far as our core device is concerned, we have set up a Jetson AGX Xavier in REH340 and can run it via ssh. We will also be ordering a Jetson Nano, since we have concluded that our programs could run on the Nano with reasonable latency, along with other perks such as Wi-Fi support and the relative compactness of the device. For the initial image pre-processing phase, in case ML-based natural-scene detection returns unreliable accuracy, we are considering various ways of mounting the camera in a regulated manner to control the initial dimensions of the image. For the second phase of our primary algorithm, recognition, we researched the possibility of using the Hough transform, which is supported by OpenCV, in case ML-based recognition returns unreliable accuracy. For our final phase, text-to-speech output, we are currently investigating various web-based text-to-speech APIs.

4. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)?

Overall, there were no significant changes to our existing system design other than the creation of a solidified testing approach. This testing approach validates the physical design of our product, quantifies "success", and tests in a controlled environment. Alongside the testing approach, we are still deciding whether the Xavier is the correct fit for our project or whether we will have to pivot to the Nano for its Wi-Fi capabilities and simpler design. At the moment, this would only change our system specs.
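One way to quantify "success" against the 90% accuracy figure in our use-case requirements is a character-level comparison between recognized text and ground truth. The sketch below is only an assumption about how that could be scored: the function names `count_matches` and `evaluate`, the position-by-position metric, and the sample strings are hypothetical and are not taken from our actual test plan.

```python
def count_matches(predicted, expected):
    """Count positions where the predicted character equals the expected one."""
    return sum(p == e for p, e in zip(predicted, expected))


def evaluate(samples, threshold=0.90):
    """Return (accuracy, passed) over a list of (predicted, expected) string pairs.

    Accuracy is matched characters divided by the longer string's length,
    summed over all samples, so missing or extra characters count as errors.
    """
    matched = 0
    total = 0
    for predicted, expected in samples:
        matched += count_matches(predicted, expected)
        total += max(len(predicted), len(expected))
    accuracy = matched / total if total else 0.0
    return accuracy, accuracy >= threshold


# Hypothetical test run: one perfect sample and one with a single wrong character.
accuracy, passed = evaluate([("hello world", "hello world"), ("brailie", "braille")])
```

Because this metric compares characters position by position, a single dropped character shifts everything after it and is penalized heavily; an edit-distance metric would be more forgiving if that turns out to matter.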

5. Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

Adding a fully defined testing plan will allow us to properly measure our quantitative use-case requirements and give our audience and consumers a better understanding of the product as a whole. In addition, the Nano will not cost us any more to use, since it is available, but it may cost time to adjust to the new system and its capabilities. The Nano has a significantly lower power draw (a positive) but a slower processing speed (a negative). Overall, we think it will still be able to meet our expectations and fit well into our product design. Because we are still ahead of schedule, this cost will be absorbed by our initial research and design phase.
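Since the main trade-off between the two boards is processing speed, a small timing harness run on each device would make the latency comparison concrete. The following is a rough sketch using only the Python standard library; `measure_latency`, the `stage` callable, and the placeholder workload are hypothetical stand-ins for whichever pipeline phase is being timed.

```python
import statistics
import time


def measure_latency(stage, inputs, repeats=5):
    """Return (median, worst) wall-clock seconds per call of `stage`.

    `stage` is any callable taking one input, e.g. a single pipeline phase.
    """
    timings = []
    for item in inputs:
        for _ in range(repeats):
            start = time.perf_counter()
            stage(item)
            timings.append(time.perf_counter() - start)
    if not timings:
        raise ValueError("no timings collected: inputs or repeats is empty")
    return statistics.median(timings), max(timings)


# Placeholder workload standing in for a real processing stage (assumption).
median_s, worst_s = measure_latency(lambda n: sum(range(n)), [1000, 2000], repeats=3)
```

Running the same harness with the same inputs on both the Xavier and the Nano would give directly comparable numbers to check against our latency requirement.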

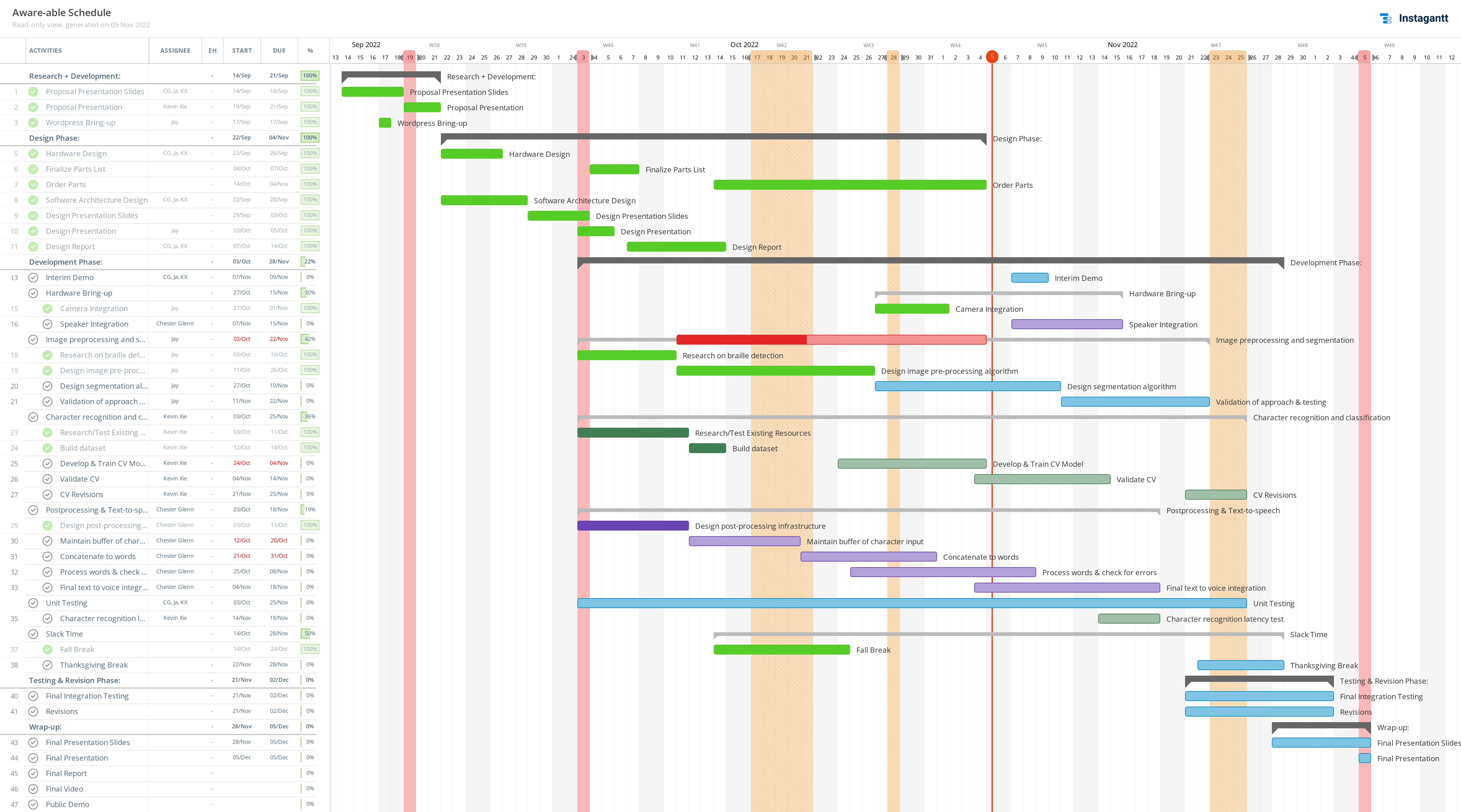

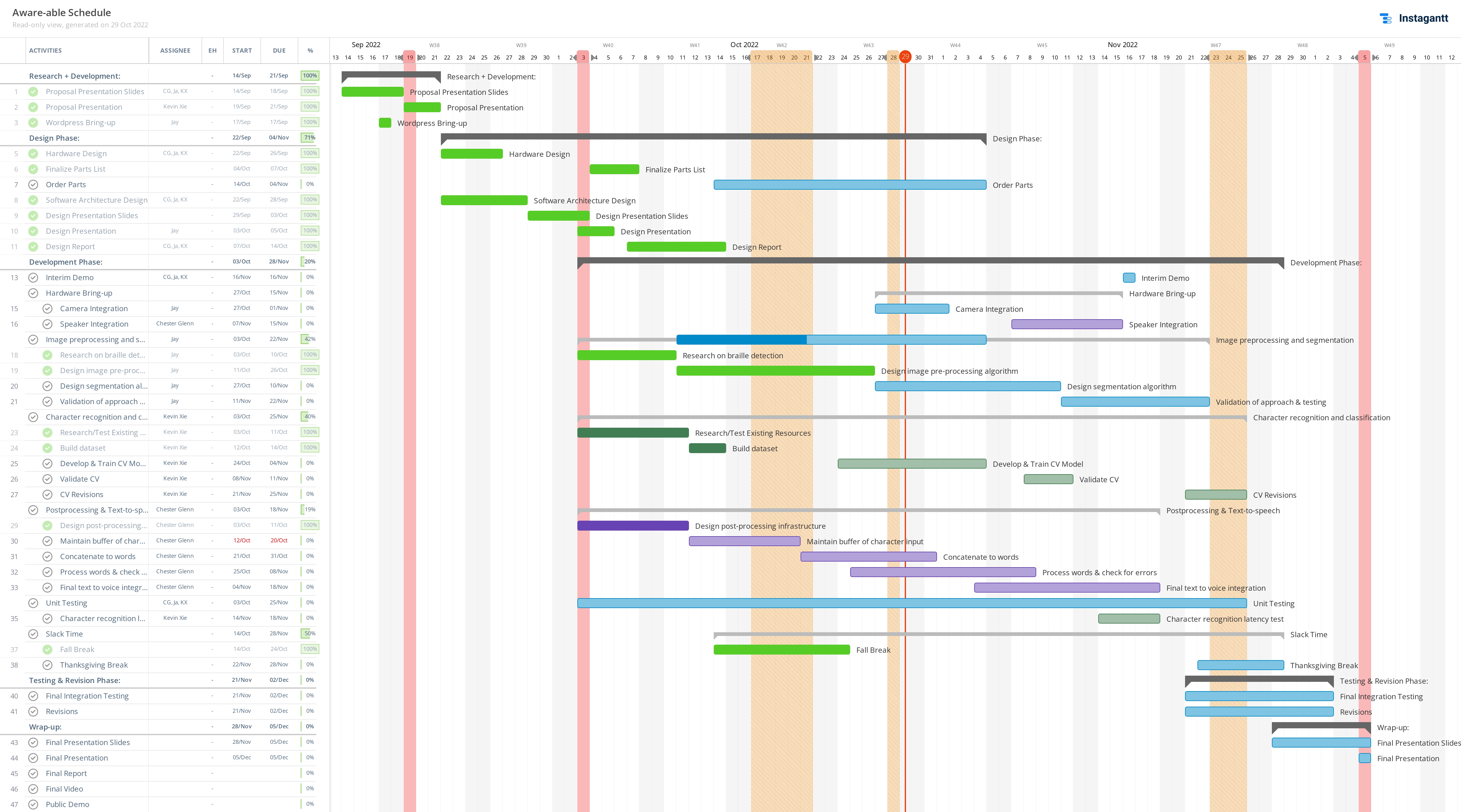

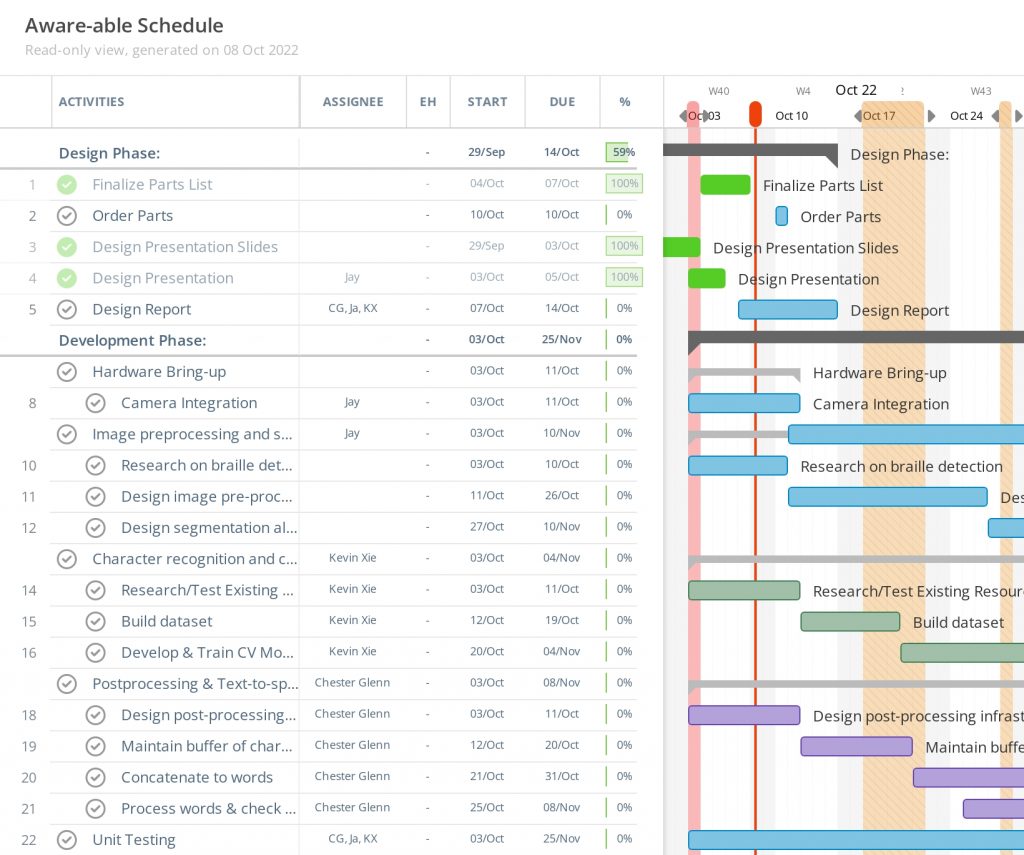

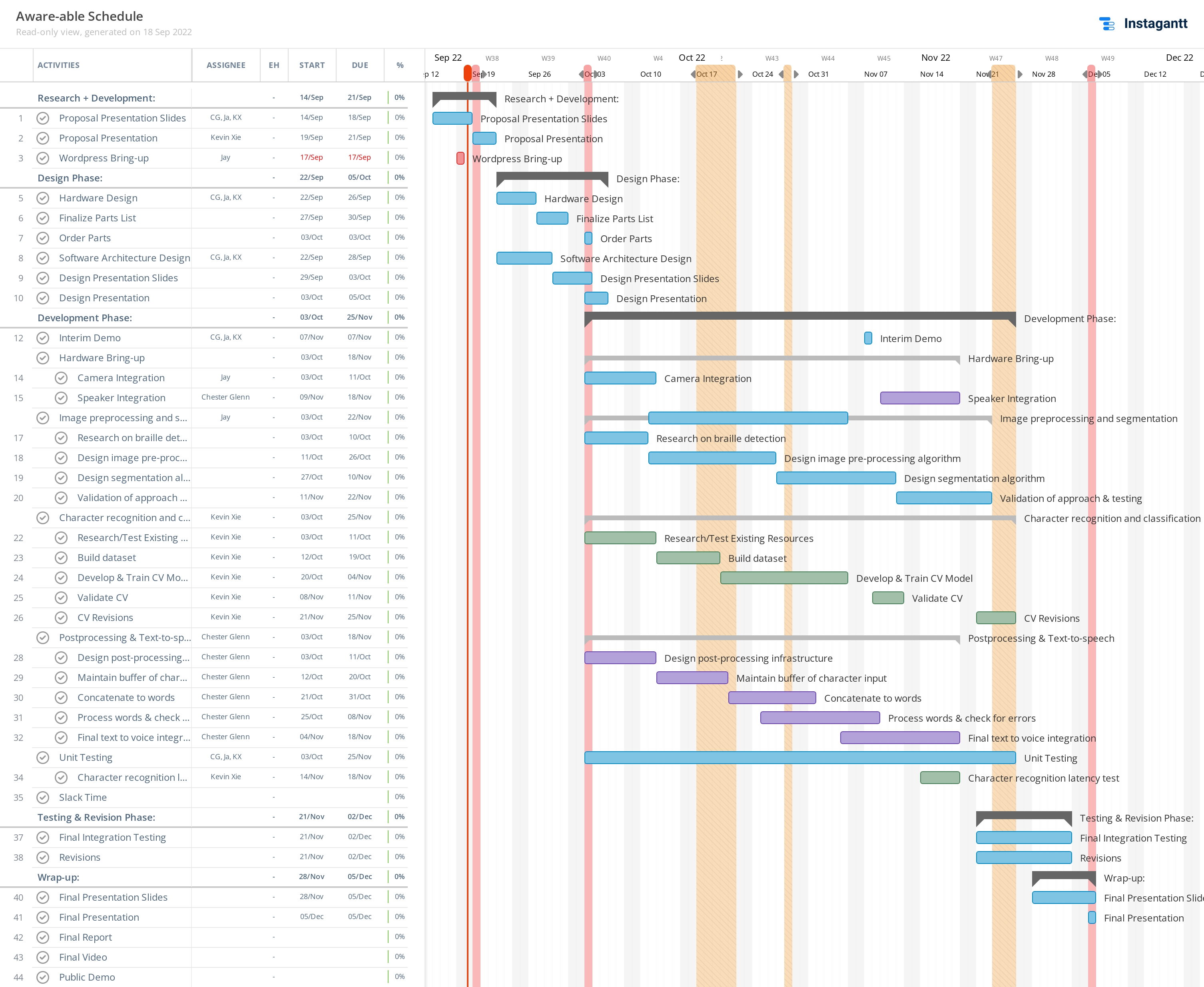

6. Provide an updated schedule if changes have occurred.

Everything is currently on schedule and does not require further revision.