- What are the most significant risks that could jeopardize the success of the project?

This week, the team debriefed and began implementing changes based on feedback from our Interim Demo. We primarily focused on making sure that we had measurable data that could be used to justify decisions made in our system’s design.

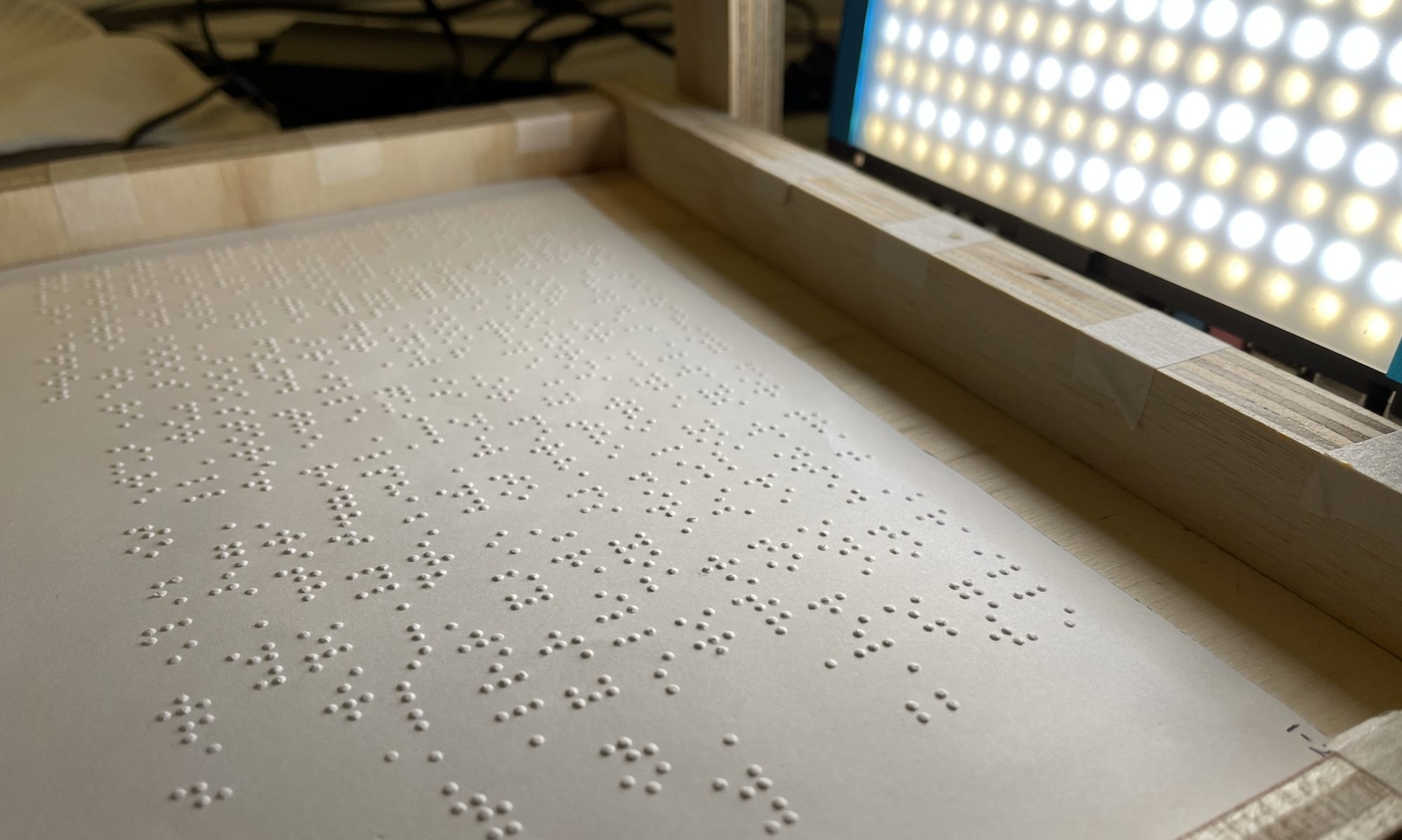

Pre-processing: I am primarily relying on the stats values (the left and top coordinates of individual braille dots, as well as the width and height of the neighboring dots) returned by the “cv2.connectedComponentsWithStats()” function. I compared the pixel locations in the returned matrices against the original image and confirmed that the values are accurate. The redundant dots I currently see come from a limitation of connectedComponentsWithStats(), so I need to remove the duplicate detections scattered around nearby locations using non_max_suppression. There is currently an issue with this step, and I would rather not write the whole function myself, so I am looking for ways to fix it. Once this is resolved, I am nearly done with the pre-processing procedures.

Classification: Latency risks for classification have been mostly addressed this week by changing the input layer of our neural network to accept 10 images for a single inference. The number of images accepted per inference will be tuned later to optimize against our testing environment. In addition, the model was converted from MXNet to ONNX, which is interoperable with NVIDIA’s TensorRT framework. However, using TensorRT seems to have added latency to inference, so that, counterintuitively, inference currently runs faster on the CPU.

Post-processing: The primary concern with the post-processing section of the project at the moment is determining how to integrate audio with the Jetson Nano. Given the difficulties we had with camera integration, we hope that audio will be less difficult, since we only need to send audio out rather than recognize sound input as well.

- How are these risks being managed?

Pre-processing: I am looking more closely into the logic behind non_max_suppression, which is what removes the redundant dots, to make debugging easier.

Classification: More extensive measurements will be taken next week using different inference providers (CPU, TensorRT, CUDA) to inform our choice for the final system.
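A minimal sketch of how these measurements could be collected is shown below. It is not our actual benchmarking script: the `benchmark` and `compare_providers` helpers are hypothetical names, and each provider is assumed to be wrapped in a plain Python callable that takes one batch. Warm-up runs are excluded from the timings because some backends do one-time setup work on their first calls; whether that explains the TensorRT numbers we saw has not been established.

```python
import statistics
import time


def benchmark(run_inference, batch, warmup=3, repeats=20):
    """Time one inference callable on one batch (hypothetical helper).

    run_inference: any callable that accepts the batch, for example a
                   wrapper around a CPU, CUDA, or TensorRT backed session.
    """
    # Warm-up calls are run but not timed.
    for _ in range(warmup):
        run_inference(batch)

    timings_ms = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_inference(batch)
        timings_ms.append((time.perf_counter() - start) * 1000.0)

    return {
        "mean_ms": statistics.mean(timings_ms),
        "median_ms": statistics.median(timings_ms),
        "max_ms": max(timings_ms),
        "runs": len(timings_ms),
    }


def compare_providers(runners, batch, **kwargs):
    """Benchmark every named callable in `runners` on the same batch."""
    return {name: benchmark(fn, batch, **kwargs) for name, fn in runners.items()}


if __name__ == "__main__":
    # Stand-in workload; a real run would pass one callable per provider.
    fake_batch = [0] * 10
    results = compare_providers({"dummy": lambda b: sum(b)}, fake_batch)
    for name, stats in results.items():
        print(name, stats)
```

Reporting the median alongside the mean makes a single slow run less likely to dominate the comparison.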

Post-processing: Now that the camera is integrated, it is important to shift towards the stereo output. I do think it will integrate more easily than the camera, but it is still important that we get everything connected as soon as possible so that any additional hardware needs surface early rather than later on.

- What contingency plans are ready?

Pre-processing: If the built-in non_max_suppression() function still does not work after further debugging attempts, I will have to write it myself.
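If that contingency is needed, a hand-written version could look roughly like the sketch below. This is an illustration of standard greedy non-maximum suppression, not the library function we are currently debugging. It assumes boxes in the same (left, top, width, height) layout as the stats values, and it assumes some per-box score is available (for example the component area); the `iou_threshold` default is a placeholder that would need tuning.

```python
def iou(a, b):
    """Intersection over union of two (left, top, width, height) boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    inter_w = max(0, min(ax2, bx2) - max(a[0], b[0]))
    inter_h = max(0, min(ay2, by2) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.3):
    """Greedy NMS: return indexes of boxes to keep, best score first.

    A box is dropped when it overlaps an already kept box by more than
    iou_threshold (assumed value, to be tuned on real dot detections).
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    # Two near-duplicate detections of one dot, plus a separate dot.
    boxes = [(10, 10, 8, 8), (11, 11, 8, 8), (40, 10, 8, 8)]
    scores = [64, 50, 60]  # assumed score: component area
    print(non_max_suppression(boxes, scores))
```

The comparison is quadratic in the number of boxes, which should be acceptable for the dot counts on a single page but has not been measured.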

- Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)?

Classification: The output of the classification pipeline has been modified to include not only a string of translated characters, but also a dictionary of the character indexes with the lowest confidence, as well as the next 10 predicted letters. This metadata is provided to help improve the efficiency of the post-processing spell checker.
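One possible shape for this output is sketched below. The function name, the number of flagged characters, and the reading of "next 10 predicted letters" as the ten runner-up classes for each flagged position are all assumptions made for illustration; the real pipeline may structure the metadata differently.

```python
def build_output(char_probs, alphabet, num_flagged=3, top_k=10):
    """Build (text, low_confidence) from per-character class probabilities.

    char_probs: one probability list per braille cell, aligned with alphabet.
    Returns the translated string and a dict mapping the indexes of the
    least confident characters to their next top_k candidate letters.
    """
    text_chars = []
    confidences = []
    for probs in char_probs:
        best = max(range(len(probs)), key=lambda i: probs[i])
        text_chars.append(alphabet[best])
        confidences.append(probs[best])

    # Indexes of the characters the model was least sure about.
    flagged = sorted(range(len(confidences)), key=lambda i: confidences[i])[:num_flagged]

    low_confidence = {}
    for idx in flagged:
        ranked = sorted(range(len(alphabet)), key=lambda i: char_probs[idx][i], reverse=True)
        # Skip the top prediction (already in the string); keep the runners-up.
        low_confidence[idx] = [alphabet[i] for i in ranked[1:top_k + 1]]

    return "".join(text_chars), low_confidence


if __name__ == "__main__":
    probs = [[0.9, 0.05, 0.05], [0.4, 0.35, 0.25], [0.1, 0.1, 0.8]]
    print(build_output(probs, "abc", num_flagged=1, top_k=2))
```

With metadata like this, the spell checker can try the listed alternatives at the flagged positions first instead of searching over every character.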

- Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

This change was not necessary, but it will significantly improve the overall efficiency of the pipeline if it is able to stand on its own. It also does not require any significant overhead in time or effort, so it is easy to implement.

- Provide an updated schedule if changes have occurred.