1. What are the most significant risks that could jeopardize the success of the project?

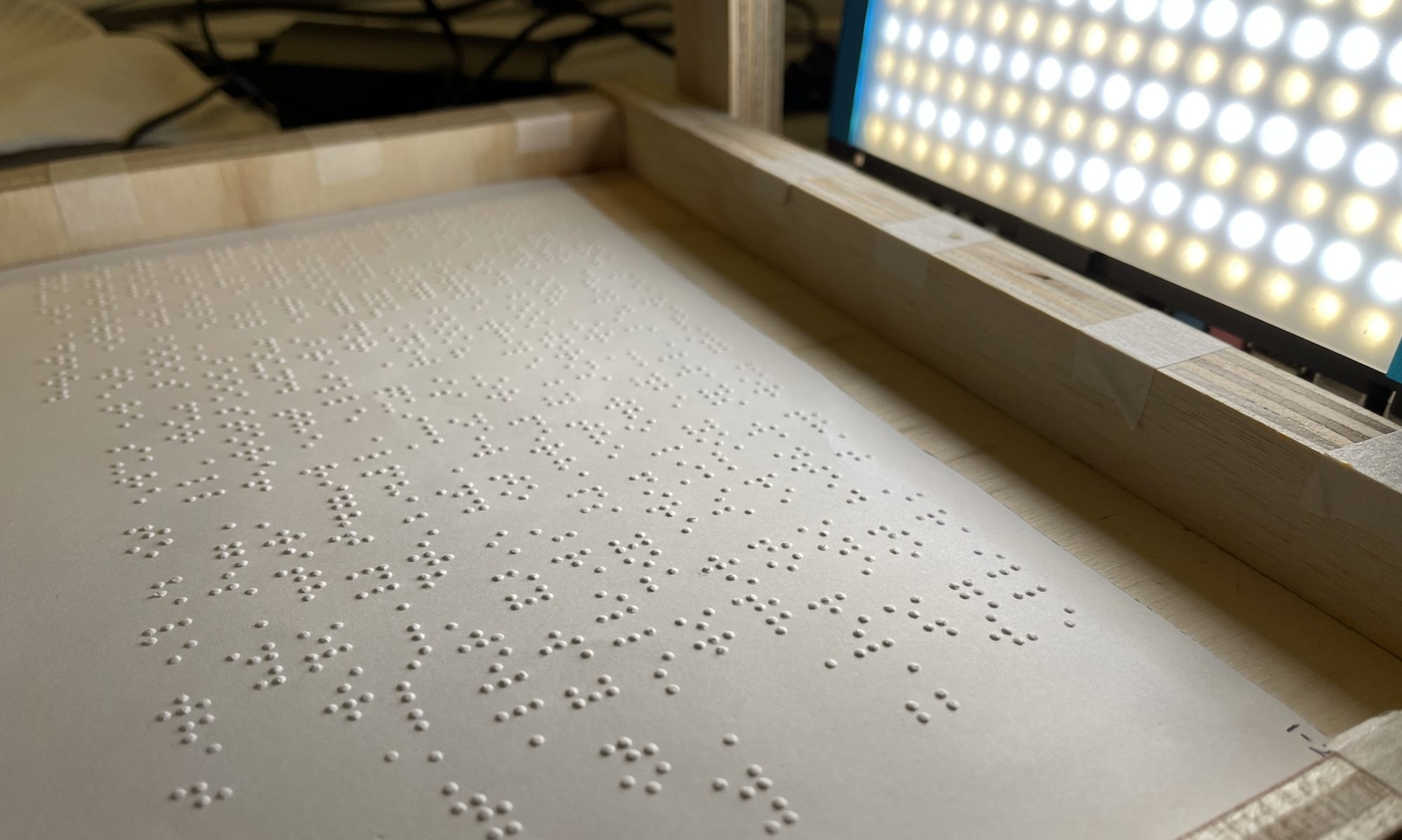

- Pre-processing:

- Currently, I am relying on OpenCV’s “cv2.connectedComponentsWithStats()” function, which labels each connected blob in the binarized image and returns statistics for every blob, including its left and top coordinates, width, height, and area (the blobs of interest being braille dots in our case). However, the accuracy of these statistics depends on the lighting and quality of the original image, so it needs further testing before we decide what modifications to make.

- Classification:

- On the classification side, a new risk that surfaced when we tested neural network inference on the Jetson Nano is latency. Inference takes roughly 0.1 s per character, so if characters are processed sequentially, a long sentence could incur a substantial delay (about 10 s for a 100-character sentence).

- Hardware:

- The Jetson Nano hardware also presented some challenges due to its limited support as a legacy platform in the Jetson ecosystem. Missing drivers and slow package build times make bring-up particularly slow. This is, however, a one-time cost which should not have any significant impact on our final product.

- Post-processing:

- Another hardware-related risk to our final product is the Nano’s audio integration capability. Since audio output is one of the last parts to be integrated, any complications there could be critical, because little time would remain to resolve them.
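To make the pre-processing statistics concrete, the sketch below is a pure-Python stand-in for what “cv2.connectedComponentsWithStats()” computes: an 8-connected flood fill that records each blob’s (left, top, width, height, area), the same column order as OpenCV’s `CC_STAT_LEFT` through `CC_STAT_AREA`. The real OpenCV call also returns a label image and centroids and reports the background as label 0; this sketch omits those. The function names and the median-area filter (`filter_dot_candidates`, `tol`) are hypothetical illustrations of how lighting-induced specks might be separated from real dots, not our current implementation.

```python
from collections import deque
from statistics import median


def connected_components_with_stats(binary):
    """Label 8-connected foreground pixels (truthy values) in a 2-D list.

    Returns one (left, top, width, height, area) tuple per component, in
    row-major discovery order. OpenCV additionally reports the background
    as label 0; this sketch returns foreground components only.
    """
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    stats = []
    for y in range(h):
        for x in range(w):
            if not binary[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill from the first unvisited foreground pixel.
            seen[y][x] = True
            queue = deque([(y, x)])
            left, top, right, bottom, area = x, y, x, y, 0
            while queue:
                cy, cx = queue.popleft()
                area += 1
                left, right = min(left, cx), max(right, cx)
                top, bottom = min(top, cy), max(bottom, cy)
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if 0 <= ny < h and 0 <= nx < w and binary[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            stats.append((left, top, right - left + 1, bottom - top + 1, area))
    return stats


def filter_dot_candidates(stats, tol=0.5):
    """Hypothetical filter: keep blobs whose area is within tol * median of the median area."""
    if not stats:
        return []
    typical = median(s[4] for s in stats)
    return [s for s in stats if abs(s[4] - typical) <= tol * typical]


# Toy binarized image: three 2x2 "dots" plus one single-pixel speck of noise.
img = [
    [1, 1, 0, 0, 1, 1],
    [1, 1, 0, 0, 1, 1],
    [0, 0, 0, 0, 0, 0],
    [1, 1, 0, 0, 0, 1],
    [1, 1, 0, 0, 0, 0],
]
stats = connected_components_with_stats(img)
print(stats)
print(filter_dot_candidates(stats))
```

On this toy image the speck has area 1 while the dots have area 4, so the median-based filter drops it; under poor lighting, dots may fragment or merge, which is exactly what the planned accuracy testing should reveal.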

2. How are these risks being managed?

- Pre-processing:

- As a first step, we will do a pixel-by-pixel comparison between the image and the matrices printed to the terminal, to measure the current accuracy level and guide further tuning of the parameters. Furthermore, we are investigating non-maximum suppression (“non_max_suppression()”) to mitigate some of the inaccuracies that can arise from the initial “connectedComponentsWithStats()” step.

- Classification:

- To address latency arising from per-character inference, we are hoping to convert our model from the MXNet framework to NVIDIA’s TensorRT, which the Jetson can use to run the model on a batch of images in parallel. This should reduce the sequential bottleneck that we are currently facing.

- Hardware:

- Since the hardware risks are a one-time cost, as mentioned above, we do not feel that we need to take steps to manage them at this time. However, we are considering using a Docker image to cross-compile larger packages for the Jetson on a more powerful system.

- Post-processing:

- After finishing camera integration, we will work on audio output through the USB port. We have a stereo adapter ready to connect to headphones.
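As a reference for the non-maximum suppression being investigated in pre-processing, here is a minimal greedy NMS sketch over (left, top, width, height) boxes, the format “connectedComponentsWithStats()” produces. It is not the specific “non_max_suppression()” function mentioned above; OpenCV’s own built-in for this is `cv2.dnn.NMSBoxes`. Connected components carry no confidence score, so using each blob’s area as its score, and the `iou_threshold=0.3` default, are assumptions for illustration only.

```python
def iou(a, b):
    """Intersection-over-union of two (left, top, width, height) boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.3):
    """Greedy NMS: return indices of kept boxes, highest score first.

    A box is kept only if it does not overlap any already-kept box by
    more than iou_threshold.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two overlapping detections of the same dot, plus one separate dot.
boxes = [(0, 0, 4, 4), (1, 1, 3, 3), (10, 10, 4, 4)]
scores = [w * h for _, _, w, h in boxes]  # assumption: blob area used as the score
print(non_max_suppression(boxes, scores))
```

Here the smaller box overlaps the first with an IoU of 0.5625, so it is suppressed as a duplicate and only indices 0 and 2 survive.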

3. What contingency plans are ready?

- Classification:

- If the inference time on the Jetson Nano is not significantly improved by moving to TensorRT, one contingency plan we have in place is to migrate back to the Jetson AGX Xavier, which has significantly more computing power. While this comes at the expense of portability and power efficiency, it is within the parameters of our original design.

- Post-processing:

- As a fallback, we could attach a sound board (an audio input/output PCB) to the Nano to play sound. This adds expense and complexity, but it seems more likely to work reliably.
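Whether the TensorRT migration counts as a “significant” improvement, or whether the Xavier fallback is needed, comes down to simple latency arithmetic. The sketch below compares sequential inference at the measured ~0.1 s per character against batched inference. The per-batch time, batch size, and latency budget are hypothetical placeholders until a TensorRT engine is actually benchmarked, and `needs_fallback` is an illustrative decision rule, not an agreed requirement.

```python
import math

# Measured on the Jetson Nano: roughly 0.1 s of inference per character.
PER_CHAR_LATENCY_S = 0.1


def sequential_latency(num_chars, per_char_s=PER_CHAR_LATENCY_S):
    """Total time when characters are classified one at a time."""
    return num_chars * per_char_s


def batched_latency(num_chars, batch_size, per_batch_s):
    """Total time when characters are classified in fixed-size batches.

    per_batch_s is a placeholder until a TensorRT engine is benchmarked.
    """
    return math.ceil(num_chars / batch_size) * per_batch_s


def needs_fallback(num_chars, batch_size, per_batch_s, budget_s):
    """True if batched inference still exceeds the latency budget (hypothetical rule)."""
    return batched_latency(num_chars, batch_size, per_batch_s) > budget_s


# Hypothetical numbers: a 60-character sentence, batches of 32, 0.15 s per batch.
print(sequential_latency(60))         # about 6 s
print(batched_latency(60, 32, 0.15))  # 2 batches, about 0.3 s
print(needs_fallback(60, 32, 0.15, budget_s=1.0))
```

Sequential cost grows linearly with sentence length, while batched cost grows only with the number of batches, which is why batching attacks the bottleneck described in the classification risk.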

4. Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)?

Integrating our individual components into the overall software pipeline did not introduce any obvious challenges, so we do not think it is necessary to make any significant changes to our software system. However, in response to interim demo feedback, we are creating more definitive testing metrics to guide our choice of algorithms and courses of action. This will allow us to justify our choices going forward and make our final report clearer. In addition to testing, we are considering a more unified interaction between classification and post-processing, so that we can determine more systematically which characters are misclassified most often.
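One way the classification and post-processing stages could share information about error-prone characters is a per-character error tally built from labeled test images. The sketch below assumes test results are available as (expected, predicted) character pairs; the function names and the sample data are hypothetical, and the resulting rates and confusion counts are the kind of definitive metric that could feed post-processing corrections.

```python
from collections import Counter, defaultdict


def per_char_error_rates(pairs):
    """Compute per-character error rates from (expected, predicted) pairs.

    Also returns, for each expected character, a Counter of the wrong
    characters it was classified as.
    """
    totals = Counter()
    errors = Counter()
    confusions = defaultdict(Counter)
    for expected, predicted in pairs:
        totals[expected] += 1
        if predicted != expected:
            errors[expected] += 1
            confusions[expected][predicted] += 1
    rates = {c: errors[c] / totals[c] for c in totals}
    return rates, confusions


def most_error_prone(rates, k=3):
    """Return the k characters with the highest error rate."""
    return sorted(rates, key=rates.get, reverse=True)[:k]


# Hypothetical labeled test results: (ground-truth character, classifier output).
pairs = [("a", "a"), ("a", "a"), ("e", "i"), ("e", "e"),
         ("i", "e"), ("i", "i"), ("i", "i"), ("i", "i")]
rates, confusions = per_char_error_rates(pairs)
print(most_error_prone(rates))
print(dict(confusions["e"]))
```

Keeping the confusion counts, not just the error rates, tells post-processing which substitution to consider when a low-confidence character appears.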

5. Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

The minor changes that we are making to the individual subsystems are crucial to the efficiency and effectiveness of our product. They also ensure that our decisions stay aligned with the advice given by our professors and TAs.