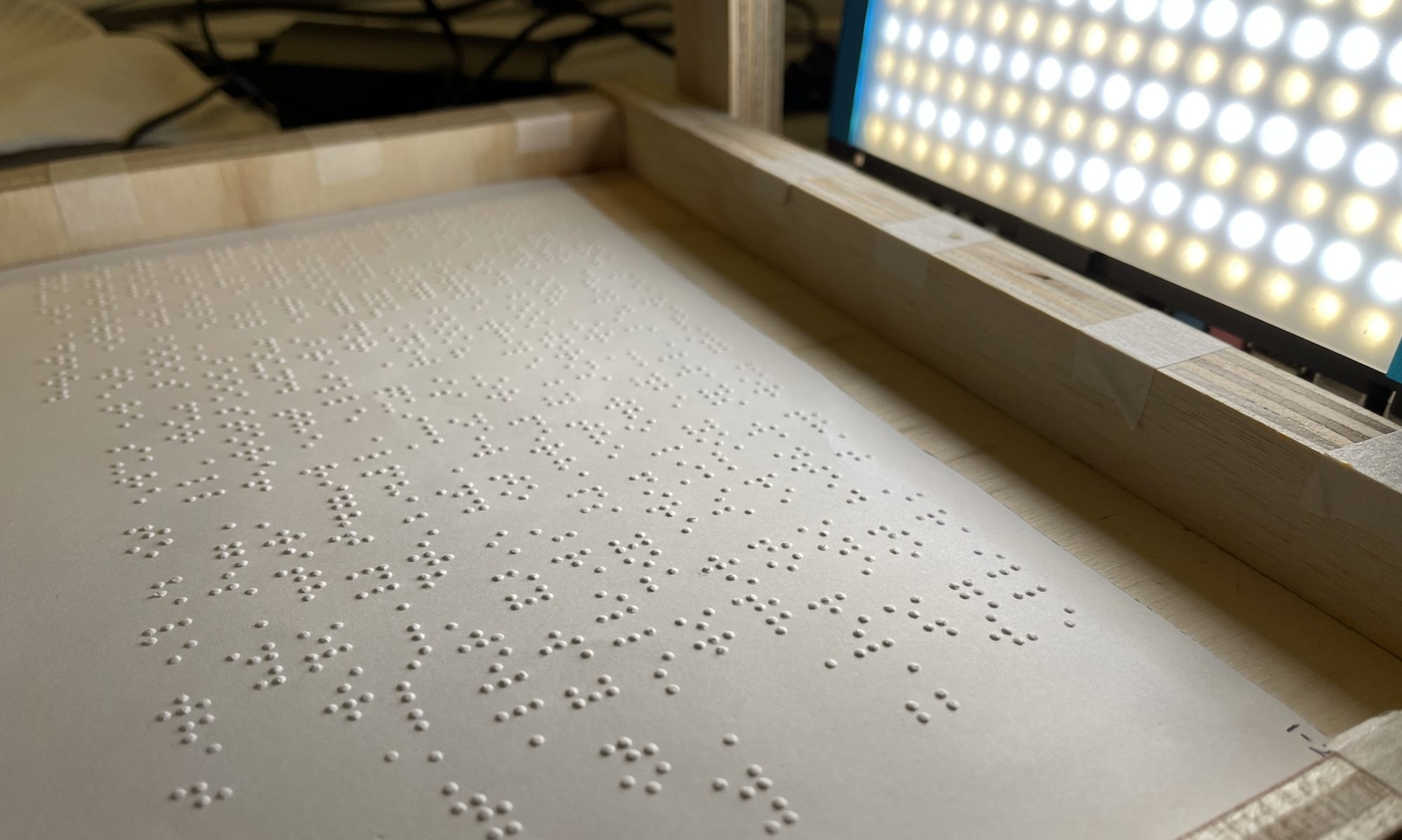

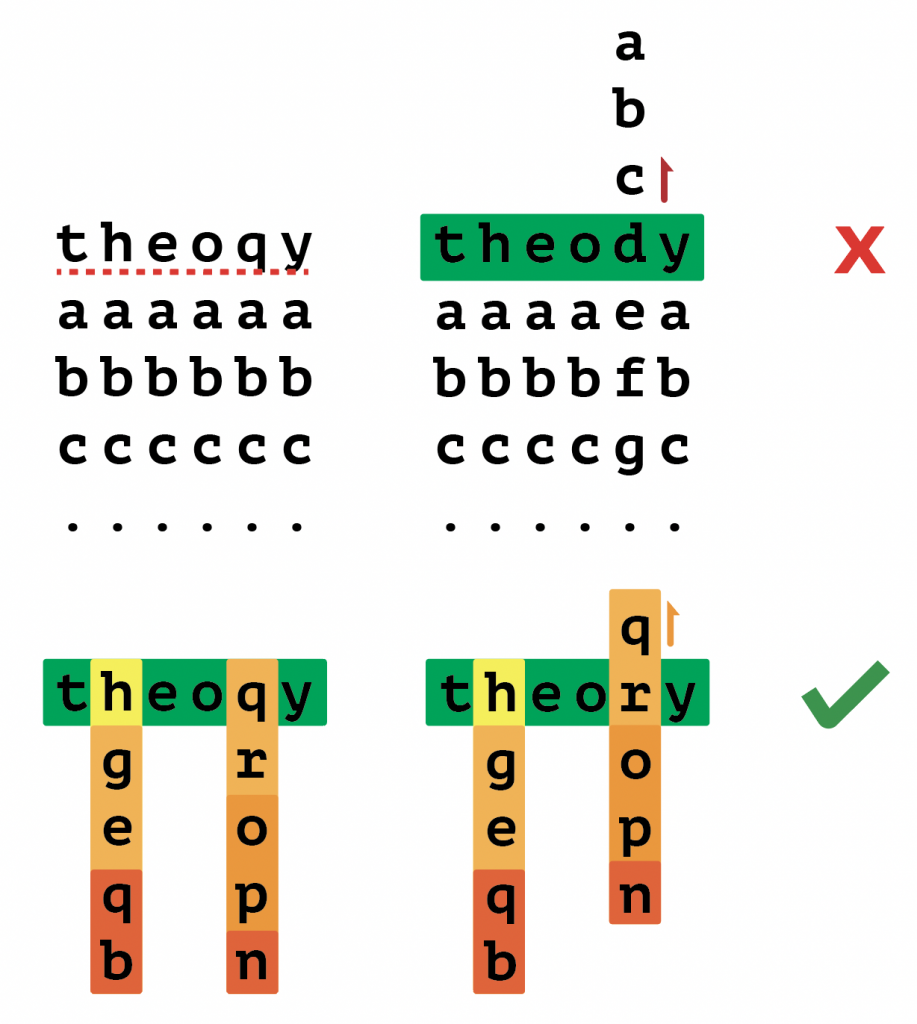

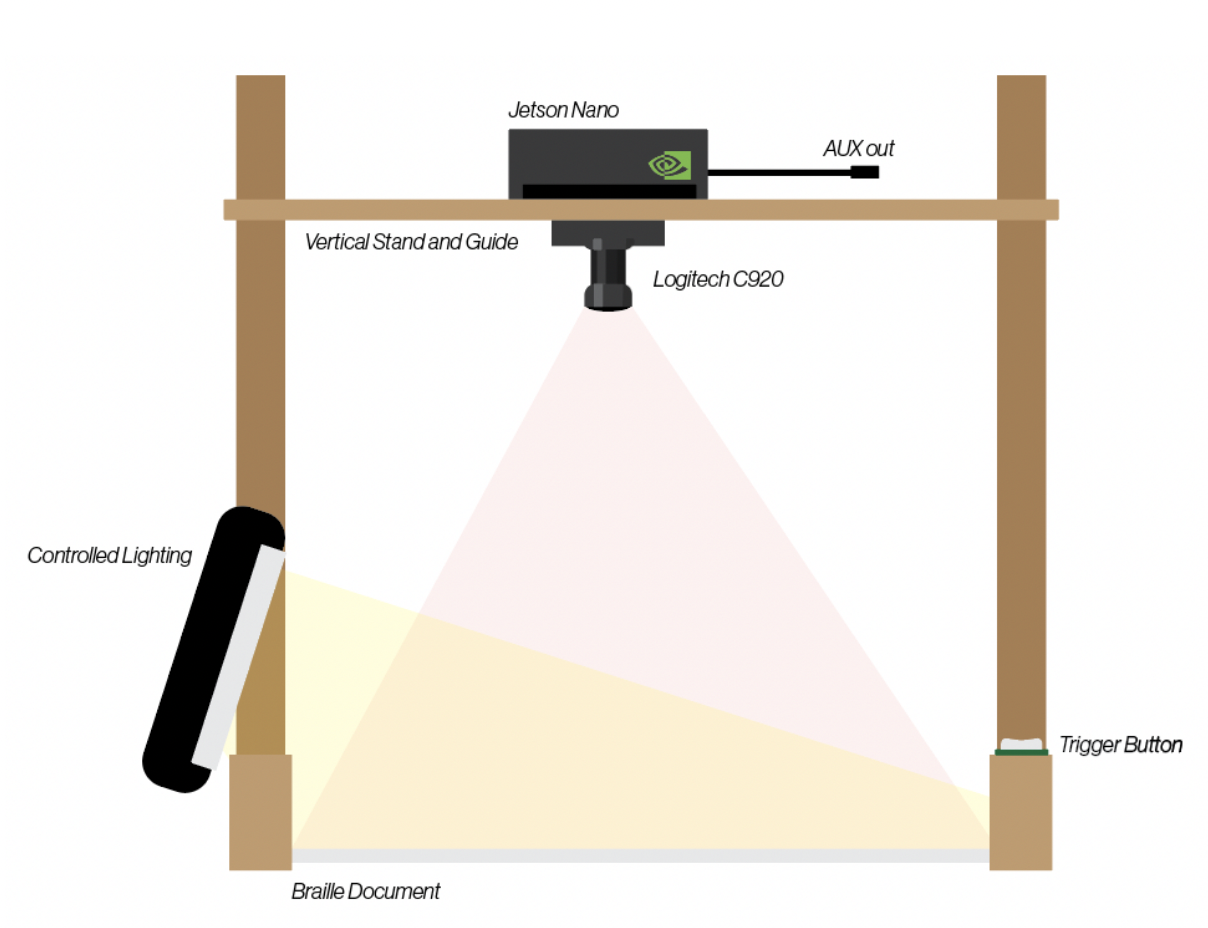

This week started with final presentations, for which I prepared slide content and updated graphics.

After the presentations, I continued working on integration, putting all of our software components together and verifying that they function on the AGX Xavier.

AGX Xavier

This week, I got TensorRT working on the AGX Xavier by reinstalling the correct distribution of onnxruntime from NVIDIA’s pre-built Jetson Zoo. I also installed drivers for a USB Wi-Fi dongle, so we no longer need to be tethered by Ethernet. Once the Xavier was set up, I measured inference performance on my classification dataset:

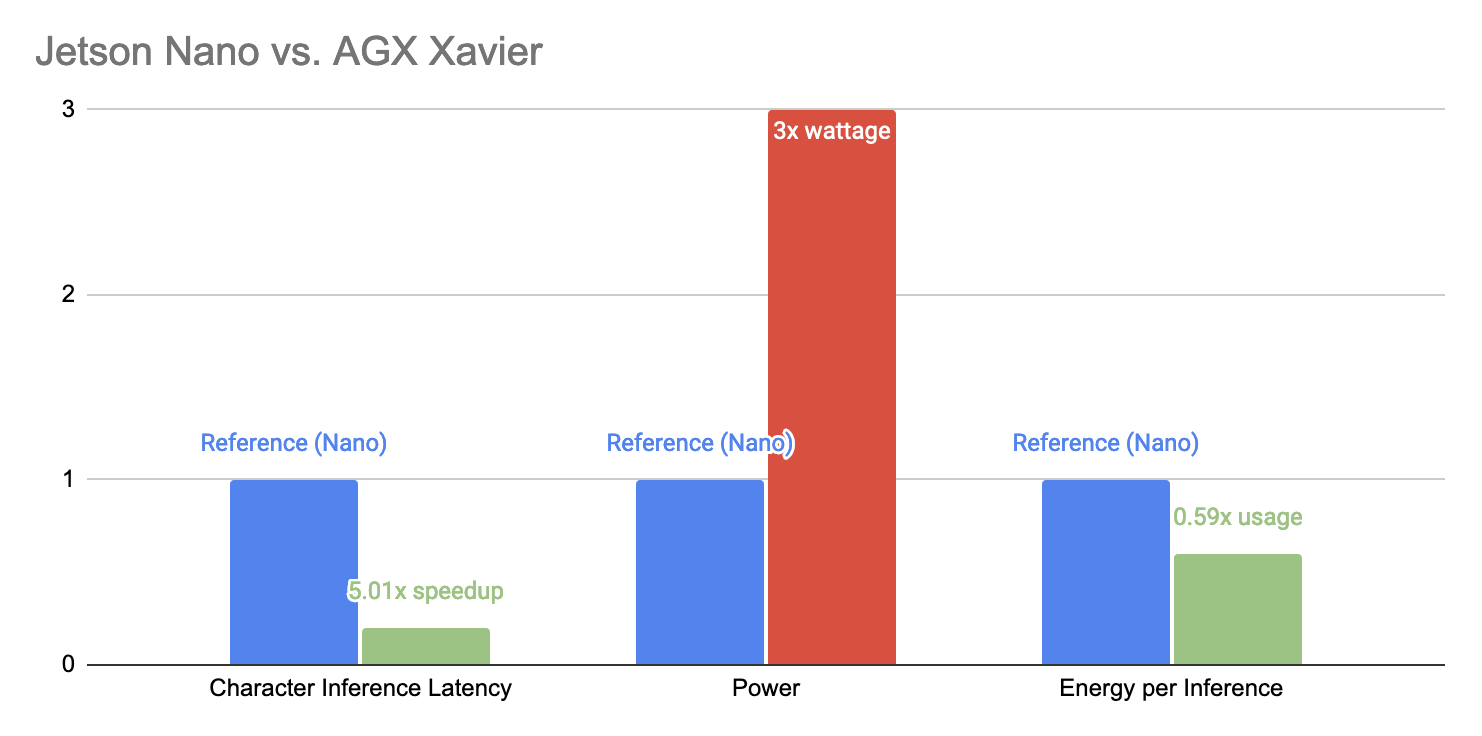
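As a rough illustration of the onnxruntime setup described above, the sketch below shows one way to request TensorRT and fall back to CUDA or CPU when a build lacks it. This is not our exact code: `pick_providers` and `load_session` are hypothetical helper names, and the model path is whatever ONNX file you export. The provider strings and the `get_available_providers` / `InferenceSession` calls are the standard onnxruntime ones.

```python
# Preferred ONNX Runtime execution providers, fastest first.
PREFERRED_PROVIDERS = [
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "CPUExecutionProvider",
]


def pick_providers(available):
    """Return the preferred providers that this onnxruntime build supports."""
    chosen = [p for p in PREFERRED_PROVIDERS if p in available]
    # Fall back to CPU if nothing on the preferred list is reported.
    return chosen or ["CPUExecutionProvider"]


def load_session(model_path):
    """Create an inference session (hypothetical helper; needs onnxruntime)."""
    # Imported lazily so pick_providers can be used without onnxruntime.
    import onnxruntime as ort

    providers = pick_providers(ort.get_available_providers())
    print("Using providers:", providers)
    return ort.InferenceSession(model_path, providers=providers)
```

Printing the chosen providers is a cheap sanity check: if `TensorrtExecutionProvider` is missing from the list, the installed onnxruntime build probably was not compiled with TensorRT support.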

It quickly became clear that the Xavier had a huge performance advantage over the Nano, and given that we have rescoped the project to a stationary device, it seemed reasonable to pivot to the Xavier platform. Crucially, the Xavier provided more than a 7x speedup over the Nano when running inference with TensorRT. This meant that we could translate 625 characters (more than 100 words) within one second of latency, far exceeding our requirements. Since the Xavier's maximum power draw is only 3x that of the Nano, we felt that the trade-off favored the Xavier.
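The throughput figures above can be checked with some quick arithmetic. The sketch below assumes an average of 6 characters per word (5 letters plus a space), which is my own assumption rather than a measured value, and treats the 7x speedup as a lower bound.

```python
CHARS_PER_SECOND = 625  # Xavier + TensorRT throughput reported above
SPEEDUP_OVER_NANO = 7   # "more than 7x", so this is a lower bound
CHARS_PER_WORD = 6      # assumption: 5 letters plus one space per word

# Time budget per character at the reported throughput.
ms_per_char = 1000 / CHARS_PER_SECOND

# Whole words that fit in one second under the word-length assumption.
words_per_second = CHARS_PER_SECOND // CHARS_PER_WORD

# Upper bound on Nano throughput implied by a speedup of at least 7x.
nano_chars_per_second = CHARS_PER_SECOND / SPEEDUP_OVER_NANO

print(f"{ms_per_char:.1f} ms per character")
print(f"{words_per_second} words per second")
print(f"at most ~{nano_chars_per_second:.0f} characters per second on the Nano")
```

Under these assumptions, the Xavier spends about 1.6 ms per character and handles roughly 104 words per second, while the Nano would manage at most about 89 characters per second.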

Integrating the software subsystems was fairly straightforward once again, allowing me to perform some informal tests on the entire system. Using the modified AngelinaReader to perform real-time crops, we achieved 3-5 s of capture-to-read latency. Meanwhile, our own pipeline with hardcoded crops and preprocessing reached under 2 s of latency, as we had hoped.
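For anyone wanting to reproduce this kind of informal capture-to-read measurement, a minimal per-stage timing sketch follows. The stage names and the placeholder lambdas are hypothetical stand-ins, not our actual capture, crop, and classification code.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(stage, timings):
    """Record the wall-clock duration of one pipeline stage in `timings`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = time.perf_counter() - start


def run_once(stages):
    """Run (name, function) stages in order; return (result, timings)."""
    timings = {}
    data = None
    for name, fn in stages:
        with timed(name, timings):
            data = fn(data)  # each stage consumes the previous stage's output
    timings["total"] = sum(timings.values())
    return data, timings


# Placeholder stages standing in for the real subsystems.
stages = [
    ("capture", lambda _: "frame"),
    ("crop", lambda frame: [frame]),
    ("classify", lambda crops: ["a" for _ in crops]),
]
result, timings = run_once(stages)
for name, seconds in timings.items():
    print(f"{name}: {seconds * 1000:.3f} ms")
```

Breaking the total down by stage makes it easier to see whether cropping or classification dominates the latency.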

Experimental Feature: Finger Cursor

Because I had some extra time this week, I decided to implement an idea I had to address some of the ethical concerns that were raised regarding our project, specifically that users will become overreliant on the device and neglect learning braille on their own. To combat this, I implemented an experimental feature that allows the user to read character by character at their own pace, as if they were reading the braille themselves.

By combining the bounding boxes I can extract from AngelinaReader with Google’s MediaPipe hand pose estimation model, I was able to prototype a feature for our demo that allows users to learn braille characters as they move their fingers over them.

Using the live feed from the webcam, we can detect when the tip of a user’s index finger is within the bounding box of a character and read the associated predictions from the classification subsystem out loud. This represents a quick usability prototype to demonstrate the educational value of our solution.
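The core of the fingertip check described above is a point-in-box test, sketched below. It assumes boxes are given as `(left, top, right, bottom)` pixel tuples, which may not match the format AngelinaReader actually returns, and `char_under_fingertip` is a hypothetical helper name. MediaPipe Hands reports landmarks with `x` and `y` normalized to the frame, with the index fingertip at landmark index 8, so the coordinates are scaled to pixels first.

```python
from typing import List, Optional, Tuple

INDEX_FINGER_TIP = 8  # index fingertip landmark in MediaPipe Hands

Box = Tuple[int, int, int, int]  # assumed format: (left, top, right, bottom)


def to_pixels(norm_xy: Tuple[float, float],
              frame_size: Tuple[int, int]) -> Tuple[float, float]:
    """Scale normalized (x, y) in [0, 1] to pixels for a (width, height) frame."""
    x, y = norm_xy
    width, height = frame_size
    return x * width, y * height


def char_under_fingertip(norm_xy: Tuple[float, float],
                         frame_size: Tuple[int, int],
                         boxes: List[Box],
                         labels: List[str]) -> Optional[str]:
    """Return the label of the first box containing the fingertip, else None."""
    px, py = to_pixels(norm_xy, frame_size)
    for (left, top, right, bottom), label in zip(boxes, labels):
        if left <= px <= right and top <= py <= bottom:
            return label
    return None


# Example with two made-up character boxes in a 200x100 frame.
boxes = [(0, 0, 50, 50), (60, 0, 110, 50)]
labels = ["a", "b"]
hit = char_under_fingertip((0.4, 0.25), (200, 100), boxes, labels)
miss = char_under_fingertip((0.9, 0.9), (200, 100), boxes, labels)
print(hit, miss)
```

In a live loop, `norm_xy` would come from the `x` and `y` fields of landmark `INDEX_FINGER_TIP` on each detected hand, and the label would be spoken only when it changes so the same character is not repeated on every frame.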