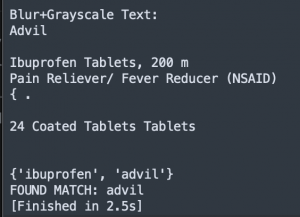

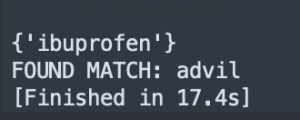

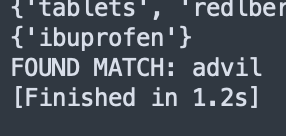

This week, I finished text-orientation correction and improved the efficiency and readability of my code. I initially used Tesseract's `image_to_osd` function to detect the text orientation, but it reported that the image resolution was 0 dpi, even though I had made sure to test with an image whose resolution was 300 dpi. I couldn't fix that error, so instead of using `image_to_osd`, I wrote my own function that checks how skewed the image is and corrects it.

My other main concern was efficiency. Depending on the image, my code took anywhere from 2.5 s to 17.4 s to run. This was mainly due to inefficient code and to the inconsistent resolutions of the images I used for testing. This week, I made the code faster and easier to read in two ways. I broke it into multiple helper functions and files, and I made it stop as soon as it finds text that matches an entry in our product info dictionary. I also wrote a helper function that saves our product info to a JSON file, which will be useful for the web app later. With these changes, the best-case image went from 2.5 s to 1.2 s, and the worst-case image went from 17.4 s to 5.9 s.
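Below is a minimal sketch of the early-exit matching and the JSON helper described above. It is not my actual code. The names `find_product` and `save_product_info`, the sample products, and the idea of trying several versions of the image in turn (for example, raw and then deskewed) are all hypothetical. The OCR call is passed in as a function, so the expensive OCR step is skipped for every image after the first match.

```python
import json
from typing import Callable, Iterable, Optional, TypeVar

ImageT = TypeVar("ImageT")


def find_product(
    images: Iterable[ImageT],
    ocr: Callable[[ImageT], str],
    product_info: dict,
) -> Optional[str]:
    """Return the first product name found in the OCR text, or None.

    OCR runs on one image at a time, and the loop returns as soon as a
    product name matches, so the remaining images are never OCR'd.
    """
    for image in images:
        text = ocr(image).lower()  # the expensive step
        for name in product_info:
            if name.lower() in text:  # case-insensitive substring match
                return name  # early exit: skip OCR on the remaining images
    return None


def save_product_info(product_info: dict, path: str) -> None:
    """Write the product info dictionary to a JSON file (e.g. for the web app)."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(product_info, f, indent=2)


if __name__ == "__main__":
    # Hypothetical product info and OCR results, for illustration only.
    products = {"Cheerios": {"price": 3.99}, "Skippy": {"price": 2.49}}
    fake_ocr_text = {"raw": "blurry n0ise", "deskewed": "CHEERIOS Whole Grain"}
    print(find_product(["raw", "deskewed"], fake_ocr_text.get, products))
```

With pytesseract, `ocr` could be `pytesseract.image_to_string`. In that case, the slower preprocessed variants would only be OCR'd when the earlier ones fail to match.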

This definitely still needs improvement, since users will not want to wait about 6 s for each product to be scanned. Next week, I hope to improve efficiency further using test images with consistent resolution. I will also use the camera I got from Raymond to take pictures of the items and test them with my code. I also hope to improve my image preprocessing and start integrating with the Raspberry Pi and the web app. I think I am more on track than last week, since my code has improved.