The week of 12/4, I prepared the final presentation and put the finishing touches on the system for the TechSpark Expo next week. I worked with Alan on the cursor smoothness metrics, and we discussed changing how the system behaves by remapping which gestures correspond to which actions. Mainly, click-and-drag is now a fist, and a single click is an OK sign. This coming week, we’re going to plan out the final report and video as well as the TechSpark demo. I also spent a good amount of time refactoring the code into classes to make it much neater. We originally had all of the gesture recognition and flow chart logic in the main function of the demo file, so I moved it into a separate class and now just call that class’s methods from main. I also added comments to all the major functions, so if we want to reuse this code and keep working on it in the future, it will be easy to modify. The bulk of the project work is done; all that’s left is finalizing our metrics for the final report, including the user testing and the questions we will include in it. Overall, I am on track with my work, and we are in good shape for next week’s presentations.
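
As an illustration of what a cursor smoothness metric could look like, here is a minimal sketch with two hypothetical measures: the mean distance of cursor samples from their centroid while the hand is held still, and the mean frame-to-frame step. These are assumptions for illustration, not necessarily the metrics we report.

```python
import math

def jitter_from_centroid(points):
    """Mean Euclidean distance (pixels) of cursor samples from their centroid.

    Intended for samples recorded while the user holds their hand still,
    so any spread is jitter rather than intentional motion.
    """
    if not points:
        raise ValueError("need at least one cursor sample")
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    return sum(math.hypot(x - cx, y - cy) for x, y in points) / len(points)

def mean_step(points):
    """Mean distance (pixels) the cursor moves between consecutive frames."""
    if len(points) < 2:
        return 0.0
    steps = [math.hypot(x1 - x0, y1 - y0)
             for (x0, y0), (x1, y1) in zip(points, points[1:])]
    return sum(steps) / len(steps)
```

Lower values on both mean a steadier cursor; comparing them before and after a smoothing change gives a simple tradeoff data point.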

Brian’s Status Report for 12/4

For the final two weeks of the semester, I set aside most of my time for refining the feel of using our product and preparing for our final presentation and public demo.

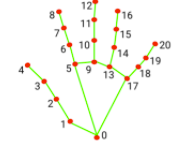

For the refining portion, I added minor smoothing to the mouse movement by averaging the locations of the 6 landmarks surrounding the palm of the user’s hand.
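
Averaging several landmarks means noise in any single point is partly cancelled out. A minimal sketch, assuming a MediaPipe-style 21-landmark hand model where `landmarks` is a sequence of (x, y) pairs; the specific six indices below are an assumption, not necessarily the ones in our code.

```python
# Assumed MediaPipe Hands indices for six landmarks around the palm:
# wrist (0), thumb CMC (1), and the MCP knuckles of the index (5),
# middle (9), ring (13), and pinky (17) fingers.
PALM_LANDMARKS = (0, 1, 5, 9, 13, 17)

def palm_center(landmarks, indices=PALM_LANDMARKS):
    """Average the (x, y) positions of the palm landmarks into one cursor anchor point."""
    xs = [landmarks[i][0] for i in indices]
    ys = [landmarks[i][1] for i in indices]
    return sum(xs) / len(xs), sum(ys) / len(ys)
```

Palm landmarks are a reasonable choice because they move little when the fingers change shape, so forming a gesture shifts the anchor less than a fingertip would.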

Following that, the hand location is averaged across the last 3 frames, acting as a low-pass filter on the noise generated by our CV system.
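
A 3-frame average is a simple moving-average (FIR) low-pass filter. A minimal sketch, assuming positions arrive once per frame; the class name is hypothetical.

```python
from collections import deque

class MovingAverage2D:
    """Average the last `window` hand positions (a simple moving-average low-pass filter)."""

    def __init__(self, window=3):
        self.history = deque(maxlen=window)  # oldest samples fall off automatically

    def update(self, x, y):
        """Add this frame's position and return the smoothed position."""
        self.history.append((x, y))
        n = len(self.history)
        return (sum(p[0] for p in self.history) / n,
                sum(p[1] for p in self.history) / n)
```

The design tradeoff is lag: the average of the last three samples is centered one frame in the past, so a longer window gives a steadier but less responsive cursor.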

Further, to work around an issue where the product could not differentiate between a click and a click-and-hold, I gave each action its own gesture. Click-and-drag (holding) is now closing the hand into a fist, while a simple click is touching the index finger to the thumb, like the ASL number 9.
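
With one gesture per action, the mouse logic only has to react to changes in the recognized gesture. A minimal sketch, assuming hypothetical label names `"fist"` and `"ok_sign"`; the returned action names could then be passed to `mouse.press()`, `mouse.release()`, and `mouse.click()` from the `mouse` package.

```python
# Hypothetical label names; the classifier's real class names may differ.
FIST = "fist"
OK_SIGN = "ok_sign"

def mouse_actions(prev_label, label):
    """Return the mouse actions triggered when the gesture changes from prev_label to label."""
    if prev_label == label:
        return []                  # same gesture as last frame: nothing new to do
    actions = []
    if prev_label == FIST:
        actions.append("release")  # fist opened: end the click-and-drag
    if label == FIST:
        actions.append("press")    # fist closed: start a click-and-drag
    elif label == OK_SIGN:
        actions.append("click")    # index finger touches thumb: single click
    return actions
```

Acting only on transitions means a fist held for many frames produces one press and one release, and an OK sign held for many frames produces one click.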

Finally, in preparing for our final demo and presentation I spent a few hours running experiments involving different model architectures and data augmentation to provide reasonable insight into tradeoffs and design decision outcomes.

Alan’s Status Report for 12/4

This week and last week, I started system and component testing of our project, and I worked on and presented our group’s Final Presentation. For our metrics, I ran a number of trials of our click detection and jitter distance tests to contribute results to our group average. I also contributed code to make mouse movement smoother (by averaging positional inputs) and to make gesture detection more practical (by adjusting the number of detections needed to execute a mouse function). In the coming week, I will continue gathering metrics for the other mouse functions and work on the final deliverables. I will keep tuning the parameters in our code to get the best measurements possible so that we surpass our requirements. I will also recruit new users, such as my roommates, for user testing. I am on schedule and should be able to meet the final deadlines and help give a successful final demo.
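
Requiring several detections before acting is a form of debouncing. A minimal sketch, assuming the classifier emits one label per frame and that "detections" means consecutive frames; the class name and default of 3 are hypothetical.

```python
class GestureDebouncer:
    """Only report a gesture after it has been seen on `required` consecutive frames."""

    def __init__(self, required=3):
        self.required = required
        self.candidate = None   # label currently being counted
        self.count = 0          # consecutive frames the candidate has been seen
        self.confirmed = None   # last label that passed the threshold

    def update(self, label):
        """Feed one per-frame classifier label; return the currently confirmed gesture."""
        if label == self.candidate:
            self.count += 1
        else:
            self.candidate, self.count = label, 1
        if self.count >= self.required:
            self.confirmed = label
        return self.confirmed
```

Raising `required` filters out more one-frame misclassifications but delays every action by `required - 1` frames, which is exactly the kind of number worth tuning against the requirements.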

Team Status Report for 12/4

This week and last week, the team started testing, verification, and metric collection alongside continued integration and improvement of our system. All of the risks and challenges from throughout the semester have been handled, and at this point we are refining our product to function as well as possible for the final demo. We are mostly testing and making small system changes and adjustments to meet or surpass our requirements. The schedule and design for our project are still the same, and we will focus on finishing the final deliverables in the coming week.

Brian Lane’s Status Update 11/20

This week I continued improving the model. Specifically, I performed data augmentation to improve overall validation accuracy.

The current model outputs high-confidence predictions for a couple of gestures, namely the open hand and the fist, when they directly face the camera. For gestures involving a specific number of fingers, the model is less certain and only predicts correctly when the hand directly faces the camera.

I am still unsure how to improve accuracy on the more complex gestures, but the problem of hand orientation relative to the camera has a straightforward solution: data augmentation.

Using math similar to that in my previous blog post, where I explained 2D rotation, I spent the week writing a script that performs random rotations of the training data about the X, Y, and Z axes instead of only the Z-axis rotations used initially.
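
For reference, these are the standard rotation matrices about the X, Y, and Z axes (right-handed coordinates, counterclockwise angles). The 2D rotation from the earlier post is the upper-left 2×2 block of R_z, which is why Z-only rotation keeps the points in the XY plane while X and Y rotations tilt them out of it.

```latex
R_x(\alpha) = \begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos\alpha & -\sin\alpha \\ 0 & \sin\alpha & \cos\alpha \end{bmatrix}, \quad
R_y(\beta) = \begin{bmatrix} \cos\beta & 0 & \sin\beta \\ 0 & 1 & 0 \\ -\sin\beta & 0 & \cos\beta \end{bmatrix}, \quad
R_z(\gamma) = \begin{bmatrix} \cos\gamma & -\sin\gamma & 0 \\ \sin\gamma & \cos\gamma & 0 \\ 0 & 0 & 1 \end{bmatrix}
```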

This data augmentation involved projecting the training data points into 3D space at Z = 0 and selecting an angle from 0 to 2π for the Z-axis rotation and from -π/4 to π/4 for the Y and X rotations. After this, the rotations were each applied sequentially, and the result was projected back onto the XY plane by simply removing the Z component.
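
A minimal pure-Python sketch of this augmentation, under two assumptions not stated above: the rotations are applied in the order Z, then Y, then X, and the landmarks are already centered (for example relative to the wrist), since each rotation is about the coordinate origin. The function names are hypothetical.

```python
import math
import random

def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1, 0, 0], [0, c, -s], [0, s, c]]

def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0, s], [0, 1, 0], [-s, 0, c]]

def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

def mat_vec(m, p):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * p[j] for j in range(3)) for i in range(3)]

def rotate_and_project(points_2d, az, ay, ax):
    """Lift 2D points to Z = 0, rotate about Z, then Y, then X, and drop Z."""
    mats = (rot_z(az), rot_y(ay), rot_x(ax))  # assumed order: Z first, then Y, then X
    out = []
    for x, y in points_2d:
        p = [x, y, 0.0]                        # project into 3D at Z = 0
        for m in mats:
            p = mat_vec(m, p)
        out.append((p[0], p[1]))               # back onto the XY plane: remove Z
    return out

def augment(points_2d, rng=random):
    """Apply one random 3D rotation (angle ranges from the text) to a set of landmarks."""
    az = rng.uniform(0, 2 * math.pi)
    ay = rng.uniform(-math.pi / 4, math.pi / 4)
    ax = rng.uniform(-math.pi / 4, math.pi / 4)
    return rotate_and_project(points_2d, az, ay, ax)
```

Because dropping Z can only shorten a vector, tilted hands come out foreshortened, which mimics a hand that is turned partly away from the camera.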

Next week is shortened for Thanksgiving break, so my ability to work on this project will be reduced. Even so, next week I plan to add two more gesture classes to the OS interface, allowing right clicking and scrolling. Time permitting, I will also begin collecting tradeoff data for our final report.

Links:

Wikipedia article on 3D rotation matrices

https://en.wikipedia.org/wiki/Rotation_matrix#In_three_dimensions

Andrew’s Status Report 11/20

This week, I looked into smoothing out the cursor motion again and started a basic implementation of the finite state machine that switches between cursor movement mode and precision mode, i.e., when a user is trying to be precise and hover over a specific region of the screen. While the team didn’t meet this week, we will work closely together this coming week to make the system behave more smoothly, incorporating the feedback from the interim demo report. I’m thinking of making the mouse movement function more aggressive by scaling the movement up by a constant, so the cursor travels farther and the user doesn’t have to strain as much. Then, since we’re using an FSM, we can switch states depending on whether the user is trying to be precise or wants to move. Our project emphasizes quality of life and user likability, and that’s what is prompting this decision. As I said earlier, I’ll follow up with the team to test this on the webcam, since they have the one we’re currently using. As the interim demo showed, we have a functional project; we’re just working out the little kinks. As such, I’m on schedule with my work.
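
A minimal sketch of the two-state idea, assuming the switch is driven by hand speed: several consecutive slow frames enter precision mode, and any fast frame returns to movement mode. The speed trigger, thresholds, and gains are all hypothetical values for illustration.

```python
import math

class CursorModeFSM:
    """Two-state cursor controller: amplified 'move' mode and damped 'precision' mode."""

    MOVE = "move"
    PRECISION = "precision"

    def __init__(self, move_gain=2.5, precision_gain=0.5, slow_speed=5.0, frames_to_switch=5):
        self.move_gain = move_gain            # scale up motion so the user strains less
        self.precision_gain = precision_gain  # scale down motion for fine targeting
        self.slow_speed = slow_speed          # hand speed (pixels/frame) counted as "slow"
        self.frames_to_switch = frames_to_switch
        self.state = self.MOVE
        self.slow_frames = 0

    def step(self, dx, dy):
        """Update the state from one frame of hand motion and return the scaled cursor delta."""
        if math.hypot(dx, dy) < self.slow_speed:
            self.slow_frames += 1
        else:
            self.slow_frames = 0
        if self.state == self.MOVE and self.slow_frames >= self.frames_to_switch:
            self.state = self.PRECISION   # sustained slow motion: user is homing in on a target
        elif self.state == self.PRECISION and self.slow_frames == 0:
            self.state = self.MOVE        # a fast frame: user wants to travel again
        gain = self.move_gain if self.state == self.MOVE else self.precision_gain
        return dx * gain, dy * gain
```

Requiring several slow frames before entering precision mode keeps a brief pause mid-motion from suddenly damping the cursor.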

Team Status Report for 11/20

This week, the team reflected on the feedback from our Interim Demo, most of which concerned incorporating quality-of-life and smoothness changes into our design and being better prepared to explain our system and responsibilities at the final demo. The team has also transitioned from pure implementation to focusing on observing tradeoffs and collecting metrics for the final presentation and, even more so, for the final report. Our biggest risk is gathering enough information to present as tradeoffs, specifically for creating a tradeoff graph. Development of our system is still proceeding nicely as we continue to improve our gesture model’s accuracy and implement smoothness changes to our mouse functions, but we have yet to see how smoothly our metric collection will go. Our design and schedule are still the same, and we are on track to present some tradeoffs and metrics during the final presentation after Thanksgiving break.

Alan’s Status Report for 11/20

This week, I finished implementing quality-of-life changes for our current mouse functions, as well as some test code for the other mouse functions such as right clicking and scrolling. I added a way to differentiate between clicking and holding by sampling gestures over time and deciding which action to take based on whether the click gesture was detected multiple times within a half-second window. I also experimented with other mouse functions such as right clicking (which works the same way as left clicking) and scrolling (which uses mouse.wheel instead of mouse.move). mouse.wheel is interesting in that the scrolling sensitivity is determined by how often the function is called, so the change in the hand’s y position is used to determine how often the function is called instead of directly assigning a “distance” to scroll. Since we have a working system, the team and I will now focus on gathering tradeoffs and metrics to prepare for the final presentation after Thanksgiving break. I am on schedule and will turn my focus to finishing the other mouse functions once the gesture recognition model is more accurate, as well as noting tradeoffs and collecting metrics for the final presentation.
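
A minimal sketch of mapping the hand’s y change to a call rate rather than a scroll distance. It only decides when one wheel tick is due; the caller would then call `mouse.wheel(delta)` with the returned sign. The gain, dead zone, rate cap, and the assumption that a positive delta scrolls up are all hypothetical, and `dy` is assumed to be measured from where the scroll gesture started.

```python
class ScrollRateMapper:
    """Turn vertical hand displacement into a stream of single wheel ticks.

    Larger displacement -> shorter interval between ticks -> faster scrolling.
    """

    def __init__(self, gain=20.0, dead_zone=0.02, max_rate=30.0):
        self.gain = gain            # ticks per second per unit of displacement
        self.dead_zone = dead_zone  # ignore tiny displacements caused by jitter
        self.max_rate = max_rate    # cap on ticks per second
        self.last_tick = None

    def update(self, dy, now):
        """Return +1 or -1 if a wheel tick is due at time `now` (seconds), else 0.

        dy is in normalized image coordinates, where y grows downward,
        so negative dy means the hand moved up.
        """
        if abs(dy) <= self.dead_zone:
            self.last_tick = None   # hand back near its start point: stop scrolling
            return 0
        rate = min(self.gain * abs(dy), self.max_rate)
        if self.last_tick is None or now - self.last_tick >= 1.0 / rate:
            self.last_tick = now
            return 1 if dy < 0 else -1   # assumption: +1 scrolls up
        return 0
```

Emitting one fixed-size tick at a variable rate keeps each `mouse.wheel` call identical, so scroll speed is controlled entirely by how far the hand has moved.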

Andrew’s Status Report 11/15

This week, the rest of the group and I spent a lot of time preparing for the interim demo. We met a couple of times last weekend to prepare and made a decent amount of headway on the integration. We now have a functioning system where we can move the cursor to an onscreen position and close our hand to trigger a recognized clicking gesture, and this recognition doesn’t add noticeable latency to our system. My next step is making the cursor movement smoother, which I will initially attempt with some form of rolling average. Since the mouse movement gets a bit jittery when you try to click on specific locations, a rolling average should smooth out the cursor motion and make the cursor much more precise for tasks that require precision. In tandem with Brian, I’ll also be working on a hand landmark frontalization algorithm, likely using a three-dimensional rotation matrix applied to the hand landmarks, which will hopefully make our gesture recognition more accurate. Overall, as with the previous week, I’m on schedule with my tasks.
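
One way such a frontalization could work, sketched under assumptions: 3D landmarks (x, y, z) in a MediaPipe-style layout, a palm normal estimated from the wrist (0), index MCP (5), and pinky MCP (17), and Rodrigues’ rotation formula to turn that normal onto a target axis. The indices and the target direction are hypothetical; the normal’s sign flips between left and right hands and depends on the depth convention, so the target may need flipping.

```python
import math

def sub(a, b): return [a[i] - b[i] for i in range(3)]
def dot(a, b): return sum(a[i] * b[i] for i in range(3))
def cross(a, b): return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]
def norm(a): return math.sqrt(dot(a, a))
def unit(a):
    n = norm(a)
    return [c / n for c in a]

def rotate_rodrigues(v, k, theta):
    """Rotate v about the unit axis k by angle theta (Rodrigues' rotation formula)."""
    c, s = math.cos(theta), math.sin(theta)
    kxv, kdv = cross(k, v), dot(k, v)
    return [v[i] * c + kxv[i] * s + k[i] * kdv * (1 - c) for i in range(3)]

def frontalize(landmarks, wrist=0, index_mcp=5, pinky_mcp=17, target=(0.0, 0.0, 1.0)):
    """Center landmarks on the wrist and rotate them so the palm normal points along target."""
    pts = [sub(p, landmarks[wrist]) for p in landmarks]
    n = unit(cross(pts[index_mcp], pts[pinky_mcp]))  # palm normal
    t = unit(list(target))
    axis = cross(n, t)
    sin_theta, cos_theta = norm(axis), dot(n, t)
    if sin_theta < 1e-9:
        if cos_theta > 0:
            return pts  # palm already faces the target direction
        # Normal points exactly away: rotate 180 degrees about any axis perpendicular to n.
        axis = unit(cross(n, [1.0, 0.0, 0.0]) if abs(n[0]) < 0.9 else cross(n, [0.0, 1.0, 0.0]))
        return [rotate_rodrigues(p, axis, math.pi) for p in pts]
    theta = math.atan2(sin_theta, cos_theta)
    axis = [a / sin_theta for a in axis]
    return [rotate_rodrigues(p, axis, theta) for p in pts]
```

Since every landmark gets the same rigid rotation, finger shapes and distances are preserved; only the viewing angle changes.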

Alan’s Status Report for 11/13

This week, most of my work went into preparing for the Interim Demo. We successfully demonstrated mouse movement as planned, and we even made progress integrating the gesture recognition model to allow left mouse clicking and dragging. While Brian continues to refine the model, I will work on implementing the other mouse operations, such as right clicking and scrolling. Right now I am making quality-of-life changes to the mouse functions. One example involves clicking vs. dragging. Due to the nature of the mouse module functions, if the gesture for holding the mouse is input continuously, it is difficult to distinguish a user who wants to drag something around briefly from a user who wants to click. I am implementing code that differentiates between these by sampling gesture inputs over a short period of time. This will be paired with a sort of “cooldown timer” on mouse clicking, which prevents the module from accidentally spamming click calls to the mouse cursor while it continually detects the clicking gesture, and instead allows the user to click once every half second or so. These changes are being made to ensure a smoother user experience. Currently, I am on track with the schedule.
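
A minimal sketch of sampling plus a cooldown, with hypothetical parameters (a 0.5 s window, a hold threshold counted in frames at an assumed ~30 fps, and a 0.5 s click cooldown). A gesture detected `hold_count` times within the window becomes a press; a shorter burst becomes a single click when the gesture ends, unless it falls inside the cooldown. The returned strings could be mapped to `mouse.press()`, `mouse.release()`, and `mouse.click()`.

```python
from collections import deque

class ClickHoldClassifier:
    """Tell a quick click from a click-and-hold by counting detections in a time window."""

    def __init__(self, window=0.5, hold_count=10, cooldown=0.5):
        self.window = window          # seconds of history to consider
        self.hold_count = hold_count  # detections within the window that mean "hold"
        self.cooldown = cooldown      # minimum seconds between two single clicks
        self.samples = deque()        # timestamps when the click gesture was detected
        self.holding = False
        self.last_click = float("-inf")

    def update(self, gesture_seen, now):
        """Feed one frame; return "press", "release", "click", or None."""
        # Forget detections that have slid out of the sampling window.
        while self.samples and now - self.samples[0] > self.window:
            self.samples.popleft()

        if gesture_seen:
            self.samples.append(now)
            if not self.holding and len(self.samples) >= self.hold_count:
                self.holding = True
                return "press"     # sustained gesture: start a drag
            return None

        if self.holding:           # gesture ended while dragging
            self.holding = False
            self.samples.clear()
            return "release"

        if self.samples:           # short burst ended before reaching hold_count
            self.samples.clear()
            if now - self.last_click >= self.cooldown:
                self.last_click = now
                return "click"
        return None                # nothing to do, or click suppressed by the cooldown
```

The cost of this approach is that a single click is only sent when the gesture ends, so clicks lag slightly behind the hand; a single missed detection also ends a drag, which is one reason to pair it with a per-frame debounce.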