This week, I worked primarily on three things: helping the team test the device with more drops, implementing the vectorization algorithm, and integrating it with the Arduino code (which controls the motors) and the CV code (which detects the circles). We then worked as a team to test the full detection-to-propulsion pipeline.

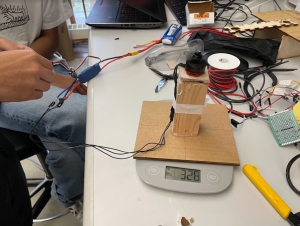

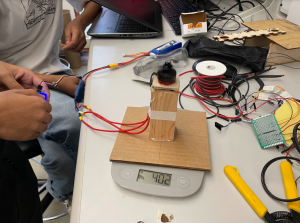

For the drops, we tried various parachute setups (two vs. three parachutes, and shorter vs. longer slack lengths). As usual, I held up the parachutes and let go of the device while Lahari started it with the press of a button and Vikram waited below to catch it. We noticed a pendulum motion as I dropped the device. We then tried dropping the device first and starting the motors mid-flight, but the swinging persisted. We think the device may simply be too light, so after the interim demo we will resume drops with additional weight at the base of the device to prevent swinging.

As for the vectorization code, I implemented it in Python and sent its output over serial so the Arduino can use the values. In short, the algorithm takes the angle and magnitude of the vector from the center of the frame to the target, and computes a PWM value for each of the three motors so that their combined thrust produces a resultant vector equal to the target vector.

We then tested the script by connecting it to the Arduino, which was running code Vikram wrote to receive the PWM values over serial, and noticed hanging and slowdowns when using serial over the USB connection. We switched to the Pi's GPIO RX and TX pins and connected the Arduino and Pi directly through a logic-level shifter to match their logic voltages. This solved the hanging issue, since serial over USB is prone to slowdowns because it depends on the processor frequency.

Next, I helped the team test the vectorization algorithm by feeding various angles into the script and checking whether the correct motors spun up. Since our motors are positioned at 0, 120, and 240 degrees, we tried those values as well as values in between (such as 60 and 90), and found that the algorithm worked as intended, which means the vectorization work is on schedule.
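The script itself is not included in this report, so the following is only a minimal sketch of one way to do the decomposition described above, not the team's actual code. It assumes each motor pushes the device along its own mounting angle (0, 120, 240 degrees), that thrust acts in one direction only, that the magnitude is normalized to [0, 1], and an 8-bit PWM range (0 to 255, as with Arduino's `analogWrite()`). The names `vectorize`, `resultant_angle`, `MOTOR_ANGLES_DEG`, and `PWM_MAX` are hypothetical.

```python
import math

# Hypothetical constants. The report places the motors at 0, 120 and 240
# degrees; here we ASSUME each motor pushes the device along that angle.
MOTOR_ANGLES_DEG = (0.0, 120.0, 240.0)
PWM_MAX = 255  # assumed 8-bit PWM range (as with Arduino analogWrite)
SIN_120 = math.sin(math.radians(120.0))


def vectorize(angle_deg: float, magnitude: float) -> list[int]:
    """Split a target vector into one PWM value per motor.

    angle_deg: direction of the target from the center of the frame.
    magnitude: ASSUMED normalized to [0, 1], where 1 means full thrust.
    """
    angle = angle_deg % 360.0
    # The two motors bounding the target's 120-degree sector are enough to
    # reproduce it with non-negative thrusts; the third motor stays off.
    sector = int(angle // 120.0) % 3
    phi = math.radians(angle - 120.0 * sector)  # angle within the sector

    # Law of sines: target = a * u_first + b * u_second, with a, b >= 0.
    a = magnitude * math.sin(math.radians(120.0) - phi) / SIN_120
    b = magnitude * math.sin(phi) / SIN_120

    thrusts = [0.0, 0.0, 0.0]
    thrusts[sector] = a
    thrusts[(sector + 1) % 3] = b

    # Between two motors the required thrust can exceed 1 (up to about
    # 1.155), so scale every motor by the same factor: the direction is
    # preserved and only the magnitude is capped.
    peak = max(thrusts)
    if peak > 1.0:
        thrusts = [t / peak for t in thrusts]

    return [round(PWM_MAX * t) for t in thrusts]


def resultant_angle(pwms: list[int]) -> float:
    """Direction (degrees) of the summed thrust, as a sanity check."""
    x = sum(p * math.cos(math.radians(t)) for p, t in zip(pwms, MOTOR_ANGLES_DEG))
    y = sum(p * math.sin(math.radians(t)) for p, t in zip(pwms, MOTOR_ANGLES_DEG))
    return math.degrees(math.atan2(y, x)) % 360.0


if __name__ == "__main__":
    # The same kinds of angles the team tried: motor axes and in-between values.
    for target in (0, 60, 90, 120, 240):
        pwms = vectorize(target, 1.0)
        print(target, pwms, round(resultant_angle(pwms), 1))
```

Splitting the target between the two motors that bound its 120-degree sector keeps every thrust non-negative. In this sketch, targets at 0, 120, and 240 degrees spin a single motor, while a target at 60 degrees spins the 0- and 120-degree motors equally, which mirrors the kind of check described above.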

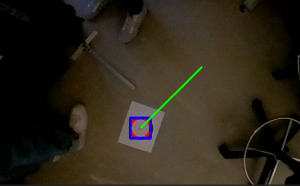

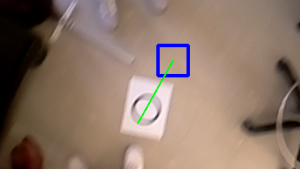

After this, I worked on integrating the full camera-to-propulsion pipeline by having the CV Python script call the vectorization algorithm, which then sends the PWM values to the Arduino over serial. To test this, I placed a circle on the ground as the target and moved it around, checking that the angle generated by the CV code was being passed to the vectorization algorithm and that the correct motors spun up. With some CV tuning, we got a consistent detection-to-propulsion pipeline: as the circle changes position, the motors respond accordingly. However, one issue we found, which we will fix tomorrow, is that the 0-degree reference of the camera and the 0-degree reference of the device do not match. As a result, there is an offset between the angle the camera produces and the angle the device actually moves toward. Fixing this should be a matter of experimentally determining which direction is 0 degrees for the camera and which is 0 degrees for the device, then aligning the two. All of the above was tested using our new demo setup (described below): I ran the camera and vectorization script on the Pi, while Vikram and Lahari turned the device on and off between tests.
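The angle-offset fix can be illustrated with a small sketch of the glue between the CV output and the serial link. The report does not say how the CV code reports the circle, so this assumes it yields the circle center in pixel coordinates. It also treats the mismatch as a pure rotation between the camera's and the device's 0-degree axes (if the camera image were mirrored relative to the device, the angle's sign would also need flipping). `target_vector`, `encode_pwm_message`, `send_pwms`, `CAMERA_TO_DEVICE_OFFSET_DEG`, the magnitude normalization, the comma-separated line format, and the baud rate are all hypothetical.

```python
import math

# Hypothetical calibration constant: the camera-frame angle (degrees) at
# which the device's 0-degree direction appears. The report plans to find
# this experimentally; 0.0 is only a placeholder.
CAMERA_TO_DEVICE_OFFSET_DEG = 0.0


def target_vector(cx: float, cy: float, frame_w: int, frame_h: int,
                  offset_deg: float = CAMERA_TO_DEVICE_OFFSET_DEG) -> tuple[float, float]:
    """Turn a detected circle center (pixels) into (angle_deg, magnitude).

    The angle is measured counterclockwise from the image's +x axis and
    then rotated into the device frame by subtracting offset_deg.
    Magnitude is ASSUMED normalized so that a target half the shorter
    frame side away from the center gives 1.0 (clipped at 1.0).
    """
    dx = cx - frame_w / 2.0
    dy = frame_h / 2.0 - cy  # image rows grow downward, so flip y
    camera_angle = math.degrees(math.atan2(dy, dx))
    device_angle = (camera_angle - offset_deg) % 360.0
    magnitude = min(math.hypot(dx, dy) / (min(frame_w, frame_h) / 2.0), 1.0)
    return device_angle, magnitude


def encode_pwm_message(pwms: list[int]) -> bytes:
    """Frame three PWM values as one newline-terminated ASCII line.

    The comma-separated format (e.g. "255,0,128") is an ASSUMPTION; it
    must match whatever the Arduino-side receiver actually parses.
    """
    if len(pwms) != 3 or not all(0 <= p <= 255 for p in pwms):
        raise ValueError("expected three PWM values in 0..255")
    return (",".join(str(int(p)) for p in pwms) + "\n").encode("ascii")


def send_pwms(port, pwms: list[int]) -> None:
    """Write one framed message to an open serial port.

    On the Pi, `port` could be a pyserial port opened on the GPIO UART,
    e.g. serial.Serial("/dev/serial0", 9600, timeout=1) (baud rate assumed).
    Any object with a write(bytes) method works, which allows testing
    without hardware.
    """
    port.write(encode_pwm_message(pwms))
```

Once the offset is measured, it is subtracted in one place before the angle reaches the vectorization step, so nothing downstream needs to change.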

I also helped the team build a test setup to demonstrate our device at the interim demo on Monday. This involved assembling several 80-20 extrusion pieces into a frame that lets us hang the device over a target using string. We tested the setup and found that it held the device stable while still letting it move enough to demonstrate the system's mobility.