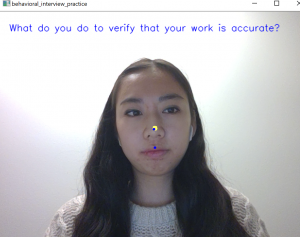

This week, I worked on integrating the behavioral interview questions that Shilika compiled into the facial detection system and on implementing the behavioral portion of the tips page. At the beginning of the semester, Shilika created a file containing a large number of common behavioral interview questions. I took a handful of these questions and placed them in a global array in the eye contact and screen alignment code. The code then uses Python's random library to choose one of the questions in the array at random. The chosen question is displayed at the top of the video screen while users record themselves, so they can refer back to it at any time.
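A minimal sketch of the question selection, assuming the questions are plain strings in a module-level list. The sample questions are hypothetical placeholders, not entries from Shilika's file, and `pick_question` and `question_lines` are illustrative names. Wrapping the question into short lines is an added assumption about how a long question might fit across the top of the frame. If OpenCV renders the recording, each line could then be drawn onto the frame with `cv2.putText`.

```python
import random
import textwrap

# Hypothetical placeholders; the real array holds a handful of questions
# taken from Shilika's compiled file.
BEHAVIORAL_QUESTIONS = [
    "Tell me about a time you disagreed with a teammate.",
    "Describe a project where you had to meet a tight deadline.",
    "Tell me about a mistake you made and how you handled it.",
]


def pick_question(rng=random):
    """Choose one question uniformly at random (once per recording session)."""
    return rng.choice(BEHAVIORAL_QUESTIONS)


def question_lines(question, width=40):
    """Split the question into lines of at most `width` characters so it
    fits across the top of the video frame (assumed layout)."""
    return textwrap.wrap(question, width=width)


if __name__ == "__main__":
    question = pick_question()
    for line in question_lines(question):
        print(line)
```

The question is selected once, before recording starts, so it stays fixed on screen for the whole session rather than changing from frame to frame.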

I also worked on implementing the behavioral portion of the tips page, which we decided last week would replace the original help page. I used articles from Indeed and Glassdoor to give the user information about behavioral interviews, along with helpful tips and common behavioral interview questions. This gives the user background on how to prepare for behavioral interviews and what to expect during a typical interview. The tips page will be divided into two sections, one for behavioral interviews and one for technical interviews. I implemented the behavioral section, and Mohini and Shilika will be working on the technical section, which will cover technical interview information and tips.

I believe we are on track for the facial detection portion, as most of the technical implementation is complete and we are now improving and fine-tuning it. Next week, I plan to combine the eye contact and screen alignment parts for the first option on the behavioral page. I also plan to figure out how to keep track of the number of times the user had subpar eye contact and/or screen alignment during their video recording, so we can show this information to the user on their profile page.
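One possible approach to the counting is sketched below. It assumes the existing detection code produces a pass/fail flag per frame for eye contact and another for screen alignment. `LapseCounter`, `summarize`, and the three-frame threshold are hypothetical. A lapse is counted once the user has been off for `min_frames` consecutive frames. This way, one long stretch of looking away counts as a single lapse, and single-frame detection glitches are ignored.

```python
from dataclasses import dataclass, field


@dataclass
class LapseCounter:
    """Counts distinct lapses in a per-frame pass/fail signal.

    A lapse is a run of at least `min_frames` consecutive failing frames
    (hypothetical definition; the threshold would need tuning).
    """
    min_frames: int = 3
    lapses: int = 0
    _run: int = field(default=0, init=False, repr=False)

    def __post_init__(self) -> None:
        if self.min_frames < 1:
            raise ValueError("min_frames must be at least 1")

    def update(self, ok: bool) -> None:
        if ok:
            self._run = 0  # a good frame ends any current lapse
        else:
            self._run += 1
            if self._run == self.min_frames:  # count each run exactly once
                self.lapses += 1


def summarize(eye_contact_ok, alignment_ok, min_frames=3):
    """Tally lapses for both checks over one recording (assumed per-frame flags)."""
    eye = LapseCounter(min_frames)
    align = LapseCounter(min_frames)
    for e, a in zip(eye_contact_ok, alignment_ok):
        eye.update(e)
        align.update(a)
    return {"eye_contact_lapses": eye.lapses, "alignment_lapses": align.lapses}
```

Because the counter updates one frame at a time, it could run inside the recording loop, and the final totals could be saved for the profile page.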