This week, we continued working on our respective parts of the project. We decided that instead of storing the behavioral interview videos, we will be storing a summary for each video with the amount of times the user had subpar eye contact and/or screen alignment. This is because we found out that it is difficult to display videos embedded in HTML from a local directory (for privacy and security reasons in HTML5), and it did not make sense to store all the videos in a public space. We also realized that it may be more helpful to the user if we provide them with a summary of how many times they had subpar eye contact and/or screen alignment for each behavioral interview practice they do. This way, they have concrete data to base their improvement off of.

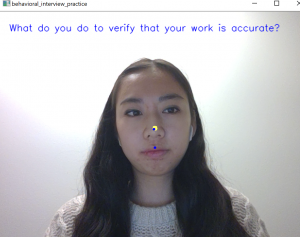

Jessica worked on integrating the behavioral interview questions that Shilika wrote into the rest of the facial detection code and the behavioral portion of the tips page on the web application. She used the questions that Shilika researched at the beginning of the semester, and selected a handful of them to place into a global array. Using the Python random library, one question is selected at random and displayed at the top of the video screen for the user to refer to as they wish. She also implemented the behavioral section of the tips page to provide the user information about behavioral interviews and how to prepare for them. Mohini and Shilika will be working on the technical section of the page. Next week, she plans on combining the eye contact and screen alignment portions together for Option 1 of the facial detection part. This will provide users with alerts for both subbar eye contact and screen alignment. She also plans on figuring out how to keep track of the amount of times that the user had subpar eye contact and/or screen alignment during each video recording. This will be the summary available to users in their profile.

Mohini worked on improving the accuracy of the speech recognition algorithm through the addition of another hidden layer in the neural network and adding more training data. While both these attempts were not successful, she plans to continue trying to improve the accuracy by tweaking some of the other parameters of the neural network. Additionally, she continued working on the technical page. The chosen category, question, user’s answer, and correct answer are all displayed on the screen. She then stores all this information into a database so that a list of the logged in user’s questions and answers can be displayed on the completed technical interview practices page, which can be accessed through the profile page. Next week, Mohini will continue trying to fine tune the parameters of the neural network and start the testing of the speech recognition algorithm.

Shilika worked on the neural network portion of the speech recognition algorithm. She was able to successfully add another layer and update all parameters and variables accordingly. This required changes to Mohini’s working neural network algorithm which had one hidden layer. The accuracy of the updated algorithm did not reach the expectations that we have set (60% accuracy). Next steps for Shilika include continuing the improve the accuracy of the speech recognition by changing variable parameters such as the number of hidden units and the amount of training data. She will also work on the profile page of the web application.