This week, I worked on three things: saving the practice interview videos, alerting the user to poor eye contact, and the facial landmark portion for screen alignment. Each time the script runs, a video recording begins, and when the user exits the recording, the video is saved (currently to a local directory, though ideally to a database eventually). This is done with the OpenCV library in Python. Just as the VideoCapture class is used to capture video frames, the VideoWriter class is used to write video frames to a video file. Each captured frame is written to the video output created at the beginning of main().

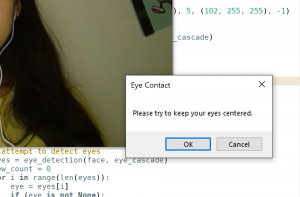

I also worked on the alerts given to the user for subpar eye contact. Originally, I considered an audio alert: specifically, playing a bell sound when the user’s eyes are off-center. Although this was effective at getting the user’s attention, it proved quite distracting. Next, I experimented with a message box alert, which pops up when the user’s eyes are off-center; this also proved effective at getting the user’s attention. I plan to experiment with both options further, but as of now, both work well to prompt the user to re-center.
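One way the off-center check and the alert could be wired together is sketched below. It assumes the eye detector yields a horizontal pixel position per frame; the 15% tolerance, the `patience` frame count, and all names (`is_off_center`, `AlertTrigger`, `show_message_box`) are hypothetical. The alert is passed in as a callable, so a bell sound and a message box can be swapped without touching the detection logic.

```python
from typing import Callable


def is_off_center(x: float, frame_width: int, tolerance: float = 0.15) -> bool:
    """True if x is farther from the frame's horizontal center than
    tolerance * frame_width (hypothetical default: 15% of the width)."""
    center = frame_width / 2
    return abs(x - center) > tolerance * frame_width


class AlertTrigger:
    """Fire the alert once after `patience` consecutive off-center frames,
    then stay quiet until the eyes return to center."""

    def __init__(self, alert: Callable[[], None], patience: int = 15):
        self.alert = alert
        self.patience = patience
        self.off_frames = 0

    def update(self, off_center: bool) -> bool:
        """Feed one frame's result; return True if the alert fired."""
        if off_center:
            self.off_frames += 1
            if self.off_frames == self.patience:
                self.alert()
                return True
        else:
            self.off_frames = 0  # re-centered: reset the counter
        return False


def show_message_box() -> None:
    """Message box alert using tkinter (imported lazily; needs a display)."""
    import tkinter as tk
    from tkinter import messagebox

    root = tk.Tk()
    root.withdraw()  # hide the empty main window
    messagebox.showwarning("Eye contact", "Please re-center your eyes on the camera.")
    root.destroy()
```

In the video loop this would be called as `trigger.update(is_off_center(eye_x, width))` with `trigger = AlertTrigger(show_message_box)`. Note that `showwarning` blocks until it is dismissed, which pauses the loop; requiring several consecutive off-center frames is one possible way to keep brief glances from triggering a distracting alert.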

I began researching the facial landmark portion and have a basic working model with all of the facial coordinates mapped out. Instead of using every facial feature coordinate, I thought it would be more helpful to get the location of the center of the nose and perhaps the mouth. This way, there are definitive coordinates to use as the frame of reference: if the nose and mouth are off-center, then the rest of the face is also off-center. Next week, I plan to get the coordinates of the center of the nose and mouth using facial landmark detection. This requires going through the landmarks array and figuring out which coordinates correspond to which facial feature. I also plan to do more testing on the eye detection portion to get a better sense of its current accuracy.
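A sketch of how the nose and mouth centers could be pulled out of a landmarks array, assuming the common 68-point (iBUG 300-W) layout used by dlib's `shape_predictor_68_face_landmarks` model, where indices 27 to 35 are the nose and 48 to 67 are the mouth. The source does not say which landmark model is in use, so these index ranges are an assumption, as are the names `feature_center`, `offset_from_frame_center`, and `face_is_centered` and the 10% tolerance. With dlib, the array could be built as `np.array([(p.x, p.y) for p in shape.parts()])`.

```python
import numpy as np

# Assumption: 68-point iBUG 300-W ordering (0-indexed).
NOSE = slice(27, 36)   # nose bridge 27-30, lower nose 31-35
MOUTH = slice(48, 68)  # outer lip 48-59, inner lip 60-67


def feature_center(landmarks: np.ndarray, idx: slice) -> np.ndarray:
    """Mean (x, y) of the landmark points selected by idx."""
    return landmarks[idx].mean(axis=0)


def offset_from_frame_center(point, frame_width, frame_height):
    """(dx, dy) in pixels from the frame center; positive means right/down."""
    return (float(point[0] - frame_width / 2), float(point[1] - frame_height / 2))


def face_is_centered(landmarks, frame_width, frame_height, tolerance=0.1):
    """True if both the nose and mouth centers are within tolerance * width
    of the frame's vertical center line (horizontal check only)."""
    for idx in (NOSE, MOUTH):
        dx, _ = offset_from_frame_center(
            feature_center(landmarks, idx), frame_width, frame_height
        )
        if abs(dx) > tolerance * frame_width:
            return False
    return True
```

Only the horizontal offset is checked here, since the mouth naturally sits below the frame center even when the face is well aligned; a vertical check would need its own reference point.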