This week I added audio feedback to our application. In addition to written feedback, the application now also returns audio feedback. Since the user may be in the middle of a leg raise or pushup and may not be looking at the screen, audio feedback lets the user perfect his or her form without looking up. I converted the feedback text to mp3 files and changed the outputs sent to the user interface. I used Amazon's Joanna voice for the audio.

Regarding the feedback we received on the live demo, I looked into the skeleton feedback and realized that I would have to redo much of the posture analysis as well as the image processing, because we don't really have the opportunity to re-record the entire workout. Therefore, the best I can do is to feed our current screen recording into the algorithm. However, the screen recording applies some processing to the live feed, so its HSV values are not consistent with those of the original feed. We concluded that re-recording would be too much work since we are all in different physical locations.
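To illustrate why the screen recording's altered colors matter, here is a minimal sketch of HSV thresholding into a binary mask using only Python's standard `colorsys` module. The threshold bounds, the `wash_out` function standing in for the recording's processing, and the pixel values are all hypothetical, not our actual parameters. Note that `colorsys` scales H, S, and V to [0, 1], unlike OpenCV's 0 to 179 hue range.

```python
import colorsys

# Hypothetical HSV bounds for a green marker (colorsys scale: H, S, V in [0, 1]).
LOWER = (0.25, 0.40, 0.30)
UPPER = (0.45, 1.00, 1.00)


def to_hsv(rgb):
    """Convert an (R, G, B) pixel with 0-255 channels to an (H, S, V) tuple."""
    r, g, b = (c / 255.0 for c in rgb)
    return colorsys.rgb_to_hsv(r, g, b)


def in_range(hsv, lower=LOWER, upper=UPPER):
    """True if every HSV component lies within its [lower, upper] bound."""
    return all(lo <= x <= hi for x, lo, hi in zip(hsv, lower, upper))


def binary_mask(image):
    """image: rows of (R, G, B) tuples. Returns rows of 1 (in range) or 0."""
    return [[1 if in_range(to_hsv(px)) else 0 for px in row] for row in image]


def wash_out(rgb, alpha=0.6):
    """Hypothetical stand-in for recording processing: blend each channel toward mid-gray."""
    return tuple(c * (1 - alpha) + 128 * alpha for c in rgb)
```

With these bounds, a marker pixel such as `(40, 160, 60)` has saturation 0.75 and passes the threshold, but after `wash_out` its saturation drops to about 0.34 and it falls out of the mask. Thresholds tuned on the live feed can therefore silently miss the marker in a recording, even though the hue barely changes.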

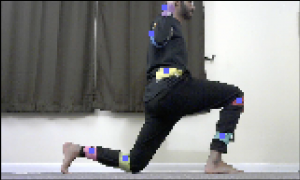

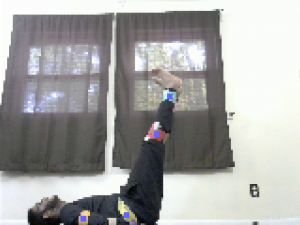

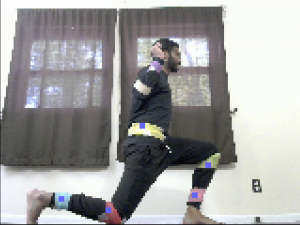

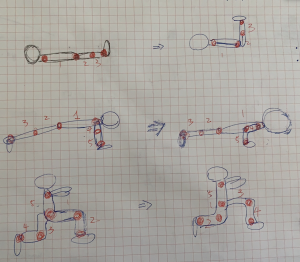

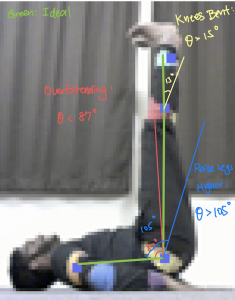

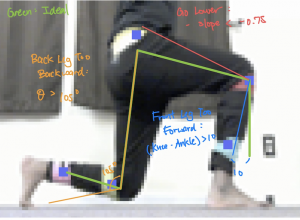

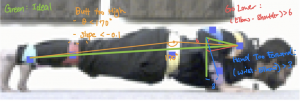

Since the final presentation is this week, I had to create the slides and organize the metrics and testbenches that I created in previous weeks. I am also mainly in charge of assembling the final video, so I planned out the timestamps for each section of the video. I assigned tasks to Venkata and Vishal so that they can send me short clips of their portions. I also generated diagrams for the posture analysis (shown below).

I also edited the code so that it saves an image of the binary mask after every significant step. These images will help me record the technical portions on image processing and posture analysis. I experimented with iMovie to get familiar with it, created an ending scene, and started cutting and editing the clips we want to use.
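Saving the mask after each step can be sketched as follows. This is a minimal, standard-library-only sketch, not our actual code: the `run_pipeline` helper, the plain-text PBM output format, and the erode/dilate steps (a morphological opening with a 4-connected cross) are hypothetical stand-ins for whatever significant steps the real pipeline performs.

```python
import os

# 4-connected cross: the pixel itself plus its up, down, left, and right neighbors.
NEIGHBORS = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]


def save_pbm(mask, path):
    """Write a 0/1 mask as a plain-text PBM (P1) image; in PBM, 1 renders black."""
    height, width = len(mask), len(mask[0])
    with open(path, "w") as f:
        f.write(f"P1\n{width} {height}\n")
        for row in mask:
            f.write(" ".join(str(v) for v in row) + "\n")


def _neighborhood(mask, y, x):
    """Values under the cross at (y, x); pixels outside the image count as 0."""
    h, w = len(mask), len(mask[0])
    return [mask[y + dy][x + dx] if 0 <= y + dy < h and 0 <= x + dx < w else 0
            for dy, dx in NEIGHBORS]


def erode(mask):
    """Keep a pixel only if it and all four neighbors are set (removes specks)."""
    return [[int(all(_neighborhood(mask, y, x))) for x in range(len(mask[0]))]
            for y in range(len(mask))]


def dilate(mask):
    """Set a pixel if it or any of its four neighbors is set (regrows shapes)."""
    return [[int(any(_neighborhood(mask, y, x))) for x in range(len(mask[0]))]
            for y in range(len(mask))]


def run_pipeline(mask, steps, out_dir):
    """Apply (name, function) steps in order, saving the mask before and after each."""
    os.makedirs(out_dir, exist_ok=True)
    path = os.path.join(out_dir, "00_input.pbm")
    save_pbm(mask, path)
    saved = [path]
    for i, (name, step) in enumerate(steps, start=1):
        mask = step(mask)
        path = os.path.join(out_dir, f"{i:02d}_{name}.pbm")
        save_pbm(mask, path)
        saved.append(path)
    return mask, saved
```

Numbering the files by step index keeps them in pipeline order when viewed in a file browser, which makes it easy to pull the intermediate masks into a video in sequence.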

Next week, I will mainly focus on producing the video. The following week, I will complete the final report.