Team

- Both our motor controller and its backup are broken. We drew up a contingency plan by evaluating several cases and decided to keep using the same controller, with more protection added to the back side of the board.

- We found a neural network framework that should be fast enough to run in real time. This could serve as an alternative. Ruohai is now devoting time to training the network, and I am devoting time to integrating it into the vision pipeline.

- We are currently a little behind schedule because we hit a major hardware obstacle and our vision pipeline needs extra work. The extra vision work will be done next week, and until the new motor controller arrives, Xingsheng will focus on STM32 coding and environment setup.

Ruohai Ge

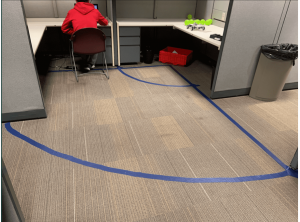

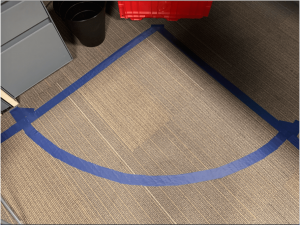

- Set up the physical environment on D level. The 1 m movement range is the area inside the blue line, measured from the origin. The user’s three-meter range is also marked: it is the area between the inner and outer blue lines.

- I started using object recognition to reduce noise in our vision pipeline.

- I collected and labeled all of the data, 230 images so far.

- I found YOLOv3 (Darknet) to be a good network to train.

- I trained on our self-made dataset and got fairly accurate results, but inference is not fast enough yet: each inference takes 0.1 seconds without a GPU.

- I am on schedule and have been reassigned to the neural network task.

- Next week's deliverables:

- Set up the physical environment and camera mounting on D level

- Transform positions from the camera frame to the real-world frame

- Gather more data and retrain the neural network with GPU support. I previously used OpenCV's dnn module, which does not support GPU. I am going to retrain with PyTorch so that inference can run on the GPU
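As a rough illustration of the camera-to-world transfer listed above, here is a minimal sketch of a rigid transform, `p_world = R * p_cam + t`. The rotation, the translation, and the helper names `rotation_z` and `camera_to_world` are hypothetical placeholders, not values or code from our setup; the real extrinsics would come from calibrating the mounted camera.

```python
import math

def rotation_z(theta):
    """Rotation matrix for an angle theta (radians) about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0],
            [s,  c, 0.0],
            [0.0, 0.0, 1.0]]

def camera_to_world(p_cam, R, t):
    """Map a 3D point from the camera frame to the world frame.

    R is a 3x3 rotation (camera axes expressed in world axes) and
    t is the camera origin expressed in the world frame.
    """
    return tuple(
        sum(R[i][j] * p_cam[j] for j in range(3)) + t[i]
        for i in range(3)
    )

# Hypothetical extrinsics: camera yawed 90 degrees and mounted 1.5 m up.
R = rotation_z(math.pi / 2)
t = (0.0, 0.0, 1.5)
p_world = camera_to_world((1.0, 0.0, 0.0), R, t)
```

With these placeholder values, a point one meter along the camera's x axis lands one meter along the world y axis at a height of 1.5 m.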

Xingsheng Wang

- I got the mobile platform to move in different directions under Bluetooth control. The accuracy of the motion still needs improvement.

- The controller board short-circuited and stopped working on 10.28. We then switched to a backup board, which worked fine on 11.1. However, when we tested the platform again on 11.2, the backup board also short-circuited and stopped working. I identified the issue, but we are out of controller boards and are purchasing new ones now. The boards ship from China and will take around a week to arrive. Meanwhile, I can still work on the control code for the platform.

- I am still on schedule. The program has been tested and works.

- Next week I will assemble all the parts and work on the PID code for the platform.
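For reference, the PID code mentioned above could start from something like the following. This is a minimal sketch in Python for readability, not the STM32 firmware; the `PID` class name and the gains in the example are hypothetical and untuned.

```python
class PID:
    """Textbook PID controller with a fixed set of gains."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the first update

    def update(self, setpoint, measurement, dt):
        """Return the control output for one time step of length dt."""
        error = setpoint - measurement
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

# Hypothetical gains and a 0.1 s control period.
pid = PID(kp=2.0, ki=1.0, kd=0.5)
first = pid.update(setpoint=1.0, measurement=0.0, dt=0.1)
second = pid.update(setpoint=1.0, measurement=0.5, dt=0.1)
```

On real hardware the output would also need clamping to the motor driver's range, and the integral term would need some form of anti-windup; both are left out here to keep the sketch short.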

Zheng Xu

- The integration with the Kalman filter trajectory estimation has been fixed. The test results are shown in the following videos.

- The green square shows the capture frame of the ball, and the red points show the ball's predicted motion over the next few frames. The prediction is made in 3D; the red points are the 2D projections of the predicted 3D points.

- The working example of trajectory estimation is set up to match the demo location on D level.

- I am on schedule.

- Next week I will help set up the entire working environment. I will also integrate and test the trajectory estimation with the neural-network-based detection and tracking.
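To illustrate how the red prediction points described above can be produced, here is a minimal sketch of rolling a 3D ball state forward a few frames and projecting each point into the image. It shows only a ballistic prediction step, not the full Kalman filter (no covariance or measurement update). The camera-frame convention (y pointing down, z pointing forward), the intrinsics `fx`, `fy`, `cx`, `cy`, and the function names are assumptions made for this example.

```python
def predict_points(state, dt, n, g=9.81):
    """Predict the next n 3D positions under constant gravity.

    state = (x, y, z, vx, vy, vz) in an assumed camera frame where
    +y points down, so gravity accelerates the ball along +y.
    """
    x, y, z, vx, vy, vz = state
    points = []
    for k in range(1, n + 1):
        t = k * dt
        points.append((x + vx * t,
                       y + vy * t + 0.5 * g * t * t,
                       z + vz * t))
    return points

def project(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame 3D point to pixel coordinates."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)

# Hypothetical state: ball at rest 2 m in front of the camera, 10 Hz frames.
future = predict_points((0.0, 0.0, 2.0, 0.0, 0.0, 0.0), dt=0.1, n=2)
pixels = [project(p, fx=600.0, fy=600.0, cx=320.0, cy=240.0) for p in future]
```

In a full filter, the state passed to `predict_points` would be the latest filtered estimate rather than a hand-written tuple.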