Team

Most significant risks and contingency plans

Hough circle detection does not detect the ball-type trash reliably. It sometimes detects non-ball objects, and when the ball moves quickly in the horizontal direction, the detection marker is not shown continuously.

Zheng will review the literature for other methods that are both fast and stable for detecting this specific type of ball.

Changes made

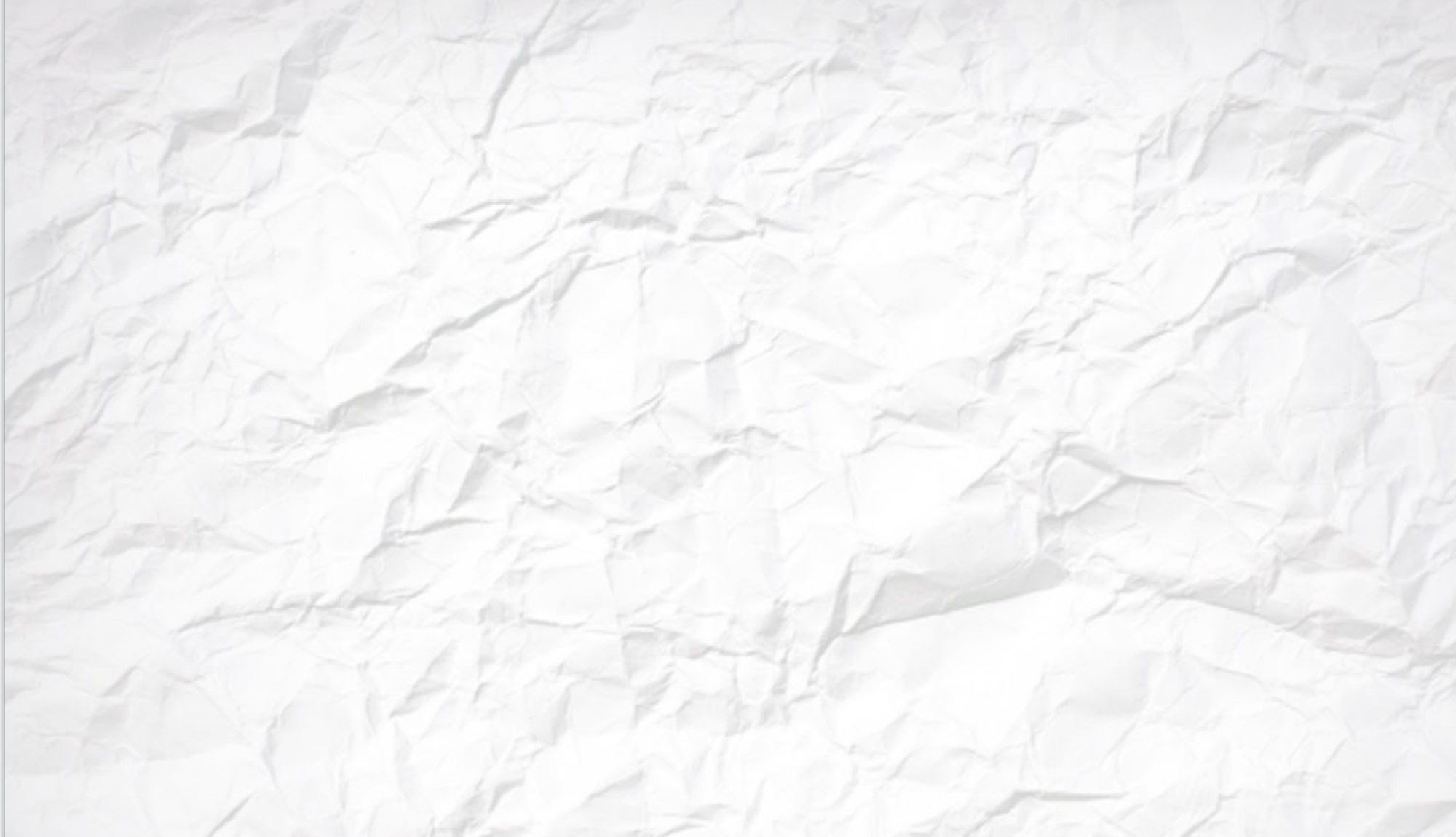

Based on feedback from Professor Nace, other faculty, and the TAs, we thoroughly rethought our requirements and rewrote them completely.

Using learning-based methods to recognize the trash is too slow, so we added a requirement that our trash is a specific type of ball with a diameter of 9.6 cm and a mass of around 46 g (similar to the size and mass of ordinary trash). This lets us use traditional CV methods such as Hough circle detection to detect the trash.
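As a rough illustration of why a fixed-size ball makes circle detection tractable, the following NumPy sketch implements the basic Hough voting idea for a circle of known radius: every edge pixel votes for all centres that lie one radius away, and the accumulator cell with the most votes is taken as the centre. The function name `hough_circle_center`, the synthetic edge map, and the 15 px radius are assumptions made for this example and are not taken from our code. OpenCV's `cv2.HoughCircles` uses a gradient-based variant rather than this brute-force form.

```python
import numpy as np

def hough_circle_center(edges, radius, n_angles=180):
    """Brute-force Hough voting for one circle of known radius (in pixels).

    edges: 2D boolean array, True where an edge pixel was found.
    Returns (cx, cy, votes) for the accumulator cell with the most votes.
    """
    h, w = edges.shape
    acc = np.zeros((h, w), dtype=np.int32)
    ys, xs = np.nonzero(edges)
    for theta in np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False):
        # Each edge pixel votes for the centre that would place it at angle theta.
        cx = np.rint(xs - radius * np.cos(theta)).astype(int)
        cy = np.rint(ys - radius * np.sin(theta)).astype(int)
        inside = (cx >= 0) & (cx < w) & (cy >= 0) & (cy < h)
        # np.add.at accumulates correctly even when indices repeat.
        np.add.at(acc, (cy[inside], cx[inside]), 1)
    best_y, best_x = np.unravel_index(np.argmax(acc), acc.shape)
    return int(best_x), int(best_y), int(acc[best_y, best_x])

# Synthetic example (assumed values): a circle outline of radius 15 px
# centred at (x=60, y=40) in an 80x120 edge map.
edges = np.zeros((80, 120), dtype=bool)
t = np.linspace(0.0, 2.0 * np.pi, 400, endpoint=False)
edges[np.rint(40 + 15 * np.sin(t)).astype(int),
      np.rint(60 + 15 * np.cos(t)).astype(int)] = True

cx, cy, votes = hough_circle_center(edges, radius=15)
print(cx, cy, votes)
```

On real frames the edge map would come from an edge detector, and the radius in pixels is usually not known exactly, so it has to be searched over a range.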

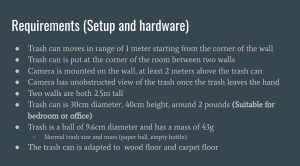

Schedule Update

We are currently on schedule, so no major changes have been made. Zheng switched to traditional CV methods for motion capture and found that the Hough circle transform does not work well, so his schedule has changed.

Ruohai Ge

Accomplishments

Picked the Kalman filter as the algorithm for trajectory prediction after reviewing the related literature and talking with a postdoc under Professor Howie Choset who has extensive experience in CV and motion tracking

He said it would be very hard to track arbitrary items in a dynamic environment. So, as stated above, we decided to use a ball with a diameter of 9.6 cm and a mass of 46 g as our “trash”. This size and mass are representative of ordinary trash, and the ball is easier to track.

Many papers use learning-based methods for trajectory prediction. These methods can give very accurate results across different environments, but they run too slowly for our project.

For traditional trajectory prediction, the Kalman filter is a widely used method. Under linear Gaussian assumptions, the Kalman filter is the optimal minimum mean squared error (MMSE) estimator. It is essentially a recursive weighted sum of the prediction and the observation, with the weights determined by the process and measurement covariances. As a result, it tolerates some sensor noise and produces its estimate very quickly.
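The weighted-sum idea above can be sketched in a few lines of NumPy. This is a minimal example under assumptions that are not from our project: a constant-velocity model in image coordinates, a 30 fps frame interval, and made-up noise values `q` and `r`. The helper names `make_cv_model`, `kf_predict`, and `kf_update` are hypothetical, and this is not OpenCV's implementation.

```python
import numpy as np

def make_cv_model(dt, q=1e-2, r=4.0):
    """Constant-velocity model; state is [x, y, vx, vy], measurement is [x, y]."""
    F = np.array([[1, 0, dt, 0],
                  [0, 1, 0, dt],
                  [0, 0, 1, 0],
                  [0, 0, 0, 1]], dtype=float)
    H = np.array([[1, 0, 0, 0],
                  [0, 1, 0, 0]], dtype=float)
    Q = q * np.eye(4)   # process noise covariance (assumed value)
    R = r * np.eye(2)   # measurement noise covariance (assumed value)
    return F, H, Q, R

def kf_predict(x, P, F, Q):
    """Propagate the state and its covariance one step forward."""
    return F @ x, F @ P @ F.T + Q

def kf_update(x, P, z, H, R):
    """Blend the prediction with measurement z using the Kalman gain."""
    y = z - H @ x                      # innovation
    S = H @ P @ H.T + R                # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)     # gain: the weight given to the measurement
    x_new = x + K @ y
    P_new = (np.eye(len(x)) - K @ H) @ P
    return x_new, P_new

# Simulated ball moving at constant velocity, observed with 2 px noise.
rng = np.random.default_rng(0)
dt = 1.0 / 30.0
F, H, Q, R = make_cv_model(dt)
x, P = np.zeros(4), 1e3 * np.eye(4)    # vague initial guess
true_v = np.array([120.0, -60.0])      # px/s, made up for the demo
for k in range(1, 91):
    truth = true_v * k * dt
    z = truth + rng.normal(0.0, 2.0, size=2)
    x, P = kf_predict(x, P, F, Q)
    x, P = kf_update(x, P, z, H, R)

print("estimated state:", x)
```

The gain `K` is the weight: when the measurement covariance `R` is large relative to the predicted covariance, the filter trusts its prediction more, and when `R` is small it follows the measurement more closely.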

Studied the Kalman filter to understand how it works

https://towardsdatascience.com/kalman-filters-a-step-by-step-implementation-guide-in-python-91e7e123b968

http://ais.informatik.uni-freiburg.de/teaching/ws11/robotics2/pdfs/rob2-19-tracking.pdf

https://statweb.stanford.edu/~candes/acm116/Handouts/Kalman.pdf

Found code examples that could give some insight for our project

https://github.com/NattyBumppo/Ball-Tracking

https://github.com/SriramEmarose/Motion-Prediction-with-Kalman-Filter/blob/master/KalmanFilter.py

Decided to use OpenCV’s Kalman filter library

Studied its documentation and read its official example

https://github.com/opencv/opencv/blob/master/samples/python/kalman.py

Researched a backup plan in case the Kalman filter cannot predict the trajectory

A linear dynamic system (LDS) could also be used for tracking

http://ais.informatik.uni-freiburg.de/teaching/ws11/robotics2/pdfs/rob2-19-tracking.pdf

Found a suitable video that can be used to test trajectory prediction

The ball’s size is similar to our project’s trash size

The ball’s speed and the camera’s view are also the same as in our project

Deliverables for next week

Implement the Kalman filter and be able to predict the trajectory of the tiny basketball in the video mentioned above

Schedule Situation

On Schedule

Finished literature review and decided to use the Kalman filter.

Xingsheng Wang

- Accomplishment

- Reviewed the available IDEs and selected two for the Windows and Mac development environments.

- Read through hundreds of pages of technical documentation for both the STM32F746ZG board and the HC-05 Bluetooth module, and finished the hardware connections between the board and the Bluetooth module.

- Started initial testing of Bluetooth transmission by creating a project that lets the user toggle an LED on the board over Bluetooth. The code is adapted from http://solderer.tv/communication-between-the-stm32-and-android-via-bluetooth/. A few changes are needed to make it work with our board, and the code modifications are in progress.

- Designed the system communication protocol for transmitting position information. The computer will send a string containing the position the trash can needs to reach, and the STM32 board will receive the string and parse it to get the coordinates. Motor movements are calculated on board.

- Searched for a mobile base for the trash can and ordered a suitable one. The base has omniwheels, which simplify the trash can's motion and reduce the time it needs to move into position to catch the trash. https://www.amazon.com/Moebius-Mecanum-Platform-Chassis-Raspberry/dp/B07JK5S67V/ref=sr_1_2?keywords=omniwheel&qid=1567790269&s=gateway&sr=8-2

- Deliverables next week

- A fully working LED controller over Bluetooth, demonstrating that the transmission system works. After that I will proceed to write drivers for the trash can's motors.

- Schedule Situation

- On schedule.
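The position message described in the accomplishments above could look like the following Python sketch. The frame format `P,<x_mm>,<y_mm>` terminated by a newline, the millimetre units, and the function names are assumptions made for illustration. The actual protocol and the on-board parser (which would run on the STM32 rather than in Python) may differ.

```python
def encode_position(x_mm: int, y_mm: int) -> bytes:
    """Build one newline-terminated ASCII frame, e.g. b'P,1250,-340\\n'."""
    return f"P,{x_mm},{y_mm}\n".encode("ascii")

def parse_position(frame: bytes) -> tuple:
    """Mirror of what the receiver would do: validate the frame, return (x_mm, y_mm)."""
    fields = frame.decode("ascii").strip().split(",")
    if len(fields) != 3 or fields[0] != "P":
        raise ValueError(f"malformed position frame: {frame!r}")
    # int() raises ValueError if a coordinate is not a valid integer.
    return int(fields[1]), int(fields[2])

frame = encode_position(1250, -340)
print(frame)                  # b'P,1250,-340\n'
print(parse_position(frame))  # (1250, -340)
```

A leading tag and a newline terminator keep the frame easy to split on a byte stream, and rejecting malformed frames avoids driving the motors from a partially received string.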

Zheng Xu

- Accomplishment

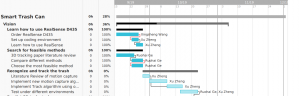

- Set up the working environment for the RealSense D435

- Roughly tested camera capabilities and limitations

- The camera can capture both depth and color frames at 640×480 resolution at 30 fps, which meets our requirements.

- The camera does not measure depth for objects within 40 cm of the lens, which leads to the requirement of hanging the camera at least 2 meters above the ground.

- Moving objects are blurry in the color frame, which could make object detection and tracking harder.

- Decided on the initial design for the visual recognition and tracking modules

- We decided to use the Hough transform as our initial detection module. This algorithm detects circles and runs very fast. https://docs.opencv.org/master/da/d53/tutorial_py_houghcircles.html

- We decided to use the Kanade-Lucas-Tomasi (KLT) tracking algorithm, based on our prior experience with its effectiveness and further research on its speed. http://www.cs.cmu.edu/~16385/s17/Slides/15.1_Tracking__KLT.pdf

- We will not include machine-learning recognition in our MVP, as its runtime speed is not adequate for our purpose.

- Implemented a rudimentary vision program that recognizes the ball and gets its position from the camera.

- The algorithm detects a round object and reports its depth from the camera lens. With a small additional computation, this can be converted into a 3D position with respect to the camera.

- However, we discovered that the Hough transform does not perform well on raw image input. The detected circle's size varies, and the algorithm fails to track the circle while it is moving.

- We plan to apply background removal before object detection to reduce errors and improve consistency.

- Deliverables next week

- Do a literature review on background removal and motion capture in computer vision.

- Implement the method chosen from the literature.

- Schedule Situation

- On schedule
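The conversion from a detected pixel plus its depth to a 3D position relative to the camera, mentioned in the accomplishments above, can be sketched with the standard pinhole camera model. The intrinsics below (`FX`, `FY`, `CX`, `CY`) are placeholder values for a 640×480 stream, not calibrated values from our D435, and lens distortion is ignored. The function name `deproject_pixel` is hypothetical.

```python
def deproject_pixel(u, v, depth_m, fx, fy, cx, cy):
    """Pinhole back-projection: pixel (u, v) at depth Z -> (X, Y, Z) in metres.

    depth_m is the distance along the optical axis, fx/fy are focal lengths
    in pixels, and (cx, cy) is the principal point in pixels.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

# Placeholder intrinsics (assumed, not measured).
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

# A ball detected 150 px right of the image centre at a depth of 2 m.
ball_xyz = deproject_pixel(470, 240, 2.0, FX, FY, CX, CY)
print(ball_xyz)  # (0.5, 0.0, 2.0)
```

In practice the intrinsics would be read from the camera instead of being hard-coded. The RealSense SDK also provides its own deprojection helper, `rs2_deproject_pixel_to_point`, which accounts for the stream's distortion model.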