Celine:

This week I worked on preparing material for our mid-semester demo and was able to test OCR performance on warped vs. unwarped pages. To show why dewarping is a necessary step, below is a comparison of the OCR output for a slightly curved page vs. the output for a relatively flat page.

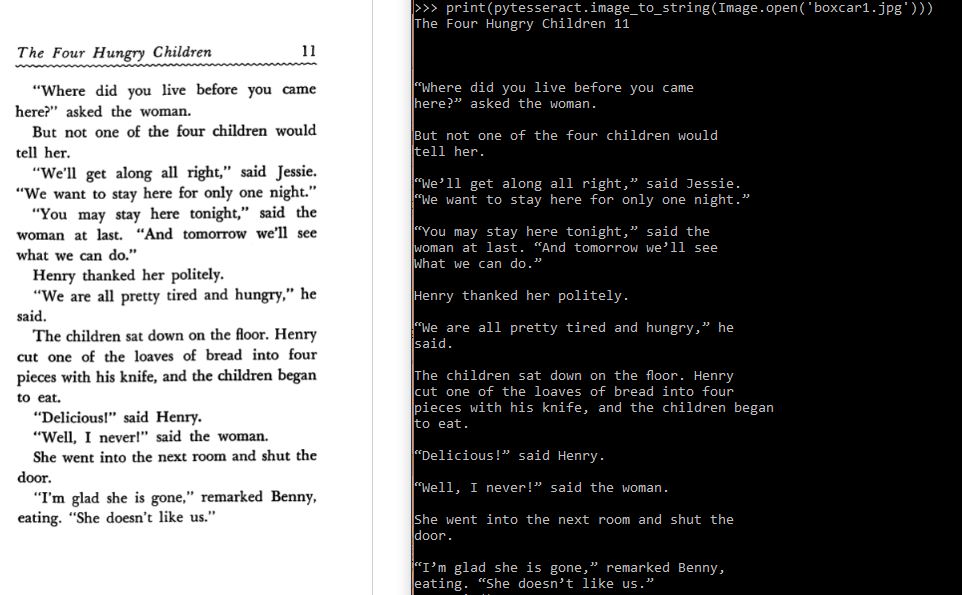

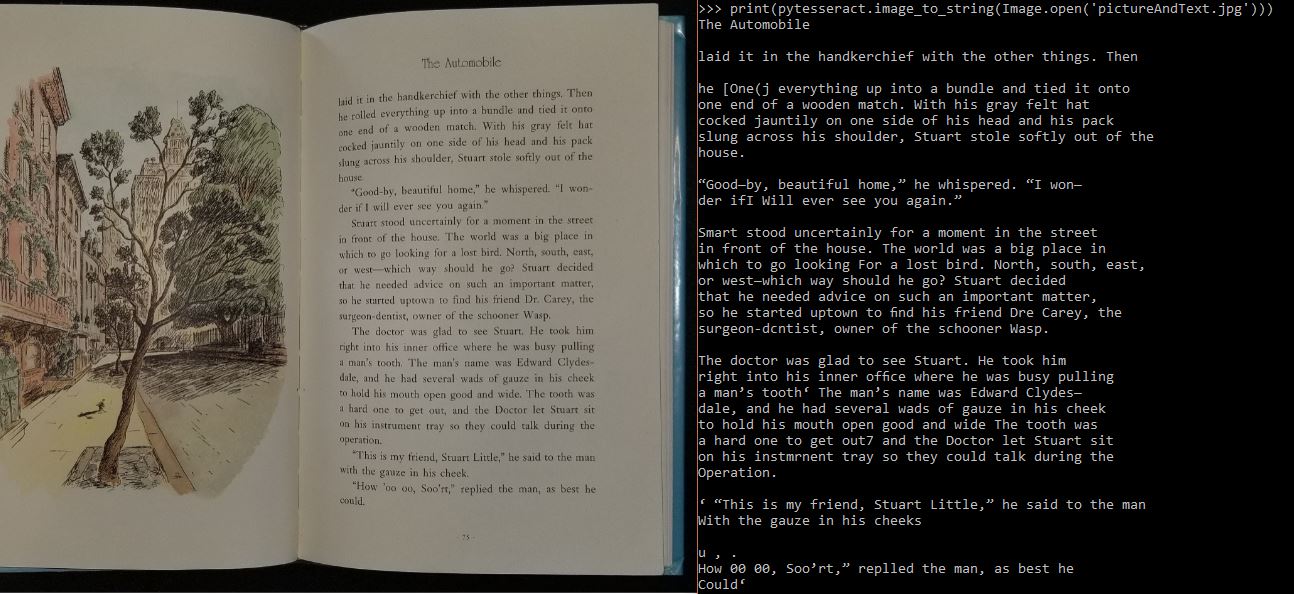

The left column is the output for the curved page, and the right column is the output for the relatively flat page. The source pages look like so:

Thus I believe dewarping is a worthwhile strategy for improving OCR. I was able to test dewarping the left (curved) page using a program I found online, and it produced text like so:

While it still isn’t perfect, dewarping clearly improved the output. I also did some research and found that Python has several autocorrect packages we can use to check the text that Tesseract produces. Finally, I’ve concluded that our device’s structure needs to include some lighting, as lighting greatly improves the program’s ability to threshold and process the pages.
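To make the lighting point concrete: one common way to threshold a page is Otsu’s method, which picks the gray level that best separates the image histogram into a dark (ink) class and a light (paper) class. With even, bright lighting those two classes sit far apart and the split is clean; uneven lighting smears them together. Below is a minimal, dependency-free sketch, assuming the page is already a flat list of 8-bit grayscale values. It is an illustration of the technique, not code from our pipeline; in practice an imaging library would handle loading and thresholding.

```python
def otsu_threshold(pixels):
    """Return the gray level t (0-255) that best splits `pixels` into two classes.

    Pixels <= t form the dark class (ink), pixels > t the light class (paper).
    `pixels` is assumed to be a flat sequence of 8-bit grayscale values.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    weight_dark = 0    # number of pixels at or below t
    sum_dark = 0       # sum of their gray levels
    best_t, best_score = 0, -1.0
    for t in range(256):
        weight_dark += hist[t]
        if weight_dark == 0:
            continue           # no dark pixels yet
        weight_light = total - weight_dark
        if weight_light == 0:
            break              # every pixel is dark; no split left to try
        sum_dark += t * hist[t]
        mean_dark = sum_dark / weight_dark
        mean_light = (sum_all - sum_dark) / weight_light
        # Between-class variance (up to a constant factor): larger means a cleaner split.
        score = weight_dark * weight_light * (mean_dark - mean_light) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def binarize(pixels, t):
    """Map ink (<= t) to black (0) and paper (> t) to white (255)."""
    return [255 if p > t else 0 for p in pixels]


if __name__ == "__main__":
    page = [30] * 100 + [200] * 100   # synthetic: half ink, half paper
    t = otsu_threshold(page)
    print(t, sorted(set(binarize(page, t))))
```

For that synthetic page (ink at gray level 30, paper at 200), `otsu_threshold` returns 30 and `binarize` maps the ink to 0 and the paper to 255. On a real, unevenly lit page the histogram peaks overlap and no single global threshold separates them well, which is the practical argument for adding lighting.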

This coming week I will complete my own dewarping program and start looking at implementing autocorrect!
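As a starting point for the autocorrect step, here is a minimal sketch that uses Python’s built-in `difflib` as a stand-in for a dedicated autocorrect package: each word in Tesseract’s output that is not in a reference vocabulary is replaced by its closest vocabulary word, if one is similar enough. The vocabulary, the function names `autocorrect` and `word_similarity`, and the 0.6 cutoff are illustrative assumptions. `word_similarity` gives a rough way to check whether correction (or dewarping) actually moved the output closer to a hand-typed transcription of the page.

```python
import difflib
import re


def autocorrect(text, vocabulary, cutoff=0.6):
    """Replace each word not in `vocabulary` with its closest vocabulary word.

    Words with no match scoring at least `cutoff` (0-1, difflib's similarity
    ratio) are left untouched rather than guessed. Only ASCII letter runs are
    treated as words; digits and punctuation pass through unchanged.
    """
    vocab = {w.lower() for w in vocabulary}
    candidates = sorted(vocab)

    def fix(match):
        word = match.group(0)
        lower = word.lower()
        if lower in vocab:
            return word
        best = difflib.get_close_matches(lower, candidates, n=1, cutoff=cutoff)
        if not best:
            return word
        # Keep a leading capital from the OCR output (e.g. start of a sentence).
        return best[0].capitalize() if word[0].isupper() else best[0]

    return re.sub(r"[A-Za-z]+", fix, text)


def word_similarity(a, b):
    """Rough word-level similarity (0-1) between two texts, ignoring case."""
    return difflib.SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


if __name__ == "__main__":
    vocab = ["the", "quick", "brown", "fox"]
    truth = "The quick brown fox"
    raw = "Tbe quick hrown fox"          # made-up OCR-style errors
    fixed = autocorrect(raw, vocab)
    print(fixed)
    print(word_similarity(raw, truth), word_similarity(fixed, truth))
```

With that toy vocabulary, `autocorrect("Tbe quick hrown fox", vocab)` returns `"The quick brown fox"`. A real autocorrect package would also weigh how common each word is; this sketch will happily "correct" proper nouns that are missing from the vocabulary, so the cutoff would need tuning on real pages.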

Effie:

This was an exciting week! Last week I set up the Pi (formatting the SD card, installing Raspbian, and registering the Pi on CMU Wi-Fi), picked up some books from the library to try out, and worked with Indu on talking through the design for the stand and wheel-arms she’ll be building (and went on adventures to find scrap wood!). This week I had fun getting things moving!

As more parts came in, I soldered the pins onto the motor HAT and connected it to the Pi to drive a stepper motor (for the conveyor belt) and the “teensy” DC motor (for the wheel). I also found some drivers online and tweaked them to operate a servo. I can now drive and control the two motors, the servo, and the camera, each independently. We might need to buy a separate HAT for the servos, since I’m not sure they can run on the same pins the motors use; I hope I’ll know by next week.

Last week we connected all the camera extension parts, only to find that our 8MP camera wasn’t working because the connector soldering was faulty, but thankfully Sam was able to fix it! So now we are able to get great pictures! I am working on a script to automatically take and save pictures at predetermined time intervals (to then send off to Celine’s code for conversion to text). This week I also met with Greg Armstrong in the Robotics Institute, who gave me valuable advice on how we might operate our wheel-arms.
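A minimal sketch of what the timed-capture loop could look like, assuming the actual camera call is passed in as a function so the loop can be tested without the Pi. The `capture_series` name, the file-naming scheme, and the injectable `sleep` are illustrative choices, not the script I’m currently writing.

```python
import os
import time
from datetime import datetime


def capture_series(capture, out_dir, interval_s, count, sleep=time.sleep):
    """Take `count` pictures `interval_s` seconds apart and return their paths.

    `capture` is any function that saves one image to the path it is given;
    on the Pi it would wrap the real camera call (an assumption here).
    `sleep` is injectable so the loop can be tested without waiting.
    """
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for i in range(count):
        # Index first so files sort in capture order; timestamp for reference.
        stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
        path = os.path.join(out_dir, f"page_{i:04d}_{stamp}.jpg")
        capture(path)
        paths.append(path)
        if i < count - 1:
            sleep(interval_s)  # fixed gap after each shot (drifts by capture time)
    return paths


if __name__ == "__main__":
    def fake_capture(path):
        # Stand-in for the camera: writes an empty placeholder file.
        open(path, "wb").close()

    for p in capture_series(fake_capture, "scans_demo", interval_s=1, count=3):
        print(p)
```

On the Pi, `capture` could simply be the camera object’s own save method; for example, if we end up using the legacy `picamera` library, something like `capture_series(PiCamera().capture, "scans", 5, 20)` (untested on our setup). Because the loop sleeps a fixed interval after each shot, the schedule drifts by however long each capture takes; scheduling against `time.monotonic()` would avoid that if exact spacing turns out to matter.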

So next week I want to work on a few things: integrating my code so it can talk to several of the components at once, working with Indu on physically connecting components for a prototype of the arm she is designing, and attempting to motorize the camera we bought so it can zoom/pan programmatically. I fear that last one won’t be possible (and might not be necessary anyway), but it would be cool if I could!
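One simple way to have the code talk to several components at once is to describe each page as a fixed sequence of steps, each with an optional settle time so the camera only fires after motion has stopped. The sketch below assumes each component is already wrapped in a no-argument Python function; `ScanStep`, `run_page`, `scan_book`, and the step names in the demo are hypothetical placeholders, not our final page-turning order.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ScanStep:
    name: str
    action: Callable[[], None]   # wraps one component (motor, servo, camera, ...)
    settle_s: float = 0.0        # pause afterwards so motion stops before the next step


def run_page(steps: List[ScanStep], sleep=time.sleep, log=print) -> None:
    """Run one page's steps strictly in order."""
    for step in steps:
        log(f"  {step.name}")
        step.action()
        if step.settle_s > 0:
            sleep(step.settle_s)


def scan_book(n_pages: int, steps: List[ScanStep], sleep=time.sleep, log=print) -> None:
    """Repeat the same step sequence once per page."""
    for page in range(n_pages):
        log(f"page {page + 1}/{n_pages}")
        run_page(steps, sleep=sleep, log=log)


if __name__ == "__main__":
    # Hypothetical placeholders; the real functions would drive the hardware.
    steps = [
        ScanStep("move wheel (DC motor)", lambda: None, settle_s=0.5),
        ScanStep("move conveyor belt (stepper)", lambda: None, settle_s=0.5),
        ScanStep("capture image (camera)", lambda: None),
    ]
    scan_book(2, steps)
```

Running steps strictly one after another is slower than overlapping them, but it keeps timing predictable and makes it obvious which step failed, which seems like the right trade-off for a first integrated prototype.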

Indu:

This week I primarily worked on building out the page-turning device. I spent a few hours with a mechanical engineering student, Krishna Dave, talking through my drawings of the design for the entire device, to make sure I had thought of everything and had it mapped out well. From there I started constructing the gear-driven turning part. I went to the Maker Space and talked with an employee for a while about the best way to mount the motor hub into the gear (the hub’s diameter is larger than the gear’s). Since I do not have machine shop training, I left those parts with the employee so he can take care of the mounting when possible.

I was originally planning on building the stand as well, but my mechanical engineering friend suggested that, at least for now, I mount the camera on a tripod instead of building another part of the system, since a tripod is height-adjustable. Following this advice, I went to Hunt Library, looked through their assortment of tripods, and found one that may work for the design. I haven’t tested it with the camera yet, since I first need to mount the camera on a piece of wood before it can go on the tripod; I’ll do that this weekend.
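Height adjustability matters because the camera’s height sets how much of the book it can see. Under a simple pinhole-camera assumption, a camera with field of view θ along one axis covers a width W at height h when W = 2h·tan(θ/2), so the minimum height is h = W / (2·tan(θ/2)). The sketch below computes that; the book width, the lens field of view, and the 10% `margin` are placeholders to replace with our measured values and the camera’s datasheet figure.

```python
import math


def min_camera_height(target_width, fov_deg, margin=1.1):
    """Distance above the page at which a camera just frames `target_width`.

    Uses the pinhole-camera relation  width = 2 * h * tan(fov / 2), solved for h,
    where `fov_deg` is the field of view along the same axis as `target_width`.
    `margin` > 1 leaves some border around the page. Lens distortion is ignored.
    The result is in the same units as `target_width`.
    """
    if not 0 < fov_deg < 180:
        raise ValueError("fov_deg must be between 0 and 180")
    half_angle = math.radians(fov_deg) / 2
    return margin * target_width / (2 * math.tan(half_angle))


if __name__ == "__main__":
    # Hypothetical numbers: a ~30 cm open-book spread and a ~62 degree lens.
    print(f"{min_camera_height(30, 62):.1f} cm")
```

The same check should be repeated with the other axis (page height against the vertical field of view), and the larger of the two heights is the one the tripod needs to reach.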

I plan to continue building the device. The wheel part of the page-separation device still needs more thought: after talking with my mechanical engineering friend, it seems we should rely less on gravity to make the wheel work.