Everyone:

Please see this link for a demo: https://www.youtube.com/watch?v=weT1ZYr_ntI&feature=youtu.be

Celine:

This week was a very busy one for all of us! I finally finished the OpenCV installation on the Pi, but it had to be done in a virtualenv because of the conflicting Python 2 and Python 3 installations on the Pi. In the coming week or so I can try to get it working outside of the virtualenv, as I’ve read online that virtual environments can slow down processes. Nonetheless, this setup worked for our purpose of demonstrating our working page flipping and reading system.

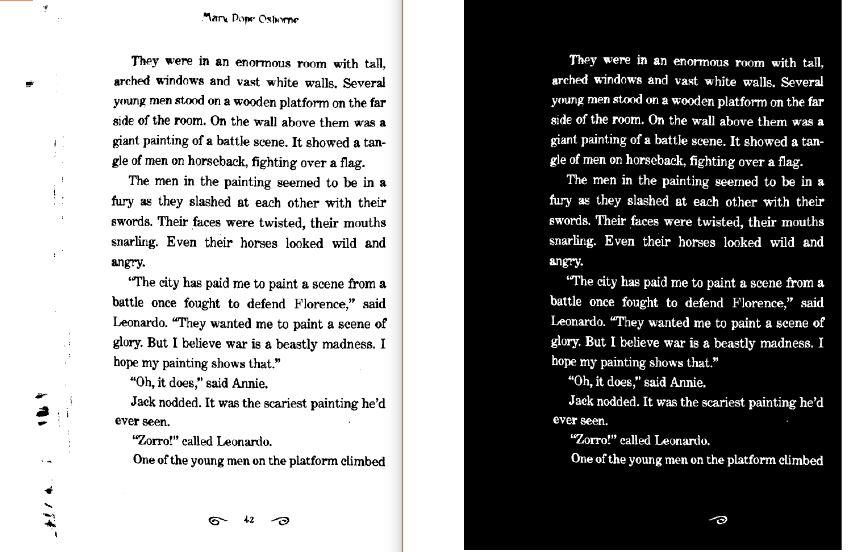

For my individual work, I implemented the header removal that I mentioned in my last post. I run a connected components analysis, which gives every contiguous region of foreground pixels (intensity 1) its own label number, and then remove everything except the largest connected component, which is always the text. I know this holds for this book series because the book's pictures do not cut the text into pieces, and I remove the images before running any dewarping or processing in the keepText function.
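To make the idea concrete, here is a minimal sketch of "keep only the largest connected component". It is not the project's actual code: it uses plain Python lists and a breadth-first search instead of OpenCV, it assumes 4-connectivity, and the function name `keep_largest_component` is made up for illustration.

```python
from collections import deque


def keep_largest_component(binary):
    """Keep only the largest 4-connected region of 1s in a 0/1 grid.

    `binary` is a list of equal-length rows containing 0 (background)
    or 1 (foreground). A new grid is returned; the input is not modified.
    """
    rows = len(binary)
    cols = len(binary[0]) if rows else 0
    labels = [[0] * cols for _ in range(rows)]  # 0 means "not labeled yet"
    sizes = {}                                  # label -> pixel count
    next_label = 1

    for r in range(rows):
        for c in range(cols):
            if binary[r][c] != 1 or labels[r][c] != 0:
                continue
            # Flood-fill a new component starting from this pixel.
            labels[r][c] = next_label
            queue = deque([(r, c)])
            size = 0
            while queue:
                y, x = queue.popleft()
                size += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and binary[ny][nx] == 1 and labels[ny][nx] == 0):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            sizes[next_label] = size
            next_label += 1

    if not sizes:  # no foreground pixels at all
        return [row[:] for row in binary]

    largest = max(sizes, key=sizes.get)
    return [[1 if labels[r][c] == largest else 0 for c in range(cols)]
            for r in range(rows)]
```

On a toy page where a two-pixel "header" sits above a six-pixel "text block", only the text block survives. On real images, a library routine such as OpenCV's connected components functions would be much faster than this pure-Python loop.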

I fixed the issue where the script would hang indefinitely while running. It turned out to be a stdout flushing problem: after adding flush=True as an argument to my print statements, the script no longer hangs.
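For reference, a small sketch of what that change looks like. The `log` helper is a hypothetical wrapper, not something from our script; the only real piece is the `flush=True` keyword of Python 3's built-in `print`.

```python
import sys


def log(message, stream=None):
    """Print a message and flush immediately so it is never left in a buffer."""
    if stream is None:
        stream = sys.stdout
    # flush=True forces the text out right away, which matters when another
    # process is reading this script's output through a pipe.
    print(message, file=stream, flush=True)


log("dewarping page...")
```

When stdout is a pipe rather than a terminal, Python buffers output in blocks, so a process waiting on the other end may see nothing for a long time unless the writer flushes.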

I’ve also begun adding debugging and timing statements to my code so that I can work on optimizing the parts that take the longest to run. I know that the most time-consuming part of the code right now is the optimization of projection parameters in the dewarping phase of the image processing. The entire process lasts at most two minutes, which happens when running on a two-page spread full of text, and the dewarping takes up around 80 to 100 of those 120 seconds.
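One lightweight way to collect per-stage timings like these is a small context manager. This is only a sketch under my own naming: `timed`, `timings`, and the "dewarp" stage label are illustrative, and the loop inside the `with` block is a stand-in for the real work.

```python
import time
from contextlib import contextmanager

timings = {}  # stage name -> elapsed seconds


@contextmanager
def timed(stage):
    """Record how long the enclosed block takes and print it."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = time.perf_counter() - start
        print(f"[timing] {stage}: {timings[stage]:.2f} s", flush=True)


# Stand-in workload; in the real script this would wrap the dewarping call.
with timed("dewarp"):
    total = sum(i * i for i in range(10000))
```

Wrapping each phase in its own `with timed(...)` block makes it easy to see which stage dominates the run time before deciding what to optimize.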

Because the OCR script runs slowly on the Pi, there are two solutions I can implement: have the Pi ssh into my laptop and run the code there, or push the input image to AWS S3 and either trigger an AWS Lambda function that runs the OCR code or have the Pi ssh into an AWS EC2 instance and run the code there.

For the next few weeks I will be focusing on optimization of the OCR and enabling ssh on my laptop. As a team we will discuss whether or not we want to move our processing to the cloud by weighing the additional benefits running in the cloud might bring.

Effie:

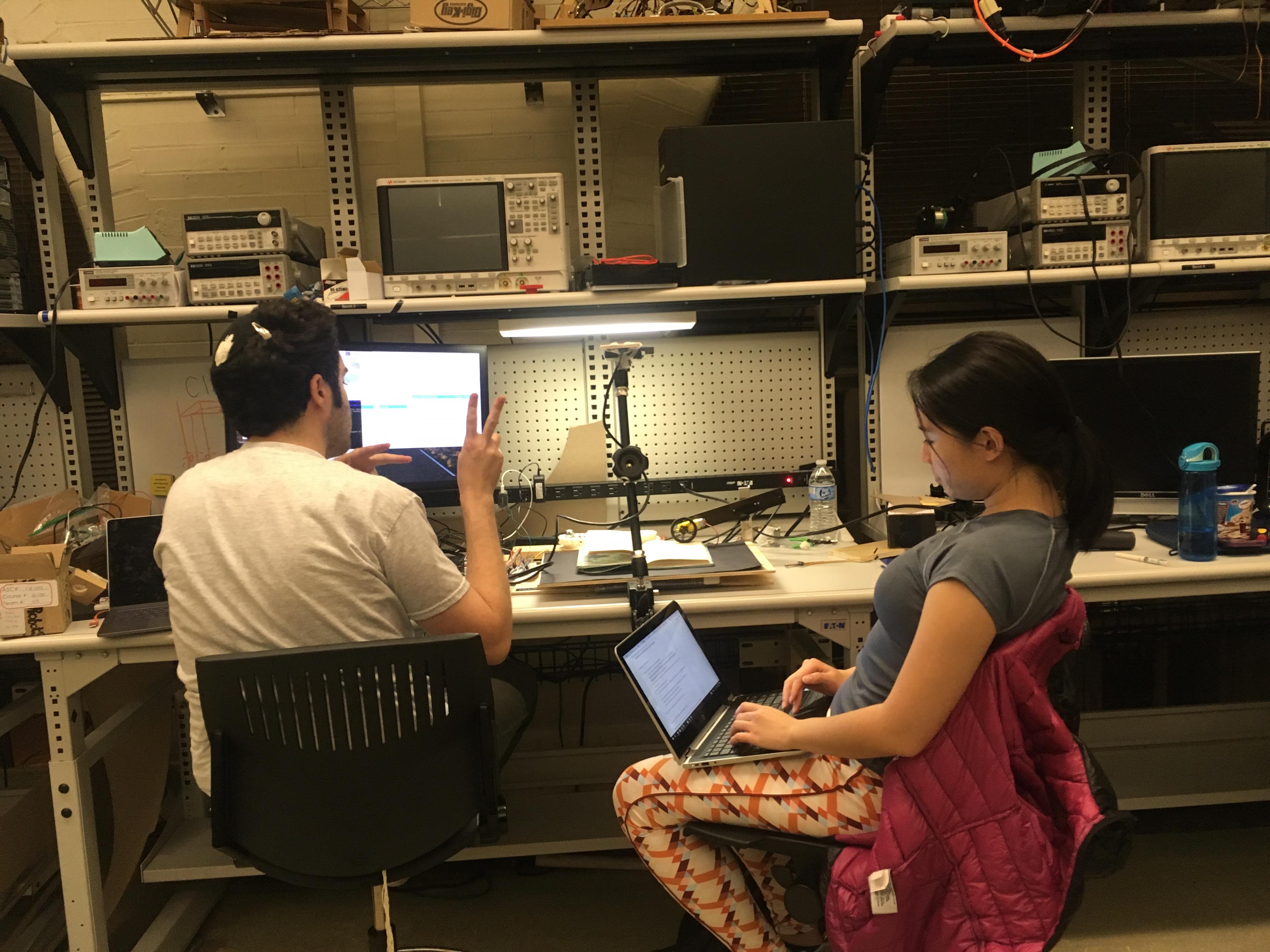

I spent most of this week integrating all the moving components and helping my partners bring everything together for the demo. In particular, after resolving several path and dependency issues, I had to rewrite a good chunk of my code from last week to work properly with the text-detection script Celine worked on. The new version captures images, spawns a thread that blocks while waiting for a text file to be created from each image, and spawns another thread to output the audio, all while still running the page flipping. I wrote a basic version that worked for the demo, but I still have some threading issues and problems working with Espeak (our current choice of text-to-audio library that is, well, temperamental) to hammer out this week. As the week progresses, I aim to make my code much cleaner and simpler (since I quickly scraped together the current version to showcase for the demo), and I will look more into other text-to-speech libraries since Espeak sounds funny.
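To illustrate the "thread that waits for a text file" pattern, here is a simplified sketch. It is not the code that ran in the demo: the names `wait_for_file` and `read_page_async`, the polling interval, and the `speak` callback (which would wrap whatever text-to-speech engine is in use) are all assumptions made for the example.

```python
import os
import threading
import time


def wait_for_file(path, timeout=5.0, poll_interval=0.05):
    """Poll until `path` exists, then return its contents (None on timeout).

    Assumes the writer creates the file atomically (for example by writing
    to a temporary name and renaming it), so a visible file is complete.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if os.path.exists(path):
            with open(path) as f:
                return f.read()
        time.sleep(poll_interval)
    return None


def read_page_async(text_path, speak, timeout=5.0):
    """Start a background thread that waits for the OCR text and speaks it."""
    def worker():
        text = wait_for_file(text_path, timeout)
        if text is not None:
            speak(text)

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread  # the caller can keep flipping pages, or join() later
```

The timeout keeps a missing OCR result from blocking the worker forever, and returning the thread lets the main loop decide whether to wait for the audio or carry on with the next page.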

Indu:

On Monday, with the help of the employees at the Maker Space, I finished building the wheel pivot device that separates pages. Effie and I began testing the device that same day, but the servo motor wasn’t working with the Raspberry Pi. We all tried again on Tuesday, but it still did not work, so we are waiting for the Pi hat to arrive so that we can use it to drive the motor, which will hopefully get the motor working on the device. Currently the device can be moved up and down by hand, so it should work with the motor: I looked up the motor’s torque and confirmed with a Maker Space employee that the torque rating should be sufficient for the dimensions I calculated for the device.

On Tuesday we also worked on our timeline for the rest of the semester. I will be spending the rest of the semester building out all the parts for the final demo, such as another wheel pivot device for the other side of the book (to flip pages backwards), a height-adjustable stand, and a simpler page turning mechanism, since the conveyor chain mechanism appears to be inconsistent.

Thus, after the demo I went with Effie to get acrylic pieces at the IDEATE work space in Hunt Library. Later on in the week, Celine assisted me in the Maker Space and took more videos of me working, such as me cutting the acrylic.

Please see this video: https://www.youtube.com/watch?v=jl1ilr-w4_A&feature=youtu.be